Robotic Sensing and Stimuli Provision for Guided Plant Growth

Summary

Distributed robot nodes provide sequences of blue light stimuli to steer the growth trajectories of climbing plants. By activating natural phototropism, the robots guide the plants through binary left-right decisions, growing them into predefined patterns that do not emerge when the robots are dormant.

Abstract

Robot systems are actively researched for manipulation of natural plants, typically restricted to agricultural automation activities such as harvest, irrigation, and mechanical weed control. Extending this research, we introduce here a novel methodology to manipulate the directional growth of plants via their natural mechanisms for signaling and hormone distribution. An effective methodology of robotic stimuli provision can open up possibilities for new experimentation with later developmental phases in plants, or for new biotechnology applications such as shaping plants for green walls. Interaction with plants presents several robotic challenges, including short-range sensing of small and variable plant organs, and the controlled actuation of plant responses that are impacted by the environment in addition to the provided stimuli. In order to steer plant growth, we develop a group of immobile robots with sensors to detect the proximity of growing tips, and with diodes to provide light stimuli that actuate phototropism. The robots are tested with the climbing common bean, Phaseolus vulgaris, in experiments having durations up to five weeks in a controlled environment. With robots sequentially emitting blue light (peak emission at wavelength 465 nm), plant growth is successfully steered through successive binary decisions along mechanical supports to reach target positions. Growth patterns are tested in a setup up to 180 cm in height, with plant stems grown up to roughly 250 cm in cumulative length over a period of approximately seven weeks. The robots coordinate themselves and operate fully autonomously. They detect approaching plant tips by infrared proximity sensors and communicate via radio to switch between blue light stimuli and dormant status, as required.
Overall, the obtained results support the effectiveness of combining robot and plant experiment methodologies, for the study of potentially complex interactions between natural and engineered autonomous systems.

Introduction

Congruent with the increasing prevalence of automation in manufacturing and production, robots are being utilized to sow, treat, and harvest plants1,2,3,4,5. We use robot technology to automate plant experiments in a non-invasive manner, with the purpose of steering growth via directional responses to stimuli. Traditional gardening practices have included the manual shaping of trees and bushes by mechanical restraint and cutting. We present a methodology that can for instance be applied to this shaping task, by steering growth patterns with stimuli. Our presented methodology is also a step towards automated plant experiments, here with a specific focus on providing light stimuli. Once the technology has become robust and reliable, this approach has potential to reduce costs in plant experiments and to allow for new automated experiments that would otherwise be infeasible due to overhead in time and manual labor. The robotic elements are freely programmable and act autonomously as they are equipped with sensors, actuators for stimuli provision, and microprocessors. While we focus here on proximity sensing (i.e., measuring distances at close-range) and light stimuli, many other options are feasible. For example, sensors can be used to analyze plant color, to monitor biochemical activity6, or for phytosensing7 approaches to monitor for instance environmental conditions through plant electrophysiology8. Similarly, actuator options might provide other types of stimuli9, through vibration motors, spraying devices, heaters, fans, shading devices, or manipulators for directed physical contact. Additional actuation strategies could be implemented to provide slow mobility to the robots (i.e., 'slow bots'10), such that they could gradually change the position and direction from which they provide stimuli. 
Furthermore, as the robots are equipped with single-board computers, they could run more sophisticated processes such as computer vision for plant phenotyping11 or artificial neural network controllers for stimuli actuation12. As the plant science research focus is often on early growth (i.e., in shoots)13, the whole domain of using autonomous robot systems to influence plants over longer periods seems underexplored and may offer many future opportunities. Going even one step further, the robotic elements can be seen as objects of research themselves, allowing the study of the complex dynamics of bio-hybrid systems formed by robots and plants closely interacting. The robots selectively impose stimuli on the plants, the plants react according to their adaptive behavior and change their growth pattern, which is subsequently detected by the robots via their sensors. Our approach closes the behavioral feedback loop between the plants and the robots and creates a homeostatic control loop.

In our experiments to test the function of the robot system, we exclusively use the climbing common bean, Phaseolus vulgaris. The plants climb mechanical supports arranged in a gridded scaffold of overall height 180 cm, such that they are influenced by thigmotropism and have a limited set of growth directions to choose from. Given that we want to shape the whole plant over a period of weeks, we use blue light stimuli to influence the plant's phototropism macroscopically, over different growth periods including young shoots and later stem stiffening. We conduct the experiments in fully controlled ambient light conditions where, other than the blue light stimuli, we provide exclusively red light, with peak emission at wavelength 650 nm. When the plants reach a bifurcation in the mechanical support grid, they make a binary decision whether to grow left or right. The robots are positioned at these mechanical bifurcations, separated by distances of 40 cm. They autonomously activate and deactivate their blue light emittance, with peak emission at wavelength 465 nm, according to a predefined map of the desired growth pattern (in this case, a zigzag pattern). In this way, the plants are guided from bifurcation to bifurcation in a defined sequence. Only one robot is active at a given time; while active, it emits blue light and autonomously monitors plant growth on the mechanical support beneath it. Once it detects a growing tip using its infrared proximity sensors, it stops emitting blue light and communicates to its neighboring robots via radio. The robot that determines itself to be the next target in the sequence then activates, attracting plant growth toward a new mechanical bifurcation.

As our approach incorporates both engineered and natural mechanisms, our experiments include several methods that operate simultaneously and interdependently. The protocol here is first organized according to the type of method, each of which must be integrated into a unified experiment setup. These types are plant species selection; robot design including hardware and mechanics; robot software for communication and control; and the monitoring and maintenance of plant health. The protocol then proceeds with the experiment design, followed by data collection and recording. For full details of results obtained so far, see Wahby et al.14. Representative results cover three types of experiments: control experiments where no robot provides stimuli (i.e., all robots are dormant); single-decision experiments where the plant makes a binary choice between one stimuli-providing robot and one that is dormant; and multiple-decision experiments where the plant navigates a sequence of binary choices to grow a predefined pattern.

Protocol

1. Plant species selection procedure

NOTE: This protocol focuses on the plant behaviors related to climbing, directional responses to light, and the health and survival of the plants in the specific season, location, and experimental conditions.

- Select a plant species known to display strong positive phototropism15,16 towards UV-A and blue light (340–500 nm) in the growing tips.

- Select a species that is a winder, in which the circumnutation17 behavior is pronounced and the growing tip has helical trajectories with large enough amplitude to wind around the mechanical supports used in the specific experimental conditions. The twining18 behavior exhibited by the selected winder should tolerate the environment and nutrient conditions present in the experiment and should tolerate mechanical supports with angle of inclination up to 45°.

- Select a species that will grow reliably and quickly in the experimental conditions, with an average growth speed not less than approximately 5 cm per day, and preferably faster if possible.

- Select a species that will display the required behaviors in the present season and geographic location.

- Ensure the species tolerates the range of environmental parameters that will be present in the experimental setup. The plant should tolerate an absence of green light and an absence of light outside the visible spectrum (400–700 nm). The plant should also tolerate any present fluctuations in temperature, kept at approximately 27 °C, as well as any present fluctuations in humidity and watering.

2. Robot conditions and design

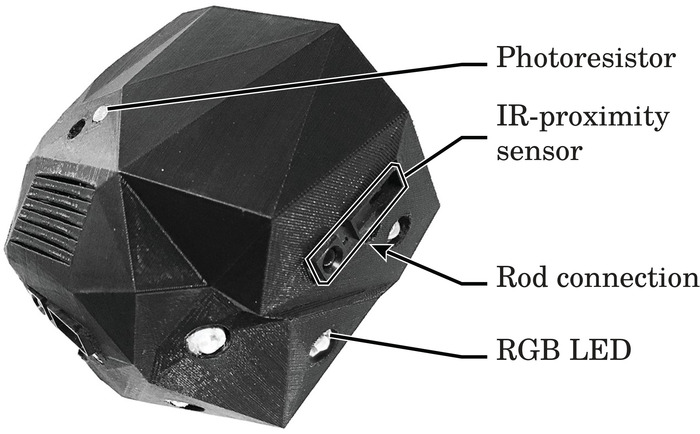

- Organize robot capabilities into decentralized nodes with single-board computers (see Figure 1 and Figure 2), integrated into modular mechanical supports. Ensure each identical robot node is able to control and execute its own behavior.

- For robotic provision of stimuli to plants, provide blue light (400–500 nm) to plants at controllable intervals, at an intensity that will trigger their phototropic response, from the direction and orientation required for the respective portion of the experiment.

- Select a red-green-blue (RGB) light-emitting diode (LED) or an isolated blue LED. In either case, include an LED with a blue diode with peak emission λmax = 465 nm.

- Select an LED that, when grouped and mounted in the precise conditions of the utilized robot, can maintain the required light output in each direction tested in the experiment setup. For each direction being tested, ensure that the blue diodes of the LEDs in a single robot can collectively maintain a luminous flux of approximately 30 lumens without overheating, when situated in the utilized robot enclosure with any utilized heat dissipation strategies. The selected LED should have a viewing angle of approximately 120°.

NOTE: For example, in a robot utilizing three LEDs per direction, with microcontroller-enabled regulation of intensity, if the blue diodes each emit a maximum luminous flux of Φ = 15 lumens, then without overheating they should be able to maintain approximately 67% of the maximum (i.e., 30 of the available 45 lumens).

- Interface the LEDs to the robot’s single-board computer, via LED drivers that regulate the power supply according to the required brightness. Enable individual control, either of each LED or of the LED groups serving each direction being tested in the setup.
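The per-direction arithmetic in the NOTE can be checked for any candidate LED with a small helper. This is a sketch that assumes luminous flux scales linearly with PWM duty cycle, which is only an approximation for real LEDs; `required_duty_fraction` is a hypothetical name.

```python
def required_duty_fraction(target_lumens: float,
                           leds_per_direction: int,
                           max_lumens_per_led: float) -> float:
    """Fraction of each blue diode's maximum output needed so that one
    direction receives `target_lumens` in total.

    Assumes flux scales linearly with PWM duty cycle (an approximation).
    """
    if leds_per_direction <= 0 or max_lumens_per_led <= 0:
        raise ValueError("LED count and per-LED flux must be positive")
    available = leds_per_direction * max_lumens_per_led
    if target_lumens > available:
        raise ValueError(
            f"target of {target_lumens} lm exceeds the {available} lm available")
    return target_lumens / available


# Three LEDs per direction, 15 lm maximum each, ~30 lm target per direction.
print(f"{required_duty_fraction(30, 3, 15):.0%}")  # 67%
```

For these example numbers the diodes must sustain two-thirds of their maximum output continuously, which is the operating point to test for overheating inside the enclosure.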

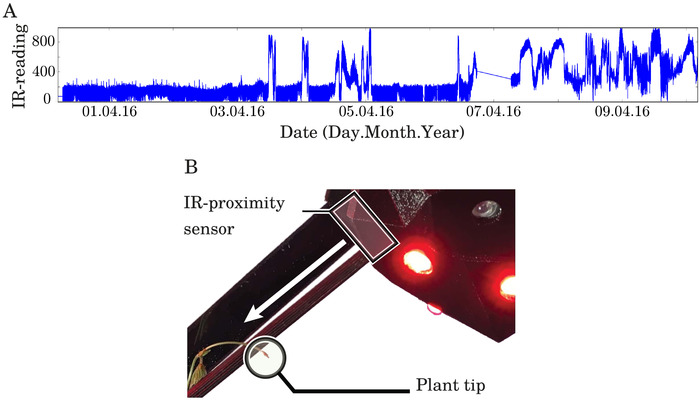

- For the sensing procedure for the proximity of plant growing tips (see Figure 3B), use processed readings from infrared proximity (IR-proximity) sensors to reliably and autonomously detect the presence of plants approaching from each direction tested in the setup.

- Select an IR-proximity sensor that regularly detects the growing tip of the selected plant species, when arranged perpendicularly to the central axis of the direction from which the plant approaches, as tested in an unobstructed environment. Ensure successful detection occurs starting from a distance of 5 cm, as seen in Figure 3A starting at the timestamp labelled ‘03.04.16’ on the horizontal axis.

- Interface each IR-proximity sensor to the robot’s single-board computer, and implement a weighted arithmetic mean to process the sensor readings into a determination of whether a plant is present within 5 cm. Weight the readings from the most recent 5 s so that they contribute 20% of the final average used for detection.

- Ensure the selected IR-proximity sensor does not emit critical wavelengths that could interfere with the light-driven behaviors of the selected species. Ensure that wavelengths emitted by the sensor below 800 nm are not present at distances greater than 5 mm from the sensor’s IR source, as measured by spectrometer.
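The weighted-mean detection can be sketched as below. Only the 5 s recent window, its 20% weight, and the 5 cm detection distance come from the protocol; the length of the older history window (`history_s`), the `threshold` value, the 0..1 scaling of readings, and the class name `TipDetector` are hypothetical and would need calibration against the actual sensor.

```python
from collections import deque
from statistics import fmean


class TipDetector:
    """Weighted-mean plant-tip detector for one IR-proximity sensor (sketch).

    Readings from the last `recent_s` seconds contribute RECENT_WEIGHT of the
    final average; older readings within `history_s` contribute the rest.
    `threshold` is hypothetical: calibrate it to a tip at about 5 cm.
    """

    RECENT_WEIGHT = 0.2

    def __init__(self, threshold: float, recent_s: float = 5.0,
                 history_s: float = 30.0):
        self.threshold = threshold
        self.recent_s = recent_s
        self.history_s = history_s
        self.samples = deque()  # (timestamp in s, scaled reading 0..1)

    def add(self, t: float, value: float) -> None:
        self.samples.append((t, value))
        # Drop samples that have fallen out of the full history window.
        while self.samples and t - self.samples[0][0] > self.history_s:
            self.samples.popleft()

    def weighted_mean(self, now: float) -> float:
        recent = [v for ts, v in self.samples if now - ts <= self.recent_s]
        older = [v for ts, v in self.samples if now - ts > self.recent_s]
        if not recent and not older:
            return 0.0
        if not older:  # not enough history yet: use the recent readings only
            return fmean(recent)
        if not recent:
            return fmean(older)
        w = self.RECENT_WEIGHT
        return w * fmean(recent) + (1 - w) * fmean(older)

    def plant_present(self, now: float) -> bool:
        return self.weighted_mean(now) >= self.threshold


detector = TipDetector(threshold=0.5)
for t in range(30):            # 30 s of background readings at 1 Hz
    detector.add(t, 0.1)
for t in range(30, 36):        # a brief spike, e.g. a leaf swaying past
    detector.add(t, 0.9)
print(detector.plant_present(35))  # False: history outweighs the spike
for t in range(36, 61):        # the reading stays high for another 25 s
    detector.add(t, 0.9)
print(detector.plant_present(60))  # True
```

One reading of the 20% weighting, not stated in the protocol, is that it damps short spikes at the cost of a delay of some seconds before a tip that has settled in front of the sensor is reported.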

- Distribute the experiment functions over the set of robots, such that each robot can autonomously manage the portions that proceed in its own local area. Arrange the robots’ provision of light stimuli and sensing capabilities according to the respective plant growth directions being tested.

- Compose each robot around a single-board computer with Wireless Local Area Network (WLAN) capability. Interface the computer to sensors and actuators via a custom printed circuit board (PCB). Power each robot individually, with its own battery backup.

- Include one IR-proximity sensor per direction being tested for approaching plants, according to the above requirements.

- Include enough LEDs to deliver the above blue light requirements, per direction being tested for approaching plants.

- If using RGB LEDs rather than blue LEDs, optionally enable emittance from the red diode when the blue diode is not in use, to augment the red light delivery described below (for plant health via the support of photosynthesis).

- If red light is emitted from the robots at certain intervals, then use red diodes with peak emission at approximately λmax = 625–650 nm, with no critical wavelengths overlapping the green band (i.e., below 550 nm) or the far-red band (i.e., above 700 nm).

- Do not allow red diodes to produce heat levels higher than those of the blue diodes.

- Include hardware that enables local cues between robots. Include a photoresistor (i.e., light-dependent resistor or LDR) for each direction of a neighboring robot to monitor their light emittance status. Alternatively, communicate the status of local neighbors via WLAN.

- Include hardware to dissipate heat, as required by the conditions of the selected blue diodes and the utilized robot enclosure. Implement this with a combination of aluminum heatsinks, vents in the robot’s case enclosure, and fans. Switch the fans on and off according to readings from a digital temperature sensor on the single-board computer or supplemental PCB.
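Temperature-triggered fan switching could look like the following sketch, assuming the single-board computer is a Raspberry Pi running Raspbian, where the SoC temperature is exposed through sysfs in millidegrees Celsius. The thresholds `FAN_ON_C` and `FAN_OFF_C` are hypothetical; the returned boolean would drive the GPIO pin that enables the fan's regulator.

```python
from pathlib import Path

# SoC temperature on Raspbian, in millidegrees Celsius. A separate sensor on
# the supplemental PCB (closer to the LEDs) would need its own driver.
SOC_TEMP_PATH = Path("/sys/class/thermal/thermal_zone0/temp")

FAN_ON_C = 60.0   # hypothetical switch-on temperature
FAN_OFF_C = 50.0  # hypothetical switch-off temperature (hysteresis band)


def read_soc_temp_c(path: Path = SOC_TEMP_PATH) -> float:
    """Read a sysfs thermal-zone file and convert it to °C."""
    return int(path.read_text().strip()) / 1000.0


def fan_should_run(temp_c: float, fan_on: bool,
                   on_c: float = FAN_ON_C, off_c: float = FAN_OFF_C) -> bool:
    """Switch on at or above on_c, off at or below off_c, otherwise keep the
    current state so the fan does not chatter around a single threshold."""
    if temp_c >= on_c:
        return True
    if temp_c <= off_c:
        return False
    return fan_on


# Simulated trace: the fan turns on at 61 °C and stays on until 49 °C.
fan = False
for temp in (45, 55, 61, 57, 52, 49):
    fan = fan_should_run(temp, fan)
    print(temp, fan)
```

The hysteresis band is a design choice: a single threshold would toggle the fan repeatedly while the temperature hovers near it.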

- Organize the robot components such that the relevant directions are uniformly serviced.

- Position the blue diodes to distribute an equivalent light intensity to each of the directions from which plants may approach (i.e., from the mechanical supports attached to the robot’s lower half, see step 2.5). Orient each diode in the robot case such that the center axis of its lens angle is within 60° of each axis of mechanical support it services, and position it to not be blocked by the robot case.

- Position the IR-proximity sensors equivalently for their respective approaching growth directions (i.e., from the mechanical supports attached to the robot’s lower half, see step 2.5). Position each IR-proximity sensor within 1 cm of the attachment point between the robot and the mechanical support being serviced, and orient it such that its viewing angle is parallel to the support axis. Ensure its emitter and receiver are not blocked by the robot case.

- Position any photoresistors for local communication equivalently for each direction facing a neighboring robot in the setup (i.e., from all mechanical supports attached to the robot, see 2.5). Orient each photoresistor such that the center axis of its viewing angle is within 45° of the support axis it services, and position to not be blocked by the robot case.

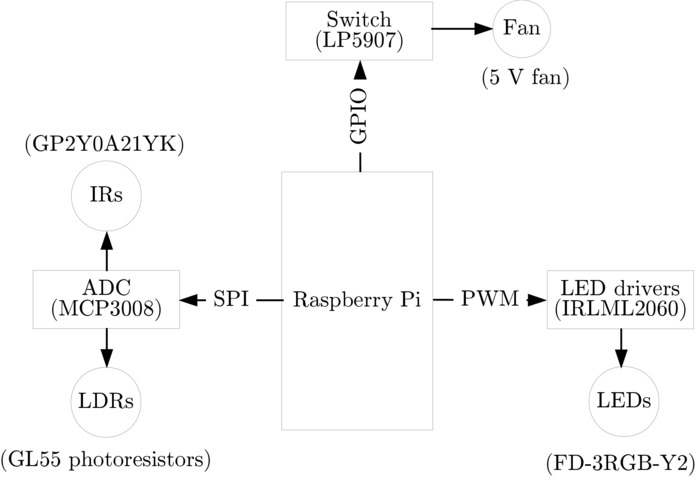

- Assemble all components with the single-board computer (refer to the block diagram in Figure 2). Ensure the computer can be easily accessed for maintenance after assembly.

- Interface LEDs to the computer via LED drivers using pulse width modulation. Use a fixed mechanical connection between the LEDs and either the case or the heatsink, and use a mechanically unconstrained connection between the LEDs and the computer.

- Interface the fans to the computer via a linear regulator (used as a switch) controlled from a general-purpose input/output (GPIO) header pin. Affix the fans where adequate airflow is available, while also ensuring no mechanical stress is placed on them.

- Interface the IR-proximity sensors and photoresistors via an analog-to-digital converter (ADC) over the serial peripheral interface (SPI). Use a fixed mechanical connection from the sensors to the case, and a mechanically unconstrained connection to the computer.
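For an MCP3008 converter (the part named in Figure 2), a single-ended reading over SPI uses a three-byte frame, sketched below. The channel assignment, bus and chip-select numbers, and clock speed are assumptions; `spidev` is the Python SPI binding commonly installed on Raspbian, and the helper names are hypothetical.

```python
from __future__ import annotations


def mcp3008_request(channel: int) -> list[int]:
    """Three-byte SPI frame requesting a single-ended conversion (channel 0-7)."""
    if not 0 <= channel <= 7:
        raise ValueError("the MCP3008 has input channels 0-7")
    # Byte 0: start bit. Byte 1: single-ended flag and channel in the top nibble.
    return [0x01, (0x08 | channel) << 4, 0x00]


def mcp3008_decode(response: list[int]) -> int:
    """Extract the 10-bit conversion result (0-1023) from the reply."""
    return ((response[1] & 0x03) << 8) | response[2]


def read_channel(spi, channel: int) -> int:
    """Full-duplex transfer on any object with a spidev-style xfer2() method."""
    return mcp3008_decode(spi.xfer2(mcp3008_request(channel)))


def open_adc(bus: int = 0, device: int = 0, speed_hz: int = 1_000_000):
    """Open the ADC on the robot (requires the spidev package and hardware)."""
    import spidev  # imported here so the helpers above work without hardware

    spi = spidev.SpiDev()
    spi.open(bus, device)
    spi.max_speed_hz = speed_hz
    return spi


# Hypothetical wiring: IR-proximity sensors on channels 0-1, LDRs on 2-5.
# spi = open_adc()
# ir_scaled = read_channel(spi, 0) / 1023  # raw counts scaled to 0..1
```

Keeping frame construction and decoding separate from the hardware call makes them testable on a desktop machine with a stand-in SPI object.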

- Manufacture the robot case from heat-resistant plastic using either selective laser sintering, stereolithography, fused deposition modelling, or injection molding.

- Integrate the robots into a set of modular mechanical supports that both hold the robots in position and serve as a climbing scaffold for the plants, restricting the plants’ likely average growth trajectories. Design the robots to serve as supplemental mechanical joints between the supports, positioned such that they intersect the plant growth trajectories.

- Minimize the size of the robot, and ensure that it can be reliably surpassed by an unsupported growing tip of the selected plant species. Reduce robot size to the greatest extent possible to increase experiment speed.

- Shape the external walls of the robot body to be as unobtrusive to plant growth as possible when a growing tip incrementally navigates around the robot. Round or facet the robot body to not block the helical trajectory of circumnutation in twining plant species. Exclude sharp protrusions and acute indentations.

- Select a material and profile (i.e., shape of cross-section) for the mechanical supports, such that the selected plant species can effectively climb them, for instance a wooden rod with a circular profile of roughly 8 mm diameter or less. Ensure the mechanical supports are structurally stiff enough to support the plants and robots within the setup, augmented by a transparent acrylic sheet behind the setup.

- On each robot include attachment points to anchor the specified mechanical supports. Include one for each direction by which a plant may approach or depart a robot.

- For each attachment point, include a socket in the robot case, with dimensions matching the cross-section of the support material.

- Set the socket with a depth not less than 1 cm. Keep the socket shallow enough that the support does not collide with components inside the robot.

- Arrange the mechanical supports in a regularly gridded pattern, uniformly diagonal with an angle of inclination at 45° or steeper. Make the lengths of the supports uniform. The minimum exposed length of the support is 30 cm, to allow sufficient room for the climbing plants to attach after exploring the area in their unsupported condition. The preferred exposed length is 40 cm or more, to allow some buffer for statistically extreme cases of plant attachment.

- Assemble the mechanical elements with the robots. The following protocol assumes an exposed support length of 40 cm, and a setup of eight robots in four rows (see Figure 6). For other sizes, scale accordingly.

- On the floor surface, build a stand 125 cm wide that is capable of holding the setup in an upright position.

- Affix a 125 cm x 180 cm sheet (8 mm thick or more) of transparent acrylic to the stand, such that it stands upright.

- Position pots with the appropriate soil on the stand, against the acrylic sheet.

- Affix two mechanical y-joints to the acrylic sheet, 10 cm above the pots. Position the joints 45 cm and 115 cm to the right, respectively, of the left edge of the stand.

- Affix two supports to the left y-joint, leaning 45° to the left and to the right, and affix one support to the right y-joint, leaning 45° to the left.

- Affix two robots to the acrylic sheet, and insert the ends of the previously placed supports into the sockets in the robot cases. Position the robots 35 cm above the y-joints, and 10 cm and 80 cm to the right, respectively, of the left edge of the stand.

- Repeat the pattern to affix the remaining robots and supports in the diagonally gridded pattern (see Figure 6), such that each row of robots is 35 cm above the previous row, and each robot is horizontally positioned directly above the robot or y-joint that is two rows beneath it.
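The assembly coordinates can be generated programmatically as a check before drilling or gluing. This sketch assumes that each 45° support spans 35 cm horizontally between rows, as implied by the 35 cm row spacing; under that assumption the robot at 80 cm is reached by a left-leaning support from a y-joint at 80 + 35 = 115 cm, which keeps every node within the 125 cm stand. The `Node` class and `layout` function are hypothetical.

```python
from __future__ import annotations

import math
from dataclasses import dataclass

ROW_PITCH_CM = 35.0     # vertical spacing between rows
JOINT_HEIGHT_CM = 10.0  # y-joints above the pots
RUN_CM = 35.0           # horizontal run of one 45° support between nodes


@dataclass(frozen=True)
class Node:
    kind: str    # "y-joint" or "robot"
    row: int     # 0 for y-joints, 1..n for robot rows
    x_cm: float  # distance from the left edge of the stand
    z_cm: float  # height above the pots


def layout(robot_rows: int = 4) -> list[Node]:
    """Positions of the y-joints and robots in the diagonal grid."""
    left = 10.0
    odd_row_x = (left, left + 2 * RUN_CM)             # 10 cm, 80 cm
    even_row_x = (left + RUN_CM, left + 3 * RUN_CM)   # 45 cm, 115 cm
    nodes = [Node("y-joint", 0, x, JOINT_HEIGHT_CM) for x in even_row_x]
    for row in range(1, robot_rows + 1):
        xs = odd_row_x if row % 2 else even_row_x
        z = JOINT_HEIGHT_CM + row * ROW_PITCH_CM
        nodes += [Node("robot", row, x, z) for x in xs]
    return nodes


for node in layout():
    print(node)
print(f"node-to-node support span: {math.hypot(RUN_CM, ROW_PITCH_CM):.1f} cm")
```

The 49.5 cm span is measured between node centers; the exposed support length is shorter because each end sits in a socket or joint.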

3. Robot software

- Install an operating system (e.g., Raspbian) on the single-board computers of the robots.

- During each experiment, run the software protocol on each robot in parallel, enabling their distributed autonomous behavior (see Wahby et al.14, for pseudocode and more details).

- Establish two possible states for the robot: one being a stimulus state during which the robot emits blue light at the intensity described above; the other being a dormant state during which the robot either emits no light or emits red light as described above.

- In stimulus state, send a pulse width modulation (PWM) signal from the single-board computer to the blue LED drivers, with a duty cycle corresponding to the required brightness.

- In dormant state, trigger no LEDs, or if needed send a PWM signal only to the red LED drivers.

- In control experiments, assign all the robots the dormant state.

- In single-decision experiments, assign one robot the dormant state and one robot the stimulus state.
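The two robot states and the fixed assignments for control and single-decision experiments can be expressed as a small lookup, sketched below. Brightness is set by the PWM duty cycle at a fixed frequency; the duty-cycle values, robot identifiers, and function names are hypothetical. On a Raspberry Pi, a value from `led_duty_cycles` could be applied with RPi.GPIO's `PWM.ChangeDutyCycle`, which expects a percentage from 0 to 100.

```python
from __future__ import annotations

STIMULUS = "stimulus"
DORMANT = "dormant"

# Hypothetical duty cycles (0..1); calibrate BLUE_DUTY to ~30 lm per direction.
BLUE_DUTY = 0.67
RED_DUTY = 0.50


def led_duty_cycles(state: str, red_when_dormant: bool = False) -> dict[str, float]:
    """PWM duty cycles for the blue and red LED drivers in the given state."""
    if state == STIMULUS:
        return {"blue": BLUE_DUTY, "red": 0.0}
    if state == DORMANT:
        return {"blue": 0.0, "red": RED_DUTY if red_when_dormant else 0.0}
    raise ValueError(f"unknown state {state!r}")


def initial_states(experiment: str, robot_ids: list[str],
                   stimulus_id: str | None = None) -> dict[str, str]:
    """State assignment for control and single-decision experiments."""
    if experiment == "control":
        return {rid: DORMANT for rid in robot_ids}
    if experiment == "single-decision":
        if len(robot_ids) != 2 or stimulus_id not in robot_ids:
            raise ValueError("need exactly two robots, one of them stimulus")
        return {rid: STIMULUS if rid == stimulus_id else DORMANT
                for rid in robot_ids}
    raise ValueError("multiple-decision experiments use map-based Initialization")


print(initial_states("single-decision", ["left", "right"], stimulus_id="left"))
print(led_duty_cycles(DORMANT, red_when_dormant=True))
```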

- In multiple-decision experiments, start the Initialization process, as follows.

- Supply to each robot a full configuration map of the pattern of plant growth to be tested in the current experiment.

- Set the location of the robot within the pattern, either automatically using localization sensors or manually.

- Compare the robot’s location to the supplied map. If the robot’s location is the first location on the map, set the robot to stimulus; otherwise, set the robot to dormant. Initialization process ends.

- In multiple-decision experiments, start the Steering process, as follows. Execute iteratively.

- Check the robot’s IR-proximity sensor reading to see if a plant has been detected.

- If a plant is detected and the robot is set to dormant, then take no action; the robot remains dormant.

- If a plant is detected and the robot is set to stimulus, then:

- Notify the adjacent neighboring robots that a plant has been detected, and include the robot’s location in the message.

- Set the robot to dormant.

- Compare the robot’s location to the map. If the robot is at the last location on the map, then send a signal over WLAN that the experiment is complete.

- Check the robot’s incoming messages from its adjacent neighboring robots to see if one of them that was set to stimulus has detected a plant.

- If a stimulus neighbor has detected a plant, compare that neighbor's location to the robot’s location, and also compare to the map.

- If the robot is at the subsequent location on the map, set the robot to stimulus.

- End the iterative loop of the Steering process once a signal has been received that the experiment is complete.
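The Initialization and Steering processes can be simulated on a single machine to check a configuration map before an experiment. In this sketch, locations are encoded as (row, horizontal position in cm) following the eight-robot layout, radio neighbors are approximated as robots in the same or an adjacent row, each location appears at most once in the map, and the plant is idealized as always reaching the active stimulus robot. All of these, and the class and function names, are assumptions rather than the published implementation (see Wahby et al.14 for the authors' pseudocode).

```python
from __future__ import annotations

from dataclasses import dataclass

Location = tuple[int, float]  # (row, x position in cm), hypothetical encoding


def adjacent(a: Location, b: Location) -> bool:
    """Hypothetical neighborhood: robots in the same or an adjacent row."""
    return a != b and abs(a[0] - b[0]) <= 1


@dataclass
class RobotNode:
    location: Location
    pattern: list[Location]  # full configuration map, identical on every robot
    state: str = "dormant"

    def initialize(self) -> None:
        # Only the robot at the first map location starts as stimulus.
        self.state = "stimulus" if self.location == self.pattern[0] else "dormant"

    def step(self, plant_detected: bool,
             inbox: list[Location]) -> list[tuple[str, Location]]:
        """One Steering iteration. `inbox` holds locations of stimulus
        neighbors that reported a detection; returns outgoing messages."""
        outgoing = []
        if plant_detected and self.state == "stimulus":
            outgoing.append(("detected", self.location))
            self.state = "dormant"
            if self.location == self.pattern[-1]:
                outgoing.append(("complete", self.location))
        # A dormant robot that detects a plant takes no action.
        for sender in inbox:
            if sender in self.pattern:
                nxt = self.pattern.index(sender) + 1
                if nxt < len(self.pattern) and self.pattern[nxt] == self.location:
                    self.state = "stimulus"
        return outgoing


def run(pattern: list[Location], locations: list[Location],
        max_rounds: int = 50) -> list[Location]:
    """Simulate an idealized plant that always reaches the stimulus robot."""
    robots = {loc: RobotNode(loc, pattern) for loc in locations}
    for robot in robots.values():
        robot.initialize()
    visited = []
    for _ in range(max_rounds):
        active = [loc for loc, r in robots.items() if r.state == "stimulus"]
        if len(active) != 1:
            raise RuntimeError(f"expected one stimulus robot, found {active}")
        target = active[0]
        visited.append(target)
        messages = robots[target].step(plant_detected=True, inbox=[])
        if any(kind == "complete" for kind, _ in messages):
            return visited
        senders = [loc for kind, loc in messages if kind == "detected"]
        for loc, robot in robots.items():
            if adjacent(loc, target):
                robot.step(plant_detected=False, inbox=senders)
    raise RuntimeError("pattern not completed")


ALL = [(1, 10.0), (1, 80.0), (2, 45.0), (2, 115.0),
       (3, 10.0), (3, 80.0), (4, 45.0), (4, 115.0)]
ZIGZAG = [(1, 10.0), (2, 45.0), (3, 10.0), (4, 45.0)]
print(run(ZIGZAG, ALL))
```

On the robots themselves, `step` would be called once per loop iteration with the filtered IR-proximity decision and the messages received over radio; the simulation only replaces those inputs.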

4. Plant health monitoring and maintenance procedure

- Locate the experiment setup in controlled environmental conditions, specifically indoors with no incident daylight or other light external to the conditions described below, with controlled air temperature and humidity, and with controlled soil watering. Monitor the conditions with sensors connected to a WLAN-enabled microcontroller or single-board computer.

- Maintain plant photosynthesis using LED growth lamps external to the robots and facing the experiment setup.

- Use the growth lamps to deliver monochromatic red light to the setup, with red diodes having peak emission at approximately λmax = 625–650 nm, with no critical wavelengths outside the range 550–700 nm, except for a low incidence of ambient blue light if helpful for the health of the selected species. If a low incidence of ambient blue light is included, restrict it to a very small fraction of the level emitted by a single robot.

- Provide the levels of red light required for the health of the selected species, usually roughly 2000 lumens or more in total.

- Orient the growth lamps to face the experiment setup, such that their emittance is distributed roughly evenly over the growth area.

- Monitor the ambient light conditions using an RGB color sensor.

- After germination, provide each plant its own pot at the base of the experiment setup. Provide suitable soil volume and type for the selected species. Ensure the soil and seeds have been sanitized prior to germination. Use suitable pest control methods to prevent or manage insects if present.

- Regulate air temperature and humidity as appropriate for the selected species, using heaters, air conditioners, humidifiers, and dehumidifiers. Monitor levels using a temperature-pressure-humidity sensor.

- Monitor the soil using a soil moisture sensor. Maintain an appropriate rate of watering for the selected species, either with an automated watering system that delivers water to the soil via nozzles when triggered by the soil moisture sensor readings, or by watering manually as guided by the sensor readings.
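The monitoring and watering rules could be combined into one check that runs on each new set of environmental readings, as in the sketch below. Apart from the approximately 27 °C air temperature, the target bands are hypothetical and depend on the selected species; soil moisture is assumed to be normalized to 0..1, and the names are illustrative.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Band:
    low: float
    high: float

    def contains(self, value: float) -> bool:
        return self.low <= value <= self.high


# Hypothetical target bands; only the ~27 °C air temperature is from the protocol.
BANDS = {
    "air_temp_c": Band(25.0, 29.0),
    "rel_humidity_pct": Band(50.0, 80.0),
    "soil_moisture": Band(0.35, 0.60),  # normalized sensor reading, 0..1
}


def check_environment(reading: dict[str, float]) -> tuple[list[str], bool]:
    """Return (parameters missing or out of band, whether to trigger watering)."""
    alerts = [name for name, band in BANDS.items()
              if name not in reading or not band.contains(reading[name])]
    # Water only on a valid reading below the lower moisture bound.
    moisture = reading.get("soil_moisture")
    water_now = moisture is not None and moisture < BANDS["soil_moisture"].low
    return alerts, water_now


print(check_environment({"air_temp_c": 27.2, "rel_humidity_pct": 62.0,
                         "soil_moisture": 0.31}))
```

Treating a missing reading as an alert, rather than silently skipping it, surfaces sensor failures during long unattended runs.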

5. Experiment design

- Place robots and mechanical supports in a grid large enough to cover the growth area and pattern being tested in the experiment, not smaller than one row and two columns of robots.

- Below the bottom row of robots, place a row of the standard diagonal mechanical supports, matching those throughout the setup. Where the lower ends of these supports intersect, join them mechanically with a ‘y-joint.’ For each ‘y-joint’ at the base of the setup, plant a uniform number of plants according to the size of the diagonal grid cell (roughly one plant per 10 cm of exposed mechanical support length), with the plant health maintenance conditions described above.

- Select an experiment type to run, and where relevant select a quantity and distribution of robots.

- Experiment type 1: Control

NOTE: This experiment type tests growth of the climbing plants in the absence of light stimuli that would trigger phototropism. It can run on any size and shape of setup.

- Assign all robots the dormant state (see step 3.4) and run continuously until results are manually assessed to be complete.

- Observe whether plants attach to the mechanical supports. In a successful experiment, none of the plants will find or attach to the mechanical supports.

- Experiment type 2: Single-decision

NOTE: This experiment type tests the plants’ growth trajectories when presented with binary options: one support leading to a dormant robot and one support leading to a stimulus robot. It runs only on the minimum setup (i.e., one row, two columns).

- Assign one robot the dormant state (see 3.5) and one robot the stimulus state. Run continuously until one of the two robots detects a plant with the IR-proximity sensor.

- Observe plant attachment to the mechanical support, growth along the support, and the sensor readings of the stimulus robot. In a successful experiment, the robot in the stimulus state will detect a plant after the plant has grown along the respective support.

- Experiment type 3: Multiple-decision

NOTE: This experiment type tests the plants’ growth when presented with multiple subsequent stimuli conditions, which trigger a series of decisions according to a predefined global map. It can run on any size and shape of setup that has more than the minimum number of rows (i.e., two or more).

- Provide to the robots a global map of the pattern to be grown (see steps 3.6-3.7.7).

- Observe the plant attachment events and pattern of growth along the mechanical supports.

- In a successful experiment, at least one plant will have grown on each support present in the global map.

- Additionally, in a successful experiment no plant will have chosen the incorrect direction when its growing tip is located at the currently active decision point.

- Do not consider extraneous growing tips here, if for instance a branching event places a new growing tip at an obsolete location on the map.
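Once observations have been tabulated from the time-lapse images, the success criteria for the control and multiple-decision experiments reduce to simple set comparisons, as sketched here. The support identifiers and input format are hypothetical, and extraneous tips (for instance from branching) must be filtered out before calling.

```python
from __future__ import annotations


def control_success(attached_supports: set[str]) -> bool:
    """Control: no plant has attached to any mechanical support."""
    return not attached_supports


def multiple_decision_success(map_supports: set[str],
                              grown_supports: set[str],
                              decisions: list[tuple[str, str]]) -> bool:
    """Multiple decisions: every support in the global map carries at least
    one plant, and every choice made by a tip at the currently active
    decision point followed the map. `decisions` holds (chosen, expected)
    support pairs for those tips only."""
    covered = map_supports <= grown_supports
    no_wrong_turns = all(chosen == expected for chosen, expected in decisions)
    return covered and no_wrong_turns


zigzag = {"s1", "s2", "s3", "s4"}
print(multiple_decision_success(zigzag, zigzag, [("s1", "s1"), ("s2", "s2")]))
print(control_success({"s1"}))
```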


6. Recording Procedure

- Store data from sensors and cameras initially on the single-board computer where the data has been generated. Run onboard reply servers that respond to requests, such as for the last stored sensor reading. At regular intervals, upload the data and log files over WLAN to a local network-attached storage (NAS) device.

- Capture time-lapse videos of the experiments continuously using cameras positioned at two or more vantage points, with at least one camera view encompassing the full experiment setup. Ensure the captured images are of high enough resolution to adequately capture the movements of the plant growing tips, typically only a few millimeters in width.

- Automate the image capture process to ensure consistent time intervals between captures, using an onboard camera on a single-board computer or a stand-alone digital camera automated with an intervalometer. Install lamps to act as flashes, automated similarly to the cameras. Ensure the flashes are bright enough to outshine the red light of the growth lamps, so that the images do not require heavy color correction in post-processing.

- Locate the flashes such that the experiment setup can be fully illuminated and therefore clearly visible in images. Synchronize the cameras and the flashes such that all cameras capture images simultaneously, during a 2 s flash period. Capture the images every 2 minutes, for the duration of each experiment.
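The capture schedule implies a predictable number of frames per camera, which helps when sizing storage. The sketch below generates the synchronized trigger times for the 2 min interval and 2 s flash period; the helper names are hypothetical, and the seven-week duration is taken from the multiple-decision experiments.

```python
from __future__ import annotations

from datetime import datetime, timedelta
from typing import Iterator

CAPTURE_INTERVAL = timedelta(minutes=2)
FLASH_DURATION = timedelta(seconds=2)


def capture_times(start: datetime, duration: timedelta,
                  interval: timedelta = CAPTURE_INTERVAL) -> Iterator[datetime]:
    """Trigger times shared by all cameras and flashes, from start until end."""
    if FLASH_DURATION >= interval:
        raise ValueError("the flash period must end before the next capture")
    t, end = start, start + duration
    while t < end:
        yield t
        t += interval


def frame_count(duration: timedelta,
                interval: timedelta = CAPTURE_INTERVAL) -> int:
    """Frames per camera: ceiling of duration / interval."""
    return -(-duration // interval)


print(frame_count(timedelta(weeks=7)))  # 35280 frames per camera
first = capture_times(datetime(2024, 1, 1, 8, 0), timedelta(minutes=6))
print([t.strftime("%H:%M") for t in first])  # ['08:00', '08:02', '08:04']
```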

- Log the environmental sensor data, specifically the readings from the temperature-pressure-humidity sensor, the RGB color sensor, and the soil moisture sensor. Log the data from all robots in the setup, specifically the IR-proximity sensor and photoresistor readings, as well as the internal state of the robot which defines its LED emittance status.
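A line-oriented log keeps each record self-contained, which simplifies both the periodic upload to the NAS and answering a "last reading" request from an onboard reply server. The JSON-lines format, field names, direction labels, and helpers below are assumptions, not the authors' format.

```python
from __future__ import annotations

import json
import time
from pathlib import Path
from typing import Iterable


def robot_record(robot_id: str, ir: dict[str, int], ldr: dict[str, int],
                 state: str, t: float | None = None) -> str:
    """One JSON line with a robot's sensor readings and LED emittance state."""
    return json.dumps({
        "t": time.time() if t is None else t,
        "robot": robot_id,
        "ir": ir,      # raw ADC counts per approach direction
        "ldr": ldr,    # raw ADC counts per neighbor direction
        "state": state,
    }, sort_keys=True)


def append_record(path: Path, line: str) -> None:
    """Append to the onboard log; a periodic job can copy the file to the NAS."""
    with path.open("a", encoding="utf-8") as f:
        f.write(line + "\n")


def last_record(lines: Iterable[str]) -> dict | None:
    """Most recent valid record, e.g. to answer a 'last reading' request."""
    last = None
    for line in lines:
        line = line.strip()
        if not line:
            continue
        try:
            last = json.loads(line)
        except json.JSONDecodeError:
            continue  # skip a partially written line
    return last


log = [robot_record("r1", {"left": 210, "right": 195}, {"up": 40}, "stimulus", t=0.0),
       robot_record("r1", {"left": 640, "right": 198}, {"up": 41}, "dormant", t=60.0)]
print(last_record(log)["state"])  # dormant
```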

- Make all recorded data available for remote monitoring of the experiments, via regular real-time reports, to ensure correct conditions are maintained for the full experiment duration up to several months.

Representative Results

Control: Plant Behavior without Robotic Stimuli.

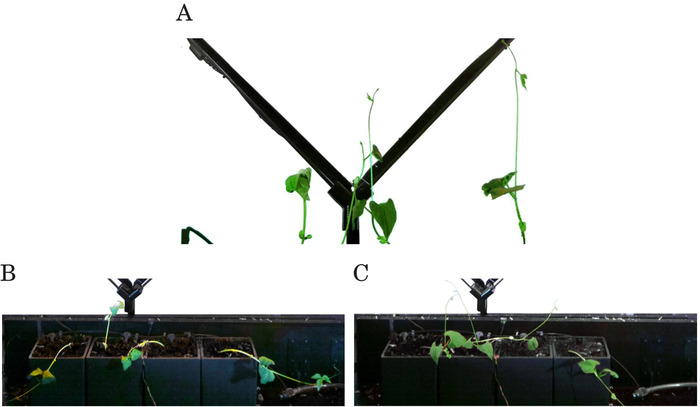

Due to the lack of blue light (i.e., all robots are dormant), positive phototropism is not triggered in the plants. Therefore, the plants grow upwards without directional bias, following negative gravitropism. They also display typical circumnutation (i.e., winding), see Figure 4A. As expected, the plants fail to find the mechanical supports leading to the dormant robots. The plants collapse when they can no longer support their own weight. We stop the experiments when at least two plants collapse, see Figure 4B,C.

Single or Multiple Decisions: Plant Behavior with Robotic Stimuli

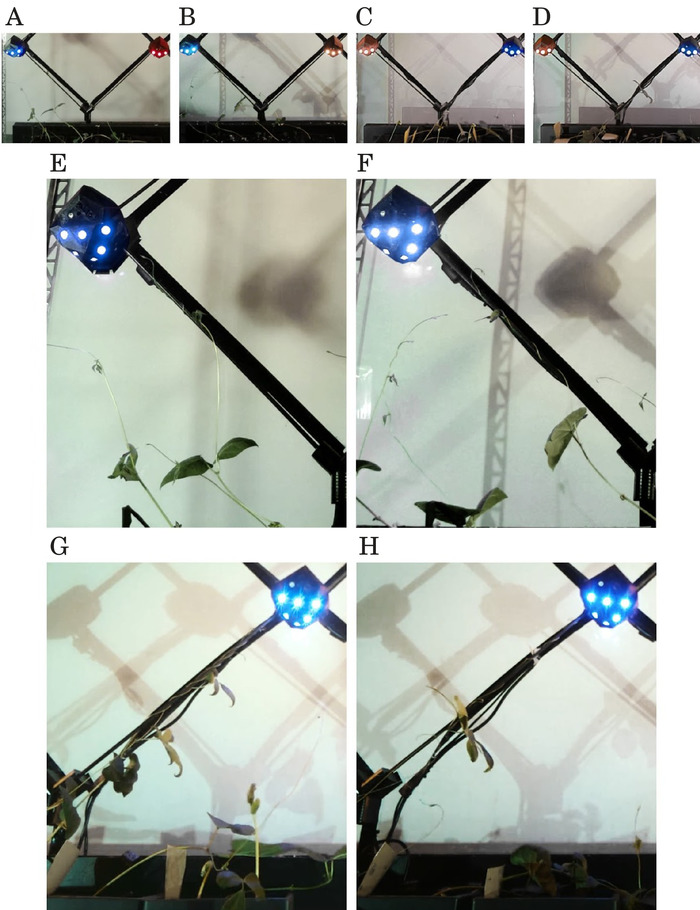

In four single-decision experiments, two runs have leftward steering (i.e., the robot left of the bifurcation is activated to stimulus), and two runs have rightward steering. The stimulus robots successfully steer the plants towards the correct support, see Figure 5. The nearest plant with stem angle most similar to that of the correct support attaches first. In each experiment, at least one plant attaches to the support and climbs it until it reaches the stimulus robot and thereby ends the experiment. In one experiment, a second plant attaches to the correct support. The remaining plants might attach as well in longer experiment durations. None of the plants attaches to the incorrect support. Each experiment runs continuously for 13 days on average.

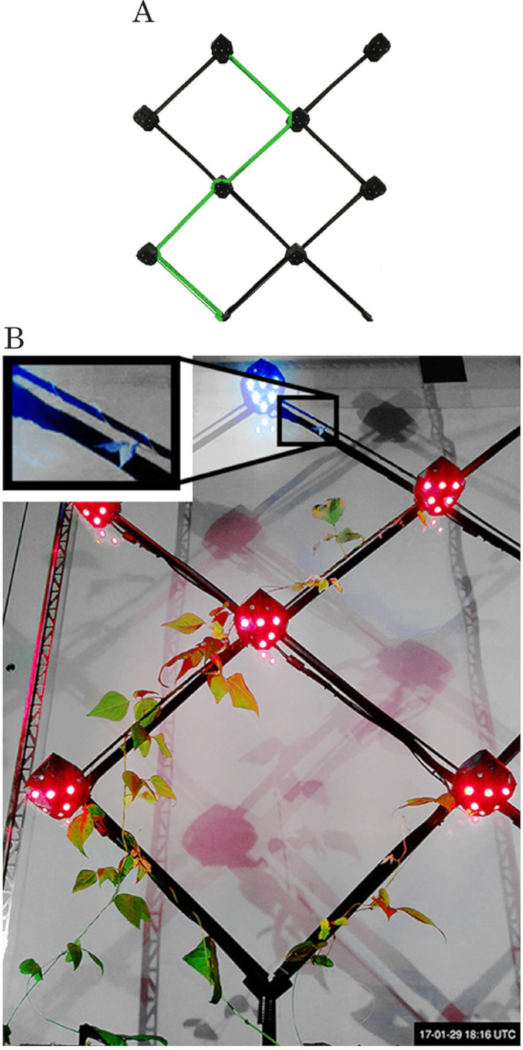

In two multiple-decision experiments, the plants grow into a predefined zigzag pattern, see Figure 6A. Each experiment runs for approximately seven weeks. As an experiment starts, a robot sets its status to stimulus (see 3.6.3) and steers the plants towards the correct support according to the stipulated pattern. A plant attaches to the support, climbs it, and arrives at the active stimulus robot, thereby completing the first decision. According to 3.7.3, the current stimulus robot then becomes dormant and notifies its adjacent neighbors. The dormant neighbor that is next on the zigzag pattern switches itself to stimulus (see 3.7.6). If a plant is detected by a dormant robot, that robot does not react (see 3.7.2). The plants continue and complete the remaining three decisions successfully. The predefined zigzag pattern is therefore fully grown, see Figure 6B.

All experiment data, as well as videos, are available online24.

Figure 1. The immobile robot and its primary components. Figure reprinted from author publication Wahby et al.14, used with Creative Commons license CC-BY 4.0 (see supplemental files), with modifications as permitted by license.

Figure 2. Component diagram of the immobile robot electronics. IRLML2060 LED drivers are interfaced with the robot's single-board computer (e.g., Raspberry Pi) via PWM to control the brightness of the LEDs. An LP5907 switch is interfaced with the single-board computer via a general-purpose input/output (GPIO) header pin to control the fan. An MCP3008 analog-to-digital converter (ADC) is interfaced with the single-board computer via the serial peripheral interface (SPI) to read the analog IR-proximity and light-dependent resistor (LDR) sensor data.
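Reading the IR and LDR sensors through the MCP3008 comes down to a 3-byte SPI exchange. The sketch below builds the single-ended read request and decodes the 10-bit result as described in the MCP3008 datasheet; the channel assignment (`IR_CHANNEL`, `LDR_CHANNEL`) and the 3.3 V reference voltage are assumptions about the wiring, not details from the text. The SPI transfer is passed in as a function so the logic can be tested without hardware.

```python
# Hypothetical channel assignment; the actual wiring may differ.
IR_CHANNEL = 0
LDR_CHANNEL = 1


def mcp3008_request(channel):
    """Build the 3-byte SPI request for a single-ended read of `channel` (0-7)."""
    if not 0 <= channel <= 7:
        raise ValueError("the MCP3008 has channels 0-7")
    # Byte 0 carries the start bit; byte 1 carries SGL/DIFF=1 and the channel bits.
    return [0x01, (0x08 | channel) << 4, 0x00]


def mcp3008_decode(response):
    """Extract the 10-bit conversion result from the 3-byte SPI response."""
    return ((response[1] & 0x03) << 8) | response[2]


def read_volts(transfer, channel, vref=3.3):
    """Read one channel via `transfer` (e.g., spidev's xfer2) and convert to volts.

    vref=3.3 assumes the ADC reference is tied to the Pi's 3.3 V rail.
    """
    counts = mcp3008_decode(transfer(mcp3008_request(channel)))
    return counts * vref / 1023
```

On the robot, `transfer` could be the `xfer2` method of a `spidev.SpiDev` object, e.g., after `spi = spidev.SpiDev()` and `spi.open(0, 0)`; the bus and chip-select numbers here are assumptions.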

Figure 3. Shortly after '03.04.16', a plant tip climbs a support and arrives in the robot's field of view. (A) Sample IR-proximity sensor scaled voltage readings (vertical axis) during an experiment. Higher values indicate plant tip detection. (B) The IR-proximity sensor is placed and oriented according to the support attachment to ensure effective plant tip detection. Figure reprinted from author publication Wahby et al.14, used with Creative Commons license CC-BY 4.0 (see supplemental files), with modifications as permitted by license.
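Because higher scaled IR readings indicate a plant tip, detection can be reduced to a threshold test. The sketch below is one plausible, debounced variant and not necessarily the authors' rule: it reports a detection only when the median of the last few readings exceeds a threshold, so a single-sample spike does not trigger a status change. The class name `TipDetector` and the `threshold` and `window` values are hypothetical tuning parameters.

```python
from collections import deque
from statistics import median


class TipDetector:
    """Debounced threshold detector for scaled IR-proximity readings (sketch).

    `threshold` and `window` are hypothetical values that would need tuning
    against recorded sensor data.
    """

    def __init__(self, threshold=0.5, window=5):
        self.threshold = threshold
        self.samples = deque(maxlen=window)  # sliding window of recent readings

    def update(self, reading):
        """Add one scaled reading; return True once the window median exceeds the threshold."""
        self.samples.append(reading)
        if len(self.samples) < self.samples.maxlen:
            return False  # not enough history yet
        return median(self.samples) > self.threshold
```

With a window of five, an isolated high reading among low ones leaves the median low, whereas three consecutive high readings push it over the threshold.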

Figure 4. Control experiment result frames showing that none of the four plants attached to any support in the absence of blue light. (A) After five days, all plants are growing upwards in one of the control experiments (see (C) for the later growth condition). (B) After 15 days, three plants have collapsed and one is still growing upwards in the first control experiment. (C) After seven days, two plants have collapsed and two are still growing upwards in the second control experiment (see (A) for the earlier growth condition). Figure reprinted from author publication Wahby et al.14, used with Creative Commons license CC-BY 4.0 (see supplemental files), with modifications as permitted by license.

Figure 5. Single-decision experiment result frames showing the ability of a stimulus robot to steer the plants through a binary decision to climb the correct support. In all four experiments, one robot is set to stimulus and the other to dormant, on opposite sides of a junction. The frames show the plants' locations right before the stimulus robot detects them. In each experiment, at least one plant attaches to the correct support, and no plant attaches to the incorrect one. The unsupported plants also show growth biased towards the stimulus robot. (E), (F), (G), and (H) are close-ups of (A), (B), (C), and (D), respectively. Figure reprinted from author publication Wahby et al.14, used with Creative Commons license CC-BY 4.0 (see supplemental files), with modifications as permitted by license.

Figure 6. Multiple-decision experiment. (A) The targeted zigzag pattern is highlighted in green on the map. (B) The last frame from the experiment (after 40 days), showing the plants just before the last stimulus robot on the pattern detects them. The robots successfully grow the zigzag pattern. Figure reprinted from author publication Wahby et al.14, used with Creative Commons license CC-BY 4.0 (see supplemental files), with modifications as permitted by license.

Discussion

The presented methodology shows initial steps toward automating the stimuli-driven steering of plant growth to generate specific patterns. This requires continuous maintenance of plant health while combining, in a single experiment setup, the distinct realms of biochemical growth responses and engineered mechatronic functions: sensing, communication, and controlled generation of stimuli. As our focus here is on climbing plants, mechanical support is also integral. A limitation of the current setup is its scale, but we believe the methodology scales easily: the mechanical scaffold can be extended for larger setups and therefore longer periods of growth, which also allows expanded configurations and patterns. Here the setup is limited to two dimensions and binary left-right decisions, as growth is restricted to a grid of mechanical supports at 45° inclination, and plant decision positions are restricted to that grid's bifurcations. Mechanical extensions may include 3D scaffolds and different materials to allow for complex shapes9,19.

The methodology can be considered a system to automatically grow patterns defined by a user. By extending the possible complexity of mechanical configurations, users should face few restrictions on their desired patterns. For such an application, a user software tool should confirm that the pattern is producible, and the mechatronics should then self-organize the production of the pattern by generating appropriate stimuli to steer the plants. The software should also be extended to include recovery plans and policies that determine how to continue growth if the originally planned pattern has partially failed, for instance if the first activated robot never detects a plant but dormant robots detect growing tips positioned beyond it.
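The producibility check mentioned above could, for the 2D grid used here, be as simple as walking the pattern through the scaffold. The sketch below is a hypothetical model, not part of the published software: each binary decision moves the plant one level up and one column left (`"L"`) or right (`"R"`), and a pattern is producible if it stays inside a grid of `columns` columns and `levels` decision levels. The returned positions are the robots that would be activated in order; the functions `plan_pattern` and `is_producible` and the grid dimensions are illustrative assumptions.

```python
def plan_pattern(decisions, start_column, columns, levels):
    """Map a left/right pattern onto a hypothetical 45-degree support grid.

    Returns the ordered (level, column) positions whose robots would be set
    to stimulus, or raises ValueError if the pattern cannot be grown.
    """
    if len(decisions) > levels:
        raise ValueError("pattern needs more decision levels than the scaffold has")
    column = start_column
    targets = []
    for level, decision in enumerate(decisions, start=1):
        if decision == "L":
            column -= 1
        elif decision == "R":
            column += 1
        else:
            raise ValueError(f"unknown decision {decision!r}; use 'L' or 'R'")
        if not 0 <= column < columns:
            raise ValueError(f"decision {level} leaves the grid at column {column}")
        targets.append((level, column))
    return targets


def is_producible(decisions, start_column, columns, levels):
    """True if the pattern fits the grid, False otherwise."""
    try:
        plan_pattern(decisions, start_column, columns, levels)
        return True
    except ValueError:
        return False
```

For example, a zigzag such as `"LRLR"` starting in the middle column of a three-column, four-level grid yields four alternating target positions, whereas `"LL"` from the same start runs off the left edge and is rejected.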

In the presented methodology, one plant species that meets the protocol's selection criteria is the climbing common bean, P. vulgaris, which is the species used in the representative results. P. vulgaris shows strong positive phototropism to UV-A and blue light, as its phototropins (light-receptor proteins) absorb photons at wavelengths of 340-500 nm. When the receptors are triggered, the stem first swells through the preferential relocation of water to the stem tissues opposite the triggered receptors, causing a reversible directional response. Then, within the stem, auxin (a plant patterning hormone) is directed to the same tissue location, perpetuating the directional response and fixing the stem tissues as they stiffen. This behavior can be used to shape the plants under these controlled indoor conditions, as the plants are exposed only to isolated blue light and isolated red light; the far-red light incident from the IR-proximity sensors is at levels low enough not to interfere with behaviors such as the shade-avoidance response20,21. In the setup, the phototropic response is triggered by blue diodes with peak emission λmax = 465 nm, and photosynthesis22,23 is supported by red diodes with peak emission λmax = 650 nm. P. vulgaris, which grows up to several meters in height, suits the overall setup, as the roughly 3 L of commercial gardening soil needed per pot fits the setup scale.
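The division of labor between the two diode types follows from the phototropin absorption range given above. The short sketch below simply checks each diode's peak wavelength against the 340-500 nm band; the dictionary keys are hypothetical labels. It is a simplification: only the peak is tested, while real LEDs emit over a spectral width whose tails could still overlap the band.

```python
# Phototropin absorption band stated in the text (UV-A and blue light).
PHOTOTROPIN_BAND_NM = (340, 500)

# Peak emission wavelengths of the two diode types in the setup (labels are hypothetical).
LED_PEAKS_NM = {"blue stimulus": 465, "red photosynthesis": 650}


def in_phototropin_band(peak_nm, band=PHOTOTROPIN_BAND_NM):
    """Return True if a diode's peak wavelength falls inside the band.

    Simplification: only the peak is checked, not the full emission spectrum.
    """
    low, high = band
    return low <= peak_nm <= high


# Diode types expected to drive phototropic steering.
steering_channels = [name for name, nm in LED_PEAKS_NM.items() if in_phototropin_band(nm)]
```

Only the blue diodes fall in the band, which is consistent with using them as the steering stimulus while the red diodes support photosynthesis without acting as a directional cue.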

Although the current setup focuses on light as an attraction stimulus, additional stimuli may be relevant for other experiment types. If the desired pattern requires separating different groups of plants (e.g., two groups of plants must choose opposite sides), a single stimulus type may not suffice. For such complex growth patterns, independent of scaffold shape, the different groups of plants could be grown in different time periods so that their respective attraction stimuli do not interfere, which would also allow branching events to be integrated. However, this may not always be a suitable solution, and the standard attractive light stimulus could then be augmented by repelling influences such as shading, or by other stimuli such as far-red light or vibration motors9,14.

The presented method and experiment design are only a first step towards a sophisticated methodology for automatically influencing the directional growth of plants. The experiment setup is basic: it determines only a sequence of binary decisions in the plants, and it focuses on a single, easy-to-manage stimulus. Additional studies would be required to establish the statistical significance of the results, to add more stimuli, and to control other processes such as branching. With sufficient development to guarantee the long-term reliability of the robots, the presented methodology could allow plant experiments to be automated over long time periods, reducing the overhead associated with studying plant development stages beyond that of shoots. Similar methods could enable future investigations into the underexplored dynamics between biological organisms and autonomous robots when the two act as tightly coupled, self-organizing bio-hybrid systems.

Disclosures

The authors have nothing to disclose.

Acknowledgements

This study was supported by the flora robotica project, which received funding from the European Union's Horizon 2020 research and innovation programme under FET grant agreement no. 640959. The authors thank Anastasios Getsopulos and Ewald Neufeld for their contribution in hardware assembly, and Tanja Katharina Kaiser for her contribution in monitoring plant experiments.

Materials

| Name of Material/Equipment | Company | Catalog Number | Comments/Description |
|---|---|---|---|
| 3D printed case | Shapeways, Inc | n/a | Customized product, https://www.shapeways.com/ |
| 3D printed joints | n/a | n/a | Produced by authors |
| Adafruit BME280 I2C or SPI Temperature Humidity Pressure Sensor | Adafruit | 2652 | |
| Arduino Uno Rev 3 | Arduino | A000066 | |
| CdS photoconductive cells | Lida Optical & Electronic Co., Ltd | GL5528 | |
| Cybertronica PCB | Cybertronica Research | n/a | Customized product, http://www.cybertronica.de.com/download/D2_node_module_v01_appNote16.pdf |
| DC Brushless Blower Fan | Sunonwealth Electric Machine Industry Co., Ltd. | UB5U3-700 | |
| Digital temperature sensor | Maxim Integrated | DS18B20 | |
| High Power (800 mA) EPILED – Far Red / Infra Red (740-745 nm) | Future Eden Ltd. | n/a | |
| I2C Soil Moisture Sensor | Catnip Electronics | v2.7.5 | |
| IR-proximity sensors (4-30 cm) | Sharp Electronics | GP2Y0A41SK0 | |
| LED flashlight (50 W) | Inter-Union Technohandel GmbH | 103J50 | |
| LED Red Blue Hanging Light for Indoor Plant (45 W) | Erligpowht | B00S2DPYQM | |
| Low-voltage submersible pump 600 L/h (6 m rise) | Peter Barwig Wasserversorgung | 444 | |
| Medium density fibreboard | n/a | n/a | For stand |
| Micro-Spectrometer (Hamamatsu) on an Arduino-compatible breakout board | Pure Engineering LLC | C12666MA | |
| Pixie – 3W Chainable Smart LED Pixel | Adafruit | 2741 | |
| Pots (3.5 L holding capacity, 15.5 cm in height) | n/a | n/a | |
| Power supplies (5 V, 10 A) | Adafruit | 658 | |
| Raspberry Pi 3 Model B | Raspberry Pi Foundation | 3B | |
| Raspberry Pi Camera Module V2 | Raspberry Pi Foundation | V2 | |
| Raspberry Pi Zero | Raspberry Pi Foundation | Zero | |
| RGB Color Sensor with IR filter and White LED – TCS34725 | Adafruit | 1334 | |
| Sowing and herb soil | Gardol | n/a | |
| String bean | SPERLI GmbH | 402308 | |
| Transparent acrylic 5 mm sheet | n/a | n/a | For supplemental structural support |
| Wooden rods (birch wood), painted black, 5 mm diameter | n/a | n/a | For plants to climb |

References

- Åstrand, B., Baerveldt, A. J. An agricultural mobile robot with vision-based perception for mechanical weed control. Autonomous Robots. 13 (1), 21-35 (2002).

- Blackmore, B. S. A systems view of agricultural robots. Proceedings of 6th European conference on precision agriculture (ECPA). , 23-31 (2007).

- Edan, Y., Han, S., Kondo, N. Automation in agriculture. Springer handbook of automation. , 1095-1128 (2009).

- Van Henten, E. J., et al. An autonomous robot for harvesting cucumbers in greenhouses. Autonomous Robots. 13 (3), 241-258 (2002).

- Al-Beeshi, B., Al-Mesbah, B., Al-Dosari, S., El-Abd, M. iplant: The greenhouse robot. Proceedings of IEEE 28th Canadian Conference on Electrical and Computer Engineering (CCECE). , 1489-1494 (2015).

- Giraldo, J. P., et al. Plant nanobionics approach to augment photosynthesis and biochemical sensing. Nature Materials. 13 (4), (2014).

- Mazarei, M., Teplova, I., Hajimorad, M. R., Stewart, C. N. Pathogen phytosensing: Plants to report plant pathogens. Sensors. 8 (4), 2628-2641 (2008).

- Zimmermann, M. R., Mithöfer, A., Will, T., Felle, H. H., Furch, A. C. Herbivore-triggered electrophysiological reactions: candidates for systemic signals in higher plants and the challenge of their identification. Plant Physiology. , 01736 (2016).

- Hamann, H., et al. Flora robotica–An Architectural System Combining Living Natural Plants and Distributed Robots. (2017).

- Arkin, R. C., Egerstedt, M. Temporal heterogeneity and the value of slowness in robotic systems. Proceedings of IEEE International Conference on Robotics and Biomimetics (ROBIO). , 1000-1005 (2015).

- Mahlein, A. K. Plant disease detection by imaging sensors-parallels and specific demands for precision agriculture and plant phenotyping. Plant Disease. 100 (2), 241-251 (2016).

- Wahby, M., et al. A robot to shape your natural plant: the machine learning approach to model and control bio-hybrid systems. Proceedings of the Genetic and Evolutionary Computation Conference (GECCO '18). , 165-172 (2018).

- Bastien, R., Douady, S., Moulia, B. A unified model of shoot tropism in plants: photo-, gravi- and propio-ception. PLoS Computational Biology. 11 (2), e1004037 (2015).

- Wahby, M., et al. Autonomously shaping natural climbing plants: a bio-hybrid approach. Royal Society Open Science. 5 (10), 180296 (2018).

- Liscum, E., et al. Phototropism: growing towards an understanding of plant movement. Plant Cell. 26, 38-55 (2014).

- Christie, J. M., Murphy, A. S. Shoot phototropism in higher plants: new light through old concepts. American Journal of Botany. 100, 35-46 (2013).

- Migliaccio, F., Tassone, P., Fortunati, A. Circumnutation as an autonomous root movement in plants. American Journal of Botany. 100, 4-13 (2013).

- Gianoli, E. The behavioural ecology of climbing plants. AoB Plants. 7, (2015).

- Vestartas, P., et al. Design Tools and Workflows for Braided Structures. Proceedings of Humanizing Digital Reality. , 671-681 (2018).

- Pierik, R., De Wit, M. Shade avoidance: phytochrome signalling and other aboveground neighbour detection cues. Journal of Experimental Botany. 65 (10), 2815-2824 (2014).

- Fraser, D. P., Hayes, S., Franklin, K. A. Photoreceptor crosstalk in shade avoidance. Current Opinion in Plant Biology. 33, 1-7 (2016).

- Hogewoning, S. W., et al. Photosynthetic Quantum Yield Dynamics: From Photosystems to Leaves. The Plant Cell. 24 (5), 1921-1935 (2012).

- McCree, K. J. The action spectrum, absorptance and quantum yield of photosynthesis in crop plants. Agricultural Meteorology. 9, 191-216 (1971).

- Autonomously shaping natural climbing plants: a bio-hybrid approach [Dataset]. Available from: https://doi.org/10.5281/zenodo.1172160 (2018).