Using Light Sheet Fluorescence Microscopy to Image Zebrafish Eye Development

Summary

Light sheet fluorescence microscopy is an excellent tool for imaging embryonic development. It allows recording of long time-lapse movies of live embryos in near physiological conditions. We demonstrate its application for imaging zebrafish eye development across wide spatio-temporal scales and present a pipeline for fusion and deconvolution of multiview datasets.

Abstract

Light sheet fluorescence microscopy (LSFM) is becoming increasingly popular as a method to image embryonic development. The main advantages of LSFM compared to confocal systems are its low phototoxicity, gentle mounting strategies, fast acquisition with a high signal-to-noise ratio and the possibility of imaging samples from various angles (views) for long periods of time. Imaging from multiple views unleashes the full potential of LSFM, but at the same time it can create terabyte-sized datasets. Processing such datasets is the biggest challenge of using LSFM, and in this protocol we outline some solutions to this problem. Until recently, LSFM was mostly performed in laboratories that had the expertise to build and operate their own light sheet microscopes. However, in the last three years several commercial implementations of LSFM have become available, which are multipurpose and easy to use for any developmental biologist. This article is primarily directed at researchers who are not LSFM technology developers but want to employ LSFM as a tool to answer specific developmental biology questions.

Here, we use imaging of zebrafish eye development as an example to introduce the reader to LSFM technology and we demonstrate applications of LSFM across multiple spatial and temporal scales. This article describes a complete experimental protocol starting with the mounting of zebrafish embryos for LSFM. We then outline the options for imaging using the commercially available light sheet microscope. Importantly, we also explain a pipeline for subsequent registration and fusion of multiview datasets using an open source solution implemented as a Fiji plugin. While this protocol focuses on imaging the developing zebrafish eye and processing data from a particular imaging setup, most of the insights and troubleshooting suggestions presented here are of general use and the protocol can be adapted to a variety of light sheet microscopy experiments.

Introduction

Morphogenesis is the process that shapes the embryo and together with growth and differentiation drives the ontogeny from a fertilized egg into a mature multicellular organism. The morphogenetic processes during animal development are best analyzed by imaging intact living specimens1-3. This is because such whole embryo imaging preserves all the components that drive and regulate development, including gradients of signaling molecules, extracellular matrix, vasculature, innervation as well as the mechanical properties of the surrounding tissue. To bridge the scales at which morphogenesis occurs, fast subcellular events have to be captured on a time scale of minutes, in the context of the development of the whole tissue over hours or days. To fulfill all these requirements, a modern implementation4 of the orthogonal plane illumination microscope5 was developed. Originally, it was named Selective Plane Illumination Microscopy (SPIM)4; now the all-embracing term Light Sheet Fluorescence Microscopy (LSFM) is typically used. LSFM enables imaging at high time resolution while inducing less phototoxicity than laser scanning or spinning disc confocal microscopes6,7. Nowadays, there are already many implementations of the basic light sheet illumination principle, and it has been used to image a large variety of specimens and processes previously inaccessible to researchers8-11.

We would first like to highlight several key advantages of LSFM over conventional confocal microscopy approaches:

To acquire meaningful results from live imaging experiments, it is important that the observation only minimally affects the specimen. However, many organisms, including zebrafish, are very susceptible to laser light exposure, making it challenging to image them in a confocal microscope with high time resolution without phototoxicity effects like stalled or delayed development6,7. LSFM is currently the fluorescence imaging technique with the least disruptive effects on the sample7. Since the thin light sheet illuminates only the part of the specimen that is being imaged at a particular time point, the light sheet microscope uses photons very efficiently. Consequently, the low light exposure allows for longer time-lapse observations of healthier specimens, e.g.12-17. Furthermore, thanks to the minimal invasiveness of LSFM, the number of acquired images is no longer dictated by how much light the sample can tolerate, but rather by how much data can be processed and stored.

Along the same lines of keeping the specimen in near physiological conditions, LSFM comes with alternative sample mounting strategies well suited for live embryos. In LSFM techniques, the embryos are typically embedded within a thin column of low percentage agarose. Mounting into agarose cylinders allows complete freedom of rotation, so the sample can be observed from the optimal angle (in LSFM referred to as a view) and from several views within the same experiment. Multiview imaging and subsequent multiview fusion are particularly beneficial for big, scattering specimens and allow capturing them with high, isotropic resolution. A summary of other possible LSFM mounting strategies can be found in the official microscope operating manual, in the chapter on sample preparation written by the lab of E. Reynaud. It is recommended reading, especially if the goal is to image samples different from those described here.

Image acquisition in LSFM is wide-field and camera-based, as opposed to the point scanning of a laser scanning confocal microscope. This results in a higher signal-to-noise ratio (SNR) for acquired images and can be extremely fast (tens to hundreds of frames per second). The high sensitivity of LSFM further enables imaging of weakly fluorescent samples, like transcription factors expressed at endogenous levels18 or, in the near future, endogenous proteins tagged using CRISPR/Cas9. The high SNR is also important for successful downstream image analysis. The high speed is required not only to capture rapid intracellular processes, but also to image the whole embryo from multiple views fast enough. A seamless fusion of the multiple views can only be achieved if the observed phenomenon does not change during the acquisition of the several z stacks coming from separate views.

The advantages of LSFM typically do not come at the expense of image quality. The lateral resolution of LSFM is slightly worse than that of a confocal microscope, because the detection objectives used in LSFM have a lower numerical aperture (usually 1.0 or less) compared to the 1.2-1.3 of water or silicone oil immersion objectives on standard confocal setups. Additionally, due to the wide-field detection in LSFM (absence of a pinhole), there is more out-of-focus light than in a confocal microscope; its amount is determined by the light sheet thickness. Nevertheless, these disadvantages are compensated by the higher SNR in LSFM. In practice, this results in image quality comparable to, for example, spinning disc confocal acquisition15, which enables reliable extraction of features like cell membranes or nuclei, e.g., for cell lineage tracing15,19.
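As a worked example of why the numerical aperture matters, the Rayleigh criterion gives the lateral resolution limit as roughly 0.61 λ/NA. Assuming an emission wavelength of λ = 510 nm (typical of GFP; this value is an assumption, not taken from the protocol), the two NA values mentioned above give:

```latex
d_{xy} \approx \frac{0.61\,\lambda}{\mathrm{NA}}, \qquad
d_{xy}(\mathrm{NA}=1.0) \approx \frac{0.61 \times 510\ \mathrm{nm}}{1.0} \approx 311\ \mathrm{nm}, \qquad
d_{xy}(\mathrm{NA}=1.2) \approx \frac{0.61 \times 510\ \mathrm{nm}}{1.2} \approx 259\ \mathrm{nm}
```

The difference of roughly 50 nm is what "slightly worse" lateral resolution means in practice for these objectives.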

The axial resolution of LSFM is determined by the light sheet thickness in addition to the detection objective, and in some cases it can surpass the axial resolution of confocal microscopes. First, resolution improves when the light sheet is thinner than the axial resolution of the detection objective, which typically occurs for large specimens imaged with a low magnification objective. Second, LSFM can achieve better axial resolution through multiview fusion, in which the high xy resolution information from different views is combined into one image stack. The resulting merged stack has an isotropic resolution approaching the lateral resolution20,21. The strategy for registering the multiple views onto each other described in this article uses fluorescent polystyrene beads, embedded in the agarose around the sample, as fiducial markers20,21.
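How views are combined can be illustrated with a weighted-average fusion (compare Figure 2G, H). The sketch below is a simplification under stated assumptions, not the Fiji plugin's code: the views are assumed to be already registered and resampled onto a common voxel grid, and each view's weight is assumed to decay exponentially with imaging depth. The names `depth_weights` and `weighted_average_fusion` are hypothetical.

```python
import numpy as np

def depth_weights(shape, axis, decay_voxels):
    """Hypothetical weight model: exp(-depth / decay) along the view's detection axis."""
    depth = np.arange(shape[axis], dtype=float)
    profile = np.exp(-depth / decay_voxels)
    new_shape = [1] * len(shape)
    new_shape[axis] = shape[axis]
    return np.broadcast_to(profile.reshape(new_shape), shape)

def weighted_average_fusion(views, weights, eps=1e-12):
    """Voxel-wise fusion of co-registered views: sum(w_i * v_i) / sum(w_i)."""
    num = np.zeros(views[0].shape, dtype=float)
    den = np.zeros(views[0].shape, dtype=float)
    for view, w in zip(views, weights):
        num += w * view
        den += w
    return num / np.maximum(den, eps)

# Toy example: two views of the same volume imaged from opposite sides along axis 0.
shape = (4, 4, 4)
view_a, view_b = np.ones(shape), 3 * np.ones(shape)
w_a = depth_weights(shape, axis=0, decay_voxels=1.0)
w_b = np.flip(w_a, axis=0)  # view B is shallow where view A is deep
fused = weighted_average_fusion([view_a, view_b], [w_a, w_b])
```

In this toy case the fused first plane is dominated by view A (about 1.09) and the last plane by view B (about 2.91), so each region of the fused stack is drawn mainly from the view that imaged it with the least degradation.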

As a result of LSFM commercialization, this technique is now available to a broad community of scientists22. The motivation for writing this protocol is therefore to make this technology accessible to developmental biologists lacking practical experience in LSFM and to get them started using it with their own samples. Our protocol uses a commercial light sheet microscope that is conceptually simple and easy to operate. We would additionally like to mention other recent protocols for imaging zebrafish with home-built LSFM setups, which might be suitable to answer particular questions23-25. Another entry point to LSFM is provided by open platforms26,27, which follow open access principles to bring light sheet microscopy to a broader community. Documentation of both the hardware and the software aspects can be found at http://openspim.org and https://sites.google.com/site/openspinmicroscopy/.

In this protocol, we use the teleost zebrafish as a model system to study developmental processes with LSFM. Morphogenesis of the zebrafish eye is an example that underlines many of the benefits of LSFM. LSFM has already been used in the past to investigate eye development in medaka28 and in zebrafish29,30. At the early stage of eye development it is difficult to orient the embryo correctly for conventional microscopy, as the bulky yolk does not allow the embryo to lie on its side with the eye facing the objective. However, with LSFM mounting into an agarose column, the sample can be reproducibly positioned. Additionally, during the transition from the optic vesicle to the optic cup stage, the eye undergoes major morphogenetic rearrangements accompanied by growth, which requires capturing a large z stack and a big field of view. LSFM is also better suited to these challenges than conventional confocal imaging. The process of optic cup formation is three-dimensional and is therefore hard to comprehend and visualize by imaging from only one view, which makes multiview imaging with isotropic resolution beneficial. After optic cup formation, the retina becomes increasingly sensitive to laser exposure. Thus, the low phototoxicity associated with LSFM is a major advantage for long-term imaging.

Here we present an optimized protocol for imaging one- to three-day-old zebrafish embryos and larvae, with a focus on eye development. Our method allows recording time-lapse movies covering up to 12-14 hr with high spatial and temporal resolution. Importantly, we also present a data processing pipeline, which is an essential step in LSFM, as this technique invariably generates big datasets, often in the terabyte range.

Protocol

NOTE: All animal work was performed in accordance with European Union (EU) directive 2010/63/EU and the German Animal Welfare Act. The protocol is meant to be followed without interruption, from mounting to imaging the sample. Depending on practical experience, it will take 2-3 hr to start a time-lapse experiment; data processing is not included in this estimate. All the material required for the experiment is listed in the checklist of materials provided as a supplementary document. Wear powder-free gloves during steps 1, 2, 3 and 4. For steps 2, 3, 4 and 5 of the protocol, also refer to the official microscope operating manual.

1. Preparatory Work Before the Imaging Experiment

- Fluorescent Bead Stock Solution

- For this protocol, use 500 or 1,000 nm diameter polystyrene beads labeled with a red-emitting fluorescent dye. The working dilution of the beads is 1:4,000. First, vortex the bead stock solution for 1 min. Dilute 10 µl of beads in 990 µl of ddH2O. Store this 1:100 dilution in the dark at 4 °C and use it as the stock solution for a further 1:40 dilution.
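The two-step dilution can be checked with exact fractions. This minimal Python sketch assumes the 25 µl of bead stock and the 1,000 µl final agarose mix volume used in step 3.1; the variable names are illustrative only.

```python
from fractions import Fraction

# Step 1: 10 µl bead suspension + 990 µl ddH2O -> 1:100 stock
stock = Fraction(10, 10 + 990)
# Step 2: 25 µl of the 1:100 stock in the final 1,000 µl agarose mix -> 1:40
working = Fraction(25, 1000)
overall = stock * working
print(f"stock 1:{1 / stock}, further 1:{1 / working}, overall 1:{1 / overall}")
```

This prints `stock 1:100, further 1:40, overall 1:4000`, matching the stated working dilution.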

- Preparing the Solution for the Sample Chamber

- In a 100 ml beaker mix 38.2 ml of E3 medium without methylene blue, 0.8 ml of 10 mM N-phenylthiourea and 1 ml of 0.4% MS-222. It is beneficial to use filtered E3 medium to avoid small particles of dust or undissolved crystals floating in the sample chamber later.
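The final concentrations in the chamber solution follow from C1·V1 = C2·V2. This short Python sketch only redoes that arithmetic for the volumes listed above; it assumes the stocks are exactly 10 mM N-phenylthiourea and 0.4% (w/v) MS-222.

```python
# Volumes (ml) mixed for the sample chamber solution in step 1.2.
volumes_ml = {"E3": 38.2, "PTU_10mM": 0.8, "MS222_0.4pct": 1.0}
total_ml = sum(volumes_ml.values())

# Dilution: C_final = C_stock * V_stock / V_total
ptu_mM = 10.0 * volumes_ml["PTU_10mM"] / total_ml
ms222_pct = 0.4 * volumes_ml["MS222_0.4pct"] / total_ml
ms222_mg_per_l = ms222_pct * 10_000  # 1 % (w/v) = 10 g/l = 10,000 mg/l

print(f"total {total_ml:.1f} ml, PTU {ptu_mM:.2f} mM, MS-222 {ms222_pct:.3f} % ({ms222_mg_per_l:.0f} mg/l)")
```

The 40 ml of solution therefore contains 0.2 mM N-phenylthiourea and 0.01% (100 mg/l) MS-222.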

- Fluorescent Fish Embryo Selection

- In the days prior to the experiment, prepare embryos expressing fluorescent proteins. Right before the experiment, sort the embryos under the fluorescent stereoscope for desirable strength of the fluorescent signal. Take 5-10 healthy embryos and dechorionate them using tweezers.

NOTE: This protocol is optimized for 16-72 hr old embryos.


2. Setting Up the Sample Chamber

- Assembling the Three Chamber Windows

- There are 4 windows in the sample chamber, one for the objective and three to be sealed with the coverslips (18 mm diameter, selected thickness 0.17 mm). Store these coverslips in 70% ethanol. Wipe them clean before use with ether:ethanol (1:4).

- Insert the coverslip into the window using fine forceps and make sure it fits into the smallest groove. Cover it with the 17 mm diameter rubber O-ring and screw in the illumination adapter ring using the chamber window tool. Repeat the process for the other two windows.

- Attaching the Remaining Chamber Parts

- Screw the adapter for an appropriate objective into the fourth remaining side of the chamber. Insert the 15 mm diameter O-ring into the center of the adapter.

- Screw in the white Luer-Lock adapter into the lower right opening in the chamber and the grey drain connector in the upper left opening. Block all the three remaining openings with the black blind plugs.

- Attach the Peltier block and the metal dovetail slide to the bottom of the chamber using an Allen wrench. Attach the hose with the 50 ml syringe to the Luer-Lock adapter. Insert the temperature probe into the chamber.

- Inserting the Objectives and the Chamber into the Microscope

- Check under the stereoscope that all the objectives are clean. Use the 10X/0.2 illumination objectives and the Plan-Apochromat 20X/1.0 W detection objective and make sure that its refractive index correction collar is set to 1.33 for water. Screw the detection objective into the microscope, while keeping the illuminating objectives covered.

- Remove the protective plastic caps covering the illuminating objectives. Carefully slide the chamber into the microscope and tighten it with the securing screw.

- Connect the temperature probe and the Peltier block with the microscope.

NOTE: The two tubes that circulate the cooling liquid for the Peltier block are compatible with both connectors. They form a functioning circuit irrespective of the orientation of connection.

- Fill the chamber through the syringe with the solution prepared in step 1.2 up to the upper edge of the chamber windows. Check that the chamber is not leaking.

- Start the microscope, incubation and the controlling and storage computers. Start the microscope-operating software and set the incubation temperature to 28.5 °C.

NOTE: It will take 1 hr to completely equilibrate. Prepare the sample in the meantime.

3. Sample Preparation

- Preparing the Agarose Mix

- 15 min before preparing the agarose mix, melt one 1 ml aliquot of 1% low melting point agarose (dissolved in E3 medium) in a heating block set to 70 °C. Once the agarose is completely molten, transfer 600 µl into a fresh 1.5 ml tube and add 250 µl of E3 medium, 50 µl of 0.4% MS-222 and 25 µl of vortexed bead stock solution.

NOTE: This makes 925 µl of mix; the additional 75 µl is budgeted for the liquid added later together with the embryos.

- Keep the tube in a second heating block at 38-40 °C, or ensure that the agarose is very close to its gelling point before putting the embryos into it.

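The agarose mix volumes can be checked the same way. This sketch assumes that the 75 µl carried over with the embryos is plain E3 medium, i.e., it adds volume but no agarose, MS-222 or beads. Under that assumption the embedding medium ends up at 0.6% agarose and 0.02% MS-222, with the 1:100 bead stock diluted a further 40-fold (the 1:4,000 working dilution).

```python
# Components of the agarose mix in step 3.1 (µl).
mix_ul = {"agarose_1pct": 600, "E3": 250, "MS222_0.4pct": 50, "bead_stock": 25}
prepared_ul = sum(mix_ul.values())  # volume pipetted into the tube
carryover_ul = 75                   # liquid added with the embryos (assumed plain E3)
final_ul = prepared_ul + carryover_ul

agarose_pct = 1.0 * mix_ul["agarose_1pct"] / final_ul
ms222_pct = 0.4 * mix_ul["MS222_0.4pct"] / final_ul
bead_fold = final_ul / mix_ul["bead_stock"]  # fold dilution of the 1:100 bead stock

print(prepared_ul, final_ul, agarose_pct, ms222_pct, bead_fold)
```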

- Mounting the Embryos

- Take five glass capillaries of 20 µl volume (with a black mark, ~1 mm inner diameter) and insert the matching Teflon tip plungers into them. Push the plunger through the capillary so that the Teflon tip is at the bottom of the capillary.

- Transfer five embryos (a number that can be mounted at once) with a glass or plastic pipette into the tube of vortexed 37 °C warm agarose mix.

NOTE: Try to carry over a minimum volume of liquid together with the embryos.

- Insert the capillary into the mix and suck one embryo inside by pulling the plunger up. Ensure that the head of the embryo enters the capillary before the tail. Avoid any air bubbles between the plunger and the sample. There should be ~2 cm of agarose above the embryo and ~1 cm below it. Repeat for the remaining embryos.

- Wait until the agarose solidifies completely, which happens within a few minutes, and then store the samples in E3 medium by sticking the capillaries to the wall of a beaker with plasticine or tape. The bottom opening of each capillary should hang free in the solution, allowing gas exchange to the sample.

NOTE: A similar protocol to the sections 3.1 and 3.2 can be also found on the OpenSPIM wiki page http://openspim.org/Zebrafish_embryo_sample_preparation.

4. Sample Positioning

- Sample Holder Assembly

- Insert 2 plastic sleeves of the right size (black) against each other into the sample holder stem. Their slit sides have to face outwards. Attach the clamp screw loosely by giving it 2-3 turns. Insert the capillary through the clamp screw and push it through the holder until the black color band becomes visible on the other side. Avoid touching the plunger.

- Tighten the clamp screw. Push the excess 1 cm of agarose below the embryo out of the capillary and cut it. Insert the stem into the sample holder disc.

- Confirm in the software that the microscope stage is in the load position. Use guiding rails to glide the whole holder with the sample vertically downwards into the microscope. Turn it, so that the magnetic holder disc locks into position.

- Locating the Capillary

- From now on, control the sample positioning by the software. In the Locate tab choose the Locate capillary option and position the capillary in x, y and z into focus just above the detection objective lens. Use the graphical representation in the specimen navigator for guidance.

- Push the embryo gently out of the capillary until it is in front of the pupil of the detection objective.

NOTE: 'Locate capillary' is the only step in the remaining protocol during which the upper lid of the microscope should be opened and the sample pushed out.

- Locating the Sample

- Switch to 'Locate sample' option and at 0.5 zoom bring the zebrafish eye into the center of the field of view. Rotate the embryo, so that the light sheet will not pass through any highly refractive or absorbing parts of the specimen before it reaches the eye. Likewise, the emitted fluorescence needs a clear path out of the specimen. Click on 'Set Home Position'.

- Open the front door of the microscope and put the plastic cover with a 3 mm opening on top of the chamber to avoid evaporation.

NOTE: If the liquid level drops below the imaging level, the experiment will be compromised.

- Check the heartbeat of the embryo as a proxy for overall health. If it is too slow, use another sample (compare to non-mounted controls; specific values depend on the developmental stage). Switch to the final zoom setting and readjust the position of the embryo.

5. Setting up a Multidimensional Acquisition

- Acquisition Parameters

- Switch to the 'Acquisition' tab. Define the light path including laser lines, detection objective, laser blocking filter, beam splitter and the cameras.

- Activate the pivot scan checkbox. Define the other acquisition settings like the bit depth, image format, light sheet thickness and choose single sided illumination.

- Press 'Continuous' and depending on the intensity of the obtained image change the laser power and camera exposure time.

NOTE: For adjusting all the imaging settings, use less laser power (0.5% of a 100 mW laser, 30 msec exposure time) than for the actual experiment, to avoid unnecessary photodamage to the specimen.

- Light Sheet Adjustment

- Switch to 'Dual Sided Illumination' and activate the 'Online Dual Side Fusion' checkbox. Start the 'Lightsheet Auto-adjust wizard' and follow the instructions step by step.

NOTE: The wizard moves the light sheet into the focal plane of the detection objective and ensures that it is not tilted and that its waist is in the center of the field of view. After the automatic adjustment is finished, the positions of the left and right light sheets are automatically updated in the software, and an improvement in image quality should be apparent.

- Activate the 'Z-stack' checkbox. Check the light sheet adjustment by inspecting the symmetry of the point spread function (PSF) of the fluorescent beads in the xz and yz ortho views. If it is not symmetric, manually adjust the light sheet position parameter up and down until a symmetric, hourglass-shaped PSF is achieved (Figure 1A).

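If a bead crop is exported, the visual hourglass check can be complemented with a single number. The sketch below is a hypothetical metric, not a feature of the microscope software: it compares the xz maximum projection of one bead above and below its focal plane and returns 1.0 for perfect mirror symmetry. It assumes a (z, y, x) array containing a single bead whose focus does not lie at the edge of the crop.

```python
import numpy as np

def hourglass_symmetry(bead):
    """Mirror-symmetry score of a bead's xz projection about its focal plane.

    bead: 3-D array ordered (z, y, x) containing a single bead.
    Returns 1.0 for a perfectly symmetric PSF and smaller values otherwise.
    """
    xz = bead.max(axis=1)                   # maximum projection along y -> (z, x)
    z0 = int(np.argmax(xz.max(axis=1)))     # plane containing the brightest voxel
    half = min(z0, xz.shape[0] - 1 - z0)    # largest window that fits on both sides
    if half == 0:
        raise ValueError("bead focus lies at the edge of the crop")
    above = xz[z0 - half:z0]                # planes before the focus
    below = xz[z0 + 1:z0 + half + 1][::-1]  # planes after the focus, mirrored
    return 1.0 - np.abs(above - below).sum() / (above + below).sum()
```

One could compute this score for crops taken at several light sheet positions and compare them; a more symmetric hourglass should give a value closer to 1.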

- Multidimensional Acquisition Settings

- Define the z-stack with the 'First Slice' and 'Last Slice' options and set the z step to 1 µm.

NOTE: The light sheet in this microscope is static, and z sectioning is achieved by moving the sample through it. Always use the 'Continuous Drive' option for faster acquisition of z-stacks.

- Activate the 'Time Series' checkbox. Define the total number of time points and the interval between them.

- Activate the 'Multiview' checkbox. Add the current view into the multiview list, where the x, y, z and angle information is stored. Use the stage controller to rotate the capillary and define the other desired views. Set up a z-stack at each view and add them to the multiview list.

NOTE: The software sorts the views in a serial fashion, so that the capillary is turned unidirectionally, while the image is acquired.

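Before starting, it can help to estimate how much raw data the acquisition will produce. This sketch simply multiplies out the acquisition settings; all example values are assumptions for illustration (a 1920 × 1920 pixel camera, 16-bit images, a 300 µm z range at the 1 µm step, 4 views, 2 channels, one fused illumination side and a 12 hr time-lapse at 5 min intervals), so substitute your own.

```python
def n_planes(first_um, last_um, step_um):
    """Number of z planes between 'First Slice' and 'Last Slice' (inclusive)."""
    return int(round(abs(last_um - first_um) / step_um)) + 1

def n_timepoints(duration_min, interval_min):
    """Time points in a time-lapse of the given duration, including t = 0."""
    return int(duration_min // interval_min) + 1

def dataset_bytes(width_px, height_px, planes, views, channels,
                  illuminations, timepoints, bytes_per_px=2):
    """Raw (uncompressed) size of a multiview time-lapse in bytes."""
    stack = width_px * height_px * planes * bytes_per_px
    return stack * views * channels * illuminations * timepoints

# Example values (assumptions, not recommendations):
planes = n_planes(0, 300, 1.0)
tps = n_timepoints(12 * 60, 5)
size = dataset_bytes(1920, 1920, planes, views=4, channels=2,
                     illuminations=1, timepoints=tps)
print(f"{planes} planes, {tps} time points, {size / 1e12:.2f} TB")
```

With these assumptions the raw data amount to roughly 2.6 TB, which illustrates why data storage and processing, rather than the sample's light tolerance, usually limit such experiments.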

- Drift Correction and Starting the Experiment

- Once the acquisition set up is complete, wait 15-30 min before starting the actual experiment.

NOTE: The sample initially drifts a few micrometers in x, y and z, but should stop within 30 min. If the sample keeps drifting for longer, use another sample or remount it.

- Switch to the 'Maintain' tab and, in the 'Streaming' option, define how the data should be saved, e.g., one file per time point or a separate file for each view and channel. Go back to the 'Acquisition' tab, press 'Start Experiment' and define the file name, the location where it should be saved and the file format (use .czi).

- Observe the acquisition of the first time point to confirm that everything is running flawlessly. Immediately proceed with registration and fusion of the first time point as described in steps 6 and 7, to confirm that it will be possible to process the whole dataset.


6. Multiview Registration

- Multiview Reconstruction Application

- At the end of the imaging session, transfer the data from the data storage computer at the microscope to a data processing computer. Use the 'MultiView Reconstruction Application'20,21,31 implemented in Fiji32 for data processing (Figure 1B).

- Define Dataset

- Update Fiji: Fiji > Help > Update ImageJ and Fiji > Help > Update Fiji. Use the main ImageJ and Fiji update sites.

- Transfer the entire dataset into one folder. The results and intermediate files of the processing will be saved into this folder. Start the MultiView Reconstruction Application: Fiji > Plugins > Multiview Reconstruction > Multiview Reconstruction Application.

- Select 'define a new dataset'. As the type of dataset, select the menu option "Zeiss Lightsheet Z.1 Dataset (LOCI Bioformats)" and create a name for the .xml file. Then select the first .czi file of the dataset (i.e., the file without an index). It contains the image data as well as the metadata of the recording.

NOTE: Once the program opens the first .czi file, the metadata are loaded into the program.

- Confirm the number of angles, channels and illuminations, and check the voxel size in the metadata. Upon pressing OK, three separate windows open (Figure 1C): a log window showing the progress of the processing and its results, the 'ViewSetup Explorer', and a console showing Fiji error messages.

NOTE: The 'ViewSetup Explorer' is a user-friendly interface that shows each view, channel and illumination and allows selection of the files of interest. Additionally, the 'ViewSetup Explorer' is used to steer all the processing steps.

- Select the files that need to be processed and right-click in the explorer. A window opens listing the different processing steps (Figure 1C).

- Upon defining the dataset, observe that an .xml file in the folder with the data is created.

NOTE: This file contains the metadata that were confirmed before.

- In the top right corner, observe two buttons, 'info' and 'save'. Pressing 'info' displays a summary of the content of the .xml file. Pressing 'save' saves the processing results.

NOTE: While processing in the 'MultiView Reconstruction Application' the different processing steps need to be saved into the .xml before closing Fiji.

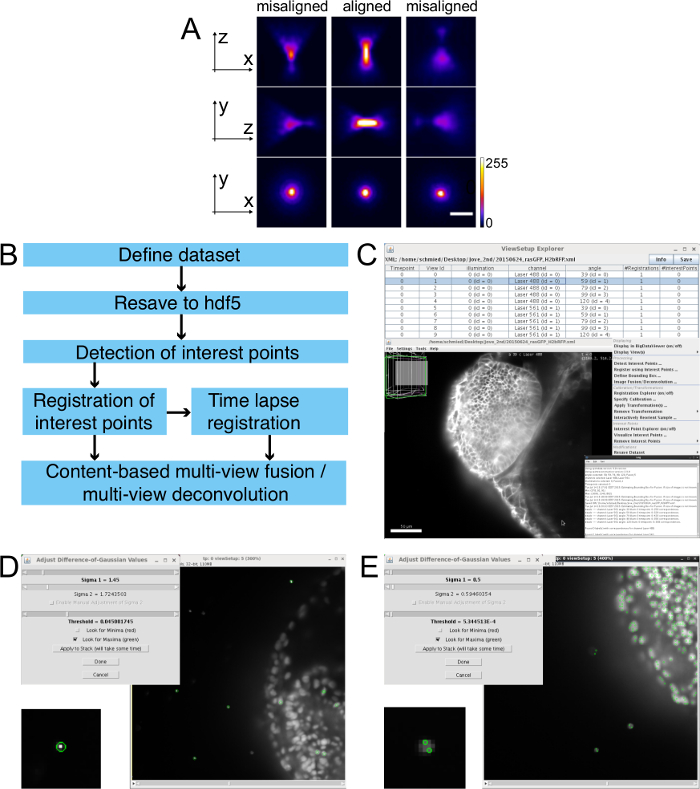

Figure 1: Multiview Reconstruction workflow and interest point detection. (A) Light sheet alignment based on fluorescent bead imaging. The system is aligned (center) when the bead image has a symmetrical hourglass shape in the xz and yz projections. Examples of a light sheet misaligned in either direction are shown on the left and right. Maximum intensity projections of a 500 nm bead in xz, yz and xy are shown. Note that the ratio of the intensity of the central peak of the Airy disc (in xy) to the side lobes is greater when the light sheet is correctly aligned than in the misaligned situation. Images were taken with a 20X/1.0 W objective at 0.7 zoom. Scale bar represents 5 µm. (B) The dataset is defined and then resaved into the HDF5 format. The beads are segmented and then registered. For a time series, each time point is registered onto a reference time point. The data are finally fused into a single isotropic volume. (C, top) The ViewSetup Explorer shows the different time points, angles, channels and illumination sides of the dataset. (C, lower left corner) The BigDataViewer window shows the view that is selected in the ViewSetup Explorer. (C, middle right) Right-clicking in the ViewSetup Explorer opens the processing options. (C, lower right corner) The progress and the results of the processing are displayed in the log window. (D and E) Screenshots from the interactive bead segmentation. The aim of the detection is to segment as many interest points (beads) as possible while producing as few detections within the sample as possible. (D and E, upper left corner) The segmentation is defined by two parameters, the Difference-of-Gaussian value for Sigma 1 and the Threshold. (D) An example of a successful detection with a magnified view of a correctly detected bead. (E) Segmentation with too many false positives and multiple detections of a single bead. Scale bar in (C) represents 50 µm.

- Resave Dataset in HDF5 Format

- To resave the entire dataset, select all the files with Ctrl/Apple+A and right-click. Then select 'resave dataset' and 'as HDF5'.

- A window will appear displaying a warning that all views of the current dataset will be resaved. Press yes.

NOTE: The program resaves the .czi files to HDF5 by opening every file and writing the different resolution levels of the HDF5 format. It confirms with 'done' upon completion. Resaving usually takes a few minutes per time point (see Table 1).

NOTE: Since the files in the hdf5 format can be loaded very fast, it is now possible to view the unregistered dataset by 'right click' into the explorer and toggling 'display in BigDataViewer (on/off)'. A BigDataViewer window will appear with the selected view (Figure 1C). The core functions of the BigDataViewer33 are explained in Table 2 and http://fiji.sc/BigDataViewer.
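Browsing is fast because the HDF5 file stores several resolution levels of every view. As a simplified illustration only (not BigDataViewer's actual implementation, whose subsampling factors may differ per axis and per level), each level can be computed by averaging non-overlapping 2 × 2 × 2 voxel blocks of the previous one. The function names are hypothetical.

```python
import numpy as np

def downsample2(vol):
    """Average non-overlapping 2x2x2 blocks; edge voxels that don't fill a block are cropped."""
    z, y, x = (s // 2 * 2 for s in vol.shape)
    v = vol[:z, :y, :x].astype(np.float32)
    return v.reshape(z // 2, 2, y // 2, 2, x // 2, 2).mean(axis=(1, 3, 5))

def build_pyramid(vol, levels):
    """List of resolution levels, from full resolution to the coarsest level."""
    pyramid = [vol]
    for _ in range(levels - 1):
        pyramid.append(downsample2(pyramid[-1]))
    return pyramid
```

A viewer can then display a coarse level while zoomed out and load full-resolution blocks only for the region currently on screen.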

- Detect Interest Points

- Select all time points with Ctrl/Apple+A, right-click and select 'detect interest points'.

- Select Difference-of-Gaussian34 for type of interest point detection. Since the bead-based registration is used here, type "beads" into the field for label interest points. Activate 'Downsample images prior to segmentation'.

- In the next window, observe the detection settings. For 'subpixel localization' use '3-dimensional quadratic fit'34, and to determine the Difference-of-Gaussian values and radius for bead segmentation, use 'interactive' in the 'interest point specification'.

- For 'Downsample XY' use 'Match Z Resolution (less downsampling)' and for 'Downsample Z' use 1×. The z step size is bigger than the xy pixel size, so simply downsampling in xy to match the z resolution is sufficient. Select 'compute on CPU (JAVA)'. Press 'OK'.

- In a pop up window, select one view for testing the parameters from the drop down menu. Once the view loads, adjust brightness and contrast of the window with Fiji > Image > Adjust > Brightness/Contrast or Ctrl + Shift + C. Tick the box 'look for maxima (green)' for bead detection.

- Observe the segmentation in the 'viewSetup' window as green rings around the detections when looking for maxima (red when looking for minima). The segmentation is defined by two parameters, the Difference-of-Gaussian value for Sigma 1 and the Threshold (Figure 1D). Adjust them to detect as many beads around the sample, and as few false positives within the sample, as possible (Figure 1D). Each bead should be detected only once, not multiple times (Figure 1E). After determining the optimal parameters, press 'done'.

NOTE: The detection starts by loading each individual view of the time point and segmenting the beads. In the log window, the program outputs the number of beads detected per view. The detection should be done in a few seconds (see Table 1).

- Press 'save' when the number of detections is appropriate (600 to several thousand per view).

NOTE: A folder will be created in the data directory, which will contain the information about the coordinates of the detected beads.
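The Difference-of-Gaussian detection can be sketched in a few lines with NumPy and SciPy. This is a simplified illustration, not the plugin's code: it assumes intensities are normalized to [0, 1] before thresholding, that the second Gaussian's sigma is a fixed multiple k = 2^(1/4) of Sigma 1, and it omits the quadratic subpixel fit. `detect_beads_dog` and its parameters are hypothetical names.

```python
import numpy as np
from scipy import ndimage

def detect_beads_dog(volume, sigma1, threshold, k=2 ** 0.25, min_distance=3):
    """Return (z, y, x) voxel coordinates of bright, blob-like interest points."""
    v = volume.astype(np.float64)
    span = v.max() - v.min()
    v = (v - v.min()) / span if span > 0 else np.zeros_like(v)  # assumed [0, 1] normalization
    sigma2 = k * sigma1  # assumed fixed ratio between the two Gaussians
    # DoG: narrow blur minus wide blur is positive at bright blobs ("look for maxima")
    dog = ndimage.gaussian_filter(v, sigma1) - ndimage.gaussian_filter(v, sigma2)
    # A voxel is a detection if it is the maximum of its neighbourhood and above threshold
    is_peak = dog == ndimage.maximum_filter(dog, size=2 * min_distance + 1)
    return np.argwhere(is_peak & (dog > threshold))
```

In this sketch, raising `threshold` removes weak, sample-derived detections, while a `sigma1` matched to the apparent bead size helps ensure each bead yields a single DoG maximum, which mirrors the trade-off tuned interactively in Figure 1D and 1E.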

- Register Using Interest Points

- Select all time points with Ctrl/Apple+a, right click and select 'Register using Interest Points'.

- As the 'registration algorithm', use 'fast 3d geometric hashing (rotation invariant)' for the bead-based registration.

NOTE: This algorithm assumes no prior knowledge about the orientation and positioning of the different views with respect to each other.

- For registration of the views onto each other, select 'register timepoints individually' as the 'type of registration'. For 'interest points' in the selected channel, the previously specified label for the interest points should now be visible (i.e., "beads").

- In the next window, use the preset values for registration. Fix the first view by selecting 'Fix first tile' for 'Fix tiles' and 'Do not map back (use this if tiles are fixed)' in the 'Map back tiles' section.

- Use an 'affine transformation model' with 'regularization'.

NOTE: The allowed error for RANSAC is 5 px and the 'significance for a descriptor match' is 10. For 'regularization', use a 'rigid model' with a lambda of 0.10, which means that the transformation is 10% rigid and 90% affine35.

- Press OK to start the registration.
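The meaning of "10% rigid and 90% affine" can be sketched as an element-wise blend of the two fitted transformation matrices. We assume here that the regularized model behaves like the interpolated models of the mpicbg library, which blend matrix coefficients in this way; the fitted matrices below are hypothetical numbers for illustration.

```python
import numpy as np

def regularize(affine, rigid, lam=0.10):
    """Blend two 3x4 transformation matrices element-wise.
    lam = 0 gives the pure affine fit, lam = 1 the pure rigid fit."""
    affine = np.asarray(affine, dtype=float)
    rigid = np.asarray(rigid, dtype=float)
    return (1.0 - lam) * affine + lam * rigid

# Hypothetical fits of the same bead correspondences
affine_fit = np.array([[1.02, 0.01, 0.0, 5.0],
                       [0.00, 0.99, 0.0, -2.0],
                       [0.00, 0.00, 1.0, 0.5]])
rigid_fit = np.array([[1.0, 0.0, 0.0, 4.8],
                      [0.0, 1.0, 0.0, -2.1],
                      [0.0, 0.0, 1.0, 0.5]])
M = regularize(affine_fit, rigid_fit, lam=0.10)
print(M[0, 0], M[1, 1])   # scaling pulled slightly towards 1
```

The rigid component pulls scaling and shear back towards 1 and 0, which keeps the affine fit from drifting when correspondences are sparse.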

NOTE: As displayed in the log window, each view is first matched with all the other views. Then random sample consensus (RANSAC)36 tests the candidate correspondences and excludes false positives. For a robust registration, the RANSAC inlier ratio should be higher than 90%. When a sufficient number of true correspondences between two views are found, a transformation model is computed for each matched pair and its average displacement in pixels is reported. Then the iterative global optimization is carried out and all views are registered onto the fixed view. With successful registration, a transformation model is calculated for each view and displayed with its scaling and displacement in pixels. The average error should ideally be below 1 px and the scaling of the transformation close to 1. The registration takes only seconds (see Table 1).

- Confirm that there is no shift between the different views by inspecting fine structures within the sample, e.g., cell membranes. Then save the transformation for each view into the .xml file.

NOTE: The registered views now overlap each other in the BigDataViewer (Figure 2A) and the bead images should overlay as well (Figure 2B).

- To discard a registration (for example, to repeat it with different parameters), select the time points, right click on them and select Remove Transformations > Latest/Newest Transformation.
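The RANSAC step reported in the log can be illustrated with a minimal sketch. For brevity it uses a 3D translation model (one correspondence per random sample) instead of the affine/rigid models used by the plugin, and a 5 px maximal error as in the settings above; the synthetic correspondences are hypothetical. The returned inlier fraction corresponds to the RANSAC percentage discussed above.

```python
import numpy as np

def ransac_translation(src, dst, max_error=5.0, iterations=200, seed=0):
    """RANSAC with a 3D translation model (minimal sample: one correspondence).
    src, dst: (N, 3) arrays of candidate corresponding bead positions.
    Returns (translation, inlier_mask)."""
    rng = np.random.default_rng(seed)
    best_inliers = np.zeros(len(src), dtype=bool)
    for _ in range(iterations):
        i = rng.integers(len(src))
        t = dst[i] - src[i]                              # model from a minimal sample
        residuals = np.linalg.norm(src + t - dst, axis=1)
        inliers = residuals < max_error
        if inliers.sum() > best_inliers.sum():
            best_inliers = inliers
    # Refit on all inliers: the least-squares translation is the mean offset
    t_best = (dst[best_inliers] - src[best_inliers]).mean(axis=0)
    return t_best, best_inliers

# 92 true correspondences with small localization noise, 8 false ones
rng = np.random.default_rng(1)
src = rng.uniform(0, 200, size=(100, 3))
dst = src + np.array([12.0, -7.0, 3.0]) + rng.normal(0, 0.5, size=(100, 3))
dst[92:] = rng.uniform(0, 200, size=(8, 3))
t, mask = ransac_translation(src, dst)
print(t, mask.mean())   # estimated shift and inlier ratio
```

False correspondences rarely agree with the consensus model, so they fall outside the error bound and are excluded before the final model is fitted.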

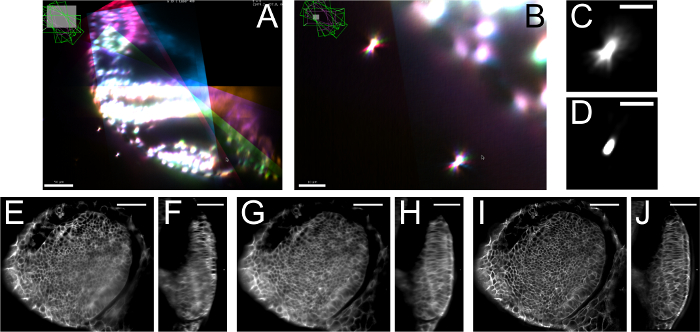

Figure 2: Results of the multiview reconstruction. (A) Overlaid registered views, each in a different color to demonstrate the overlap between them. (B) Magnified view showing the overlap of the PSFs of beads imaged from the different views. (C) Close-up of a bead after fusion; its PSF is an average of the PSFs from the different views. (D) PSF of the same bead as in (C) after multiview deconvolution, showing that the PSF collapses into a single point. (E) x-y section and (F) y-z section of a single view of an optic vesicle in which membranes are labeled with GFP, showing degradation of the signal in z deeper in the tissue. (G) x-y section and (H) y-z section of the same view after weighted-average fusion of 4 views approximately 20 degrees apart, with slightly more degraded x-y resolution overall but increased z resolution. (I) x-y section and (J) y-z section of the same data after multiview deconvolution, showing a significant increase in resolution and contrast of the signal both in xy and in z. Images in (E-J) are single optical slices. Scale bar represents 50 µm (A, B, E-J) and 10 µm (C, D).

- Time-lapse Registration

- Select the whole time-lapse, right click and choose 'Register using Interest Points' to stabilize the time-lapse, i.e., to correct for drift of the sample over time.

- In the 'Basic Registration Parameters' window, select 'Match against one reference time point (no global optimization)' for 'Type of registration'. Keep the other settings the same as in the registration of the individual time points.

- In the next window, select the time point to be used as a reference, typically a time point in the middle of the time-lapse. Tick the box 'consider each timepoint as rigid unit', since the individual views within each time point are already registered onto each other.

- Tick the box for 'Show timeseries statistics'. The other registration parameters remain as before including the regularization. Press OK.

NOTE: The log window displays the same output as for the registration of the individual time points. If that registration was successful and robust, the RANSAC inlier ratio is now 99-100% and the average, minimum and maximum errors are usually below 1 px. Save this registration before proceeding.

7. Multiview Fusion

NOTE: The resulting transformations from the registration steps are used to compute a fused isotropic stack out of the multiple views. This stack has an increased number of z slices compared to the original data, because the z spacing now equals the original xy pixel size. The fusion can be performed by either content-based multiview fusion21,31 or Bayesian-based multiview deconvolution31, which are both implemented in the multiview reconstruction application.
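How much the slice count grows can be estimated with simple arithmetic. The sketch below uses the acquisition values reported for Figure 5 (z stacks of about 100 µm at 1.5 µm steps, 20X/1.0 objective with 230 nm pixels, optional 2× downsampling); the counts are approximate because the exact stack extent depends on the bounding box.

```python
import math

def isotropic_slices(stack_thickness_um, xy_pixel_um, downsampling=1):
    """Approximate number of z slices once the z spacing equals the xy pixel size."""
    spacing = xy_pixel_um * downsampling
    return math.ceil(stack_thickness_um / spacing)

original = math.ceil(100 / 1.5)               # ~100 um stack at 1.5 um steps
fused = isotropic_slices(100, 0.23)           # 230 nm pixels, no downsampling
fused_ds2 = isotropic_slices(100, 0.23, 2)    # with 2x downsampling
print(original, fused, fused_ds2)
```

The roughly 6.5-fold increase in slices explains why downsampling and bounding boxes have such a large effect on fusion time and memory.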

- Bounding Box

NOTE: Fusion is a computationally expensive process (see Table 1); reducing the amount of data by defining a bounding box therefore significantly speeds up processing.

- Select all time points, right click and choose 'Define Bounding Box'. Use 'Define with BigDataViewer' and choose a name for the bounding box.

- Move the 'min' and 'max' sliders for every axis to define the region of interest and then press 'OK'. The parameters of the bounding box will be displayed.

NOTE: The bounding box will contain everything within the green box that is overlaid with a transparent magenta layer.
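The effect of a bounding box on memory can be estimated before fusing. This sketch assumes 32-bit float output (4 bytes per voxel) and hypothetical bounding box coordinates in isotropic pixels (435 slices correspond to 100 µm / 0.23 µm). It also flags when a stack would exceed a single Java array (at most 2^31 - 1 elements); we assume this limit is why the plugin suggests 'PlanarImg' or 'CellImg' containers for large stacks.

```python
import math

JAVA_MAX_ARRAY_LENGTH = 2**31 - 1   # Java arrays are indexed by int

def fused_stack_size(bbox_min, bbox_max, downsampling=1, bytes_per_voxel=4):
    """Return (dims, voxel_count, GiB) for a fused stack cropped to a bounding box.
    bbox_min, bbox_max: inclusive (x, y, z) corners in isotropic pixels."""
    dims = [math.ceil((hi - lo + 1) / downsampling) for lo, hi in zip(bbox_min, bbox_max)]
    voxels = math.prod(dims)
    return dims, voxels, voxels * bytes_per_voxel / 2**30

full = fused_stack_size((0, 0, 0), (1919, 1919, 434))          # whole field of view
cropped = fused_stack_size((400, 500, 60), (1399, 1299, 359))  # hypothetical bounding box
for name, (dims, voxels, gib) in [("full", full), ("cropped", cropped)]:
    container = "ArrayImg possible" if voxels <= JAVA_MAX_ARRAY_LENGTH else "use PlanarImg/CellImg"
    print(name, dims, voxels, round(gib, 2), "GiB,", container)
```

In this example the bounding box reduces the fused volume more than sixfold, which translates directly into shorter fusion times and a smaller memory footprint.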

- Content-based Multiview Fusion

NOTE: Content-based multiview fusion21 takes differences in image quality across the stack (i.e., degradation of the signal in z) into account and assigns higher weights to regions of better image quality instead of using simple averaging.

- Select the time point(s) to be fused in the 'ViewSetup Explorer', right click and select 'Image Fusion/Deconvolution'.

- In the Image Fusion window, select 'Weighted-average fusion' from the drop-down menu and choose 'Use pre-defined Bounding Box' for 'Bounding Box'. For the output of the fused image, select 'Append to current XML Project', which writes new hdf5 files alongside the already existing ones and allows the registered unfused views and the fused image to be used together. Press 'OK'.

- Then, in the 'Pre-define Bounding Box' pop-up window, select the name of the previously defined bounding box and press 'OK'.

- Check the parameters of the bounding box in the next window. For faster fusion, apply downsampling to the fused dataset.

NOTE: If the fused stack is above a certain size, the program will recommend using a more memory-efficient 'ImgLib2 container'.

- In that case, switch from 'ArrayImg' to the 'PlanarImg (large images, easy to display)' or 'CellImg (large images)' container, which allow processing of larger data. Otherwise, use the predefined settings. Apply 'blending' and 'content-based fusion' and proceed by pressing 'OK'.

- Check the hdf5 settings in the next window. Use the predefined parameters and start the fusion process. Make sure that Fiji has been assigned enough memory in Edit > Options > Memory & Threads.
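The idea of content-based weighting can be sketched in a few lines: each registered view gets a per-pixel weight reflecting local image information, and the fused value is the weighted average. In the spirit of the approach in reference 21, local information is approximated here by Gaussian smoothing of the squared high-pass image; the sigma values and the synthetic 2D test are hypothetical, and the plugin's border 'blending' is omitted.

```python
import numpy as np
from scipy import ndimage

def content_weights(view, sigma1=2.0, sigma2=4.0):
    """Local-information weight: Gaussian-smoothed squared high-pass image.
    Sharp, detail-rich regions get high weights; blurred regions get low weights."""
    v = view.astype(np.float64)
    highpass = v - ndimage.gaussian_filter(v, sigma1)
    return ndimage.gaussian_filter(highpass ** 2, sigma2)

def weighted_average_fusion(views, eps=1e-12):
    """Fuse registered views sampled on the same grid as sum(w_i * I_i) / sum(w_i)."""
    weights = [content_weights(v) for v in views]
    numerator = sum(w * v for w, v in zip(weights, views))
    return numerator / (sum(weights) + eps)

# Two synthetic views, each degraded on the side facing away from its detection
rng = np.random.default_rng(0)
truth = ndimage.gaussian_filter(rng.normal(size=(128, 128)), 1.0)   # fine texture
blurred = ndimage.gaussian_filter(truth, 3.0)
view_a = truth.copy()
view_a[:, 64:] = blurred[:, 64:]
view_b = truth.copy()
view_b[:, :64] = blurred[:, :64]
fused = weighted_average_fusion([view_a, view_b])
average = (view_a + view_b) / 2
print(np.mean((fused - truth) ** 2) < np.mean((average - truth) ** 2))
```

Compared with a plain average, the weighting favors whichever view carries sharper information at each position, which is the behavior described for content-based fusion.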

- Multiview Deconvolution

NOTE: Multiview deconvolution31 is another type of multiview fusion. In addition to fusing the views, it takes the PSF of the imaging system into account in order to deconvolve the image, yielding increased resolution and contrast of the signal (compared in Figure 2C-J, Figure 5 and Movie 3).

- Select the time point(s) to be deconvolved, right click and select 'Image Fusion/Deconvolution'.

- Select 'Multiview Deconvolution', use the pre-defined bounding box and select 'Append to current XML Project'. Select the bounding box and continue.

NOTE: The pre-defined parameters for the deconvolution are a good starting point. For the first trial, use 20 iterations and assess the impact of the deconvolution with the 'Debug mode'. Finally, set the computation to be performed in 512 x 512 x 512 blocks.

- In the next window, use the pre-defined settings. Set the 'debug mode' to display the results every 5 iterations.

NOTE: The output of the deconvolution is 32-bit data; however, the BigDataViewer currently only supports 16-bit data. To append the output of the deconvolution to the existing hdf5 dataset, it therefore has to be converted to 16-bit.

- For the conversion, select 'Use min/max of the first image (might saturate intensities over time)'.
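Why this option "might saturate intensities over time" can be seen in a small sketch. We assume the option maps the minimum and maximum of the first time point linearly onto the 16-bit range and clips everything outside it; the plugin's exact mapping may differ, and the tiny arrays stand in for whole stacks.

```python
import numpy as np

def to_uint16_with_reference(stacks):
    """Convert float32 stacks (one per time point) to uint16 using the min/max of
    the FIRST stack for all time points; values outside that range are clipped."""
    lo = float(stacks[0].min())
    hi = float(stacks[0].max())
    scale = 65535.0 / (hi - lo) if hi > lo else 1.0
    converted = []
    for s in stacks:
        scaled = (s.astype(np.float64) - lo) * scale
        converted.append(np.clip(np.rint(scaled), 0, 65535).astype(np.uint16))
    return converted

# Time point 1 becomes brighter than time point 0 (e.g., increasing expression)
t0 = np.array([0.0, 1.0, 2.0], dtype=np.float32)
t1 = np.array([0.0, 2.0, 4.0], dtype=np.float32)
out = to_uint16_with_reference([t0, t1])
print(out[0].tolist(), out[1].tolist())
```

In the second time point, 2.0 and 4.0 both end up at 65535, so differences among the brightest structures are lost; if the signal is expected to increase over the time-lapse, this is worth checking.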

NOTE: The deconvolution will then start by loading the images and preparing them for the deconvolution.
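The principle behind multiview deconvolution can be illustrated with a simplified multiview Richardson-Lucy scheme, in which the estimate is updated sequentially with each view and its own PSF. This is a 2D sketch with synthetic, hypothetical Gaussian PSFs elongated along different axes (mimicking views from different angles); it omits the optimized update schemes, block processing and bead-derived PSFs of the actual plugin.

```python
import numpy as np
from scipy.signal import fftconvolve

def multiview_richardson_lucy(views, psfs, iterations=20, eps=1e-12):
    """Simplified sequential multiview Richardson-Lucy deconvolution.
    views: registered arrays on a common grid; psfs: one normalized PSF per view."""
    views = [np.maximum(np.asarray(v, dtype=np.float64), 0.0) for v in views]
    estimate = np.full_like(views[0], np.mean([v.mean() for v in views]))
    for _ in range(iterations):
        for view, psf in zip(views, psfs):
            blurred = fftconvolve(estimate, psf, mode="same")
            ratio = view / np.maximum(blurred, eps)
            # Correlation with the PSF = convolution with the PSF mirrored along every axis
            correction = fftconvolve(ratio, np.flip(psf), mode="same")
            estimate *= np.maximum(correction, 0.0)   # FFT round-off can dip below 0
    return estimate

def gaussian_psf(sigma_y, sigma_x, radius=12):
    y, x = np.mgrid[-radius:radius + 1, -radius:radius + 1]
    psf = np.exp(-(y**2 / (2 * sigma_y**2) + x**2 / (2 * sigma_x**2)))
    return psf / psf.sum()

truth = np.zeros((64, 64))
truth[20, 20] = truth[40, 44] = 1.0                       # two point sources ("beads")
psf_a, psf_b = gaussian_psf(1, 4), gaussian_psf(4, 1)     # elongated along different axes
view_a = fftconvolve(truth, psf_a, mode="same")
view_b = fftconvolve(truth, psf_b, mode="same")
result = multiview_richardson_lucy([view_a, view_b], [psf_a, psf_b], iterations=20)
print(view_a[20, 20], view_b[20, 20], result[20, 20])
```

Each view contributes the direction in which its PSF is narrow, so the point sources sharpen in both axes, analogous to the collapse of the bead PSF in Figure 2D. More iterations sharpen further but also amplify noise, which is why inspecting intermediate results in debug mode is useful.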

- BigDataServer

- To share the very large XML/HDF5 datasets, use the HTTP server BigDataServer33. An introduction to setting up and connecting to such a server can be found at http://fiji.sc/BigDataServer.

- To connect to an existing BigDataServer open Fiji > Plugins > BigDataViewer > BrowseBigDataServer.

- Enter the URL including the port into the window.

NOTE: The movies described in this publication are accessible via this address: http://opticcup.mpi-cbg.de:8085

- A window listing the available movies appears. Double click a movie to open it in a BigDataViewer window and view the data as described previously.

Supplementary: Checklist of Material Needed Before Starting

- zebrafish embryos/larvae expressing fluorescent proteins (Keep the embryos in zebrafish E3 medium without methylene blue. For stages older than 24 hr, counteract pigmentation by adding PTU to a final concentration of 0.2 mM.)

- fluorescent stereoscope

- capillaries (20 μl volume, with a black mark) and appropriate plungers (Do not reuse the capillaries. The plungers, on the other hand, can be reused for several experiments.)

- 1.5 ml plastic tubes

- sharp tweezers

- glass (fire polished) or plastic pipettes (plastic can be used for 24 hr and older embryos)

- glass or plastic dishes diameter 60 mm (plastic can be used for 24 hr and older embryos)

- two 100 ml beakers

- 50 ml Luer-Lock syringe with 150 cm extension hose for infusion (The hose and syringe should be kept completely dry in between experiments to avoid contamination by microorganisms.)

- plasticine

- low melting point (LMP) agarose

- E3 medium (5 mM NaCl, 0.17 mM KCl, 0.33 mM CaCl₂, 0.33 mM MgSO₄)

- MS-222 (Tricaine)

- phenylthiourea (PTU)

- fluorescent microspheres (here referred to as beads)

- double distilled H₂O (ddH₂O)

Representative Results

LSFM is an ideal method for imaging developmental processes across scales. Several applications are compiled here showing both short and long-term imaging of intracellular structures, as well as cells and entire tissues. These examples also demonstrate that LSFM is a useful tool at various stages of eye development from optic cup formation to neurogenesis in the retina. Movie 1 serves as an illustration of the general LSFM approach, first showing a zoomed out view of an intact embryo in the embedding agarose cylinder in brightfield and later showing a detailed view of the retina in the fluorescence channel.

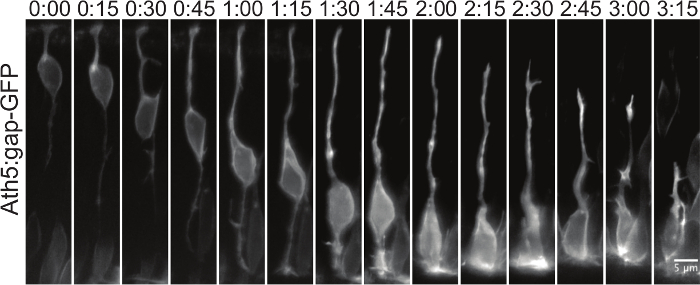

Movie 1: LSFM of the zebrafish retina. To illustrate the LSFM approach, this movie first shows in brightfield that the embryo is imaged intact inside the agarose cylinder before switching to fluorescence. It then shows that the large field of view enables observation of the whole retina. Next, the movie shows a magnified small region of the retina to highlight the subcellular resolution. An Ath5:gap-GFP37 transgenic zebrafish embryo was used for imaging. This transgene labels different neurons in the retina (mainly ganglion cells and photoreceptor precursors). The fluorescence part of the movie was captured as a single view recording with dual sided illumination using the 40X/1.0 W objective at 5 min intervals. The maximum intensity projection of a 30 µm thick volume is shown.

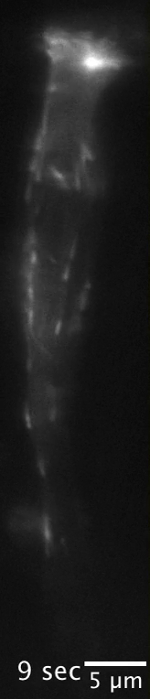

Movie 2 demonstrates how very fast intracellular events can be captured with high resolution, in this case the growth of microtubules at their plus ends in retinal neuronal progenitor cells. The information contained in the movie allows tracking and quantification of microtubule plus end growth.

Movie 2: Microtubule dynamics in a single cell. This movie captures the growing plus tips of microtubules labeled by the plus tip marker protein EB3-GFP38. The protein is expressed in a single retinal progenitor cell. The microtubules grow predominantly in the apical-to-basal direction (top to bottom). The average speed of the EB3 comets was measured as 0.28 ± 0.05 µm/sec. The bright spot at the apical side of the cell exhibiting high microtubule nucleation activity is the centrosome. The wild-type embryo was injected with hsp70:EB3-GFP plasmid DNA. The movie was acquired 4 hr after heat shock (15 min at 37 °C) at around 28 hr post fertilization (hpf) as a single view recording with single sided illumination using the 63X/1.0 W objective at 1 sec time intervals. The single cell was cropped from a field of view covering the majority of the retina. The maximum intensity projection of two slices is shown.

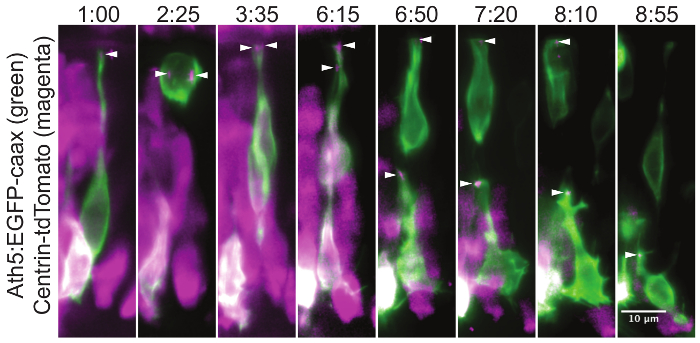

Figure 3 shows how intracellular structures can be followed over many hours. Here, the centrosome within translocating retinal ganglion cells (RGCs) is captured.

Figure 3: Centrosome localization during retinal ganglion cell translocation. This montage of a time-lapse experiment shows the position of the centrosome throughout retinal ganglion cell (RGC) maturation. In the neuronal progenitors, the centrosome is localized at the very tip of the apical process (1:00). During cell division, the two centrosomes serve as poles of the mitotic spindle (2:25). This division results in one daughter cell that differentiates into an RGC and a second daughter cell that becomes a photoreceptor cell precursor. After division, the cell body of the RGC translocates to the basal side of the retina, while the apical process remains attached at the apical side. Once the RGC reaches the basal side, its apical process detaches and the centrosome travels with it (6:15). The centrosome can be followed while it gradually retracts together with the apical process (6:50, 7:20, 8:10). In the last frame (8:55), the ganglion cell is growing an axon from its basal side, while the centrosome is still localized apically. The mosaic expression was achieved by plasmid DNA injection into wild-type embryos at the one-cell stage. The cells are visualized by the Ath5:GFP-caax (green) construct, which labels RGCs and other neurons. The centrosomes (arrowheads) are labeled by Centrin-tdTomato29 expression (magenta). The apical side of the retina is at the top of the image and the basal side at the bottom. The maximum intensity projection of a 30 µm thick volume is shown. The images were cropped from a movie covering the whole retina. The movie starts at around 34 hr post fertilization (hpf). A z stack was acquired every 5 min with the 40X/1.0 W objective. Time is shown in hh:mm. Scale bar represents 10 µm.

Figure 4 shows how single-cell behavior can be extracted from data capturing the whole tissue, such as in Movie 1. The translocation of an RGC can be easily tracked and its apical and basal processes followed.

Figure 4: Translocation of a single retinal ganglion cell. The RGC translocation from the apical to the basal side of the retina occurs after the terminal mitosis, as described in Figure 3. The RGC is labeled by expression of the Ath5:gap-GFP37 transgene. The maximum intensity projection of a 30 µm thick volume is shown. The images were cropped from a movie covering the whole retina. The movie starts at around 34 hpf. A z stack was acquired every 5 min with the 40X/1.0 W objective. Time is shown in hh:mm. Scale bar represents 5 µm.

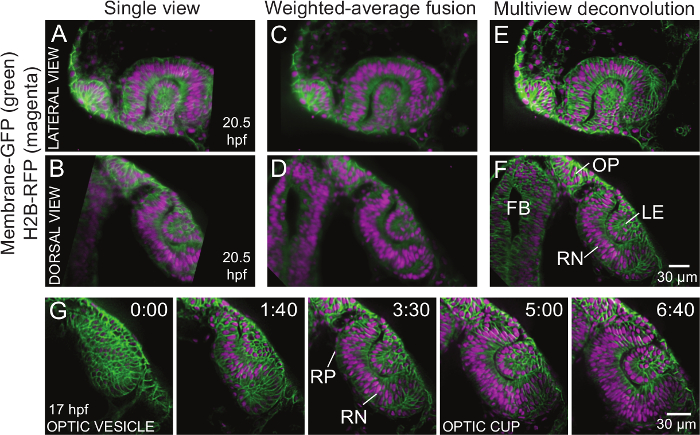

Figure 5 demonstrates the ability of multiview LSFM to capture tissue-scale morphogenetic processes with cellular resolution, using as an example optic cup morphogenesis, during which the optic vesicle transforms into the optic cup. Image quality can be vastly improved by multiview imaging, in which the information from several views (5 in this case) is combined into one z stack with isotropic resolution. This figure illustrates the improvement in image quality after weighted-average fusion and the further gain in contrast and resolution after multiview deconvolution. The figure shows two optical slices in different orientations through the dataset. Additionally, a montage from the deconvolved dataset shows optic cup morphogenesis over time. All time points are shown in Movie 3.

Figure 5: Comparison of image quality between single view data and two methods of multiview fusion. (A) One optical slice of single view data shown from the lateral view and (B) the dorsal view. Stripe artifacts and degradation of the signal deeper inside the sample are apparent. Also, part of the image visible in the fused data (C-F) was not captured in this particular view. (C) The same optical slice, now as multiview fused data, shown from the lateral view and (D) the dorsal view. Note that stripe artifacts are suppressed and structures deep in the sample are better resolved. (E) The same optical slice, now as multiview deconvolved data, shown from the lateral view and (F) the dorsal view. Note the increased contrast and resolution, so that individual cell membranes and nuclei can be well distinguished. The resolution does not deteriorate notably deeper inside the sample. The dataset was acquired with dual sided illumination from 5 views approximately 20 degrees apart. Z stacks of about 100 µm with 1.5 µm step size were acquired for each view at 10 min intervals for 10 hr with the 20X/1.0 W objective. Input images for the multiview fusion and deconvolution were downsampled 2× to speed up image processing. Fifteen iterations of multiview deconvolution were run. (G) The montage shows a cropped area of the dorsal view from the deconvolved data to highlight the morphogenetic events during early eye development from the optic vesicle to the optic cup stage. The two layers of the optic vesicle, which are initially similar columnar epithelia, differentiate into distinct cell populations. The distal layer close to the epidermis becomes the retinal neuroepithelium (RN) and the proximal layer close to the neural tube becomes the retinal pigment epithelium (RP). The cells in the RN elongate and invaginate to form the optic cup (1:40 to 5:00); at the same time, the RP cells flatten. The surface ectoderm is induced to form a lens (1:40), which invaginates later (5:00). The movie starts at 17 hpf. Time is shown in hh:mm. All cellular membranes are labeled by the β-actin:ras-GFP transgene and all nuclei are labeled by the hsp70:H2B-RFP transgene. Scale bar represents 30 µm. FB forebrain, LE lens, OP olfactory placode, RN retinal neuroepithelium, RP retinal pigment epithelium.

Movie 3: Optic cup morphogenesis shown with single view data and two methods of multiview fusion. The time-lapse movie illustrates the complete process of optic cup morphogenesis from the optic vesicle to the optic cup stage. It shows a single optical slice from the lateral view (top) and a single optical slice from the dorsal view (bottom). The cells of the developing optic vesicle undergo complex rearrangements to finally form the hemispherical optic cup with the inner retinal neuroepithelium and outer retinal pigment epithelium. A lens is formed from the surface ectoderm. It invaginates along with the neuroepithelium and sits in the optic cup. All cellular membranes are labeled by expression of the β-actin:ras-GFP (green) transgene and the nuclei are labeled with hsp70:H2B-RFP (magenta). The movie starts at around 17 hpf. The dataset was acquired with dual sided illumination from 5 views approximately 20 degrees apart, and z stacks of about 100 µm were acquired every 10 min with the 20X/1.0 W objective. Time is shown in hh:mm. Scale bar represents 50 µm.

Discussion

1. Critical steps and troubleshooting for the data acquisition

The typical imaging settings for a GFP and RFP expressing sample can be found in Table 3. In the described microscope setup, the light sheet is static and formed by a cylindrical lens. The two illumination objectives are air lenses and the detection objective is a water-dipping lens. Zoom 1.0 with the 20X/1.0 or 40X/1.0 objective gives a pixel size of 230 nm or 115 nm and a field of view of 441 x 441 µm or 221 x 221 µm, respectively. It is recommended to use the default light sheet thickness with a center to border ratio of 1:2. In the center, this thickness corresponds to 4.5 µm for the 20X/1.0 objective and to 3.2 µm for the 40X/1.0 objective. If imaging speed is not the main priority, use separate tracks for multicolor samples to avoid crosstalk of fluorescence emission between the channels. The movement of the z drive limits the maximum acquisition speed to 50 msec per z step. To achieve the maximum imaging speed with, e.g., two tracks with dual sided illumination, the exposure times must be set so that the sum of the exposures of all images taken per z step stays below 50 msec. Conversely, if only a single image is acquired per z step, there is no benefit in setting the exposure time shorter than 50 msec.
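The numbers above follow from simple arithmetic, sketched here. The field of view is the number of pixels (1920, from Table 3) times the pixel size; the exposure budget divides the 50 msec z-step limit among all images taken per z step. The exposure calculation assumes, as a simplification, that the images of one z step are acquired back to back without additional overhead.

```python
def field_of_view_um(pixels, pixel_size_um):
    """Edge length of the square field of view."""
    return pixels * pixel_size_um

def max_exposure_ms(tracks, illumination_sides, z_step_limit_ms=50.0):
    """Longest exposure per image that keeps all images of one z step within the
    z-drive limit (assumes no extra overhead between images)."""
    images_per_step = tracks * illumination_sides
    return z_step_limit_ms / images_per_step

fov_20x = field_of_view_um(1920, 0.230)   # 20X/1.0 at zoom 1.0
fov_40x = field_of_view_um(1920, 0.115)   # 40X/1.0 at zoom 1.0
print(fov_20x, fov_40x)
print(max_exposure_ms(tracks=2, illumination_sides=2))   # two tracks, dual sided
```

With two tracks and dual sided illumination, four images share each 50 msec step, so each exposure can be at most 12.5 msec if the maximum speed is to be reached.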

| Setting | Value |
|---|---|
| Image size | 1920 x 1920 pixels |
| Bit depth | 16-bit |
| Pivot scan | On |
| Illumination | Dual sided illumination with online fusion |
| Illumination objective | 10X/0.2 |
| Detection objective | 20X/1.0 W Plan-Apochromat |
| Track 1 | Excitation 488 nm, typically 2% of 100 mW laser; 550 nm SP emission filter |
| Track 2 | Excitation 561 nm, typically 3% of 75 mW laser; 585 nm LP emission filter |
| Exposure time | Up to 100 msec |
| Z stack thickness | 50-100 μm |
| Z step size | 1-1.5 μm in the continuous z drive mode |
| Incubation temperature | 28.5 °C |

Table 3: Imaging Settings.

Inspecting the sample after the experiment

It is important to ensure that the specimen is still healthy at the end of the experiment. As a first readout, check the heartbeat of the specimen under a stereoscope. Using sharp forceps, the sample can be taken out of the agarose and moved to the incubator to develop further, in order to check whether it was affected by the imaging. Alternatively, it can be fixed for antibody staining.

Mounting and drift

It is essential to keep the osmolarity of the chamber solution close to the osmolarity of the embedding agarose, otherwise swelling/shrinking of the agarose and subsequent instability of the sample will occur. Therefore, use the same solution (E3 medium without methylene blue) to fill the chamber and to prepare the 1% low melting point agarose aliquots. Additionally, do not leave the agarose in the 70 °C heating block for more than 2 hr, as it can lose its gelling properties.

Do not embed the fish in agarose that is too hot, as this can lead to a heat shock response or death of the embryo. If unsure about the effect of warm agarose on the embryos, check that the tail does not bend and that the heart rate does not slow down. If either occurs, use a different embryo for the experiment.

Keep the overall length of the agarose column with the sample short (around 2 cm) and mount the zebrafish with its head oriented towards the plunger tip. Likewise, the agarose cylinder extruded from the capillary should be kept as short as possible. These measures will ensure stability of the sample throughout the movie. At the same time, the agarose column must be long enough so that the glass capillary itself does not reach into the light path, as this would cause major refraction and reflection.

The initial drift of the sample is caused by volume changes of the agarose cylinder itself, not by sliding of the plunger. Therefore, fixing the plunger with plasticine or nail polish does not help. The embryo might also change its position during the movie due to its natural growth. It is therefore advisable to center the region of interest in the field of view and leave some room at the edges to accommodate these movements.

Reduced amount of embedding medium in the light path

Orienting the sample correctly helps to achieve the best possible image quality15. Generally, excitation and emission light should travel through as little tissue and mounting medium as possible. The optimal solution is agarose-free mounting. This was achieved, for example, in a setup for Arabidopsis lateral root imaging14, in which the main root was mounted in phytagel and the lateral roots were subsequently allowed to grow completely out of the gel column. Agarose-free mounting was also developed for imaging the complete embryogenesis of the Tribolium beetle over two days12. Improving image quality was not the primary motivation in that case; Tribolium embryos simply do not survive inside agarose long enough. Completely embedding-medium-free mounting has not been achieved for long-term imaging in zebrafish. Still, we can take advantage of the fact that when the agarose solidifies, most embryos are positioned diagonally in the capillary, with one eye located deep in the agarose and the other eye close to the surface of the embedding column. The eye closer to the surface provides superior image quality and should therefore be imaged preferentially.

Agarose concentration and long-term imaging

The concentration of agarose for mounting is a compromise between stability of the sample and the possibility to accommodate growth of the embryo and diffusion of oxygen to it. There is no additional gain in the stability of the sample when using agarose concentrations higher than 1%. As a starting point for optimizing the experiments we recommend 0.6% agarose, which is also suitable for embryos younger than 24 hpf that are too delicate to be mounted into 1% agarose. To anaesthetize older embryos and larvae, the MS-222 concentration can be raised to 200 µg/ml without side effects13.

If developing embryos are imaged for longer than about 12 hr, agarose mounting is not recommended, because it restricts the growth of the embryo and causes tail deformation. This issue was solved for zebrafish by mounting embryos in FEP polymer tubes with a refractive index similar to that of water13,39. Mouse embryos, on the other hand, can be immobilized in hollow agarose cylinders40 or in holes of an acrylic rod attached to a syringe41. FEP tube mounting is not recommended as the default method, however, because the wall of the tube refracts light slightly more than agarose does.

Light sheet alignment

For good image quality, it is critical to perform the automatic light sheet alignment before every experiment, especially if the zoom settings were changed, the objectives were taken out, or a different liquid was used in the chamber.

Illumination

The pivot scanning of the light sheet should always be activated. For large, scattering specimens, dual sided illumination with online fusion is necessary to achieve even illumination across the field of view. Dual sided illumination also mitigates a problem specific to eye imaging: refraction of the incoming light sheet by the lens of the embryo. Smaller, less scattering specimens can be imaged efficiently with single sided illumination, which halves the imaging time and can result in slightly better image quality than dual sided illumination. This is because the light paths of the two illumination arms always differ, so the more efficient one can be chosen. Additionally, the two light sheets coming from either side are never perfectly coplanar, which causes mild blurring after fusion. For very fast intracellular events, like the growing microtubules (Movie 2), dual sided illumination is not appropriate, because the images with illumination from the left and right are acquired sequentially, which could result in motion blur.

Photobleaching and phototoxicity

Less fluorophore photobleaching is often mentioned as a major advantage of LSFM. We would argue that the aim should be no photobleaching at all. If there is noticeable photobleaching in a live imaging experiment, the specimen is probably already beyond its physiological range of tolerated laser exposure. In our experience with imaging zebrafish embryos on a spinning disk microscope, high phototoxicity can stall embryo development even before the fluorescent signal bleaches markedly. Therefore, the imaging settings in LSFM should be adjusted so that little or no photobleaching is observed. Even though LSFM is gentle to the sample, it is prudent to use only as much laser power and exposure time as needed to achieve a signal to noise ratio sufficient for the subsequent data analysis.

Z-stack, time intervals and data size

The files generated by LSFM are usually very large; sometimes in the terabyte range. It is often necessary to make a compromise between image quality and data size. This is particularly the case for z spacing of stacks and intervals in the time-lapse acquisitions. To define the z intervals, the Optimal button in the Z-stack tool tab should ideally be used, especially if the dataset will be deconvolved later. It calculates the spacing to achieve 50% overlap between neighboring optical slices. Still, somewhat larger z intervals are usually acceptable. They reduce the time needed to acquire the z stack as well as the final file size. The optimal time sampling depends on the process of interest. For overall eye development 5-10 min intervals are usually acceptable. If some structures are to be automatically tracked, the subsequent time points must be sufficiently similar.
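The trade-off between z spacing, time sampling and data size can be estimated before starting an experiment. The sketch below uses values resembling the Figure 5 / Movie 3 acquisition (about 100 µm stacks at 1.5 µm steps, 5 views, 2 channels, every 10 min for 10 hr) and assumes full 1920 x 1920 frames at 16-bit (Table 3); the actual frames may have been cropped, and file format overhead is ignored.

```python
import math

def dataset_size_gb(width, height, slices, views, channels, timepoints, bytes_per_pixel=2):
    """Raw size in GB (10^9 bytes) of a multiview time-lapse, ignoring file overhead."""
    return width * height * slices * views * channels * timepoints * bytes_per_pixel / 1e9

slices = math.ceil(100 / 1.5)        # ~100 um stack at 1.5 um steps
timepoints = 10 * 60 // 10 + 1       # every 10 min for 10 hr, including the first
print(round(dataset_size_gb(1920, 1920, slices, 5, 2, timepoints)), "GB")

# Effect of the z step alone on the same acquisition
for z_step in (1.0, 1.5, 3.0):
    n = math.ceil(100 / z_step)
    print(z_step, "um steps:", round(dataset_size_gb(1920, 1920, n, 5, 2, timepoints)), "GB")
```

Because size scales linearly with the number of slices and time points, doubling the z step or the time interval roughly halves the raw data.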

Fluorescent beads

Fluorescent beads primarily serve as fiducial markers for registering the different views of a multiview dataset onto each other. Always vortex the bead solutions thoroughly before use. Do not heat the beads, as this can lead to loss of the fluorescent dye. The optimal bead concentration for multiview registration has to be determined experimentally. The described plugin works best with around 1,000 detected beads in each view. Larger (500 nm or 1,000 nm) beads are detected more robustly than smaller (less than 500 nm) beads, because larger beads are brighter and easier to segment without false positive detections of structures in the sample. The disadvantage of larger beads is that they are very prominent in the final fused and deconvolved image. For each new fluorescent marker, the appropriate bead size and fluorescence emission have to be optimized. For example, for the sample in Figure 5 and Movie 3, 100 nm green emission beads gave too many false positive detections in the membrane-GFP channel, but 1,000 nm red emission beads were robustly detected in the H2B-RFP channel with very few false positive detections inside the sample. If bead detection fails in the channel with the fluorescent marker, a separate channel containing only beads can be acquired, but this is not very practical. Sub-resolution sized beads give a direct readout of the point spread function (PSF) of the microscope, which can be used for deconvolution (Figure 2C-D). If registration and fusion work better with bigger beads (e.g., 1,000 nm), a separate image of the PSF can be acquired with sub-resolution beads, e.g., 100 nm. Multicolor beads are helpful during registration of multichannel acquisitions and for verifying that the channels overlay perfectly.

Addition of fluorescent beads is not necessary when imaging from a single view without subsequent multiview registration and fusion. However, even in those cases beads can be helpful during the initial light sheet adjustment to check for the quality of the light sheet and in general to reveal optical aberrations. Such optical aberrations may originate from various sources like damaged or dirty objectives, dirty windows of the chamber or inhomogeneity in the agarose. Beads can also be used for drift correction by the multiview registration Fiji plugin20.

Multiview

For multiview reconstruction, it is better to acquire an odd number of views (3, 5 and so on) that do not directly oppose each other. This improves deconvolution, since the PSFs are then imaged from different directions. It is also important to confirm at the beginning of the time-lapse acquisition that there is sufficient overlap between the views. This is best done empirically, i.e., by immediately checking that the views of the first time point can be successfully registered. When the goal of the multiview acquisition is to increase the resolution of a large, scattering specimen, it is not advisable to image the entire specimen in each view; instead, stop around the center of the sample, where the signal deteriorates. The low-quality acquisition of the far half of the specimen would not add useful information to the multiview reconstruction.
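Why an odd number of evenly spaced views avoids opposing pairs can be checked with a short sketch. It assumes, hypothetically, that the views are spread evenly over a full 360° rotation of the sample; the helper names are illustrative only.

```python
def view_angles(n_views, start=0.0):
    """Evenly spaced rotation angles (degrees) for n_views over a full turn."""
    step = 360.0 / n_views
    return [(start + i * step) % 360.0 for i in range(n_views)]


def opposing_pairs(angles, tol=1e-6):
    """Index pairs of views that look at the sample from opposite sides."""
    pairs = []
    for i in range(len(angles)):
        for j in range(i + 1, len(angles)):
            diff = abs(angles[i] - angles[j]) % 360.0
            if abs(diff - 180.0) < tol:
                pairs.append((i, j))
    return pairs
```

For example, `view_angles(5)` gives 0°, 72°, 144°, 216° and 288° with no opposing pair, whereas four views at 90° steps form two opposing pairs. In general, two evenly spaced views differ by exactly 180° only if i·360/n = 180 for some integer i, i.e., only when n is even.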

2. Critical steps and troubleshooting for the data processing

Currently, there are several well-documented and relatively easy-to-adopt options for processing multiview data from a light sheet microscope. We use the Multiview Reconstruction application, an open source software implemented in Fiji32 (Stephan Preibisch, unpublished; Link 1a and Link 1b in the List of Materials). This plugin is a major redesign of the previous SPIM registration plugin20, reviewed by Schmied et al.42, and integrates the BigDataViewer and its XML and HDF5 format33 with the SPIM registration workflow (Figure 1B, Link 2, Link 3). The application can also be adapted to high performance computing clusters, which significantly speeds up processing43. It is under active development and keeps improving. To report problems or request features for the described software, please file issues on the respective GitHub pages (Link 4 for Multiview Reconstruction and Link 5 for BigDataViewer).

The second option is the commercial software supplied with the microscope. This solution works well and uses the same principle of registering the different views with fluorescent beads. However, it cannot visualize the whole dataset as quickly as the BigDataViewer. In addition, the software cannot be run on a cluster, and processing blocks the microscope for other users unless an additional software license is purchased.

The third option, also open source software, was recently published by the Keller lab44 and provides a comprehensive framework for processing and downstream analysis of light sheet data. This software uses information from within the sample to perform multiview fusion and therefore does not require fluorescent beads around the sample. However, it assumes an orthogonal orientation of the imaging views (objectives), so it cannot be used for data acquired from arbitrary angles44.

Hardware requirements

The hardware used for processing is listed in Table 4. Sufficient storage capacity and a clear data processing pipeline have to be in place before the actual experiment. Images are acquired faster than they can be analyzed, and it is easy to get flooded with unprocessed data. It is often unrealistic to store all raw images; instead, one can keep cropped versions or processed images such as fused views, maximum intensity projections or spherical projections45.
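Storage needs can be estimated before the experiment from the stack dimensions. The sketch below assumes uncompressed 16-bit voxels; the stack size and time point count in the usage example are hypothetical.

```python
def raw_size_bytes(nx, ny, nz, views, channels, timepoints,
                   illuminations=1, bytes_per_voxel=2):
    """Uncompressed size of a multiview time-lapse; 16-bit voxels by default."""
    return (nx * ny * nz * views * channels * illuminations
            * timepoints * bytes_per_voxel)


def to_terabytes(n_bytes):
    """Decimal terabytes (1 TB = 10**12 bytes)."""
    return n_bytes / 1e12
```

For a hypothetical 1920×1920×1000 px stack recorded from 4 views in 2 channels over 300 time points, `to_terabytes(raw_size_bytes(1920, 1920, 1000, 4, 2, 300))` is about 17.7 TB. Scaling the 8.6 GB single-time-point dataset of Table 1 to the same 300 time points would give roughly 2.6 TB.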

| Component | Specification |

| Processor | Two Intel Xeon Processor E5-2630 (Six Core, 2.30 GHz Turbo, 15 MB, 7.2 GT/sec) |

| Memory | 128 GB (16×8 GB) 1600 MHz DDR3 ECC RDIMM |

| Hard Drive | 4×4 TB 3.5 inch Serial ATA (7,200 rpm) Hard Drive |

| HDD Controller | PERC H310 SATA/SAS Controller for Dell Precision |

| HDD Configuration | C1 SATA 3.5 inch, 1-4 Hard Drives |

| Graphics | Dual 2 GB NVIDIA Quadro 4000 (2 cards w/ 2 DP & 1 DVI-I each) (2 DP-DVI & 2 DVI-VGA adapter) (MRGA17H) |

| Network | Intel X520-T2 Dual Port 10 GbE Network Interface Card |

Table 4: Hardware Requirements.

Data processing speed

The time needed for data processing depends on the size of the data and on the hardware used. Table 1 gives an overview of the time required for the key steps in processing an example 8.6 GB multiview dataset consisting of 1 time point with 4 views and 2 channels.

| Processing step | Time | Protocol step |

| Resave as HDF5 | 6 min 30 sec | 6.3 |

| Detect Interest Points | 20 sec | 6.4 |

| Register using interest points | 3 sec | 6.5 |

| Content-based multiview fusion | 4 hr | 7.2 |

| Multiview deconvolution (CPU) | 8 hr | 7.3 |

| Multiview deconvolution (GPU) | 2 hr | 7.3 |

Table 1: Data Processing Time.
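The per-time-point durations in Table 1 can be extrapolated to a full time-lapse with a back-of-the-envelope calculation. The sketch assumes, as a simplification, that every time point takes as long as the example dataset and that time points are processed independently on `parallel_jobs` workers (e.g., cluster nodes) without scheduling overhead; the step keys are hypothetical labels.

```python
# Per-time-point durations from Table 1, in minutes
STEP_MINUTES = {
    "resave_hdf5": 6.5,
    "detect_interest_points": 20 / 60,
    "register_interest_points": 3 / 60,
    "content_based_fusion": 4 * 60,
    "deconvolution_cpu": 8 * 60,
    "deconvolution_gpu": 2 * 60,
}


def total_hours(steps, timepoints, parallel_jobs=1):
    """Wall-clock hours to run `steps` on `timepoints` time points."""
    per_timepoint = sum(STEP_MINUTES[s] for s in steps)
    batches = -(-timepoints // parallel_jobs)  # ceiling division
    return per_timepoint * batches / 60.0
```

For example, content-based fusion of 10 time points takes about 40 h on one workstation but about 8 h when spread over 5 workers. Fusion and deconvolution are alternative ways of combining the views, so a given run would normally include only one of them.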

Input data formats for multiview reconstruction

The Fiji plugin Multiview Reconstruction supports .czi, .tif and .ome.tiff formats. Due to the data structure of the .czi format, discontinuous datasets are not supported without pre-processing. A dataset is discontinuous when the recording had to be restarted (e.g., to readjust the positions because the sample drifted). In this case, the .czi files need to be resaved as .tif. For .tif files, each view and illumination direction needs to be saved as a separate file.
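When resaving a recording as .tif, a systematic file name for every time point, channel, view and illumination side makes the dataset easy to define afterwards. The sketch below generates such names; the template is a hypothetical naming scheme, and the placeholder syntax the plugin expects for file patterns should be checked in its documentation.

```python
from itertools import product


def tif_file_names(timepoints, channels, angles, illuminations,
                   template="spim_TL{t}_Ch{c}_Angle{a}_Illum{i}.tif"):
    """One file name per time point, channel, view angle and illumination side.

    The default template is a hypothetical scheme, not one required by the plugin.
    """
    return [template.format(t=t, c=c, a=a, i=i)
            for t, c, a, i in product(timepoints, channels, angles, illuminations)]
```

For 2 time points, 1 channel, views at 0° and 90° and two illumination sides, this yields 8 distinct names, starting with `spim_TL0_Ch0_Angle0_Illum0.tif`.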

Calibration of pixel size

The microscope operating software calculates the xy pixel size calibration based on the selected objective. However, the pixel size in z is defined independently by the step size. If the wrong objective is specified in the software, the xy to z ratio is incorrect and the registration will fail.
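The effect of a wrong objective setting on the xy to z ratio can be made concrete with a small calculation. The sketch assumes the xy pixel size equals the camera pixel size divided by the total magnification; the camera pixel size and magnifications in the example are hypothetical values, not those of the microscope used here.

```python
import numpy as np


def voxel_size(camera_pixel_um, magnification, z_step_um):
    """Return (xy, z) voxel size in micrometers and the z/xy anisotropy factor."""
    xy_um = camera_pixel_um / magnification  # pixel size projected into the sample
    return xy_um, z_step_um, z_step_um / xy_um


def calibration_transform(xy_um, z_um):
    """4x4 homogeneous scaling that expresses z in units of the xy pixel size."""
    return np.diag([1.0, 1.0, z_um / xy_um, 1.0])
```

With hypothetical 6.5 µm camera pixels, a 20× magnification and a 1 µm z step, the anisotropy factor is about 3.08. If a 10× objective were specified by mistake, the software would compute 1.54, so every stack would be squeezed by a factor of 2 in z relative to xy, and bead constellations could no longer be matched between views.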

Initial registration

After defining the dataset, the ViewSetup Explorer lists the number of registrations as 1 and the number of interest points as 0. This initial registration represents the calibration of the dataset. Both the number of registrations and the number of interest points increase during processing.

Down sampling for detection of interest points

Downsampling is recommended, since loading the files and segmentation are much faster. Note, however, that the detection parameters change with the downsampling, so detection settings cannot be transferred between different downsampling settings.
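Why detection settings do not transfer between downsampling levels can be seen in a small NumPy sketch: block averaging shrinks the apparent bead size in pixels and lowers the peak intensity of sub-resolution beads. The helpers are hypothetical, downsample only in x and y, and are not the plugin's code.

```python
import numpy as np


def downsample_xy(volume, factor):
    """Block-average a (z, y, x) stack by an integer factor in x and y."""
    z, y, x = volume.shape
    y2, x2 = y // factor, x // factor
    v = volume[:, :y2 * factor, :x2 * factor].astype(np.float64)  # crop to a multiple
    return v.reshape(z, y2, factor, x2, factor).mean(axis=(2, 4))


def sigma_in_downsampled_pixels(sigma_full_res_px, factor):
    """A blob width of sigma pixels at full resolution spans sigma/factor pixels after downsampling."""
    return sigma_full_res_px / factor
```

A single bright voxel of intensity 4 becomes a voxel of intensity 1 after 2× downsampling in x and y, so both the blob size and the intensity threshold used for detection have to be re-tuned for each downsampling setting.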

Detection of interest points

It is advisable to segment as many true beads as possible in each view, even at the price of some false positive detections, because these do not hinder the registration considerably. A few spurious detections are excluded during registration (see Registration of interest points). However, massive numbers of false positive detections pose a problem for the algorithm. They not only slow down detection and registration, because segmenting the image and comparing the beads between views take much longer, but also reduce the accuracy of the registration. This can be addressed by using more stringent detection parameters. Additionally, over-segmentation of the beads (i.e., multiple detections on one bead; Figure 1E) is detrimental to the registration and should be avoided.
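Over-segmentation produces several detections within one bead. One generic way to spot or remove such duplicates is greedy non-maximum suppression, which keeps only the brightest detection within a minimum distance. This is a hypothetical post-processing sketch, not a feature of the plugin, where over-segmentation is instead avoided through the detection parameters.

```python
import numpy as np


def suppress_duplicates(points, intensities, min_dist):
    """Greedy non-maximum suppression: keep the brightest detection within min_dist."""
    points = np.asarray(points, dtype=float)
    order = np.argsort(intensities)[::-1]  # brightest first
    kept = []
    for idx in order:
        if all(np.linalg.norm(points[idx] - points[k]) >= min_dist for k in kept):
            kept.append(idx)
    return points[sorted(kept)]
```

Setting `min_dist` close to the bead diameter in pixels merges multiple detections on one bead while leaving neighboring beads untouched, provided the beads are not packed closer than that.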

Registration of interest points

To register the views onto each other, each bead in each view is described by its position relative to its three nearest neighboring beads. These constellations form a local geometric descriptor that allows each bead to be compared between views. Beads with matching descriptors in two views are considered candidate correspondences. Note that this works only for randomly distributed beads, for which the local descriptors are typically unique for each bead. Other structures in the sample, such as nuclei, can also be used for registration; however, since nuclei are distributed non-randomly in the sample, other methods apply20,21.
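The idea of a local geometric descriptor can be sketched with NumPy. As a simplification, this sketch reduces each bead's constellation to the sorted distances to its three nearest neighbors, which do not change under rotation and translation; this is a stand-in for illustration and not the descriptor the plugin computes. A match is accepted only if it is clearly better than the runner-up (a ratio test, also an assumption of this sketch).

```python
import numpy as np


def descriptors(points, k=3):
    """For each bead, the sorted distances to its k nearest neighbors."""
    pts = np.asarray(points, dtype=float)
    d = np.linalg.norm(pts[:, None, :] - pts[None, :, :], axis=-1)
    np.fill_diagonal(d, np.inf)  # ignore each bead's distance to itself
    return np.sort(d, axis=1)[:, :k]


def candidate_matches(points_a, points_b, k=3, ratio=0.8):
    """Pair beads whose descriptors match clearly better than the runner-up."""
    da, db = descriptors(points_a, k), descriptors(points_b, k)
    matches = []
    for i, desc in enumerate(da):
        cost = np.linalg.norm(db - desc, axis=1)
        best, second = np.argsort(cost)[:2]
        if cost[best] < ratio * cost[second]:
            matches.append((i, int(best)))
    return matches
```

For randomly scattered beads, a rotated and shifted copy of the same bead set is matched bead by bead. A regular arrangement, such as nuclei in a tissue, would produce many near-identical descriptors, which is why randomly distributed beads are needed for this approach.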

The candidate correspondences are then tested with RANSAC36 to exclude false positives. Each randomly drawn minimal set of correspondences suggests a transformation model for overlaying the views onto each other. True correspondences are likely to agree on one transformation model, whereas outliers each point to a different one. The true correspondences are then used to compute an affine transformation model between the two compared views. Finally, an iterative global optimization registers all views onto the first view, minimizing the displacement between the views20,21.
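The RANSAC step can be illustrated with a compact NumPy sketch for a 3D affine model, which needs at least four correspondences. Iteration count and error threshold are hypothetical, and the subsequent global optimization across all views is not included; this is an illustration of the technique, not the plugin's implementation.

```python
import numpy as np


def fit_affine(src, dst):
    """Least-squares 3D affine (3x4 matrix) mapping src points onto dst points."""
    src_h = np.hstack([src, np.ones((len(src), 1))])  # homogeneous coordinates
    coeffs, *_ = np.linalg.lstsq(src_h, dst, rcond=None)
    return coeffs.T  # shape (3, 4): linear part and translation


def apply_affine(model, pts):
    return pts @ model[:, :3].T + model[:, 3]


def ransac_affine(src, dst, iterations=500, max_error=2.0, seed=0):
    """Affine model robust to false correspondences (src[i] <-> dst[i])."""
    src, dst = np.asarray(src, float), np.asarray(dst, float)
    rng = np.random.default_rng(seed)
    best_inliers = np.zeros(len(src), dtype=bool)
    for _ in range(iterations):
        sample = rng.choice(len(src), size=4, replace=False)  # minimal set for 3D affine
        model = fit_affine(src[sample], dst[sample])
        err = np.linalg.norm(apply_affine(model, src) - dst, axis=1)
        inliers = err < max_error
        if inliers.sum() > best_inliers.sum():
            best_inliers = inliers
    # Refit on all correspondences that agree with the best model
    return fit_affine(src[best_inliers], dst[best_inliers]), best_inliers
```

Samples containing only true correspondences all reproduce the same model and collect many inliers, while any sample containing an outlier predicts a model that few other beads agree with.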

Time-lapse registration

Due to movement of the agarose and imprecise motor movement of the microscope stage, the position of each stack varies moderately over time. Whereas registering an individual time point removes the differences between the views of that time point, the time-lapse also needs to be registered as a whole. To this end, each time point is registered onto a reference time point.

Reference time point

When processing a time series with many time points, a representative time point is selected as the reference, usually from the middle of the series, since bead intensities can degrade over time due to bleaching. The parameters for interest point detection, registration, bounding box and fusion can be determined on this reference. These parameters are then applied to the whole time-lapse to compute a specific transformation model for each individual time point. During time-lapse registration, all other time points are also registered spatially onto this reference time point, so the bounding box parameters for the entire recording depend on this specific time point.
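The transformation of each view can be thought of as a chain: the calibration, then the registration of the views within a time point, then the time-lapse registration onto the reference time point. The sketch below composes such a chain from 4×4 homogeneous matrices; the matrices are hypothetical examples, and choosing the middle time point as reference follows the recommendation above.

```python
import numpy as np


def translation(tx, ty, tz):
    m = np.eye(4)
    m[:3, 3] = [tx, ty, tz]
    return m


def compose(*transforms):
    """Concatenate 4x4 affine transforms; the first argument is applied first."""
    result = np.eye(4)
    for m in transforms:
        result = m @ result  # each later transform acts on the output of the earlier ones
    return result


def reference_timepoint(timepoints):
    """Middle time point, whose beads are less bleached than those at the end."""
    return timepoints[len(timepoints) // 2]
```

For instance, composing a calibration that stretches z by 3, a view registration that shifts x by 10 px and a time-lapse correction that shifts y by -2 px maps the voxel (1, 1, 1) to (11, -1, 3).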

Multichannel registration

When imaging multiple channels, ideally the same fluorescent beads should be visible in all imaged channels. Detection and registration can then be performed on each channel individually, which takes into account the influence of the different wavelengths on the transformation. Often this is not possible, because the beads are not visible in all channels, or they dominate the image in one channel and are too dim in the other channel(s). The typical solution is to detect and register using beads visible in only one channel, and then apply the resulting transformation model (i.e., after detection, registration and time-lapse registration) to the other channels with Fiji > Plugins > Multiview Reconstruction > Batch Processing > Tools > Duplicate Transformations. In the drop-down menu Apply transformation of, select One channel to other channels. In the following window, select the XML and click OK. Then select the channel containing the beads as Source channel and the channel(s) without beads as Target channel. For Duplicate which transformations, select Replace all transformations and press OK. The transformations are then copied to all other channels and saved in the XML. To see the new transformations in the ViewSetup Explorer, restart the MultiView Reconstruction Application.
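Conceptually, duplicating transformations copies the transform chain of every bead-channel view to the same time point and view of each target channel, replacing what was there. The sketch uses a plain dictionary keyed by (timepoint, channel, view) as a hypothetical stand-in for the registrations stored in the XML; it does not read or write the plugin's file format.

```python
def duplicate_transformations(transforms, source_channel, target_channels):
    """Copy (replace) transform chains from the bead channel to channels without beads.

    `transforms` maps (timepoint, channel, view) -> list of transforms; this layout
    is a hypothetical stand-in for the registrations saved in the XML.
    """
    result = dict(transforms)
    for (tp, ch, view), chain in transforms.items():
        if ch != source_channel:
            continue
        for target in target_channels:
            result[(tp, target, view)] = list(chain)  # "Replace all transformations"
    return result
```

After the copy, each view of the channel without beads carries exactly the calibration, registration and time-lapse transforms computed for the same view of the bead channel.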

Bounding box

The fusion of multiple views is computationally very intensive. Moreover, large images are typically acquired to accommodate not only the sample but also the surrounding beads. Once the registration parameters have been extracted from the beads, the beads are no longer useful as part of the image. Therefore, to make fusion more efficient, only the parts of the image stacks containing the sample should be fused. A region of interest (bounding box) should be defined that contains the sample and as little of the surrounding agarose as possible. For the example in Figure 2, a weighted average fusion of the entire volume of 2229×2136×2106 px would require 38,196 MB of RAM, whereas a bounding box reduces the volume to 1634×1746×1632 px and the memory requirement to 17,729 MB.
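The memory savings from the bounding box follow from the voxel counts. The sketch compares the two volumes from Figure 2 and assumes, for illustration, one 32-bit float per output voxel; the plugin's own memory estimate may use a slightly different formula.

```python
from math import prod

FULL = (2229, 2136, 2106)  # entire volume in Figure 2, px
BOX = (1634, 1746, 1632)   # bounding box around the sample, px


def voxel_count(shape):
    return prod(shape)


def estimate_mib(shape, bytes_per_voxel=4):
    """Memory for one 32-bit float output volume, in MiB (an assumption)."""
    return voxel_count(shape) * bytes_per_voxel / 2**20
```

The bounding box contains about 2.15 times fewer voxels, matching the ratio of the reported memory requirements. At 4 bytes per voxel the estimates are about 38,250 MiB and 17,761 MiB, within roughly 0.2% of the values given above.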

Content-based multiview fusion

The challenge in fusing multiview data is that the views usually end abruptly and do not have the same image quality for the same voxels. Simply averaging the views would therefore lead to blending artifacts and unnecessary image degradation. Content-based multiview fusion addresses both problems: first, it blends the views where one image ends and the other begins, and second, it assesses the local image quality and gives higher weights to regions of higher image quality in the fusion21. Compared to a single view, the resolution in z improves, with a slight degradation of the signal in xy (Figure 2E-H, Figure 5A-D).
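The quality-weighting part of content-based fusion can be sketched in NumPy for views that are already registered onto a common grid. The local quality measure used here (the smoothed squared difference between a view and its blurred copy, a common proxy for local information content), the sigma values and the FFT-based Gaussian with periodic boundaries are assumptions of this sketch. The blending of view borders is omitted, so this is not the plugin's implementation.

```python
import numpy as np


def gaussian_blur(img, sigma):
    """Gaussian blur via FFT; boundaries wrap around (periodic), fine for a sketch."""
    freqs = np.meshgrid(*[np.fft.fftfreq(n) for n in img.shape], indexing="ij")
    transfer = np.exp(-2.0 * (np.pi * sigma) ** 2 * sum(f ** 2 for f in freqs))
    return np.real(np.fft.ifftn(np.fft.fftn(img) * transfer))


def local_quality(view, sigma1=2.0, sigma2=4.0):
    """Local-detail proxy: smoothed squared difference between a view and its blur."""
    v = view.astype(np.float64)
    detail = (v - gaussian_blur(v, sigma1)) ** 2
    return np.maximum(gaussian_blur(detail, sigma2), 0.0)


def content_based_fusion(views, eps=1e-6):
    """Weighted average of registered views that favors locally sharper content."""
    weights = [local_quality(v) + eps for v in views]  # eps avoids 0/0 in empty regions
    numerator = sum(w * v.astype(np.float64) for w, v in zip(weights, views))
    return numerator / sum(weights)
```

If one view is sharp and another is blurred at the same location, the sharp view dominates the fused voxel, whereas a simple average would mix in the blur.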

Multiview deconvolution

Multiview deconvolution is another approach to fusing the views. This method additionally takes the PSFs of the different views into account in order to recover the image that was blurred (convolved) by the optics of the microscope. It drastically improves image quality by removing blur and increasing the resolution and contrast of the signal31 (Figure 2C-D, Figure 2I-J, Figure 5E-G, Movie 3).

Deconvolution is computationally very intensive (see Table 1), so using a GPU speeds up processing considerably. It may also be necessary to perform the deconvolution on downsampled data. To downsample, use Fiji > Plugins > Multiview Reconstruction > Batch Processing > Tools > Apply Transformations. This applies a new transformation model to the views, which is saved in the .xml file.
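The principle of multiview deconvolution can be illustrated with a plain multiview Richardson-Lucy scheme in NumPy: the current estimate is blurred with each view's PSF, compared with that view, and corrected, one view after another. The sketch assumes registered views on a common grid, known PSFs and periodic (FFT-based) convolution; it is not the plugin's implementation and has none of its optimizations.

```python
import numpy as np


def psf_to_otf(psf, shape):
    """Zero-pad a centered PSF to `shape`, move its center to the origin, FFT it."""
    padded = np.zeros(shape)
    padded[tuple(slice(0, s) for s in psf.shape)] = psf
    padded = np.roll(padded, [-(s // 2) for s in psf.shape],
                     axis=tuple(range(padded.ndim)))
    return np.fft.fftn(padded)


def fft_convolve(image, otf):
    """Circular convolution of `image` with the PSF whose transform is `otf`."""
    return np.real(np.fft.ifftn(np.fft.fftn(image) * otf))


def multiview_richardson_lucy(views, psfs, iterations=20, eps=1e-12):
    """Sequential Richardson-Lucy updates, one registered view and its PSF at a time."""
    shape = views[0].shape
    otfs = [psf_to_otf(p / p.sum(), shape) for p in psfs]
    estimate = np.full(shape, np.mean([v.mean() for v in views]))
    for _ in range(iterations):
        for view, otf in zip(views, otfs):
            predicted = fft_convolve(estimate, otf)    # forward model for this view
            ratio = view / np.maximum(predicted, eps)  # mismatch between data and model
            # Correlating with the PSF equals multiplying by the conjugate OTF
            estimate = estimate * fft_convolve(ratio, np.conj(otf))
            estimate = np.maximum(estimate, 0.0)       # guard against FFT round-off
    return estimate
```

Because each view is blurred most strongly along its own detection axis, views with differently oriented PSFs complement each other, which is why non-opposing views help deconvolution. The many FFTs per iteration also explain why GPU acceleration or downsampling pays off.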

HDF5 file format for BigDataViewer and BigDataServer

The BigDataViewer33 allows easy visualization of terabyte-sized data. Its controls are summarized in Table 2, and a screencast of its basic operation is available as a supplement to its original publication33. The BigDataViewer is centered on an .xml file, which contains the metadata, and an HDF5 file, which contains the image data. The HDF5 file stores the image data at multiple resolution levels, in 3D blocks. The multiple resolution levels allow the data to be displayed quickly at lower resolution before the full resolution is loaded, and individual blocks are only loaded into memory when needed. Thus, the HDF5 format allows direct and fast visualization of the data via the BigDataViewer33. It also speeds up processing, since the files are loaded more efficiently. We therefore recommend resaving the dataset in this format, although it is not strictly required for processing. For further explanation of the data format, please refer to Link 3. Additionally, datasets can be shared with collaborators or the public using the BigDataServer33 (Link 6).
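The multi-resolution, block-wise layout can be illustrated without HDF5 itself. The sketch builds a resolution pyramid by 2×2×2 block averaging and counts how many 3D blocks tile each level. The isotropic factor of 2 and the 16×16×16 block size are assumptions chosen for illustration; the actual subsampling factors and block sizes are set when resaving the dataset.

```python
import numpy as np


def pyramid(volume, levels=3):
    """Multi-resolution levels by 2x2x2 block averaging (coarsest level last)."""
    out = [volume.astype(np.float64)]
    for _ in range(1, levels):
        v = out[-1]
        z, y, x = (s // 2 for s in v.shape)
        v = v[:2 * z, :2 * y, :2 * x]  # crop odd edges
        out.append(v.reshape(z, 2, y, 2, x, 2).mean(axis=(1, 3, 5)))
    return out


def n_blocks(shape, block=(16, 16, 16)):
    """Number of 3D blocks (chunks) needed to tile a volume of `shape`."""
    n = 1
    for s, b in zip(shape, block):
        n *= -(-s // b)  # ceiling division
    return n
```

A viewer only needs the few blocks that intersect the current slice at the current zoom level, and the coarse levels can be shown first while finer blocks are still loading.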

| key | effect |

| F1 | shows the Help with a brief description of the BigDataViewer and its basic operation |

| < > or mouse wheel | movement in z |