Development of a Virtual Reality Assessment of Everyday Living Skills

Summary

A challenge for proving treatment efficacy for cognitive impairments in schizophrenia is finding the optimal measurement of skills related to everyday functioning. The Virtual Reality Functional Capacity Assessment Tool (VRFCAT) is an interactive gaming based computerized measure of skills associated with everyday functioning, designed to capture both baseline impairments and treatment related changes.

Abstract

Cognitive impairments affect the majority of patients with schizophrenia and these impairments predict poor long term psychosocial outcomes. Treatment studies aimed at cognitive impairment in patients with schizophrenia not only require demonstration of improvements on cognitive tests, but also evidence that any cognitive changes lead to clinically meaningful improvements. Measures of “functional capacity” index the extent to which individuals have the potential to perform skills required for real world functioning. Current data do not support the recommendation of any single instrument for measurement of functional capacity. The Virtual Reality Functional Capacity Assessment Tool (VRFCAT) is a novel, interactive gaming based measure of functional capacity that uses a realistic simulated environment to recreate routine activities of daily living. Studies are currently underway to evaluate and establish the VRFCAT’s sensitivity, reliability, validity, and practicality. This new measure of functional capacity is practical, relevant, easy to use, and has several features that improve validity and sensitivity of measurement of function in clinical trials of patients with CNS disorders.

Introduction

Schizophrenia is a serious mental illness that affects over two million Americans and costs about $63 billion a year in total, including $22.7 billion for direct treatment and community services and an additional $32 billion in indirect costs from unemployment1,2. This disorder is typically accompanied by cognitive deficits that precede the onset of psychosis and remain throughout the course of the illness3-8. These cognitive deficits lead to adverse consequences, such as reduced success in social, vocational, and independent living domains. Impaired cognition is a substantial contributor to functional disability in patients with schizophrenia9. Current antipsychotics are effective at controlling the psychotic symptoms of schizophrenia such as delusions and hallucinations, but these therapies provide minimal cognitive benefits10-13. Pharmacological and nonpharmacological treatments to improve cognitive functioning for patients with schizophrenia are currently in development. Cognitive functioning in schizophrenia, both at baseline and as a function of changes associated with treatment, can be reliably assessed by measures with proven sensitivity14. However, FDA guidance mandates that treatment studies demonstrate the clinical meaningfulness of changes in cognitive functioning15,16.

Representatives from the Food and Drug Administration (FDA), the National Institute of Mental Health (NIMH), and the pharmaceutical industry, as well as experts in cognition from academia, met as part of the Measurement and Treatment Research to Improve Cognition in Schizophrenia (MATRICS) project15. The purpose of the MATRICS project was to develop standard methods in the development of treatments of cognitive impairment associated with schizophrenia. During the course of this work, FDA representatives asserted that cognitive improvements alone, as measured by standardized neuropsychological assessments, are not sufficient to demonstrate drug efficacy for cognitive enhancers. The approval of new treatments for cognitive impairments would therefore require evidence that the cognitive improvements are clinically meaningful15. As a result, clinical trials of cognitive enhancing treatments in schizophrenia now must demonstrate improvement on a standard cognitive measure and improvement on a measure indicating that any cognitive improvements have meaningful benefit to the patient’s functioning. This is known as a ‘co primary requirement’. The MATRICS project made very specific recommendations about which standard cognitive measures would indicate cognitive change, and developed a battery of tests for this purpose.

Although the MATRICS group validated a cognitive outcomes battery, the MATRICS Consensus Cognitive Battery (MCCB), they did not recommend that changes in real world functioning should be a prerequisite for the approval of a treatment aimed at cognition. This argument was based on the following concerns: 1) There are many domains of real world functioning in schizophrenia, such as the patient’s ability to hold employment, live independently, and maintain social relationships. 2) Changes in these domains might not be observed in a treatment study because they are likely to take longer than the duration of a typical clinical trial. 3) Real world functional change is dependent upon a variety of circumstances unrelated to treatment, such as whether a patient is receiving disability payments17. As a result, the MATRICS group recommended that clinical meaningfulness be demonstrated through the use of tools measuring the potential to demonstrate real world functional improvements associated with cognitive change. At that time, the MATRICS group made no firm recommendations regarding what measure or measures should be used as the ‘co primary measure’, choosing instead to make “soft” recommendations about the types of co primary measures that might be used in clinical trials18. Current co primary measures used in schizophrenia pharmaceutical trials are of two types: interview based measures and performance based measures.

Interview based measures of cognition are coprimary measures typically completed by the patient or an informant. They include the Schizophrenia Cognition Rating Scale (SCoRS), the Clinical Global Impression of Cognition in Schizophrenia (CGI-CogS), and the Cognitive Assessment Interview (CAI)18-20. These measures have only modest correlations with cognitive performance measures when self reported by patients, in that patients with serious mental illness and other CNS disorders are limited in their ability to accurately report their own cognitive abilities21. Further, although informant ratings have considerable validity, many patients with mental illnesses do not have an informant who can provide meaningful ratings18,22. When informants are available, they can be limited by selective observations of the patient's behavior or a variety of potential response biases.

Performance based measures of functional capacity are measures that require the performance of critical everyday skills in a controlled format. These measures have been found to demonstrate a closer association to cognitive test data than self reports obtained with the interview based measures described above18,21. Several performance based measures have recently been investigated. Two of these, the Maryland Assessment of Social Competence (MASC) and the Social Skills Performance Assessment (SSPA), measure socially oriented functional capacity through a rater's observation of patient behavior during role playing22-24. A more broadly focused and widely used measure of functional capacity is the University of California, San Diego Performance Based Skills Assessment (UPSA)25. The UPSA takes about 30 minutes and measures performance in several domains of everyday living, such as finances, communication, planning, and household activities. The original UPSA has been modified to create both an extended version that adds medication management (UPSA-2) and an abbreviated version examining just communication and finances (UPSA-Brief, or UPSA-B). All versions of the UPSA have been found to have substantial correlations with cognitive performance26.

The UPSA-2 and UPSA-B are currently the preferred coprimary measures, based on a systematic comparative study of their relationships with performance on the MCCB27. However, the different versions of the UPSA have several potential limitations: they lack alternate forms, they cannot be delivered remotely, and their paper and pencil format makes rapid development of alternate forms and new assessment scenarios challenging. Further, their use in repeated assessment situations such as clinical trials is limited because some patients perform close to perfectly at their initial assessment. The Virtual Reality Functional Capacity Assessment Tool (VRFCAT) was designed to address these concerns and to reliably assess functional capacity within the context of a simulated real-world environment.

There have been several previous developments in the area of computerized and virtual reality assessments. For instance, Freeman and colleagues28 developed a series of virtual reality simulations that were designed to induce paranoid ideation. In the area of functional capacity assessment, Kurtz and colleagues29 developed an apartment based virtual reality medication management simulation. Finally, a computerized version of the UPSA has also been developed30. This simulation is not a true virtual reality procedure in that an examiner is present and administers the assessments, which are completed on the computer. This test does have the potential for remote delivery, although it is conceptually quite different from a gaming based test.

The VRFCAT (hereafter referred to as the “assessment”) is a novel gaming based virtual reality measure of functional capacity that uses a realistic simulated environment to recreate several routine activities of daily living with an eye toward assessment of both baseline levels of impairment and treatment related changes in functional capacity. The assessment’s realistic, interactive, and immersive environment consists of 6 versions of 4 mini scenarios that include navigating a kitchen, getting on a bus to go to a grocery store, finding/purchasing food in a grocery store, and returning home on a bus. The assessment measures the amount of time subjects spend completing 12 different objectives (listed in Table 2) as well as how many errors the subject makes. If a subject takes too much time or commits too many errors, the subject is automatically progressed to the next objective. Subjects complete the scenarios through a progressive storyboard design. This unique high resolution software application was developed and pilot tested in the NIMH SBIR Phase 1 project with the stated goals of determining the stability and usability of the program (first portion) and measuring the test retest reliability in groups of healthy controls (second portion). The software was developed in collaboration with Virtual Heroes, Inc., a division of Applied Research Associates, Inc., and Phase 1 funding also supported pilot testing of the assessment.
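The automatic progression rule described above can be illustrated with a minimal Python sketch. It is not the VRFCAT's actual implementation: the names `ObjectiveResult` and `score_objective`, and the limits `max_seconds` and `max_errors`, are hypothetical, and the text does not state the real thresholds.

```python
from dataclasses import dataclass


@dataclass
class ObjectiveResult:
    objective: int          # objective number, 1 through 12 (see Table 2)
    seconds: float          # time spent on the objective
    errors: int             # errors committed on the objective
    forced_progress: bool   # True if the subject was moved on automatically


def score_objective(objective, seconds, errors,
                    max_seconds=300.0, max_errors=10):
    """Record time and errors and flag automatic progression.

    max_seconds and max_errors are placeholder limits chosen for
    illustration only; they are not taken from the assessment.
    """
    forced = seconds >= max_seconds or errors >= max_errors
    return ObjectiveResult(objective, seconds, errors, forced)


# A subject who finishes within both limits is not flagged.
print(score_objective(1, seconds=42.0, errors=1))
# A subject who exceeds the (hypothetical) time limit is flagged.
print(score_objective(7, seconds=400.0, errors=0))
```

Keeping the flag separate from the raw time and error counts is one possible design choice; it lets an analyst decide later how forced progressions should be treated.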

Phase 1 usability and reliability pilot testing examined a sample of 102 healthy controls from Durham, North Carolina. The study was approved by the Western IRB and all participants signed an informed consent form. The average age of the subjects included in the reliability portion of the study was 38.1 with a standard deviation of 12.98. The sample was 61% female and 59% Caucasian, 39% African American and 2% other ethnicity. Reliability findings from the Phase 1 pilot testing met expectations with a test-retest ICC of 0.61 and Pearson r of 0.67 despite the use of multiple test versions and relatively few research participants per version. In a previous study with a much larger sample (n=195) of schizophrenia patients, we had found a single form test retest reliability for the UPSA-B of ICC = 0.76 at a follow up between 6 weeks and 6 months31.

The assessment is currently being tested in an SBIR Phase 2 project that will validate the assessment against other measures of functional capacity and cognition, and examine the ability of this procedure to discriminate a population with schizophrenia from a healthy control group. Among the goals of the Phase 2 study are the development of normative standards for healthy controls’ performance and an understanding of the extent of impairment in people with schizophrenia.

Protocol

1. Installing the Assessment

- Insert the assessment software into a computer that meets the requirements in the Equipment Table.

- If the folder does not automatically open, go to “My Computer” and open the CD Drive

- Double click “NeuroCog.build.319”

- Double click “Installer”

- Double click “Setup.exe”

- Click the “Next” button at the bottom of the setup screen

- Click “Agree” on the licensing agreement

- Click “Next”

- Click “Next”

- Click “Install”

- Click “Finish”

2. Setting-up the Study Room

- Install the assessment on a computer in a quiet room that is free of distractions. Make sure that the subject does not have access to any materials that can be used to take notes.

- Ensure that the computer is at a table or desk at a comfortable sitting height. Place a wired mouse on the table with enough room for the subject to rest their arm in order to prevent fatigue.

- Plug in the external speakers prior to starting the program.

- Set up two chairs, one directly in front of the computer for the subject and one to the side and slightly behind the other chair for the administrator to sit in. A rater should be present at all times during the administration of the assessment.

- Prior to administering the assessment, set up the program.

- Double Click on the icon labeled “Play VRFCAT.”

- If this is a returning subject, enter in the previously created Patient ID and Password and click “Confirm.” If this is a new subject, click New Profile and then create a new Patient ID and Password for the subject and click “Confirm.”

- Type in the Site Number, select the subject’s Date of Birth, type in the Age, select the subject’s Handedness and Gender, type in the Rater Initials, select the Study,* and select the Visit*. Then click “Confirm.” NOTE: *These elements can be customized. Please see the assessment manual for further information.

3. Administering the Assessment

- To initiate the administration of the task, sit the subject down at the chair directly in front of the computer. If this is the subject’s first time with the assessment, use the default Scenario: “Tutorial” and the default Mini Scenario: “Apartment.”

- If this is not the subject’s first time with the assessment select the appropriate test version from the Scenario drop down menu and click “Confirm.” Please note that the previous version the subject completed will have turned from green to red. This will help to ensure that the same version is not administered twice to a subject.

- A rater should remain present during the entire administration of the assessment. If the subject asks what they are supposed to be doing, direct the subject to click on the audio icon in the top left corner. If the subject stops paying attention or at any point looks away from the screen, redirect the subject back to the task.

- Take the necessary precautions to try to ensure that the subject will not need a break during the administration of the assessment, but in extreme circumstances, the assessment can be paused and continued at a later time. To do so, press the “Esc” key on the keyboard, and a menu labeled Game Paused will appear.

- In the event that the subject is taking a short break, simply click “Main Menu,” type in the Admin Code, “admin,” and click “Yes.” The assessment will then return to the screen for selecting the Mini Scenario at which the subject should resume when they return. All completed Mini Scenarios will have turned from green to red.

- If the subject is unable to complete the entire version that day, and must come back another day, click “Exit” on the Game Paused menu, type in the Admin Code, “admin,” and click “Yes.” This will shut down the program. Once the subject returns, log back into the program with the correct Patient ID and password, and select the appropriate Scenario and Mini Scenario from the drop down menus. All completed Mini Scenarios will have turned from green to red.

- Once the subject has completed all four Mini Scenarios (Apartment, Bus to Store, Store, and Bus to Apartment) a screen saying “Congratulations! You have completed the task! Please let your test administrator know that you have finished,” will appear. Click “Continue,” and on the next screen click “Begin New Scenario.” In the box labeled “Admin Code” type in “admin” and click “Yes.”

- If the subject completed the test version instead of the tutorial, click “Exit Application.” In the box labeled “Admin Code” type in “admin,” click “Yes,” and the session is complete.

- Select the appropriate test version from the Scenario drop down menu and click “Confirm.” NOTE: The Scenario labeled Tutorial should now have changed from green to red because the subject has completed the entire Scenario.

- Once the subject has completed all four Mini Scenarios a screen will appear that says, “Congratulations! You have completed the task! Please let your test administrator know that you have finished.” Click “Continue,” and on the next screen click “Exit Application.” In the box labeled “Admin Code” type in “admin” and click “Yes,” and the session is complete.
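The version selection rule in the steps above (tutorial first, and never the same test version twice) can be sketched in Python as follows. This is a hedged illustration rather than the software's own logic: the function `next_scenario` and the labels "Scenario 1" through "Scenario 6" are assumptions, and the random choice simply mirrors the random version assignment used in the pilot study.

```python
import random

# Six test versions; the exact menu labels are assumed for illustration.
TEST_VERSIONS = [f"Scenario {i}" for i in range(1, 7)]


def next_scenario(completed, rng=random):
    """Pick the next scenario for a subject.

    completed: set of scenario names the subject has already finished
    (the entries shown in red in the Scenario drop down menu).
    """
    if "Tutorial" not in completed:
        return "Tutorial"  # first-time subjects always start here
    remaining = [v for v in TEST_VERSIONS if v not in completed]
    if not remaining:
        raise ValueError("all six test versions have been administered")
    return rng.choice(remaining)


print(next_scenario(set()))                         # Tutorial
print(next_scenario({"Tutorial", "Scenario 1"}))    # any version except Scenario 1
```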

4. Potential Deviations

- If the subject attempts to complete any of the objectives out of order, remind them that they must complete the task described in the instructions at the top of the screen before moving onto the next task.

- If the subject has used up all of their virtual change during the Tutorial scenario and is unable to complete the final objective, escape out of the game using the above listed methods to return to the Scenario selection menu. Encourage the subject to try not to use up all of their coins during the early objectives, and readminister the entire tutorial.

- If the subject has used up all of their virtual change during any of the other scenarios and is unable to complete the final objective, escape out of the game using the above listed methods to return to the Scenario selection menu. Treat this data point as missing, and do not readminister any of the scenarios.
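The two out-of-currency rules above reduce to a small decision, sketched here in Python. The function name `handle_out_of_currency` and its return format are hypothetical and serve only to make the rule explicit.

```python
def handle_out_of_currency(scenario):
    """Return (readminister, mark_missing) for a subject who ran out of
    virtual change before the final objective.

    Tutorial: coach the subject and readminister the entire tutorial.
    Any test version: record the data point as missing; do not readminister.
    """
    if scenario == "Tutorial":
        return (True, False)
    return (False, True)


print(handle_out_of_currency("Tutorial"))     # (True, False)
print(handle_out_of_currency("Scenario 2"))   # (False, True)
```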

Representative Results

During the development phase, 102 healthy controls were tested with 1 of 6 randomly selected versions of the assessment and then asked to return for retest with a different randomly selected version 7 to 14 days later. 90 of those 102 returned for testing with a different version of the application. Due to an initial data management problem that was later rectified, only 69 of the 90 who returned had complete data sets. A significant outlier was discovered and removed from data analysis, leaving 68 completed data sets.

During the initial analysis of the data, it was observed that one of the versions of the application, Scenario 4, had significant outlying data and did not perform comparably to the other five versions. As a result, data from Scenario 4 were excluded from the pilot analyses, including the final analyses for this measure. The final sample size was n=46 (see Table 1 for demographic information). Scenario 4 was subsequently updated for the current release of the assessment, as described in the Discussion.
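The sample flow reported above can be checked with a few lines of arithmetic. The Python sketch below uses only the counts given in the text; the one inferred figure, the number of data sets dropped with Scenario 4, assumes that the Scenario 4 exclusion is the only step between 68 and 46.

```python
# Counts reported in the text for the Phase 1 pilot.
enrolled = 102          # healthy controls tested at Visit 1
returned = 90           # returned for retest with a different version
complete = 69           # returned with complete data sets
after_outlier = complete - 1   # one significant outlier removed
final_n = 46            # after excluding Scenario 4 data

# Inferred, not stated: data sets dropped because they involved Scenario 4.
scenario4_excluded = after_outlier - final_n


def pct(part, whole):
    """Percentage rounded to the nearest whole number."""
    return round(100 * part / whole)


print(f"Returned for retest: {returned}/{enrolled} ({pct(returned, enrolled)}%)")
print(f"After outlier removal: {after_outlier}")
print(f"Dropped with Scenario 4 (inferred): {scenario4_excluded}")
print(f"Final sample: {final_n}/{enrolled} ({pct(final_n, enrolled)}%)")
```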

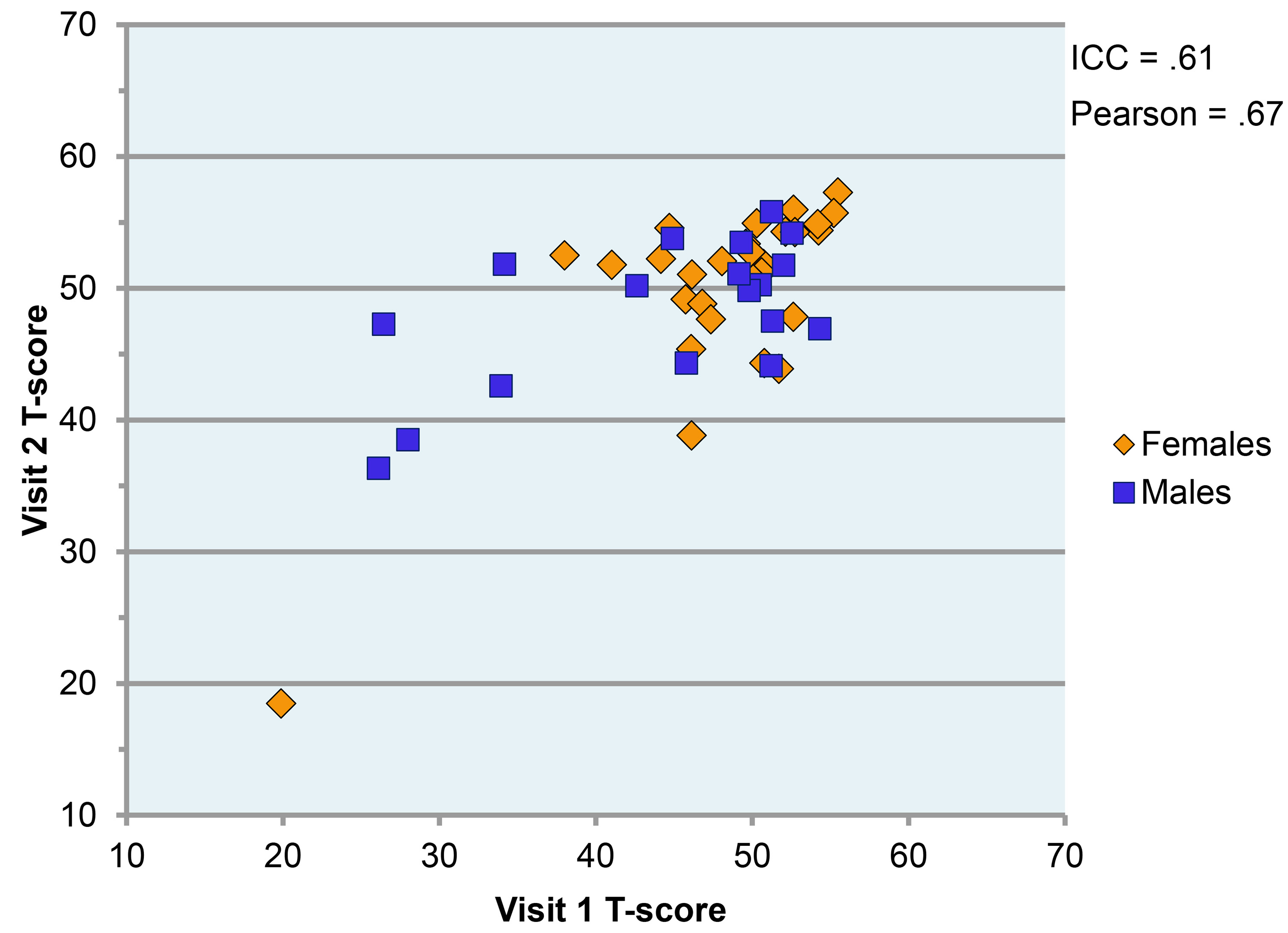

We performed a factor analysis (unrotated principal components) of performance on the 12 objectives of the assessment that are presented in Table 2. Table 3 shows the Factor Analysis that explained 91% of the variance and revealed three factors which were labeled: Reasoning and Problem Solving, Speed of Processing, and Working Memory. The domains were combined into a composite and the test-retest data yielded an intraclass correlation (ICC) of 0.61 and Pearson’s correlation of 0.67. A scatterplot depicting performance at both visits is shown in Figure 1 below.
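For readers who want to reproduce this kind of test-retest summary on their own data, the following Python sketch computes a Pearson correlation and an intraclass correlation for two visits. The text does not state which ICC form was used; the sketch assumes the two-way random effects, absolute agreement, single measure form, often written ICC(2,1). The function names and the example scores are invented for illustration and are not study data.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def icc_2_1(visit1, visit2):
    """ICC(2,1): two-way random effects, absolute agreement, single measure."""
    n, k = len(visit1), 2
    rows = list(zip(visit1, visit2))
    grand = (sum(visit1) + sum(visit2)) / (n * k)
    row_means = [(a + b) / k for a, b in rows]
    col_means = [sum(visit1) / n, sum(visit2) / n]
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)   # between subjects
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)   # between visits
    ss_total = sum((v - grand) ** 2 for pair in rows for v in pair)
    ss_err = ss_total - ss_rows - ss_cols
    msr = ss_rows / (n - 1)
    msc = ss_cols / (k - 1)
    mse = ss_err / ((n - 1) * (k - 1))
    return (msr - mse) / (msr + (k - 1) * mse + k * (msc - mse) / n)


# Invented composite scores for five subjects at two visits.
v1 = [0.2, -0.5, 1.1, 0.0, -0.8]
v2 = [0.4, -0.1, 0.9, 0.3, -0.6]
print(round(pearson_r(v1, v2), 2), round(icc_2_1(v1, v2), 2))
```

Because this ICC form penalizes systematic shifts between visits (for example, practice effects) while Pearson's r does not, an ICC lower than r, as reported here, is consistent with some change in mean performance from Visit 1 to Visit 2.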

Towards the end of the study, a review of the data showed that subjects were making errors on Visit 1 that they did not make on Visit 2. For the remaining 13 subjects, we had each subject complete the tutorial before the test version, and the data suggested that the tutorial helped to wash out some of the main practice effects that had been seen. We therefore recommend that the tutorial always be administered before a test version the first time the assessment is administered.

| Characteristic | Subjects (N=46) |
| --- | --- |
| Age | 38.1 (SD=12.98; Range=19-68) |
| Gender | Female – 28 (61%); Male – 18 (39%) |
| Race | Caucasian – 27 (59%); African American – 18 (39%); Other – 1 (2%) |

Table 1. Pilot Study Demographic Information

| Objective | Description |
| --- | --- |
| 1 | Pick up the Recipe |
| 2 | Search for Ingredients |
| 3 | Cross Off Correct Ingredients & Pick up Bus Schedule |
| 4 | Pick up the Billfold |
| 5 | Exit the Apartment |
| 6 | Catch the Bus |
| 7 | Pay for the Bus |
| 8 | Select an Aisle |
| 9 | Shop for Groceries |
| 10 | Pay for Groceries |
| 11 | Catch the Bus |
| 12 | Pay for the Bus |

Table 2. VRFCAT Objectives

| Objective | Factor 1 (Reasoning/Problem Solving) | Factor 2 (Speed of Processing) | Factor 3 (Working Memory) |
| --- | --- | --- | --- |
| Time to add bus fare | 0.92 | 0.19 | 0.14 |
| Errors adding bus fare | 0.92 | 0.12 | 0.16 |
| Time to add grocery money | 0.87 | 0.16 | 0.09 |
| Errors adding grocery money | 0.79 | 0.13 | 0.12 |
| No. times bus schedule accessed | 0.50 | 0.34 | 0.25 |
| Errors adding bus fare | 0.48 | 0.24 | 0.08 |
| Time to shop for items | 0.29 | 0.82 | 0.28 |
| Errors shopping for items | 0.11 | 0.82 | 0.21 |
| Time to board bus | 0.25 | 0.78 | 0.01 |
| Time to board bus | 0.13 | 0.69 | -0.06 |
| Time to cross off items | 0.21 | 0.04 | 0.90 |
| Errors crossing off items | 0.31 | 0.01 | 0.86 |
| No. times recipe accessed | 0.00 | 0.20 | 0.68 |

Table 3. Pilot Study Factor Analysis

Figure 1. Relationship between Performance at Visit 1 and Visit 2 by Gender

Discussion

The pilot study showed encouraging results, with the test retest data yielding an intraclass correlation (ICC) of 0.61 and a Pearson’s correlation of 0.67.

During the initial analysis of the data, it was observed that one of the versions of the application, Scenario 4, had significant outlying data and did not perform in a manner consistent with the other five versions. Based on the qualitative data received, we hypothesized that the location of one of the ingredients on the recipe in Scenario 4 was causing the outlying data. The ingredient was updated to more closely resemble the other versions. Validation of this version is currently underway as part of an NIMH SBIR Phase II Grant.

For software troubleshooting, please contact the company who developed the assessment.

The protocol for administering the assessment has several limitations. The assessment requires the use of a computer. As currently configured, the technique is most successful when an administrator is present during the entire administration of the assessment. The subject who is completing the assessment should not be left alone at any point. The conclusions drawn from the pilot study are also limited by several factors. The sample was a group of healthy controls from one site in Durham, North Carolina. The sample was mainly Caucasian (59%) and female (61%), and it is not representative of the population of people with schizophrenia. The sample size was also relatively small (n=46).

Currently the assessment is being validated in several different studies. The National Institute of Mental Health has provided funding to validate the assessment in a sample of 160 patients and 160 healthy controls. The validation study will examine the validity, sensitivity, and reliability of the assessment as a primary measure of functional capacity in schizophrenia. It will also examine the assessment’s ability to quantify changes in functional capacity by comparing it to the UPSA-2-VIM, determining the association between performance on the assessment and performance on the MCCB, and examining the association between the assessment and the Schizophrenia Cognition Rating Scale (SCoRS).

The assessment is also a part of the Validation of Everyday Real world Outcomes (VALERO) study, phase 2. The VALERO study recruited patients with schizophrenia who in addition to the assessment received a modified version of the MCCB32, the Wide Range Achievement Test, 3rd edition (WRAT-3)33, and the UPSA-B34. Further, this assessment is being examined in conjunction with a set of complementary functional scenarios (using an automated teller machine; refilling a prescription; and understanding instructions from a physician), in another federally funded study of older people with schizophrenia.

Once validated, the assessment is intended to become a gold standard measure of functional capacity, specifically for patients with schizophrenia in clinical drug trials. The easy administration will also permit the assessment to be used in a clinical setting, allowing clinicians to measure change in a patient over time. Finally, remote delivery is feasible and would allow for assessment in participants’ homes, in the event that they were self administering an intervention without clinic visits.

It is very important to pay close attention to step 3.1.1 of the protocol. The tutorial should always be administered the first time a subject receives the assessment, and in order to prevent practice effects, it is imperative that the correct version of the assessment be administered. Step 3.1.3 points out the importance of limiting breaks as much as possible so that the assessment is administered in a consistent manner with few interruptions. As steps 4.1 and 4.2 point out, if the subject has used up all of their coins during the Tutorial scenario and is unable to complete the final objective, escape out of the game using the above listed methods to return to the Scenario selection menu. Encourage the subject to try not to use up all of their virtual currency during the early objectives, and readminister the entire tutorial. If the subject has used up all of their virtual currency during any of the other versions and is unable to complete the final objective, escape out of the game using the above listed methods to return to the Scenario selection menu. Treat this data point as missing, and do not readminister any of the scenarios.

Disclosures

The authors have nothing to disclose.

Acknowledgements

This work was supported by the National Institute of Mental Health Grant Number 1 R43MH0842-01A2 and the National Institute of Mental Health Grant Number 2 R44MH084240-02.

Materials

| Computer | N/A | N/A | Computer requirements: · Windows 2000/XP or compatible system · 3-D graphics card with 128 MB memory · 1.6 GHz processor or equivalent · 512 MB RAM · 3.5GB of uncompressed hard disk space · DirectX 9.0 or compatible soundcard · 56kbps modem or better network connection · Motherboard/ soundcard containing Dolby Digital · Interactive Content Encoder |

| Hard Wired Mouse | N/A | N/A | Any functional hard wired mouse that fits comfortably into the subject’s hand will suffice |

| External Speakers | N/A | N/A | Any functional external speakers that allow the volume to be adjusted will suffice |

| VRFCAT Software | NeuroCog Trials, Inc. | N/A | Visit www.neurocogtrials.com for further information |

References

- Murray, C. J., Lopez, A. D. Evidence-based health policy-Lessons from the global burden of disease study. Science. 274, 740-743 (1996).

- Wu, E. Q., et al. The economic burden of schizophrenia in the United States in 2002. J Clin Psychiatry. 66 (9), 1122-1129 (2005).

- Saykin, A. J., et al. Neuropsychological deficits in neuroleptic naïve patients with first-episode schizophrenia. Arch Gen Psychiatry. 51 (2), 124-131 (1994).

- Fuller, R., Nopoulos, P., Arndt, S., O’Leary, D., Ho, B. C., Andreasen, N. C. Longitudinal assessment of premorbid cognitive functioning in patients with schizophrenia through examination of standardized scholastic test performance. Am J Psychiatry. 159 (7), 1183-1189 (2002).

- Hawkins, K. A., et al. Neuropsychological status of subjects at high risk for a first episode of psychosis. Schizophr Res. 67 (2-3), 115-122 (2004).

- Green, M. F., Braff, D. L. Translating the basic and clinical cognitive neuroscience of schizophrenia to drug development and clinical trials of antipsychotic medications. Biol Psychiatry. 49 (4), 374-384 (2001).

- Heinrichs, R. W., Zakzanis, K. K. Neurocognitive deficit in schizophrenia: A quantitative review of the evidence. Neuropsychology. 12 (3), 426-445 (1998).

- Bilder, R. M., et al. Neuropsychology of first-episode schizophrenia: Initial characterization and clinical correlates. Am J Psychiatry. 157 (4), 549-559 (2000).

- Green, M. F. What are the functional consequences of neurocognitive deficits in schizophrenia. Am J Psychiatry. 153 (3), 321-330 (1996).

- Harvey, P. D., Keefe, R. S. E. Studies of cognitive change in patients with schizophrenia following novel antipsychotic treatment. Am J Psychiatry. 158 (2), 176-184 (2001).

- Keefe, R. S. E., Silva, S. G., Perkins, D. O., Lieberman, J. A. The effects of atypical antipsychotic drugs on neurocognitive impairment in schizophrenia: A review and meta-analysis. Schizophrenia Bull. 25 (2), 201-222 (1999).

- Woodward, N. D., Purdon, S. E., Meltzer, H. Y., Zald, D. H. A meta-analysis of neuropsychological change to clozapine, olanzapine, quetiapine, and risperidone in schizophrenia. Int J Neuropsychopharmacol. 8 (3), 457-472 (2005).

- Keefe, R. S. E., et al. Neurocognitive effects of antipsychotic medications in patients with chronic schizophrenia in the CATIE trial. Arch Gen Psychiatry. 64 (6), 633-647 (2007).

- Keefe, R. S. E., Fox, K. H., Harvey, P. D., Cucchiaro, J., Siu, C., Loebel, A. Characteristics of the MATRICS consensus cognitive battery in a 29-site antipsychotic schizophrenia clinical trial. Schizophr Res. 125 (2-3), 161-168 (2011).

- Buchanan, R. W., et al. A summary of the FDA-NIMH-MATRICS workshop on clinical trial designs for neurocognitive drugs for schizophrenia. Schizophrenia Bull. 31 (6), 5-19 (2005).

- Buchanan, R. W., et al. The 2009 schizophrenia PORT psychopharmacological treatment recommendations and summary statements. Schizophrenia Bull. 36 (1), 71-93 (2010).

- Rosenheck, R., et al. Barriers to employment for people with schizophrenia. Am J Psychiatry. 163 (3), 411-417 (2006).

- Green, M. F., et al. Functional co-primary measures for clinical trials in schizophrenia: Results from the MATRICS psychometric and standardization study. Am J Psychiatry. 165 (2), 221-228 (2008).

- Keefe, R. S. E., Poe, M., Walker, T. M., Harvey, P. D. The relationship of the Brief Assessment of Cognition in Schizophrenia (BACS) to functional capacity and real-world functional outcome. J Clin Exp Neuropsychol. 28 (2), 260-269 (2006).

- Ventura, J., et al. The cognitive assessment interview (CAI): Development and validation of an empirically derived, brief interview-based measure of cognition. Schizophr Res. 121 (1-3), 24-31 (2010).

- Bowie, C. R., Twamley, E. W., Anderson, H., Halpern, B., Patterson, T. L., Harvey, P. D. Self-assessment of functional status in schizophrenia. J Psychiatr Res. 41 (12), 1012-1018 (2007).

- Harvey, P. D., Velligan, D. I., Bellack, A. S. Performance-based measures of functional skills: Usefulness in clinical treatment studies. Schizophrenia Bull. 33 (5), 1138-1148 (2007).

- Bellack, A. S., Sayers, M., Mueser, K. T., Bennett, M. Evaluation of social problem solving in schizophrenia. J Abnorm Psychol. 103 (2), 371-378 (1994).

- Patterson, T. L., Moscona, S., McKibbin, C. L., Davidson, K., Jeste, D. V. Social skills performance assessment among older patients with schizophrenia. Schizophr Res. 48 (2-3), 351-2001 (2001).

- Patterson, T. L., Goldman, S., McKibbin, C. L., Hughs, T., Jeste, D. V. UCSD performance-based skills assessment: Development of a new measure of everyday functioning for severely mentally ill adults. Schizophrenia Bull. 27 (2), 235-245 (2001).

- Leifker, F. R., Patterson, T. L., Heaton, R. K., Harvey, P. D. Validating measures of real-world outcome: the results of the VALERO Expert Survey and RAND Panel. 37 (2), (2011).

- Green, M. F., et al. Evaluation of functionally-meaningful measures for clinical trials of cognition enhancement in schizophrenia. Am J Psychiatry. 168 (4), 400-407 (2011).

- Freeman, D. Studying and treating schizophrenia using virtual reality: a new paradigm. Schizophr Bull. 34 (4), 605-610 (2008).

- Kurtz, M. M., Baker, E., Pearlson, G. D., Astur, R. S. A virtual reality apartment as a measure of medication management skills in patients with schizophrenia: a pilot study. Schizophr Bull. 33 (5), 1162-1170 (2007).

- Moore, R. C., et al. Initial validation of a computerized version of the UCSD Performance-Based Skills Assessment (C-UPSA) for assessing functioning in schizophrenia. Schizophr Res. 144 (1-3), 87-92 (2013).

- Harvey, P. D., et al. Factor structure of neurocognition and functional capacity in schizophrenia: A multidimensional examination of temporal stability. J Int Neuropsychol Soc. 19 (6), 656-663 (2013).

- Nuechterlein, K. H., et al. The MATRICS Consensus Cognitive Battery, part 1: Test selection, reliability, and validity. Am J Psychiatry. 165 (2), 214-220 (2008).

- Wilkinson, G. S. Wide Range Achievement Test: Third Edition. (1993).

- Mausbach, B. T., et al. Usefulness of the UCSD Performance-Based Skills Assessment (UPSA) for predicting residential independence in patients with chronic schizophrenia. J Psychiatr Res. 42 (4), 320-327 (2008).