Tracking Rats in Operant Conditioning Chambers Using a Versatile Homemade Video Camera and DeepLabCut

Summary

This protocol describes how to build a small and versatile video camera, and how to use videos obtained from it to train a neural network to track the position of an animal inside operant conditioning chambers. This is a valuable complement to standard analyses of data logs obtained from operant conditioning tests.

Abstract

Operant conditioning chambers are used to perform a wide range of behavioral tests in the field of neuroscience. The recorded data is typically based on the triggering of lever and nose-poke sensors present inside the chambers. While this provides a detailed view of when and how animals perform certain responses, it cannot be used to evaluate behaviors that do not trigger any sensors. As such, assessing how animals position themselves and move inside the chamber is rarely possible. To obtain this information, researchers generally have to record and analyze videos. Manufacturers of operant conditioning chambers can typically supply their customers with high-quality camera setups. However, these can be very costly and do not necessarily fit chambers from other manufacturers or other behavioral test setups. The current protocol describes how to build an inexpensive and versatile video camera using hobby electronics components. It further describes how to use the image analysis software package DeepLabCut to track the status of a strong light signal, as well as the position of a rat, in videos gathered from an operant conditioning chamber. The former is a great aid when selecting short segments of interest in videos that cover entire test sessions, and the latter enables analysis of parameters that cannot be obtained from the data logs produced by the operant chambers.

Introduction

In the field of behavioral neuroscience, researchers commonly use operant conditioning chambers to assess a wide range of different cognitive and psychiatric features in rodents. While there are several different manufacturers of such systems, they typically share certain attributes and have an almost standardized design1,2,3. The chambers are generally square- or rectangle-shaped, with one wall that can be opened for placing animals inside, and one or two of the remaining walls containing components such as levers, nose-poke openings, reward trays, response wheels and lights of various kinds1,2,3. The lights and sensors present in the chambers are used to both control the test protocol and track the animals’ behaviors1,2,3,4,5. The typical operant conditioning systems allow for a very detailed analysis of how the animals interact with the different operanda and openings present in the chambers. In general, any occasions where sensors are triggered can be recorded by the system, and from this data users can obtain detailed log files describing what the animal did during specific steps of the test4,5. While this provides an extensive representation of an animal’s performance, it can only be used to describe behaviors that directly trigger one or more sensors4,5. As such, aspects related to how the animal positions itself and moves inside the chamber during different phases of the test are not well described6,7,8,9,10. This is unfortunate, as such information can be valuable for fully understanding the animal’s behavior. For example, it can be used to clarify why certain animals perform poorly on a given test6, to describe the strategies that animals might develop to handle difficult tasks6,7,8,9,10, or to appreciate the true complexity of supposedly simple behaviors11,12. To obtain such detailed information, researchers commonly turn to manual analysis of videos6,7,8,9,10,11.

When recording videos from operant conditioning chambers, the choice of camera is critical. The chambers are commonly located in isolation cubicles, with protocols frequently making use of steps where no visible light is shining3,6,7,8,9. Therefore, the use of infra-red (IR) illumination in combination with an IR-sensitive camera is necessary, as it allows visibility even in complete darkness. Further, the space available for placing a camera inside the isolation cubicle is often very limited, meaning that one benefits strongly from having small cameras that use lenses with a wide field of view (e.g., fish-eye lenses)9. While manufacturers of operant conditioning systems can often supply high-quality camera setups to their customers, these systems can be expensive and do not necessarily fit chambers from other manufacturers or setups for other behavioral tests. However, a notable benefit over using stand-alone video cameras is that these setups can often interface directly with the operant conditioning systems13,14. Through this, they can be set up to only record specific events rather than full test sessions, which can greatly aid in the analysis that follows.

The current protocol describes how to build an inexpensive and versatile video camera using hobby electronics components. The camera uses a fisheye lens, is sensitive to IR illumination and has a set of IR light emitting diodes (IR LEDs) attached to it. Moreover, it is built to have a flat and slim profile. Together, these aspects make it ideal for recording videos from most commercially available operant conditioning chambers as well as other behavioral test setups. The protocol further describes how to process videos obtained with the camera and how to use the software package DeepLabCut15,16 to aid in extracting video sequences of interest as well as tracking an animal’s movements therein. This partially circumvents the drawback of using a stand-alone camera over the integrated solutions provided by manufacturers of operant conditioning systems, and offers a complement to manual scoring of behaviors.

Efforts have been made to write the protocol in a general format to highlight that the overall process can be adapted to videos from different operant conditioning tests. To illustrate certain key concepts, videos of rats performing the 5-choice serial reaction time test (5CSRTT)17 are used as examples.

Protocol

All procedures that include animal handling have been approved by the Malmö-Lund Ethical committee for animal research.

1. Building the video camera

NOTE: A list of the components needed for building the camera is provided in the Table of Materials. Also refer to Figure 1, Figure 2, Figure 3, Figure 4, Figure 5.

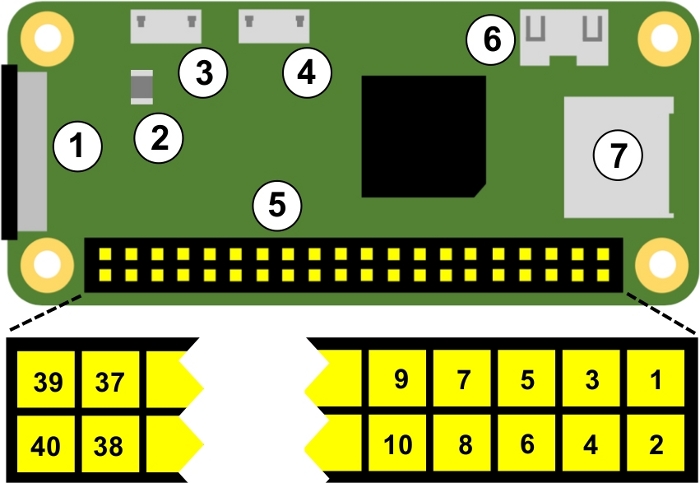

- Attach the magnetic metal ring (that accompanies the fisheye lens package) around the opening of the camera stand (Figure 2A). This will allow the fisheye lens to be placed in front of the camera.

- Attach the camera module to the camera stand (Figure 2B). This will give some stability to the camera module and offer some protection to the electronic circuits.

- Open the camera ports on the camera module and microcomputer (Figure 1) by gently pulling on the edges of their plastic clips (Figure 2C).

- Place the ribbon cable in the camera ports, so that the silver connectors face the circuit boards (Figure 2C). Lock the cable in place by pushing in the plastic clips of the camera ports.

- Place the microcomputer in the plastic case and insert the listed micro SD card (Figure 2D).

NOTE: The micro SD card will function as the microcomputer’s hard drive and contains a full operating system. The listed micro SD card comes with an installation manager preinstalled on it (New Out Of Box Software, NOOBS). As an alternative, one can write an image of the latest version of the microcomputer’s operating system (Raspbian or Raspberry Pi OS) to a generic micro SD card. For aid with this, please refer to official web resources18. It is preferable to use a class 10 micro SD card with 32 GB of storage space. Larger SD cards might not be fully compatible with the listed microcomputer.

- Connect a monitor, keyboard and a mouse to the microcomputer, and then connect its power supply.

- Follow the steps as prompted by the installation guide to perform a full installation of the microcomputer’s operating system (Raspbian or Raspberry Pi OS). When the microcomputer has booted, ensure that it is connected to the internet either through an ethernet cable or Wi-Fi.

- Follow the steps outlined below to update the microcomputer’s preinstalled software packages.

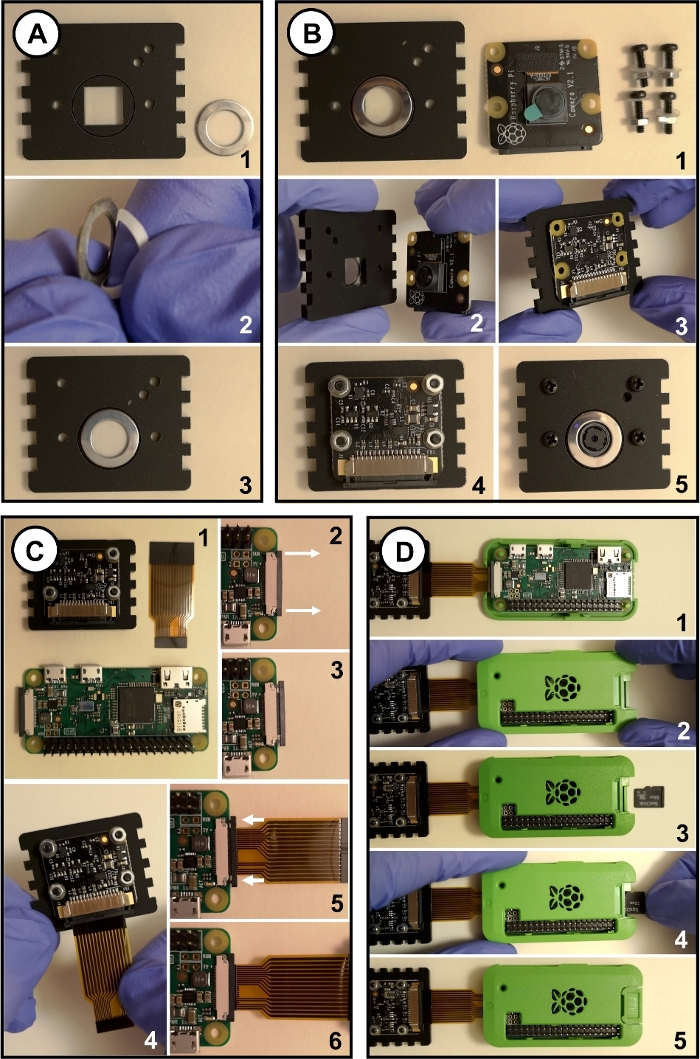

- Open a terminal window (Figure 3A).

- Type “sudo apt-get update” (excluding quotation marks) and press the Enter key (Figure 3B). Wait for the process to finish.

- Type “sudo apt full-upgrade” (excluding quotation marks) and press the Enter key. Confirm when prompted and wait for the process to finish.

- Under the Start menu, select Preferences and then Raspberry Pi Configuration (Figure 3C). In the opened window, go to the Interfaces tab and click to Enable the Camera and I2C. This is required for having the microcomputer work with the camera and IR LED modules.

- Rename Supplementary File 1 to “Pi_video_camera_Clemensson_2019.py”. Copy it onto a USB memory stick, and subsequently into the microcomputer’s /home/pi folder (Figure 3D). This file is a Python script, which enables video recordings to be made with the button switches that are attached in step 1.13.

- Follow the steps outlined below to edit the microcomputer’s rc.local file. This makes the computer start the script copied in step 1.10 and start the IR LEDs attached in step 1.13 when it boots.

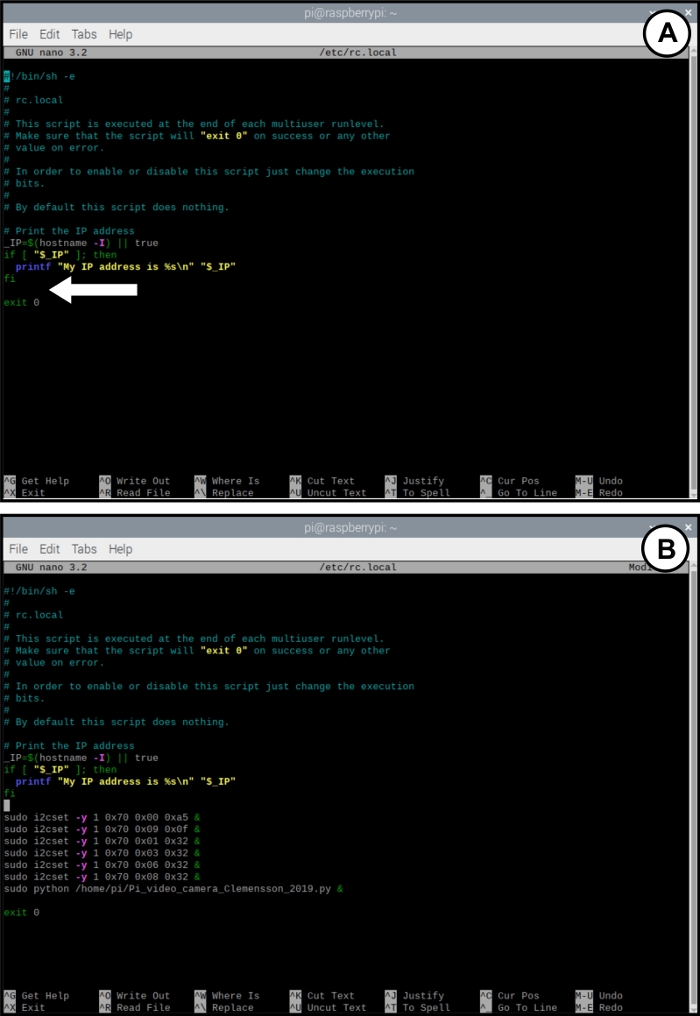

CAUTION: This auto-start feature does not reliably work with microcomputer boards other than the listed model.

- Open a terminal window, type “sudo nano /etc/rc.local” (excluding quotation marks) and press the Enter key. This opens a text file (Figure 4A).

- Use the keyboard’s arrow keys to move the cursor down to the space between “fi” and “exit 0” (Figure 4A).

- Add the following text as shown in Figure 4B, writing each string of text on a new line:

sudo i2cset -y 1 0x70 0x00 0xa5 &

sudo i2cset -y 1 0x70 0x09 0x0f &

sudo i2cset -y 1 0x70 0x01 0x32 &

sudo i2cset -y 1 0x70 0x03 0x32 &

sudo i2cset -y 1 0x70 0x06 0x32 &

sudo i2cset -y 1 0x70 0x08 0x32 &

sudo python /home/pi/Pi_video_camera_Clemensson_2019.py &

- Save the changes by pressing Ctrl + X followed by Y and Enter.

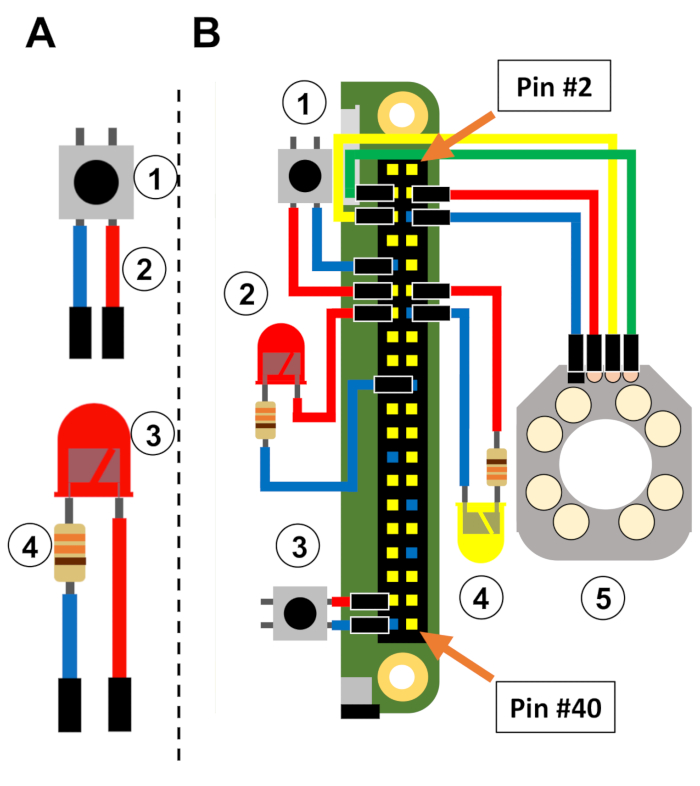

- Solder together the necessary components as indicated in Figure 5A, and as described below.

- For the two colored LEDs, attach a resistor and a female jumper cable to one leg, and a female jumper cable to the other (Figure 5A). Try to keep the cables as short as possible. Take note of which of the LED’s electrodes is the negative one (typically the short one), as this needs to be connected to ground on the microcomputer’s general-purpose input/output (GPIO) pins.

- For the two button switches, attach a female jumper cable to each leg (Figure 5A). Make the cables long for one of the switches, and short for the other.

- To assemble the IR LED module, follow instructions available on its official web resources19.

- Cover the soldered joints with shrink tubing to limit the risk of short-circuiting the components.

- Switch off the microcomputer and connect the switches and LEDs to its GPIO pins as indicated in Figure 5B, and described below.

CAUTION: Wiring the components to the wrong GPIO pins could damage them and/or the microcomputer when the camera is switched on.

- Connect one LED so that its negative end connects to pin #14 and its positive end connects to pin #12. This LED will shine when the microcomputer has booted and the camera is ready to be used.

- Connect the button switch with long cables so that one cable connects to pin #9 and the other one to pin #11. This button is used to start and stop the video recordings.

NOTE: The script that controls the camera has been written so that this button is unresponsive for a few seconds just after starting or stopping a video recording.

- Connect one LED so that its negative end connects to pin #20 and its positive end connects to pin #13. This LED will shine when the camera is recording a video.

- Connect the button switch with the short cables so that one cable connects to pin #37 and the other one to pin #39. This switch is used to switch off the camera.

- Connect the IR LED module as described in its official web resources19.
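The NOTE above mentions that the start/stop button is unresponsive for a few seconds after each press. The following is a minimal sketch of how such a lockout could work. It is not the script supplied as Supplementary File 1, and the class name `RecordingToggle`, the 3 s lockout and the injectable `clock` are assumptions made purely for illustration. No GPIO or camera calls are included.

```python
import time


class RecordingToggle:
    """Toggle a recording flag on button presses, ignoring presses during a lockout."""

    def __init__(self, lockout_s=3.0, clock=time.monotonic):
        self.lockout_s = lockout_s   # hypothetical lockout length in seconds
        self.clock = clock           # injectable clock, so the logic can be tested
        self.recording = False
        self._last_toggle = None     # time of the last accepted press

    def press(self):
        """Handle one button press; return True if the press was accepted."""
        now = self.clock()
        if self._last_toggle is not None and now - self._last_toggle < self.lockout_s:
            return False             # still inside the lockout window: ignore
        self.recording = not self.recording
        self._last_toggle = now
        return True
```

A lockout of this kind keeps a single long or bouncing press from starting and immediately stopping a recording.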

2. Designing the operant conditioning protocol of interest

NOTE: To use DeepLabCut for tracking the protocol progression in videos recorded from operant chambers, the behavioral protocols need to be structured in specific ways, as explained below.

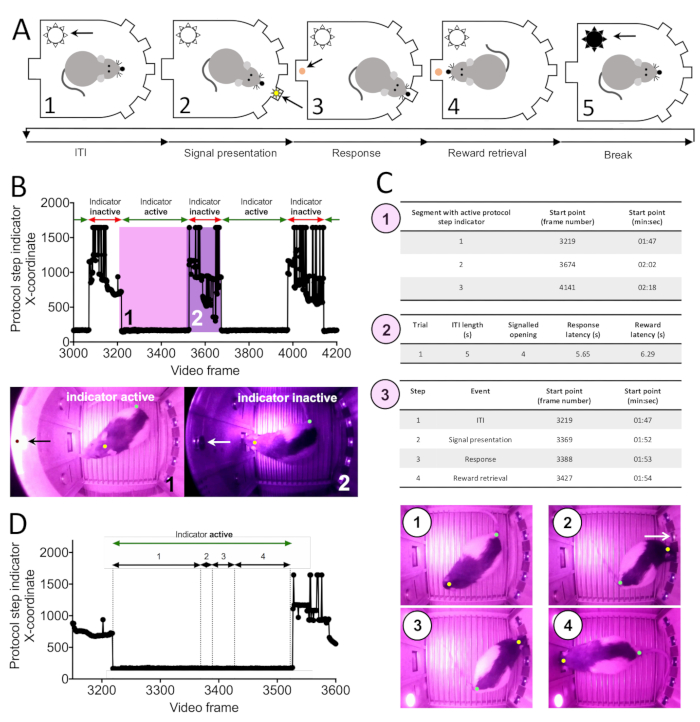

- Set the protocol to use the chamber’s house light, or another strong light signal, as an indicator of a specific step in the protocol (such as the start of individual trials, or the test session) (Figure 6A). This signal will be referred to as the “protocol step indicator” in the remainder of this protocol. The presence of this signal will allow tracking protocol progression in the recorded videos.

- Set the protocol to record all responses of interest with individual timestamps in relation to when the protocol step indicator becomes active.

3. Recording videos of animals performing the behavioral test of interest

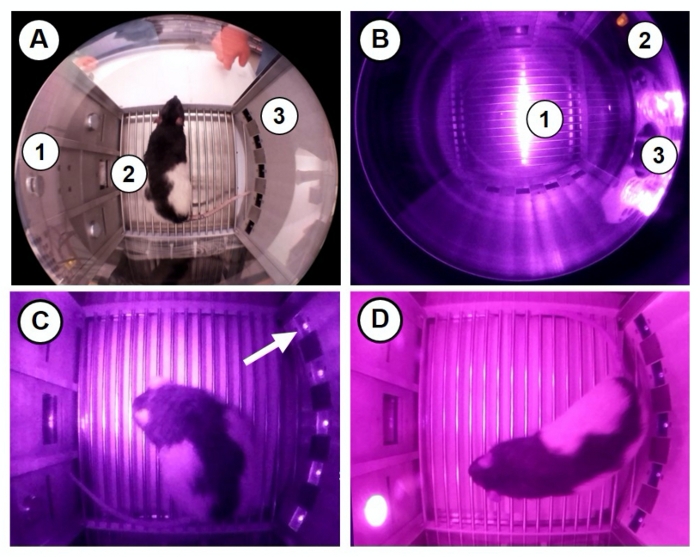

- Place the camera on top of the operant chambers, so that it records a top view of the area inside (Figure 7).

NOTE: This is particularly suitable for capturing an animal’s general position and posture inside the chamber. Avoid placing the camera’s indicator lights and the IR LED module close to the camera lens.

- Start the camera by connecting it to an electrical outlet via the power supply cable.

NOTE: Prior to first use, it is beneficial to set the focus of the camera, using the small tool that accompanies the camera module.

- Use the button connected in step 1.13.2 to start and stop video recordings.

- Switch off the camera by following these steps.

- Push and hold the button connected in step 1.13.4 until the LED connected in step 1.13.1 switches off. This initiates the camera’s shut down process.

- Wait until the green LED visible on top of the microcomputer (Figure 1) has stopped blinking.

- Remove the camera’s power supply.

CAUTION: Unplugging the power supply while the microcomputer is still running can cause corruption of the data on the micro SD card.

- Connect the camera to a monitor, keyboard, mouse and USB storage device and retrieve the video files from its desktop.

NOTE: The files are named according to the date and time when video recording was started. However, the microcomputer does not have an internal clock and only updates its time setting when connected to the internet.

- Convert the recorded videos from .h264 to .MP4, as the latter works well with DeepLabCut and most media players.

NOTE: There are multiple ways to achieve this. One is described in Supplementary File 2.
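As one possible illustration of the conversion step, the sketch below builds an FFmpeg command that places the raw .h264 stream in an .mp4 container without re-encoding. This is not necessarily the method described in Supplementary File 2. It assumes that FFmpeg is installed, and the function names and the file name `session1.h264` are hypothetical.

```python
import shutil
import subprocess
from pathlib import Path


def build_remux_command(h264_path, fps=30):
    """Return the ffmpeg argument list that wraps a raw .h264 stream in an .mp4 container."""
    src = Path(h264_path)
    dst = src.with_suffix(".mp4")
    return [
        "ffmpeg",
        "-framerate", str(fps),   # raw streams lack container timestamps, so state the rate
        "-i", str(src),
        "-c", "copy",             # copy the video stream without re-encoding
        str(dst),
    ]


def remux(h264_path, fps=30):
    """Run the conversion; requires ffmpeg to be installed and on the PATH."""
    if shutil.which("ffmpeg") is None:
        raise RuntimeError("ffmpeg was not found on this system")
    subprocess.run(build_remux_command(h264_path, fps), check=True)
```

Calling `remux("session1.h264")` would write `session1.mp4` next to the input file. Stating the frame rate explicitly matters because frame numbers are later converted to time using the camera's 30 fps.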

4. Analyzing videos with DeepLabCut

NOTE: DeepLabCut is a software package that allows users to define any object of interest in a set of video frames, and subsequently use these to train a neural network to track the objects’ positions in full-length videos15,16. This section gives a rough outline for how to use DeepLabCut to track the status of the protocol step indicator and the position of a rat’s head. Installation and use of DeepLabCut is well-described in other published protocols15,16. Each step can be done through specific Python commands or DeepLabCut’s graphical user interface, as described elsewhere15,16.

- Create and configure a new DeepLabCut project by following the steps outlined elsewhere16.

- Use DeepLabCut’s frame grabbing function to extract 700‒900 video frames from one or more of the videos recorded in section 3.

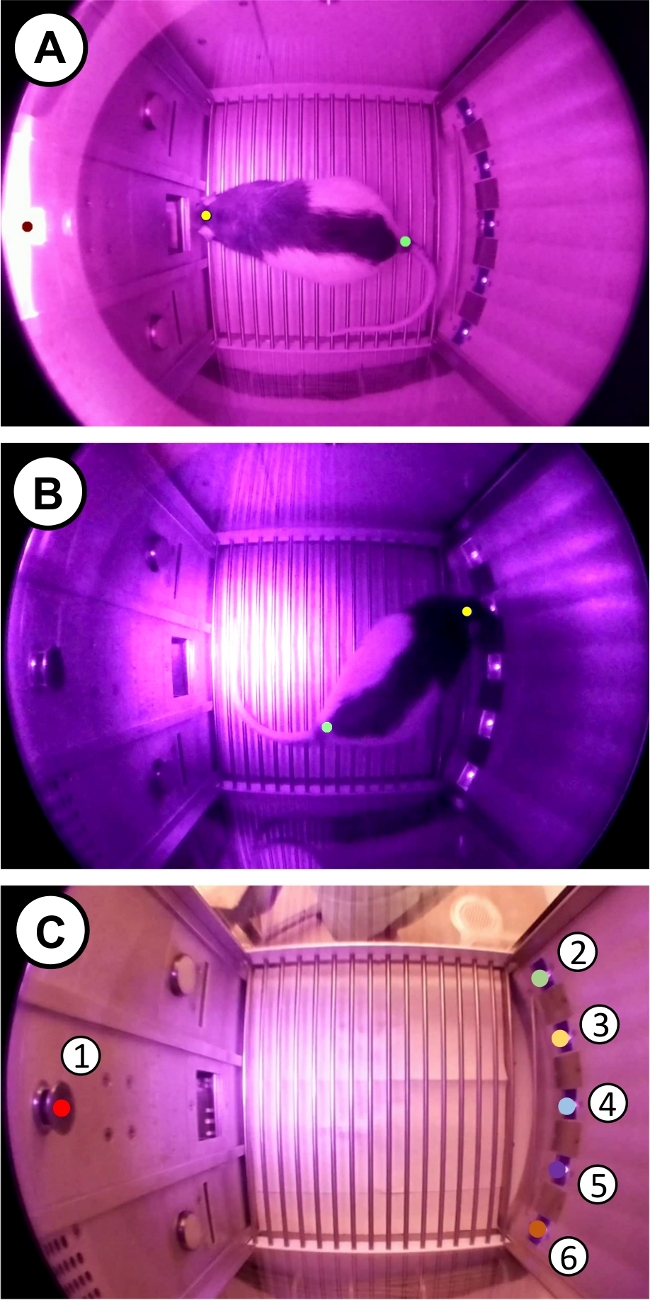

NOTE: If the animals differ considerably in fur pigmentation or other visual characteristics, it is advisable that the 700‒900 extracted video frames are split across videos of different animals. Through this, one trained network can be used to track different individuals.

- Make sure to include video frames that display both the active (Figure 8A) and inactive (Figure 8B) state of the protocol step indicator.

- Make sure to include video frames that cover the range of different positions, postures and head movements that the rat may show during the test. This should include video frames where the rat is standing still in different areas of the chamber, with its head pointing in different directions, as well as video frames where the rat is actively moving, entering nose poke openings and entering the pellet trough.

- Use DeepLabCut’s Labeling Toolbox to manually mark the position of the rat’s head in each video frame extracted in step 4.2. Use the mouse cursor to place a “head” label in a central position between the rat’s ears (Figure 8A,B). In addition, mark the position of the chamber’s house light (or other protocol step indicator) in each video frame where it is actively shining (Figure 8A). Leave the house light unlabeled in frames where it is inactive (Figure 8B).

- Use DeepLabCut’s “create training data set” and “train network” functions to create a training data set from the video frames labeled in step 4.3 and start the training of a neural network. Make sure to select “resnet_101” for the chosen network type.

- Stop the training of the network when the training loss has plateaued below 0.01. This may take up to 500,000 training iterations.

NOTE: When using a GPU machine with approximately 8 GB of memory and a training set of about 900 video frames (resolution: 1640 x 1232 pixels), the training process has been found to take approximately 72 h.

- Use DeepLabCut’s video analysis function to analyze videos gathered in section 3, using the neural network trained in step 4.4. This will provide a .csv file listing the tracked positions of the rat’s head and the protocol step indicator in each video frame of the analyzed videos. In addition, it will create marked-up video files where the tracked positions are displayed visually (Videos 1-8).

- Evaluate the accuracy of the tracking by following the steps outlined below.

- Use DeepLabCut’s built-in evaluate function to obtain an automated evaluation of the network’s tracking accuracy. This is based on the video frames that were labeled in step 4.3 and describes how far away on average the position tracked by the network is from the manually placed label.

- Select one or more brief video sequences (of about 100‒200 video frames each) in the marked-up videos obtained in step 4.6. Go through the video sequences, frame by frame, and note in how many frames the labels correctly indicate the positions of the rat’s head, tail, etc., and in how many frames the labels are placed in erroneous positions or not shown.

- If the label of a body part or object is frequently lost or placed in an erroneous position, identify the situations where tracking fails. Extract and add labeled frames of these occasions by repeating steps 4.2 and 4.3. Then retrain the network and reanalyze the videos by repeating steps 4.4-4.7. Ultimately, a tracking accuracy of >90% should be achieved.
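For readers who prefer the Python route over the graphical interface, the steps of this section could be chained roughly as sketched below. The function names are those of the TensorFlow-based DeepLabCut 2.x releases, and keyword arguments such as `maxiters` may differ in other versions, so the official documentation should be checked. The wrapper `run_tracking_pipeline` and its `dlc` parameter (the imported `deeplabcut` module, passed in so the call order can be checked without the package installed) are assumptions made for this illustration.

```python
def run_tracking_pipeline(dlc, config_path, videos, max_iters=500_000):
    """Call the DeepLabCut functions for section 4 in order.

    `dlc` is the imported deeplabcut module; `config_path` points to the
    project's config.yaml; `videos` is a list of video file paths.
    """
    # Grab frames; the number per video is set by `numframes2pick` in config.yaml.
    dlc.extract_frames(config_path, mode="automatic", userfeedback=False)
    # Opens the labeling GUI for manual placement of the head and indicator labels.
    dlc.label_frames(config_path)
    # Build the training set with the network type named in the protocol.
    dlc.create_training_dataset(config_path, net_type="resnet_101")
    # Train up to max_iters; in practice, stop once the loss has plateaued.
    dlc.train_network(config_path, maxiters=max_iters)
    # Automated evaluation against the manually labeled frames.
    dlc.evaluate_network(config_path)
    # Per-frame coordinates as .csv, followed by marked-up videos.
    dlc.analyze_videos(config_path, videos, save_as_csv=True)
    dlc.create_labeled_video(config_path, videos)
```

With DeepLabCut installed, this would be called as `import deeplabcut` followed by `run_tracking_pipeline(deeplabcut, config_path, videos)`. The manual frame-by-frame check of the marked-up videos remains a separate step.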

5. Obtaining coordinates for points of interest in the operant chambers

- Use DeepLabCut as described in step 4.3 to manually mark points of interest in the operant chambers (such as nose poke openings, levers, etc.) in a single video frame (Figure 8C). These are manually chosen depending on study-specific interests, although the position of the protocol step indicator should always be included.

- Retrieve the coordinates of the marked points of interest from the .csv file that DeepLabCut automatically stores under “labeled-data” in the project folder.

6. Identifying video segments where the protocol step indicator is active

- Load the .csv files obtained from the DeepLabCut video analysis in step 4.6 into a data management software of choice.

NOTE: Due to the amount and complexity of data obtained from DeepLabCut and operant conditioning systems, the data management is best done through automated analysis scripts. To get started with this, please refer to entry-level guides available elsewhere20,21,22.

- Note in which video segments the protocol step indicator is tracked within 60 pixels of the position obtained in section 5. These will be periods where the protocol step indicator is active (Figure 6B).

NOTE: During video segments where the protocol step indicator is not shining, the marked-up video might seem to indicate that DeepLabCut is not tracking it to any position. However, this is rarely the case, and it is instead typically tracked to multiple scattered locations.

- Extract the exact starting point for each period where the protocol step indicator is active (Figure 6C: 1).
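The logic of this section can be sketched in a few lines of Python. The example assumes that the per-frame x and y coordinates of the indicator label have already been read from the DeepLabCut .csv file into two lists. The function names and the example coordinates are hypothetical.

```python
import math


def indicator_active(xs, ys, ref_xy, max_dist_px=60.0):
    """Per-frame flags: True where the indicator label lies within max_dist_px of ref_xy."""
    rx, ry = ref_xy
    return [math.hypot(x - rx, y - ry) <= max_dist_px for x, y in zip(xs, ys)]


def onset_frames(active):
    """Frame indices where the flag switches from inactive to active."""
    return [i for i, on in enumerate(active) if on and (i == 0 or not active[i - 1])]


# Hypothetical tracked positions; the reference position comes from section 5.
xs = [10, 500, 505, 498, 20, 502]
ys = [10, 300, 302, 299, 700, 301]
active = indicator_active(xs, ys, ref_xy=(500, 300))
print(onset_frames(active))
```

Because an animal can briefly block the view of the indicator, a real analysis would likely also need to check the duration of each detected period before accepting its starting point.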

7. Identifying video segments of interest

- Consider the points where the protocol step indicator becomes active (Figure 6C: 1) and the timestamps of responses recorded by the operant chambers (section 2, Figure 6C: 2).

- Use this information to determine which video segments cover specific interesting events, such as inter-trial intervals, responses, reward retrievals etc. (Figure 6C: 3, Figure 6D).

NOTE: For this, keep in mind that the camera described herein records videos at 30 fps.

- Note the specific video frames that cover these events of interest.

- (Optional) Edit video files of full test sessions to include only the specific segments of interest.

NOTE: There are multiple ways to achieve this. One is described in Supplementary Files 2 and 3. This greatly helps when storing large numbers of videos and can also make reviewing and presenting results more convenient.
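Since the operant system logs responses as times relative to the protocol step indicator, while the video is indexed by frames, the two need to be brought onto one scale. A minimal sketch of that conversion at 30 fps is given below. The function names are hypothetical, and timestamps are assumed to be in seconds.

```python
def event_frame(onset_frame, seconds_after_onset, fps=30):
    """Video frame index of an event logged relative to the indicator onset."""
    return onset_frame + round(seconds_after_onset * fps)


def segment_frames(onset_frame, start_s, end_s, fps=30):
    """Range of frame indices from start_s up to (not including) end_s after the onset."""
    return range(event_frame(onset_frame, start_s, fps),
                 event_frame(onset_frame, end_s, fps))
```

For example, with an indicator onset at frame 1200, a response logged 5 s later falls on frame 1350, and a 5 s segment starting at the onset covers frames 1200 to 1349.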

8. Analyzing the position and movements of an animal during specific video segments

- Subset the full tracking data of head position obtained from DeepLabCut in step 4.6 to only include video segments noted under section 7.

- Calculate the position of the animal’s head in relation to one or more of the reference points selected under section 5 (Figure 8C). This enables comparisons of tracking and position across different videos.

- Perform relevant in-depth analysis of the animal’s position and movements.

NOTE: The specific analysis performed will be strongly study-specific. Some examples of parameters that can be analyzed are given below.

- Visualize path traces by plotting all coordinates detected during a selected period within one graph.

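- Analyze proximity to a given point of interest by calculating the straight-line (Euclidean) distance, in pixels, between the tracked head position $(x_{\text{head}}, y_{\text{head}})$ and the point of interest $(x_{\text{point}}, y_{\text{point}})$:

$$d = \sqrt{(x_{\text{head}} - x_{\text{point}})^2 + (y_{\text{head}} - y_{\text{point}})^2}$$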

- Analyze changes in speed during a movement by calculating the distance between tracked coordinates in consecutive frames and dividing it by the time between frames (1/fps of the camera).
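The calculations in this section reduce to a few coordinate operations, sketched below for tracked positions given as (x, y) tuples in pixels. The function names are hypothetical, proximity is taken to be the Euclidean distance, and all results are in pixels or pixels per second and are not corrected for fisheye distortion.

```python
import math


def relative_position(head_xy, ref_xy):
    """Head coordinates expressed relative to a reference point in the chamber."""
    return (head_xy[0] - ref_xy[0], head_xy[1] - ref_xy[1])


def distance(p, q):
    """Euclidean distance in pixels between two (x, y) points."""
    return math.hypot(p[0] - q[0], p[1] - q[1])


def speeds(path, fps=30):
    """Speed in pixels per second between consecutive tracked positions."""
    # Dividing a distance by the frame interval (1/fps) equals multiplying by fps.
    return [distance(a, b) * fps for a, b in zip(path, path[1:])]
```

A path trace is then simply the list of `relative_position` values for a selected period, plotted in a single graph with the plotting tool of choice.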

Representative Results

Video camera performance

The representative results were gathered in operant conditioning chambers for rats with floor areas of 28.5 cm x 25.5 cm, and heights of 28.5 cm. With the fisheye lens attached, the camera captures the full floor area and large parts of the surrounding walls, when placed above the chamber (Figure 7A). As such, a good view can be obtained, even if the camera is placed off-center on the chamber’s top. This should hold true for comparable operant chambers. The IR LEDs are able to illuminate the entire chamber (Figure 7B,C), enabling a good view, even when all other lights inside the chamber are switched off (Figure 7C). However, the lighting in such situations is not entirely even, and may result in some difficulties in obtaining accurate tracking. If such analysis is of interest, additional sources of IR illumination might be required. It is also worth noting that some chambers use metal dropping pans to collect urine and feces. If the camera is placed directly above such surfaces, strong reflections of the IR LEDs’ light will be visible in the recorded videos (Figure 7B). This can, however, be avoided by placing paper towels in the dropping pan, giving a much-improved image (Figure 7C). Placing the camera’s IR or colored LEDs too close to the camera lens may result in them being visible in the image periphery (Figure 7B). As the camera is IR sensitive, any IR light sources that are present inside the chambers may be visible in the videos. For many setups, this will include the continuous shining of IR beam break sensors (Figure 7C). The continuous illumination from the camera’s IR LEDs does not disturb the image quality of well-lit chambers (Figure 7D). The size of the videos recorded with the camera is approximately 77 MB/min. If a 32 GB micro SD card is used for the camera, there should be about 20 GB available following the installation of the operating system. This leaves room for approximately 260 min of recorded footage.
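The storage estimate above follows from a simple division, which can be adapted to other card sizes. The sketch below interprets the stated sizes as megabytes and gigabytes and takes 1 GB as 1000 MB. The function name is hypothetical.

```python
def recording_minutes(free_gb, mb_per_min=77.0):
    """Approximate minutes of footage that fit in free_gb of storage (1 GB = 1000 MB)."""
    return free_gb * 1000.0 / mb_per_min


print(round(recording_minutes(20)))  # 20 GB free at 77 MB/min gives about 260 min
```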

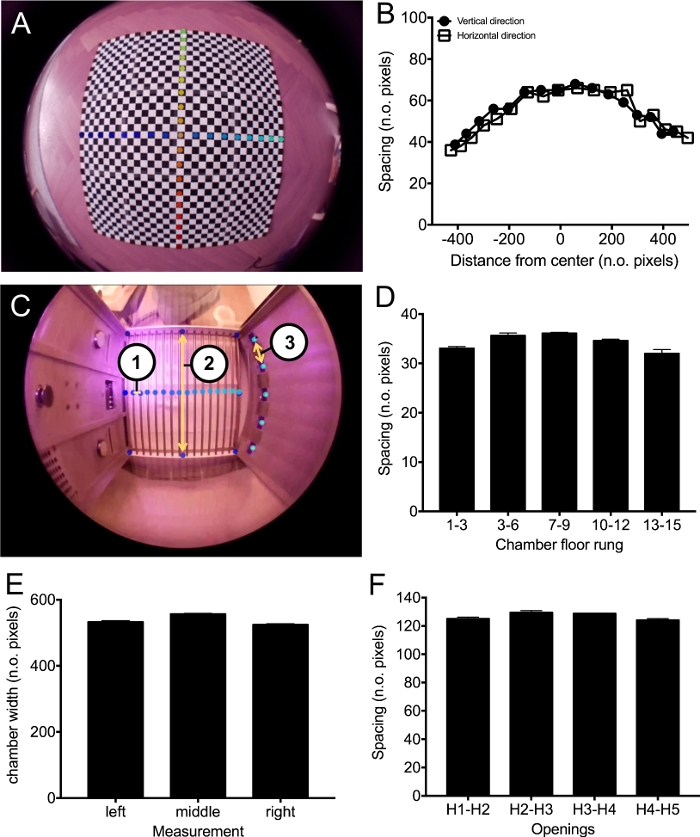

The fisheye lens causes the camera to have a slightly uneven focus, with the image being sharp in the center but less sharp towards the edges. This does not appear to affect the accuracy of tracking. Moreover, the fisheye lens results in the recorded image being distorted. For example, the distances between equally spaced points along straight lines will show artificially reduced spacing towards the periphery of the image (Figure 9A,B). If the camera is used for applications where most of the field of view or absolute measurements of distance and speed are of interest, it is worth considering correcting the data for this distortion23 (Supplementary File 4). The distortion is, however, relatively mild in the center of the image (Figure 9B). For videos gathered in our operant chamber, the area of interest is limited to the central 25% of the camera’s field of view. Within this area, the effect of the fisheye distortion is minimal (Figure 9C‒F).

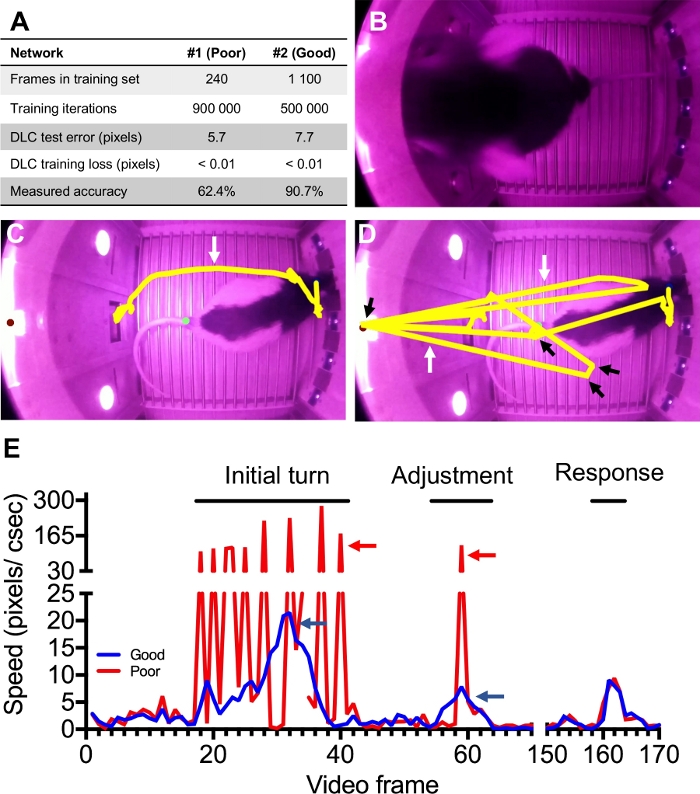

Accuracy of tracking with DeepLabCut

The main factors that will determine the tracking accuracy of a trained network are (i) the number of labeled frames in its training data set, (ii) how well those labeled frames capture the behavior of interest and (iii) the number of training iterations used. DeepLabCut includes an evaluate function, which reports an estimate of how far away (in numbers of pixels) its tracking can be expected to be from the actual location of an object. This, however, does not necessarily give a good description of the number of frames where an object is lost and/or mislabeled (Figure 10A), prompting the need for additional manual assessment of tracking accuracy.

For analyzing behaviors inside an operant chamber, a well-trained network should allow the accurate identification of all events where the protocol step indicator is active. If not, retraining the network or choosing a different indicator might be needed. Despite having a well-trained network, tracking of the protocol step indicator may on occasion be disrupted by animals blocking the camera’s view (Figure 10B). This will cause breaks in the tracking that are reminiscent of episodes where the indicator is inactive. The frequency of this happening will depend on animal strain, type of behavioral protocol and choice of protocol step indicator. In the example data from the 5CSRTT that is used here, it occurred on four out of 400 trials (data not shown). All occasions were easily identifiable, as their durations did not match that of the break step that had been included in the protocol design (Figure 6A). Ultimately, choosing an indicator that is placed high up in the chamber and away from components that animals interact with is likely to be helpful.

A well-trained network should allow >90% accuracy when tracking an animal’s head during video segments of interest (Video 1). With this, only a small subset of video frames will need to be excluded from the subsequent analysis and usable tracking data will be obtainable from virtually all trials within a test session. Accurate tracking is clearly identifiable by markers following an animal throughout its movements (Video 2) and plotted paths appearing smooth (Figure 10C). In contrast, inaccurate tracking is characterized by markers not reliably staying on target (Video 3) and by plotted paths appearing jagged (Figure 10D). The latter is caused by the object being tracked to distant erroneous positions in single video frames within sequences of accurate tracking. As a result of this, inaccurate tracking typically causes sudden shifts in calculated movement speeds (Figure 10E). This can be used to identify video frames where tracking is inaccurate, to exclude them from subsequent analysis. If there are substantial problems with tracking accuracy, the occasions where tracking fails should be identified and the network should be retrained using an expanded training set containing labeled video frames of these events (Figure 10A,E).
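The idea of using sudden shifts in movement to find inaccurately tracked frames can be sketched as follows. The function name and the threshold value are hypothetical, and a suitable threshold would need to be chosen from the data at hand, for example by inspecting the distribution of frame-to-frame displacements.

```python
import math


def flag_jumps(path, max_step_px):
    """Indices of frames whose position jumps more than max_step_px from the previous frame."""
    flagged = []
    for i in range(1, len(path)):
        (x0, y0), (x1, y1) = path[i - 1], path[i]
        if math.hypot(x1 - x0, y1 - y0) > max_step_px:
            flagged.append(i)
    return flagged


# Hypothetical head positions with a single mistracked frame (index 2).
path = [(100, 100), (102, 101), (400, 50), (104, 102), (106, 103)]
print(flag_jumps(path, max_step_px=50))
```

Note that a single mistracked frame produces two flagged indices, the jump away from the true position and the jump back, so the frame following an outlier may be correctly tracked even though it is flagged.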

Use of video tracking to complement analysis of operant behaviors

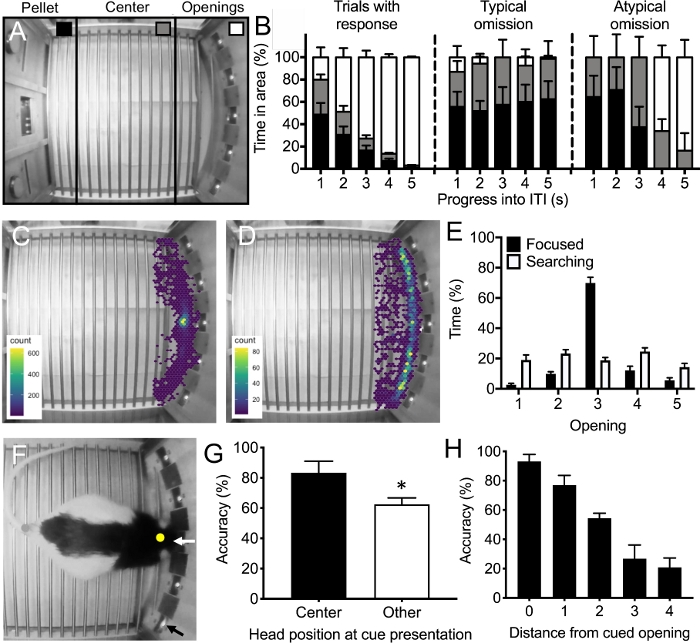

Analyzing how an animal moves and positions itself during operant tests will provide multiple insights into the complex and multifaceted nature of its behavior. By tracking where an animal is located throughout a test session, one can assess how distinct movement patterns relate to performance (Figure 11A,B). By further investigating head movements during specific protocol steps, one can detect and characterize the use of different strategies (Figure 11C‒E).

To exemplify, consider the representative data presented for rats performing the 5CSRTT test (Figure 6A, Figure 11). In this test, animals are presented with multiple trials that each start with a 5 s waiting step (inter-trial interval – ITI) (Figure 6A: 1). At the end of this, a light will shine inside one of the nose poke openings (randomly chosen position on each trial, Figure 6A: 2). Nose-poking into the cued opening is considered a correct response and is rewarded (Figure 6A: 3). Responding into another opening is considered incorrect. Failing to respond within 5 s following the presentation of the light is considered an omission. Tracking head movements during the ITI of this test has revealed that on trials where rats perform a response, they are fast at moving towards the area around the nose poke openings (Figure 11A,B, Video 4). In contrast, on the majority of omission trials, the rats fail to approach the area around the openings (Figure 11B, Video 5). This behavior is in line with the common interpretation of omissions being closely related to a low motivation to perform the test3,16. However, on a subset of omission trials (approximately 20% of the current data set), the rats showed a clear focus towards the openings (Figure 11B, Video 6) but failed to note the exact location of the cued opening. The data thus indicate that there are at least two different types of omissions, one related to a possible disinterest in the ongoing trial, and another that is more dependent on insufficient visuospatial attention3. Head tracking can also be used to distinguish apparent strategies. As an example, two distinct attentional strategies were revealed when analyzing how the rats move when they are in proximity to the nose poke openings during the 5CSRTT (Figure 11C‒E). In the first strategy, rats showed an extremely focused approach, maintaining a central position throughout most of the ITI (Figure 11C, Video 7). 
In contrast, rats adopting the other strategy constantly moved their heads between the different openings in a search-like manner (Figure 11D, Video 8). This type of behavioral differences can conveniently be quantified by calculating the amount of time spent in proximity to the different openings (Figure 11E). Finally, by analyzing which opening the rat is closest to at the time of cue light presentation (Figure 11F), it can be demonstrated that being in a central position (Figure 11G) and/or in close proximity to the location of the cued opening (Figure 11H) seems to be beneficial for accurate performance on the test.
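The quantification of time spent near the different openings, and of which opening the rat is closest to at a given moment, could be computed along the following lines. This is an illustrative sketch and not the analysis code used for Figure 11. The function names, the opening coordinates and the proximity radius are all hypothetical.

```python
import math
from collections import Counter


def nearest_opening(head_xy, openings):
    """Name of the opening closest to the head position."""
    return min(
        openings,
        key=lambda name: math.hypot(head_xy[0] - openings[name][0],
                                    head_xy[1] - openings[name][1]),
    )


def time_near_openings(path, openings, radius_px, fps=30):
    """Seconds spent within radius_px of each opening, crediting the nearest one per frame."""
    frames = Counter()
    for xy in path:
        name = nearest_opening(xy, openings)
        ox, oy = openings[name]
        if math.hypot(xy[0] - ox, xy[1] - oy) <= radius_px:
            frames[name] += 1
    return {name: frames[name] / fps for name in openings}


# Hypothetical opening coordinates (pixels) and a short head path.
openings = {"1": (100, 50), "3": (300, 50), "5": (500, 50)}
path = [(110, 60)] * 15 + [(305, 55)] * 30 + [(300, 400)] * 10
print(time_near_openings(path, openings, radius_px=40))
```

Applying `nearest_opening` to the head position in the frame of cue light presentation gives the kind of grouping variable needed to relate position to response accuracy.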

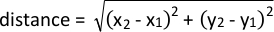

Figure 1: Sketch of the listed microcomputer. The schematic shows the position of several components of interest on the microcomputer motherboard. These are marked with circled numbers as follows: 1: Connector for camera ribbon cable; 2: LED light indicating when computer is running; 3: Micro USB for power cable; 4: Micro USB for mouse/keyboard; 5: General-purpose input/output pins (GPIO pins), these pins are used to connect the microcomputer to LEDs, switches, and the IR LED module; 6: Mini HDMI output; 7: Micro SD card slot. In the lower portion of the figure, a cropped and enlarged part of the GPIO pins is shown to indicate how to count along them to correctly identify the position of a specific pin.

Figure 2: Building the main body of the camera. The figure illustrates the main steps for building the body of the camera. (A) Attach the magnetic metal ring to the camera stand. (B) Attach the camera module to the camera stand. (C) Connect the camera module to the microcomputer via the flat ribbon cable. Note the white arrows indicating how to open and close the camera ports present on both the microcomputer and the camera module. (D) Place the microcomputer into the plastic casing and insert a micro SD card.

Figure 3: Updating the microcomputer’s operating system and enabling the peripherals. The figure shows four different screenshots depicting the user interface of the microcomputer. (A) Terminal windows can be opened by clicking the “terminal” icon in the top left corner of the screen. (B) Within the terminal, one can type in different kinds of commands, as detailed in the protocol text. The screenshot displays the command for updating the system’s software packages. (C) The screenshot displays how to navigate to the configurations menu, where one can enable the use of the camera module and the I2C GPIO pins. (D) The screenshot displays the /home/pi folder, where the camera script should be copied in step 1.10 of the protocol. The window is opened by clicking the indicated icon in the top left corner of the screen.

Figure 4: Configuring the microcomputer’s rc.local file. The figure displays two screenshots of the microcomputer’s rc.local file, when accessed through the terminal as described in step 1.11.1. (A) A screenshot of the rc.local file in its original format. The arrow indicates the space where text needs to be entered in order to enable the auto-start feature of the camera. (B) A screenshot of the rc.local file after it has been edited to shine the IR LEDs and start the Python script controlling the camera upon startup of the microcomputer.

Figure 5: Connecting switches and LEDs to the microcomputer’s GPIO pins. (A) Schematic showing a button switch with female jumper cables (top) and an LED with resistor and female jumper cables (bottom). (1) Button switch, (2) female jumper cables, (3) LED, (4) resistor. (B) Schematic image showing how the two button switches, the colored LEDs and the IR LED board are connected to the GPIO pins of the microcomputer. Blue cables and GPIO pins indicate ground. The positions of two GPIO pins are indicated in the figure (GPIO pins #2 and #40): (1) Button for starting/stopping video recording. (2) LED indicating when video is being recorded. (3) Button for switching off camera. (4) LED indicating when the camera has booted and is ready to be used. (5) IR LED module. Note that circuits with LEDs also contain 330 Ω resistors.

Figure 6: Using DeepLabCut tracking of protocol step indicator to identify sequences of interest in full-length videos. (A) Schematic of the steps of a single trial in the 5-choice serial reaction time test (5CSRTT): (1) First, there is a brief waiting period (ITI). Arrow indicates an actively shining house light. (2) At the end of the ITI, a light will shine in one of the five nose poke openings (arrow). (3) If a rat accurately responds by performing a nose poke into the cued opening, a reward is delivered (arrow). (4) The rat is allowed to retrieve the reward. (5) To enable the use of the house light as a protocol step indicator, a brief pause step where the house light is switched off (arrow) is implemented before the next trial begins. Note that the house light is shining during subsequent steps of the trial. (B) An example graph depicting the x-coordinate of the active house light, as tracked by DeepLabCut, during a video segment of a 5CSRTT test. During segments where the house light is shining (indicator active – 1), the position is tracked to a consistent and stable point (also note the red marker (indicated by arrow) in the example video frame), comparable to that of the house light’s position in Figure 8C (x, y: 163, 503). During segments where the house light is not shining (indicator inactive – 2, note the white arrow in the example video frame), the tracked position is not stable, and far away from the house light’s actual coordinate. (C) Table 1 shows an example of processed output obtained from DeepLabCut tracking of a protocol step indicator. In this output, the starting point for each occasion where the indicator is active has been listed. Table 2 depicts an example of data obtained from the operant conditioning system, giving relevant details for individual trials. In this example, the duration of the ITI, position of the cued opening and latencies to perform a response and retrieve the reward have been recorded. 
Table 3 depicts an example of data obtained by merging tracking results from DeepLabCut and data recorded from the operant conditioning system. Through this, the video frames for the starting point of the ITI (step 1 in A), the starting point of the cue light presentation (step 2 in A), the response (step 3 in A) and retrieval (step 4 in A) for an example trial have been obtained. (D) An example graph depicting the x-coordinate of the house light, as tracked by DeepLabCut, during a filmed 5CSRTT trial. The different steps of the protocol are indicated: (1) ITI; (2) presentation of a cue light (position indicated by white arrow); (3) response; (4) reward retrieval. The identification of video frames depicting the start and stop of these different protocol steps was done through a process comparable to that indicated in (C).
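The merging step outlined in the Figure 6 legend (finding the frames where the indicator becomes active, then adding latencies from the operant data log) can be sketched as follows. This is an illustrative outline under stated assumptions, not the authors' script. The house light coordinate (163, 503) is taken from the legend, whereas the pixel tolerance, the minimum run length, the frame rate of 25 fps and all function names are hypothetical. The sketch also assumes that the response latency is measured from cue onset and the retrieval latency from the response.

```python
import math

HOUSE_LIGHT_XY = (163.0, 503.0)  # indicator position given in the Figure 6 legend
TOLERANCE_PX = 10.0              # assumed: max distance counted as "on target"
MIN_ACTIVE_FRAMES = 3            # assumed: ignore shorter runs as tracking noise


def indicator_active(track, target=HOUSE_LIGHT_XY, tol=TOLERANCE_PX):
    """Per-frame flag: True when the tracked point sits at the indicator."""
    return [math.dist(p, target) <= tol for p in track]


def onset_frames(active, min_frames=MIN_ACTIVE_FRAMES):
    """First frame of every run of active frames lasting at least min_frames."""
    onsets, run_start = [], None
    for i, flag in enumerate(active):
        if flag and run_start is None:
            run_start = i
        elif not flag and run_start is not None:
            if i - run_start >= min_frames:
                onsets.append(run_start)
            run_start = None
    if run_start is not None and len(active) - run_start >= min_frames:
        onsets.append(run_start)
    return onsets


def trial_event_frames(onset, iti_s, response_latency_s, retrieval_latency_s, fps):
    """Convert latencies (s) from the operant data log into video frame numbers."""
    cue = onset + round(iti_s * fps)
    response = cue + round(response_latency_s * fps)
    retrieval = response + round(retrieval_latency_s * fps)
    return {"iti_start": onset, "cue": cue, "response": response, "retrieval": retrieval}


# Example with made-up tracking data: the light is off, on, off, then on again.
track = ([(20, 40), (300, 90)] + [(163, 503)] * 5
         + [(400, 10)] * 2 + [(164, 502)] * 4)
onsets = onset_frames(indicator_active(track))
print(onsets)  # [2, 9]
print(trial_event_frames(onsets[0], iti_s=5.0, response_latency_s=0.8,
                         retrieval_latency_s=1.2, fps=25))
# {'iti_start': 2, 'cue': 127, 'response': 147, 'retrieval': 177}
```

Requiring a minimum run length is one simple way to keep single-frame mistracking from being counted as a trial start; the appropriate value depends on the frame rate and on how long the indicator stays lit.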

Figure 7: Image characteristics of camera. (A) Uncropped image obtained from a camera placed on top of an operant conditioning chamber. The image was captured while the chamber was placed in a brightly lit room. Note the (1) house light and (2) reward pellet trough along the chamber’s left wall and (3) the row of five nose poke openings along the chamber’s right wall. Each nose poke opening contains a small cue light. (B) Uncropped image displaying the strong reflection caused by (1) the metal dropping pan, as well as reflections caused by sub-optimal positioning of the camera’s (2) indicator LEDs and (3) IR LED module. (C) Cropped image of the chamber in complete darkness. Note that the lights from the IR beam break detectors in the five nose poke openings along the chamber’s right wall are clearly visible (arrow). (D) Cropped image of the chamber when brightly lit.

Figure 8: Positional tracking of protocol step indicator and body parts of interest. (A) The picture shows the position of a protocol step indicator (red) as well as the head (yellow) and tail (green) of a rat, as tracked by DeepLabCut. As indicated by the tracking of the lit house light, the video frame is taken from a snapshot of an active trial. (B) The picture shows the position of the head (yellow) and tail (green) as tracked by DeepLabCut during a moment when a trial is not active. Note the lack of house light tracking. (C) The positions of points of interest used in the analysis of data shown in Figure 6 and Figure 11; (1) House light, in this case used as protocol step indicator, (2‒6) Nose poke openings #1‒5.

Figure 9: Image distortion from fisheye lens. (A) Image of a checker-board pattern with equally sized and spaced black and white squares taken with the camera described in this protocol. Image was taken at a height comparable to that used when recording videos from operant conditioning chambers. Black squares along the central horizontal and vertical lines have been marked with DeepLabCut. (B) Graph depicting how the spacing of the marked squares in (A) changes with proximity to the image center. (C) Image depicting measurements taken to evaluate impact of fisheye distortion effect on videos gathered from operant chambers. The corners and midpoints along the edge of the floor area, the central position of each individual floor rung and the position of the five nose poke openings have been indicated with DeepLabCut (colored dots); (1) spacing of floor rungs, (2) width of chamber floor along the middle of the chamber, (3) spacing of nose poke openings. (D) Spacing of floor rungs (averaged for each set of three consecutive rungs), numbered from left to right in (C). There is a small effect of the fisheye distortion, resulting in the central rungs being spaced roughly 3 pixels (8%) further apart than rungs that are positioned at the edges of the chamber floor. (E) Width of the chamber floor in (C) measured at its left and right edges, as well as midpoint. There is a small effect of the fisheye distortion, resulting in the width measured at the midpoint being roughly 29 pixels (5%) longer than the other measurements. (F) Spacing of nose poke openings in (C), numbered from the top of the image. There is a small effect of the fisheye distortion, resulting in the spacing between the central three openings (H2, H3, H4) being roughly 5 pixels (4%) broader than the spacing between H1-H2 and H4-H5. For D-F, data were gathered from four videos and graphs depict group mean + standard error.

Figure 10: Reviewing accuracy of DeepLabCut tracking. (A) A table listing training information for two neural networks trained to track rats within operant chambers. Network #1 used a smaller training data set, but a higher number of training iterations compared to Network #2. Both networks achieved a low error score from DeepLabCut’s evaluation function (DLC test error) and displayed a low training loss towards the end of the training. Despite this, Network #1 showed very poor tracking accuracy upon manual evaluation of marked video frames (measured accuracy, estimated from 150 video frames covering a video segment comparable to those in Video 2 and Video 3). Network #2 represents the improved version of Network #1, after having included additional video frames of actively moving rats into the training data set, as described in (E). (B) Image depicting a rat rearing up and covering the chamber’s house light (Figure 7A) with its head, disrupting the tracking of it. (C) Video frame capturing a response made during a 5CSRTT trial (Figure 6A: 3). The head’s movement path during the response and preceding ITI has been superimposed on the image in yellow. The tracking is considered to be accurate. Note the smooth tracking during movements (white arrow). A corresponding video is available as Video 2. Network #2 (see A) was used for tracking. (D) Video frame capturing a response made during a 5CSRTT trial (Figure 6A: 3). The head’s movement path during the response and preceding ITI has been superimposed on the image in yellow. Data concerns the same trial as shown in (C) but analyzed with Network #1 (see A). The tracking is considered to be inaccurate. Note the path’s jagged appearance with multiple straight lines (white arrows), caused by occasional tracking of the head to distant erroneous positions (black arrows). A corresponding video is available as Video 3. (E) Graph depicting the dynamic changes in movement speed of the head tracking in (C) and (D).
Identifiable in the graph are three major movements seen in Video 2 and 3, where the rat first turns to face the nose poke openings (initial turn), makes a small adjustment to further approach them (adjustment), and finally performs a response. The speed profile for the good tracking obtained by Network #2 (A) displays smooth curves of changes in movement speed (blue arrows), indicating an accurate tracking. The speed profile for the poor tracking obtained by Network #1 (A) shows multiple sudden spikes in movement speed (red arrows) indicative of occasional tracking errors in single video frames. It is worth noting that these tracking problems specifically occur during movements. To rectify this, the initial training set used to train Network #1 was expanded with a large number of video frames depicting actively moving rats. This was subsequently used to train Network #2, which efficiently removed this tracking issue.

Figure 11: Use of positional tracking through DeepLabCut to complement the behavioral analysis of operant conditioning tests. (A) A top view of the inside of an operant conditioning chamber. Three areas of the chamber are indicated: the area close to the reward pellet trough (Pellet), the central chamber area (Center) and the area around the nose poke openings (Openings). (B) A graph depicting the relative amount of time rats spend in the three different areas of the operant chamber outlined in (A) during the ITI step of the 5CSRTT. Note that on trials with a response, rats initially tend to be positioned close to the pellet trough (black) and chamber center (grey), but as the ITI progresses, they shift towards positioning themselves around the nose poke openings (white). In contrast, on typical omission trials, rats remain positioned around the pellet trough and chamber center. On a subset of omission trials (approximately 20%) rats clearly shift their focus towards the nose poke openings, but still fail to perform a response when prompted. Two-way ANOVA of the time spent around the nose poke openings, using trial type as between-subject factor and time as within-subject factor, reveals significant time (p < 0.001, F(4,8) = 35.13), trial type (p < 0.01, F(2,4) = 57.33) and time x trial type interaction (p < 0.001, F(8,16) = 15.3) effects. Data gathered from four animals performing 100 trials each. Graph displays mean + standard error. (C) Heat map displaying all head positions tracked in proximity of the nose poke openings, by one specific rat during 50 ITIs of a 5CSRTT test session. Note that the rat has a strong tendency to keep its head in one spot close to the central nose poke opening. (D) Heat map displaying all head positions tracked in proximity of the nose poke openings, by one specific rat during 50 ITIs of a 5CSRTT test session. Note that the rat shows no clear preference for any specific opening.
(E) Graph depicting the relative amount of time that the two rats displayed in (C) and (D) spend being closest to the different nose poke openings during 50 ITIs of the 5CSRTT. The rat displaying a focused strategy (C) (black) shows a strong preference for being closest to the central opening while the rat with a search-like strategy (D) (white) shows no preference for any particular opening. The graph depicts average + standard error. (F) An image of a rat at the time of cue presentation on a 5CSRTT trial (Figure 6A). Note that the rat has positioned its head closest to the central opening (white arrow), being two openings away from the cued opening (black arrow). (G) A graph depicting performance accuracy on the 5CSRTT (i.e., frequency of performing correct responses) in relation to whether the head of the rats was closest to the central opening or one of the other openings at the time of cue presentation (Figure 6A: 2). Data gathered from four animals performing roughly 70 responses each. Graph displays group mean + standard error (matched t-test: p < 0.05). (H) A graph depicting performance accuracy on the 5CSRTT in relation to the distance between the position of the cued opening and the position of a rat’s head, at the point of signal presentation. The distance relates to the number of openings between the rats’ head position and the position of the signaled opening. Data gathered from four animals performing roughly 70 responses each. Graph displays mean + standard error (matched one-way ANOVA: p < 0.01). For the presented analysis, Network #2 described in Figure 10A was used. The complete analyzed data set included approximately 160,000 video frames and 400 trials. Out of these, 2.5% of the video frames were excluded due to the animal’s noted movement speed being above 3,000 pixels/s, indicating erroneous tracking (Figure 10E). No complete trials were excluded.
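The speed-based exclusion criterion mentioned in the Figure 11 legend (frames with a movement speed above 3,000 pixels/s, compare Figure 10E) can be illustrated with a short sketch. The threshold is taken from the legend; the frame rate of 25 fps, the function names and the example coordinates are assumptions made for illustration, and the authors' actual filtering code may differ.

```python
import math

SPEED_LIMIT_PX_PER_S = 3000.0  # exclusion threshold stated in the Figure 11 legend


def frame_speeds(track, fps):
    """Speed (pixels/s) of each frame relative to the preceding frame.

    The first frame has no predecessor and is assigned a speed of 0.0.
    """
    if not track:
        return []
    speeds = [0.0]
    for prev, cur in zip(track, track[1:]):
        speeds.append(math.dist(prev, cur) * fps)
    return speeds


def flag_tracking_errors(track, fps, limit=SPEED_LIMIT_PX_PER_S):
    """Per-frame flag: True when the speed exceeds the limit."""
    return [speed > limit for speed in frame_speeds(track, fps)]


# Example: the third position is a single-frame jump to a distant point.
track = [(100, 100), (103, 104), (400, 104), (106, 108)]
flags = flag_tracking_errors(track, fps=25)  # 25 fps is an assumed frame rate
print(flags)  # [False, False, True, True]
print(sum(flags) / len(flags))  # 0.5
```

Note that a single mistracked frame produces two speed spikes, one for the jump away and one for the jump back, so the correctly tracked frame that follows an error is flagged as well. Whether to keep or discard that frame is a design choice left to the analyst.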

Video 1: Representative tracking performance of well-trained neural network. The video shows a montage of a rat performing 45 trials with correct responses during a 5CSRTT test session (see Figure 6A for protocol details). Tracking of the house light (red marker), tail base (green marker) and head (blue marker) is indicated in the video. The training of the network (Network #2 in Figure 10A) emphasized accuracy for movements made along the chamber floor in proximity to the nose poke openings (right wall, Figure 7A). Tracking of these segments shows on average >90% accuracy. Tracking of episodes of rearing and grooming is inaccurate, as the training set did not include frames of these behaviors. Note that the video has been compressed to reduce file size and is not representative of the video quality obtained with the camera.

Video 2: Example of accurately tracked animal. The video shows a single well-tracked trial of a rat performing a correct response during the 5CSRTT. Tracking of the house light (red marker), tail base (green marker) and head (blue marker) is indicated in the video. Neural network #2 described in Figure 10A was used for tracking. Note how the markers follow the movements of the animal accurately. Also refer to Figure 10C,E for the plotted path and movement speed for the head tracking in this video clip.

Video 3: Example of poorly tracked animal. The video shows a single poorly tracked trial of a rat performing a correct response during the 5CSRTT. Tracking of the house light (red marker), tail base (green marker) and head (blue marker) is indicated in the video. Neural network #1 described in Figure 10A was used for tracking. The video clip is the same as the one used in Video 2. Note that the marker for the head is not reliably placed on top of the rat’s head. Also refer to Figure 10D,E for the plotted path and movement speed for the head tracking in this video clip.

Video 4: Example of movements made during a 5CSRTT trial with a response. The video shows a single well-tracked trial of a rat performing a correct response during the 5CSRTT. Tracking of the house light (red marker), tail base (green marker) and head (blue marker) is indicated in the video. Note how the rat at first is positioned in clear proximity to the pellet receptacle (left wall, Figure 7A) and then moves over to focus its attention on the row of nose poke openings.

Video 5: Example of a typical omission trial during the 5CSRTT. The video shows a single well-tracked trial of a rat performing a typical omission during the 5CSRTT. Tracking of the house light (red marker), tail base (green marker) and head (blue marker) is indicated in the video. Note how the rat maintains its position around the pellet receptacle (left wall, Figure 7A) and chamber center, rather than turning around to face the nose poke openings (right wall, Figure 7A). The displayed behavior and cause of the omission can be argued to reflect low interest in performing the test.

Video 6: Example of an atypical omission trial during the 5CSRTT. The video shows a single well-tracked trial of a rat performing an atypical omission during the 5CSRTT. Tracking of the house light (red marker), tail base (green marker) and head (blue marker) is indicated in the video. Note how the rat positions itself towards the nose poke openings along the right wall of the chamber (Figure 7A). This can be argued to indicate that the animal is interested in performing the test. However, the rat faces away from the cued opening (central position) when the cue is presented (5 s into the clip). In contrast to the omission displayed in Video 5, the one seen here is likely related to sub-optimal visuospatial attention processes.

Video 7: Example of an animal maintaining a focused central position during an ITI of the 5CSRTT. The video shows a single well-tracked trial of a rat performing a correct response on a trial of the 5CSRTT. Note how the rat maintains a central position during the ITI, keeping its head steady in proximity to the central nose poke opening along the chamber’s right wall (Figure 7A). Tracking of the house light (red marker), tail base (green marker) and head (blue marker) is indicated in the video.

Video 8: Example of an animal displaying a search-like attentional strategy during an ITI of the 5CSRTT. The video shows a single well-tracked trial of a rat performing a correct response on a trial of the 5CSRTT. Note how the rat frequently repositions its head to face different nose poke openings along the right wall of the chamber (Figure 7A). Tracking of the house light (red marker), tail base (green marker) and head (blue marker) is indicated in the video.

Discussion

This protocol describes how to build an inexpensive and flexible video camera that can be used to record videos from operant conditioning chambers and other behavioral test setups. It further demonstrates how to use DeepLabCut to track a strong light signal within these videos, and how that can be used to aid in identifying brief video segments of interest in video files that cover full test sessions. Finally, it describes how to use the tracking of a rat’s head to complement the analysis of behaviors during operant conditioning tests.

The protocol presents an alternative to commercially available video recording solutions for operant conditioning chambers. As noted, the major benefit of these is that they integrate with the operant chambers, enabling video recordings of specific events. The approach to identifying video segments of interest described in this protocol is more laborious and time-consuming than using a fully integrated system to record specific events. It is, however, considerably cheaper: a recent cost estimate for video monitoring equipment for six operant chambers was approximately 13,000 USD, whereas constructing six of the cameras listed here would cost about 720 USD. In addition, the cameras can be used for multiple other behavioral test setups. When working with the camera, it is important to be mindful of the areas of exposed electronics (the back of the camera component as well as the IR LED component), so that they do not come into contact with fluids. In addition, the ribbon cable attaching the camera module to the microcomputer and the cables connecting the LEDs and switches to the GPIO pins may come loose if the camera is frequently moved around. Thus, adjusting the design of the camera case may be beneficial for some applications.

The use of DeepLabCut to identify video segments of interest and track animal movements offers a complement and/or alternative to manual video analysis. While the former does not invalidate the latter, we have found that it provides a convenient way of analyzing movements and behaviors inside operant chambers. In particular, it provides positional data of the animal, which contains more detailed information than what is typically extracted via manual scoring (i.e., actual coordinates compared to qualitative positional information such as “in front of”, “next to” etc.).

When selecting a protocol step indicator, it is important to choose one that consistently indicates a given step of the behavioral protocol, and that is unlikely to be blocked by the animal. If the latter is problematic, one may consider placing a lamp outside the operant chamber and filming it through the chamber walls. Many operant conditioning chambers are modular and allow users to freely move lights, sensors and other components around. It should be noted that there are other software packages that also allow users to train neural networks in recognizing and tracking user-defined objects in videos24,25,26. These can likely be used as alternatives to DeepLabCut in the current protocol.

The protocol describes how to track the central part of a rat’s head in order to measure movements inside the operant chambers. As DeepLabCut offers full freedom in selecting body parts or objects of interest, this can conveniently be modified to fit study-specific interests. A natural extension of the tracking described herein is to also track the position of the rat’s ears and nose, to better judge not only head position but also orientation. The representative data shown here were recorded with Long Evans rats. These rats display considerable inter-individual variation in their pigmentation pattern, particularly towards their tail base. This may result in some difficulties applying a single trained neural network for the tracking of different individuals. To limit these issues, it is best to include video frames from all animals of interest in the training set for the network. The black head of the Long Evans rat provides a reasonably strong contrast against the metal surfaces of the chamber used here. Thus, obtaining accurate tracking of their heads likely requires less effort than with albino strains. The most critical step of obtaining accurate tracking with DeepLabCut or comparable software packages is to select a good number of diverse video frames for the training of the neural network. As such, if tracking of an object of interest is deemed to be sub-optimal, increasing the set of training frames should always be the first step towards improving the results.
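As an illustration of the suggested extension, head orientation could be estimated from tracked ear and nose positions roughly as follows. This is a hypothetical sketch, not part of the protocol: it assumes that a network has been trained to track both ears and the nose, and the function name and example coordinates are invented. The orientation is taken as the direction of the vector from the midpoint between the ears to the nose.

```python
import math


def head_direction_deg(left_ear, right_ear, nose):
    """Angle (degrees, 0-360) of the vector from the ear midpoint to the nose.

    Angles follow image coordinates: 0 points along +x (towards the right of
    the frame) and 90 points along +y, which is downward in a video frame.
    """
    mid_x = (left_ear[0] + right_ear[0]) / 2.0
    mid_y = (left_ear[1] + right_ear[1]) / 2.0
    angle = math.degrees(math.atan2(nose[1] - mid_y, nose[0] - mid_x))
    return angle % 360.0


# Example: ears stacked vertically at x = 300, nose 20 pixels to their right,
# i.e., the head points towards the right-hand side of the frame.
direction = head_direction_deg((300, 240), (300, 260), (320, 250))
print(direction)  # 0.0
```

With the nose poke openings located along the right wall in the videos used here (Figure 7A), angles near 0 would correspond to the rat facing the openings; this mapping depends on camera orientation and should be checked for each setup.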

Disclosures

The authors have nothing to disclose.

Acknowledgements

This work was supported by grants from the Swedish Brain Foundation, the Swedish Parkinson Foundation, and the Swedish Government Funds for Clinical Research (M.A.C.), as well as the Wenner-Gren foundations (M.A.C., E.K.H.C.), Åhlén foundation (M.A.C.) and the foundation Blanceflor Boncompagni Ludovisi, née Bildt (S.F.).

Materials

| Name | Company | Catalog Number | Comments |
| --- | --- | --- | --- |
| 32 GB micro SD card with New Out Of Box Software (NOOBS) preinstalled | The Pi hut (https://thpihut.com) | 32GB | |
| 330-Ohm resistor | The Pi hut (https://thpihut.com) | 100287 | This article is for a package with mixed resistors, where 330-ohm resistors are included. |
| Camera module (Raspberry Pi NoIR camera v.2) | The Pi hut (https://thpihut.com) | 100004 | |
| Camera ribbon cable (Raspberry Pi Zero camera cable stub) | The Pi hut (https://thpihut.com) | MMP-1294 | This is only needed if a Raspberry Pi Zero is used. If another Raspberry Pi board is used, a suitable camera ribbon cable accompanies the camera component. |
| Colored LEDs | The Pi hut (https://thpihut.com) | ADA4203 | This article is for a package with mixed colors of LEDs. Any color can be used. |
| Female-Female jumper cables | The Pi hut (https://thpihut.com) | ADA266 | |
| IR LED module (Bright Pi) | Pi Supply (https://uk.pi-supply.com) | PIS-0027 | |
| Microcomputer motherboard (Raspberry Pi Zero board with presoldered headers) | The Pi hut (https://thpihut.com) | 102373 | Other Raspberry Pi boards can also be used, although the method for automatically starting the Python script only works with Raspberry Pi Zero. If using other models, the Python script needs to be started manually. |
| Push button switch | The Pi hut (https://thpihut.com) | ADA367 | |
| Raspberry Pi power supply cable | The Pi hut (https://thpihut.com) | 102032 | |
| Raspberry Pi Zero case | The Pi hut (https://thpihut.com) | 102118 | |
| Raspberry Pi, Mod my pi, camera stand with magnetic fish eye lens and magnetic metal ring attachment | The Pi hut (https://thpihut.com) | MMP-0310-KIT | |

References

- Pritchett, K., Mulder, G. B. Operant conditioning. Contemporary Topics in Laboratory Animal Science. 43 (4), (2004).

- Clemensson, E. K. H., Novati, A., Clemensson, L. E., Riess, O., Nguyen, H. P. The BACHD rat model of Huntington disease shows slowed learning in a Go/No-Go-like test of visual discrimination. Behavioural Brain Research. 359, 116-126 (2019).

- Asinof, S. K., Paine, T. A. The 5-choice serial reaction time task: a task of attention and impulse control for rodents. Journal of Visualized Experiments. (90), e51574 (2014).

- Coulbourn Instruments. Graphic State: Graphic State 4 user’s manual. Coulbourn Instruments. 12-17 (2013).

- Med Associates Inc. Med-PC IV: Med-PC IV programmer’s manual. Med Associates Inc. 21-44 (2006).

- Clemensson, E. K. H., Clemensson, L. E., Riess, O., Nguyen, H. P. The BACHD rat model of Huntington disease shows signs of fronto-striatal dysfunction in two operant conditioning tests of short-term memory. PloS One. 12 (1), (2017).

- Herremans, A. H. J., Hijzen, T. H., Welborn, P. F. E., Olivier, B., Slangen, J. L. Effect of infusion of cholinergic drugs into the prefrontal cortex area on delayed matching to position performance in the rat. Brain Research. 711 (1-2), 102-111 (1996).

- Chudasama, Y., Muir, J. L. A behavioral analysis of the delayed non-matching to position task: the effects of scopolamine, lesions of the fornix and of the prelimbic region on mediating behaviours by rats. Psychopharmacology. 134 (1), 73-82 (1997).

- Talpos, J. C., McTighe, S. M., Dias, R., Saksida, L. M., Bussey, T. J. Trial-unique, delayed nonmatching-to-location (TUNL): A novel, highly hippocampus-dependent automated touchscreen test of location memory and pattern separation. Neurobiology of Learning and Memory. 94 (3), 341 (2010).

- Rayburn-Reeves, R. M., Moore, M. K., Smith, T. E., Crafton, D. A., Marden, K. L. Spatial midsession reversal learning in rats: Effects of egocentric cue use and memory. Behavioural Processes. 152, 10-17 (2018).

- Gallo, A., Duchatelle, E., Elkhessaimi, A., Le Pape, G., Desportes, J. Topographic analysis of the rat’s behavior in the Skinner box. Behavioural Processes. 33 (3), 318-328 (1995).

- Iversen, I. H. Response-initiated imaging of operant behavior using a digital camera. Journal of the Experimental Analysis of Behavior. 77 (3), 283-300 (2002).

- Med Associates Inc. Video monitor: Video monitor SOF-842 user’s manual. Med Associates Inc. 26-30 (2004).

- Coulbourn Instruments. Available from: https://www.coulbourn.com/product_p/h39-16.htm (2020)

- Mathis, A., et al. DeepLabCut: markerless pose estimation of user-defined body parts with deep learning. Nature Neuroscience. 21 (9), 1281-1289 (2018).

- Nath, T., Mathis, A., Chen, A. C., Patel, A., Bethge, M., Mathis, M. W. Using DeepLabCut for 3D markerless pose estimation across species and behaviors. Nature Protocols. 14 (7), 2152-2176 (2019).

- Bari, A., Dalley, J. W., Robbins, T. W. The application of the 5-choice serial reaction time task for the assessment of visual attentional processes and impulse control in rats. Nature Protocols. 3 (5), 759-767 (2008).

- Raspberry Pi foundation. Available from: https://thepi.io/how-to-install-raspbian-on-the-raspberry-pi/ (2020)

- Pi-supply. Available from: https://learn.pi-supply.com/make/bright-pi-quickstart-faq/ (2018)

- Python. Available from: https://wiki.python.org/moin/BeginnersGuide/NonProgrammers (2020)

- MathWorks. Available from: https://mathworks.com/academia/highschool/ (2020)

- Cran.R-Project.org. Available from: https://cran.r-project.org/manuals.html (2020)

- Liu, Y., Tian, C., Huang, Y. Critical assessment of correction methods for fisheye lens distortion. The International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences. (2016).

- Pereira, T. D., et al. Fast animal pose estimation using deep neural networks. Nature Methods. 16 (1), 117-125 (2019).

- Graving, J. M., et al. DeepPoseKit, a software toolkit for fast and robust animal pose estimation using deep learning. Elife. 8 (47994), (2019).

- Geuther, B. Q., et al. Robust mouse tracking in complex environments using neural networks. Communications Biology. 2 (124), (2019).