VisualEyes: A Modular Software System for Oculomotor Experimentation

Summary

Neural control and cognitive processes can be studied through eye movements. The VisualEyes software allows an operator to program stimuli on two computer screens independently using a simple, custom scripting language. The system can stimulate tandem eye movements (saccades and smooth pursuit) or opposing eye movements (vergence) or any combination.

Abstract

Eye movement studies have provided a strong foundation for understanding how the brain acquires visual information in both the normal and the dysfunctional brain.1 However, developing a platform to stimulate and store eye movements can require substantial programming, time, and cost. Many systems do not offer the flexibility to program numerous stimuli for a variety of experimental needs. In contrast, the VisualEyes System has a flexible architecture, allowing the operator to choose any background and foreground stimulus, program one or two screens for tandem or opposing eye movements, and stimulate the left and right eye independently. This system can significantly reduce the programming development time needed to conduct an oculomotor study. The VisualEyes System will be discussed in three parts: 1) the oculomotor recording device used to acquire eye movement responses, 2) the VisualEyes software, written in LabVIEW, which generates an array of stimuli and stores responses as text files, and 3) offline data analysis. Eye movements can be recorded by several types of instrumentation, such as a limbus tracking system, a scleral search coil, or a video image system. Typical eye movement stimuli such as saccadic steps, vergent ramps, and vergent steps will be shown together with the corresponding responses. In this video report, we demonstrate the flexibility of a system that creates numerous visual stimuli and records eye movements, which can be used by basic scientists and clinicians to study healthy as well as clinical populations.

Protocol

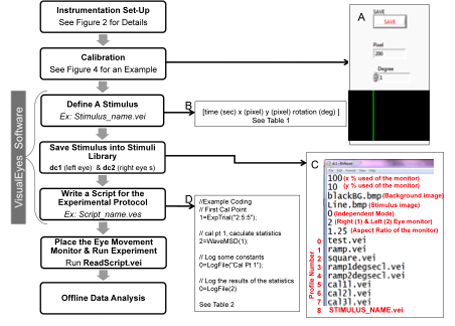

An overview of the key elements needed to conduct an oculomotor experiment is shown in figure 1. Each block in the flow chart will be discussed in detail below.

1. INSTRUMENTATION SET-UP:

- Any type of eye movement monitor can be used with this system. We will demonstrate an infrared limbus tracking system and a video monitoring system.

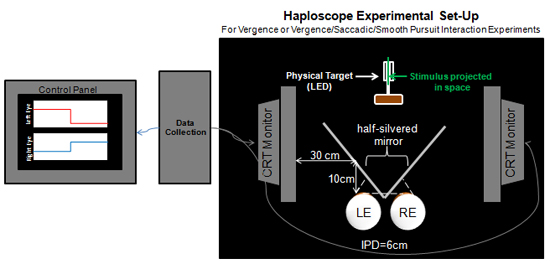

- For tandem movements such as saccades or smooth pursuit, a single computer monitor can be used for the visual display. To study opposing eye movements such as vergence, or the interaction of vergence with tandem version movements (i.e., vergent with saccadic stimuli), a haploscope with two computer monitors is needed for the visual display (see figure 2).

2. CALIBRATION:

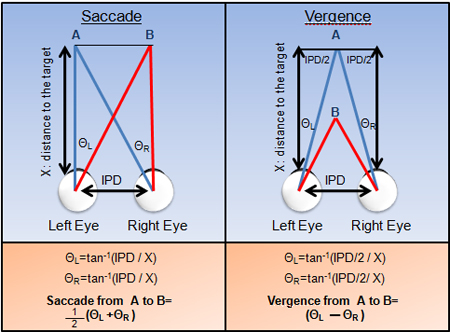

- Calibration is needed to convert one set of units into another. Eye movements are typically expressed in degrees (°) of rotation, as shown in figure 3. However, computer monitors position stimuli in pixels, whereas vision researchers usually specify visual stimuli in degrees. Hence, a conversion from pixel values to degrees is needed. One can use trigonometry to calculate where to place the physical targets used to calibrate the visual displays. For example, if the stimulus on the computer screen aligns with a physical target placed at 2° (see figure 2), then that pixel value corresponds to a 2° stimulus.

- To calibrate the system, the operator needs to open Pixel2Deg.vei within the VisualEyes directory. First, define the monitor to calibrate using the stretch mode field. Enter number 1 for the left eye monitor and number 2 for the right eye monitor. Then, run the program and move the green line stimulus until the green line is superimposed on top of the physical target. Enter the known position of the physical target in degrees and press the save button. Then, click on the green line. The degree and pixel value will be shown on the display in the bottom left corner. The operator should collect a minimum of three calibration points.

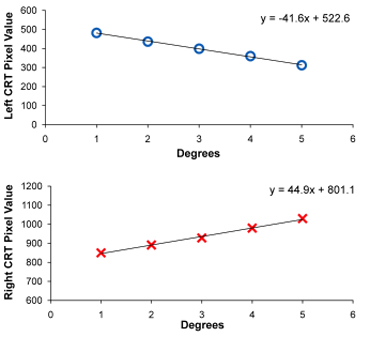

- After saving all the calibration points, open the D2P output file in the VisualEyes directory to obtain the calibration points. Fit a linear regression line to the calibration points. Use the resulting equation to convert the initial and final positions of the visual stimulus the operator wants to program into pixel values. An example of the left eye and right eye calibration curves obtained using five calibration points for a vergence stimulus is shown in figure 4.

- If the stimulus requires a second monitor, repeat steps 2.2 and 2.3 (collecting the calibration points and fitting the regression) for that monitor.
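The regression step can be sketched in Python as below. This is an illustrative ordinary least-squares fit, not the procedure used inside VisualEyes; the (degree, pixel) pairs are hypothetical stand-ins for values read from the D2P file, whose exact layout is not described here, and `fit_line` and `deg_to_pixel` are names introduced only for this example.

```python
# Sketch: fit pixel = slope * degrees + intercept by ordinary least squares,
# then convert desired stimulus positions (deg) into pixel values.

def fit_line(xs, ys):
    """Least-squares slope and intercept of y = slope * x + intercept."""
    n = len(xs)
    if n < 2 or n != len(ys):
        raise ValueError("need at least two paired points")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("calibration points must span more than one position")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def deg_to_pixel(deg, slope, intercept):
    """Pixel value (rounded to a whole pixel) for a stimulus position in degrees."""
    return round(slope * deg + intercept)


# Hypothetical calibration points for one monitor (NOT measured data).
degrees = [0.0, 2.0, 4.0, 6.0, 8.0]
pixels = [500.0, 460.0, 420.0, 380.0, 340.0]

slope, intercept = fit_line(degrees, pixels)
initial_px = deg_to_pixel(2.0, slope, intercept)  # initial stimulus position
final_px = deg_to_pixel(6.0, slope, intercept)    # final stimulus position
```

With these made-up points the fit is pixel = -20 × degrees + 500, so a 2° to 6° movement would be programmed as 460 to 380 pixels. A separate fit is needed for each monitor.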

3. VISUALEYES SOFTWARE:

- Define a Stimulus: Before the experiment, the operator needs to define the initial and final positions of the left eye and right eye stimuli. First, open a new text file and, on the first row, define the initial time and position values of the stimulus. Four parameters, separated by tabs, need to be defined: 1) time (seconds), 2) horizontal position (pixels), 3) vertical position (pixels), and 4) rotation (°). Likewise, define the four parameters for the final time and position of the stimulus. Save the stimulus in the VisualEyes directory as a stimulus_name.vei file (VEI = VisualEyes Input) and repeat this step for the other eye's stimulus.

- Stimulus movement can be one of two types: an abrupt step or a continuous ramp. A step makes the stimulus jump abruptly from the initial position to the final position. Note that for a step stimulus, the time difference between the last row at the initial position and the first row at the final position is 0.001 seconds. Additional rows define how long the stimulus resides at the initial position and at the final position. Each row contains the four fields described above. Examples of a saccadic step, smooth pursuit ramp, vergent step, and vergent ramp are shown in Table 1.

- A stimulus file can contain a single movement, such as one step, or a sequence of movements, such as multiple steps.

- Save Stimulus into Stimuli Library: The dc1.txt (left eye stimulus/monitor) and dc2.txt (right eye stimulus/monitor) files within the VisualEyes directory contain several default settings. The first line is the percentage of the screen used in the horizontal direction. The second line is the percentage of the screen used in the vertical direction. The third line is the background image and the fourth is the foreground (target) image. The fifth line sets the computer to work in independent mode. The sixth line specifies the monitor (1 is right eye and 2 is left eye). The seventh line is the aspect ratio of the monitors. The remaining lines list the stimuli the operator can use within an experimental session.

- Open dc1.txt and dc2.txt from the VisualEyes directory. These two files contain the stimulus libraries for the left eye and right eye, respectively. On the last row, write the file name of the stimulus generated in step 3.1. The profile number is the number of the row on which the stimulus file name appears. For example, in figure 1, the profile number of the stimulus is 8.

- Repeat steps 3.1 and 3.2 to create as many stimuli as the experiment requires.

- Write Script for the Experimental Protocol: Open a text file and type the experimental protocol commands. This file is called the script file because the VisualEyes System reads and executes each command in it. Creating a script file for an experimental protocol allows the user to conduct repeated experimental sessions using the same protocol. Furthermore, multiple scripts can be written to vary the type and sequence of experimental commands. Save this file in the VisualEyes directory as a script_name.ves file (VES = VisualEyes Script).

- The VisualEyes functions have input and output arguments. Table 2 shows all the functions in the VisualEyes software.

- ExpTrial: This function calls a stimulus that has been saved in the stimulus library (step 3.2). The Length of Data argument sets how long the function is allowed to execute the stimulus. The optional tempfile.lwf argument lets the VisualEyes software temporarily store the incoming data and output it into an output buffer. When tempfile.lwf is not defined, the function does not store any incoming data for digitization while it executes.

- LogFile: This function writes strings, or the buffer produced by ExpTrial, into the out.txt file in the VisualEyes directory. When the experiment is complete, the operator must rename the out.txt file; otherwise, the data will be overwritten during the next experiment.

- TriggerWait: This function waits for the subject to push a trigger button before starting ExpTrial and digitizing the data. The button is connected to a channel on the digital acquisition card, which waits for the signal to change from a digital high (5 V) to a digital low (0 V).

- RandomDelay: This function generates a random delay to prevent prediction or anticipation of the next stimulus.

- WaveMSD: This function calculates the mean and standard deviation of the data.

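The stimulus-library bookkeeping described in step 3.2 (seven settings lines, then one stimulus file name per row, with the profile number equal to the row number) can be sketched as follows. It assumes rows are counted from 1 at the top of dc1.txt or dc2.txt, which is consistent with the first stimulus after the seven settings lines having profile number 8; `add_to_library` and `add_to_library_file` are hypothetical helpers, not part of VisualEyes.

```python
from pathlib import Path

# Assumed from the text: seven settings lines precede the stimulus file names.
HEADER_LINES = 7


def add_to_library(lines, stimulus_file):
    """Return (updated_lines, profile_number) for a stimulus library.

    The profile number is the 1-based row on which the stimulus file name
    appears; a name already in the library keeps its existing row.
    """
    lines = list(lines)
    while lines and not lines[-1].strip():  # ignore trailing blank lines
        lines.pop()
    if len(lines) < HEADER_LINES:
        raise ValueError(f"expected at least {HEADER_LINES} settings lines")
    if stimulus_file in lines[HEADER_LINES:]:
        return lines, lines.index(stimulus_file, HEADER_LINES) + 1
    lines.append(stimulus_file)
    return lines, len(lines)


def add_to_library_file(library_path, stimulus_file):
    """Apply add_to_library to a library file (e.g. dc1.txt) in place."""
    path = Path(library_path)
    lines, profile = add_to_library(path.read_text().splitlines(), stimulus_file)
    path.write_text("\n".join(lines) + "\n")
    return profile
```

Keeping the list manipulation separate from the file I/O makes the numbering rule easy to check before touching the real dc1.txt and dc2.txt.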

4. PLACE THE EYE MOVEMENT MONITOR & RUN EXPERIMENT:

- Different eye movement monitors, such as a corneal reflection video imaging system, a limbus tracking system, or a scleral search coil, may be used to collect and record eye movements.

- Before a subject can participate, the experiment must be explained and the subject must read and sign an informed consent form approved by the Institutional Review Board.

- The operator must adjust the eye movement monitor on the subject. First, the subject is asked to fixate on a target. The operator adjusts the eye movement monitor to capture the anatomical attributes of the eye such as the limbus (boundary between the iris and sclera) or the pupil and corneal reflection depending on the eye movement monitor used.

- Once the eye movement monitor is properly adjusted on the subject, the operator should validate that the eye movement monitor is capturing the eye movements by asking the subject to make vergent or saccadic movements.

- Open the program ReadScript.vei in the VisualEyes directory. In the upper right corner, type the file name of the experimental protocol script file created in step 3.4. Then, run the ReadScript.vei program by pushing the red arrow in the top left corner.

- Give the subject the trigger button and explain that data collection will begin when the subject pushes the button. An Acquire.vei window will automatically appear on the screen and plot the incoming data. Data are sampled at 500 Hz.

- When the experiment is complete, ReadScript.vei will stop automatically. At this time, go into the VisualEyes directory and find the Out1.txt file. Rename the file; otherwise, it will be overwritten the next time the operator runs the experiment.
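Because the output file is overwritten by the next run, renaming can be automated. The Python sketch below is a hypothetical helper, not part of VisualEyes: it takes the file name as a parameter (the text refers to both out.txt and Out1.txt) and uses an assumed naming scheme of subject ID plus a timestamp.

```python
from datetime import datetime
from pathlib import Path


def archive_output(directory, subject_id, name="Out1.txt"):
    """Rename the output file to <subject_id>_<timestamp>_<name>; return the new path."""
    src = Path(directory) / name
    if not src.exists():
        raise FileNotFoundError(src)
    stamp = datetime.now().strftime("%Y%m%d_%H%M%S")
    dst = src.with_name(f"{subject_id}_{stamp}_{src.name}")
    if dst.exists():  # never overwrite an earlier archived session
        raise FileExistsError(dst)
    src.rename(dst)
    return dst
```

Calling `archive_output(visualeyes_dir, "S01")` right after ReadScript.vei stops (where `visualeyes_dir` is the path to the VisualEyes directory) leaves the original name free for the next session.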

5. OFF-LINE DATA ANALYSIS:

- The operator can analyze the data using different software packages (e.g., MATLAB or Excel). Depending on the study, latency, peak velocity, or amplitude may be of interest.

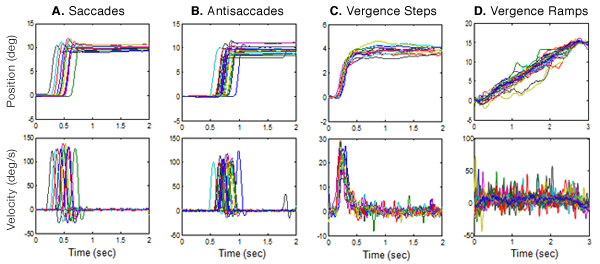

- Example MATLAB analysis code is provided in the VisualEyes directory to plot saccades, vergence steps and vergence ramps. Examples of ensemble saccade, vergence step and vergence ramp position traces with the corresponding velocity responses are shown in figure 5.
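As an illustration of the measures listed above, the sketch below computes velocity, latency, peak velocity, and amplitude from a single position trace sampled at 500 Hz. It is written in Python rather than MATLAB and is not the analysis code shipped with VisualEyes. The velocity threshold used to detect movement onset is an assumed placeholder, and amplitude is taken as the last sample minus the position at stimulus onset, which assumes the trace ends after the eye has settled.

```python
FS_HZ = 500.0  # sampling rate stated in the protocol


def velocity(position, fs=FS_HZ):
    """Velocity (deg/s) of a position trace (deg) by central differences."""
    n = len(position)
    if n < 3:
        raise ValueError("need at least three samples")
    vel = [0.0] * n
    vel[0] = (position[1] - position[0]) * fs      # forward difference at start
    vel[-1] = (position[-1] - position[-2]) * fs   # backward difference at end
    for i in range(1, n - 1):
        vel[i] = (position[i + 1] - position[i - 1]) * fs / 2.0
    return vel


def response_metrics(position, stim_onset_s, threshold=30.0, fs=FS_HZ):
    """Return (latency_s, peak_velocity, amplitude), or None if no movement.

    Onset is the first sample at or after stimulus onset whose absolute
    velocity exceeds `threshold` (deg/s). The 30 deg/s default is a
    placeholder; slow responses such as vergence ramps need a lower value.
    """
    vel = velocity(position, fs)
    start = int(round(stim_onset_s * fs))
    onset = next((i for i in range(start, len(vel)) if abs(vel[i]) > threshold), None)
    if onset is None:
        return None
    latency_s = (onset - start) / fs
    peak_velocity = max(vel[onset:], key=abs)       # signed value with largest magnitude
    amplitude = position[-1] - position[start]      # final minus pre-movement position
    return latency_s, peak_velocity, amplitude
```

In practice the position trace would be filtered and blinks removed before differentiation, since differentiation amplifies noise.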

6. Representative Results:

Examples of ensembles of eye movements recorded using the VisualEyes System are shown in Figure 5. Typical 10° saccadic movements are shown in Figure 5A. Antisaccades are saccadic responses made when the subject is told to look in the direction opposite the visual stimulus; they are shown in Figure 5B. Because this task is more cognitively demanding, the latency (the time to begin the movement) is longer for antisaccades (Figure 5B) than for saccades toward a visual stimulus, also called prosaccades (Figure 5A). Vergence responses to 4° steps are shown in Figure 5C, and vergence responses to 5 °/s ramp stimuli are shown in Figure 5D. Each trace is an individual eye movement. The upper row shows position in degrees as a function of time. Eye movements can be calibrated in units of degrees, meter angles, or prism diopters; our research uses degrees of rotation. The bottom row shows velocity, the speed of the movement, in °/s as a function of time. The scale of each ensemble differs depending on the movement.

Figure 1. Flow chart of key elements to conduct an oculomotor experiment. Examples of steps needed to generate a stimulus using the VisualEyes software and conduct an experiment for offline data analysis are shown. Part A shows the Pixel2Deg.vei window. Part B demonstrates the four parameters needed to define a stimulus. Part C is the stimuli library where the black text lines within the stimulus library are example stimuli files and the red text defines each line. Part D is an example of an experimental script protocol.

Figure 2. VisualEyes System Haploscope Experimental Set-Up. Three CRT monitors are used: 1) a control panel monitor to view stimuli and responses, 2) a CRT monitor for the right eye (RE) visual stimuli, and 3) a CRT monitor for the left eye (LE) visual stimuli. A half-silvered mirror (50% transmission, 50% reflectance) is placed 30 cm from the two visual stimulus CRT monitors so that the stimuli on the monitors are projected onto the mirror. The mirror allows the subject to view stimuli from the computer screens superimposed onto physical targets located at measured distances from the subject, which is needed for calibration. With a haploscope, the accommodative demand to both eyes is held constant. The distance between the subject's eyes and the mirror is 10 cm. The system can be adjusted for different inter-pupillary distances (IPD), but for this demonstration we assume an IPD of 6 cm.

Figure 3. Calculations of Saccadic (left) and Vergent (right) movement from targets A to B are shown. IPD is the inter-pupillary distance.
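The trigonometry for placing physical targets can be sketched as follows. Since Figure 3 is not reproduced here, this assumes the simplest geometry: a saccadic target displaced sideways on a frontal plane at a known distance, and a vergence target on the midline viewed by two eyes separated by the IPD (6 cm, as assumed in Figure 2). Mirror path lengths in the haploscope are ignored, and the function names are hypothetical.

```python
import math

IPD_CM = 6.0  # inter-pupillary distance assumed in the Figure 2 demonstration


def saccade_angle_deg(offset_cm, distance_cm):
    """Rotation (deg) to fixate a target offset_cm sideways on a plane distance_cm away."""
    return math.degrees(math.atan2(offset_cm, distance_cm))


def saccade_target_offset_cm(angle_deg, distance_cm):
    """Sideways offset at which to place a physical target for a given rotation."""
    return distance_cm * math.tan(math.radians(angle_deg))


def vergence_angle_deg(distance_cm, ipd_cm=IPD_CM):
    """Vergence angle (deg) for a midline target distance_cm from the eyes."""
    return 2.0 * math.degrees(math.atan2(ipd_cm / 2.0, distance_cm))


def vergence_target_distance_cm(angle_deg, ipd_cm=IPD_CM):
    """Midline distance at which a target demands angle_deg of vergence."""
    return (ipd_cm / 2.0) / math.tan(math.radians(angle_deg) / 2.0)
```

Under these assumptions, a 4° vergence demand corresponds to a midline target roughly 86 cm from the eyes, and each eye rotates by half of the vergence angle.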

Figure 4. Calibration curve of the left eye (top plot) and right eye (bottom plot) stimulus. A similar procedure would be conducted for saccadic or smooth pursuit stimuli.

Figure 5. Examples of saccades (A), antisaccades (B), vergence steps (C), and vergence ramps (D) recorded using the VisualEyes system and analyzed using a custom MATLAB program. Ensemble position traces (° as a function of time in sec) are plotted in the upper row, where each colored line represents a different eye movement. The corresponding velocity traces (°/s as a function of time in sec) are plotted in the lower row.

Left eye (LE) columns are the contents of Stimulus_Name_Left_Eye.vei; right eye (RE) columns are the contents of Stimulus_Name_Right_Eye.vei.

| Stimulus Type | LE Time (s) | LE x-position (pixel) | LE y-position (pixel) | LE Rotation (°) | RE Time (s) | RE x-position (pixel) | RE y-position (pixel) | RE Rotation (°) |
|---|---|---|---|---|---|---|---|---|
| Smooth Pursuit Ramp | 0 | 100 | 0 | 0 | 0 | 100 | 0 | 0 |
| | 10 | 200 | 0 | 0 | 10 | 200 | 0 | 0 |
| Saccade Step | 0 | 100 | 0 | 0 | 0 | 100 | 0 | 0 |
| | 0.5 | 100 | 0 | 0 | 0.5 | 100 | 0 | 0 |
| | 0.501 | 200 | 0 | 0 | 0.501 | 200 | 0 | 0 |
| | 3 | 200 | 0 | 0 | 3 | 200 | 0 | 0 |
| Vergence Ramp | 0 | 452 | 0 | 0 | 0 | 973 | 0 | 0 |
| | 10 | 370 | 0 | 0 | 10 | 1044 | 0 | 0 |
| Vergence Step | 0 | 452 | 0 | 0 | 0 | 973 | 0 | 0 |
| | 0.5 | 452 | 0 | 0 | 0.5 | 973 | 0 | 0 |
| | 0.501 | 416 | 0 | 0 | 0.501 | 1002 | 0 | 0 |
| | 3 | 416 | 0 | 0 | 3 | 1002 | 0 | 0 |

Table 1. An Example of Smooth Pursuit Ramp, Saccadic Step, Vergence Ramp and Vergence Step Stimuli
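Stimulus files in the format of Table 1 can also be generated programmatically. The sketch below assumes only what the protocol states: one row per time point with time (s), x-position (pixel), y-position (pixel), and rotation (°) separated by tabs, and a 0.001 s gap for a step. How VisualEyes moves the stimulus between rows is not specified here, and the function names are hypothetical.

```python
def multi_step_rows(x_start, steps, t_end, y=0, rotation=0, dt=0.001):
    """Rows for one or more steps.

    `steps` is a list of (jump_time_s, new_x). Before each jump the stimulus
    holds its previous position; the new position starts dt seconds later.
    """
    rows = [(0, x_start, y, rotation)]
    x = x_start
    for t_jump, x_new in steps:
        rows.append((t_jump, x, y, rotation))
        rows.append((round(t_jump + dt, 6), x_new, y, rotation))
        x = x_new
    rows.append((t_end, x, y, rotation))
    return rows


def step_rows(x0, x1, t_step, t_end, y=0, rotation=0):
    """Single step, as in the saccade and vergence step rows of Table 1."""
    return multi_step_rows(x0, [(t_step, x1)], t_end, y, rotation)


def ramp_rows(x0, x1, t_end, y=0, rotation=0):
    """Ramp from x0 at t = 0 to x1 at t_end, as in the ramp rows of Table 1."""
    return [(0, x0, y, rotation), (t_end, x1, y, rotation)]


def to_vei(rows):
    """Tab-separated text: time (s), x (pixel), y (pixel), rotation (deg)."""
    return "".join("\t".join(f"{v:g}" for v in row) + "\n" for row in rows)


def write_vei(path, rows):
    """Write rows to a .vei stimulus file."""
    with open(path, "w") as f:
        f.write(to_vei(rows))
```

For example, `write_vei("vergence_step_LE.vei", step_rows(452, 416, 0.5, 3))` would reproduce the left eye vergence step columns of Table 1 (the file name is only an example).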

| Function | Syntax | Example |
|---|---|---|
| ExpTrial | Output Buffer # = ExpTrial("Length of Data:LE Profile:RE Profile"); | 2=ExpTrial("13:3:3"); |
| ExpTrial (with temporary data file) | Output Buffer # = ExpTrial("Length of Data:LE Profile:RE Profile:tempfile.lwf"); | 2=ExpTrial("13:3:3:tempfile.lwf"); |
| LogFile (text) | Output Buffer # = LogFile("TEXT"); | 0=LogFile("Experiment 1"); |
| LogFile (buffer) | 0=LogFile(Input Buffer #); | 0=LogFile(2); |
| TriggerWait | 0=TriggerWait(Buffer Number); | 0=TriggerWait(0); |
| RandomDelay | 0=RandomDelay("t2:t1"); | 0=RandomDelay("2000:500"); |
| WaveMSD | Output Buffer # = WaveMSD(Input Buffer #); | |

Table 2. Functions Used to Write the Experimental Protocol in the VisualEyes Program
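A script file can be assembled from the Table 2 syntax with a few string templates. The trial structure below (log a label, wait for the trigger, run the stimulus, log the buffer, then a random delay) is a hypothetical protocol for illustration, and it assumes one command per line; only the command forms themselves come from Table 2.

```python
def exp_trial(out_buffer, length, le_profile, re_profile, tempfile=None):
    """ExpTrial command in the Table 2 syntax, optionally with a temp file."""
    args = [str(length), str(le_profile), str(re_profile)]
    if tempfile is not None:
        args.append(tempfile)
    joined = ":".join(args)
    return f'{out_buffer}=ExpTrial("{joined}");'


def build_script(n_trials, length, le_profile, re_profile,
                 delay="2000:500", title="Experiment 1"):
    """Hypothetical protocol: a title line, then five commands per trial."""
    lines = [f'0=LogFile("{title}");']
    for trial in range(1, n_trials + 1):
        lines.append(f'0=LogFile("Trial {trial}");')
        lines.append("0=TriggerWait(0);")  # wait for the subject's button press
        lines.append(exp_trial(2, length, le_profile, re_profile, "tempfile.lwf"))
        lines.append("0=LogFile(2);")       # write buffer 2 to the output file
        lines.append(f'0=RandomDelay("{delay}");')
    return "\n".join(lines) + "\n"
```

The returned text would then be saved as, e.g., script_name.ves in the VisualEyes directory; generating scripts this way makes it easy to vary the number of trials or the profile numbers between sessions.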

Discussion

Critical Steps:

Eye movement monitors must be properly adjusted on the subject. Eye movement recording monitors work within a limited range, so if the subject's eye movement goes beyond that range, the system becomes saturated and the eye movement signal is no longer valid. Calibration is also critical in eye movement recording. All eye movement monitors measure an analog signal that is digitized and must be converted to units commonly used in eye movement research, such as degrees of rotation. Assessing the linearity of the system with three or more calibration points is also important to determine whether the signal can be converted into degrees using a simple linear transform or needs a more complex transformation. Finally, when a haploscope is used, the computer monitors and the physical targets must be placed properly so that the visual stimuli on the computer screens align with the targets.
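Two of these checks can be automated after a session. The sketch below computes the coefficient of determination (R²) of a straight-line fit to the calibration points and lists samples that sit at the recorder's output limits. The 0.99 R² cutoff and the limit values are assumptions to be set for a given instrument, not VisualEyes parameters.

```python
def r_squared(xs, ys):
    """R^2 of the least-squares line through (xs, ys), i.e. Pearson r squared."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("calibration values must vary")
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    return (sxy * sxy) / (sxx * syy)


def check_calibration(degrees, signal, min_points=3, min_r2=0.99):
    """Return (is_linear, r2); require at least `min_points` calibration points."""
    if len(degrees) < min_points or len(degrees) != len(signal):
        raise ValueError(f"need at least {min_points} paired calibration points")
    r2 = r_squared(degrees, signal)
    return r2 >= min_r2, r2


def saturated_samples(signal, low, high):
    """Indices of samples at or beyond the recorder's lower or upper limit."""
    return [i for i, s in enumerate(signal) if s <= low or s >= high]
```

A low R² suggests that a nonlinear calibration (for example, a polynomial fit) may be needed, and any saturated samples mark trials whose signal should not be trusted.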

Instructions to the subjects are also essential. For example, with video or limbus tracking systems a blink results in signal loss; however, the operator cannot ask a subject not to blink for a long duration. Telling the subject when to look at a new target helps the subject avoid blinking during data collection. The importance of instructions is also illustrated by prosaccade versus antisaccade experiments: for prosaccades the subject looks at the target, whereas in an antisaccade experiment the subject looks in the direction opposite the stimulus target.

Possible Modifications:

The strength of the VisualEyes system is its flexibility. Several groups have published custom software for presenting saccadic stimuli.2,3,4,5 However, there are many other types of oculomotor studies that one may want to pursue, such as smooth pursuit or vergence movements. The VisualEyes System allows each monitor to be programmed independently, so that the operator can program saccadic, smooth pursuit, or vergent stimuli, or any combination of the three (saccadic with vergent stimuli, for example). The background is a static image that currently does not move, but the next generation of the VisualEyes software will allow the background image to move. The foreground image can be moved horizontally or vertically, or rotated. The default image is a line, but it can be changed to a difference of Gaussians (DOG) stimulus, used to further reduce the accommodative stimulus, or to any other image. Furthermore, the ability to program the computer screens independently provides additional flexibility. For example, phoria is routinely measured as a clinical parameter, but one may want to record it with an eye movement monitor. Phoria is the resting position of an occluded eye while the other eye views a stimulus. We have validated this method of measuring phoria using the VisualEyes System.6,7,8

Applications and Significance:

Eye movement research can provide significant information to basic scientists and clinicians. It can also be a tool to monitor neurological disorders ranging from traumatic brain injury9 to muscular dystrophy,10 Alzheimer's disease,11 and schizophrenia.12 It can provide insight into motor learning,13 attention mechanisms,14 and memory,15 to name a few applications. Furthermore, eye movement research benefits from a strong neurophysiological foundation built on single-cell recordings in nonhuman primates1 and can be coupled with functional MRI to study brain function simultaneously and to understand visual networks, connectivity, and interactions.16

Disclosures

The authors have nothing to disclose.

Acknowledgements

This work was supported in part by a CAREER award from the National Science Foundation (BES-0447713) and by a grant from Essilor International.

References

- Leigh, R. J., Zee, D. S. The Neurology of Eye Movements. (2006).

- Pruehsner, W. R., Enderle, J. D. The operating version of the Eye Tracker, a system to measure saccadic eye movements. Biomed Sci Instrum. 38, 113-118 (2002).

- Pruehsner, W. R., Liebler, C. M., Rodriguez-Campos, F., Enderle, J. D. The Eye Tracker System–a system to measure and record saccadic eye movements. Biomed Sci Instrum. 39, 208-213 (2003).

- Rufa, A. Video-based eye tracking: our experience with Advanced Stimuli Design for Eye Tracking software. Ann N Y Acad Sci. 1039, 575-579 (2005).

- Cornelissen, F. W., Peters, E. M., Palmer, J. The Eyelink Toolbox: eye tracking with MATLAB and the Psychophysics Toolbox. Behav Res Methods Instrum Comput. 34, 613-617 (2002).

- Han, S. J., Guo, Y., Granger-Donetti, B., Vicci, V. R., Alvarez, T. L. Quantification of heterophoria and phoria adaptation using an automated objective system compared to clinical methods. Ophthalmic Physiol Opt. 30, 95-107 (2010).

- Kim, E. H., Granger-Donetti, B., Vicci, V. R., Alvarez, T. L. The Relationship between Phoria and the Ratio of Convergence Peak Velocity to Divergence Peak Velocity. Invest Ophthalmol Vis Sci. (2010).

- Lee, Y. Y., Granger-Donetti, B., Chang, C., Alvarez, T. L. Sustained convergence induced changes in phoria and divergence dynamics. Vision Res. 49, 2960-2972 (2009).

- Maruta, J., Suh, M., Niogi, S. N., Mukherjee, P., Ghajar, J. Visual tracking synchronization as a metric for concussion screening. J Head Trauma Rehabil. 25, 293-305 (2010).

- Osanai, R., Kinoshita, M., Hirose, K. Eye movement disorders in myotonic dystrophy type 1. Acta Otolaryngol Suppl. 78-84 (2007).

- Kaufman, L. D., Pratt, J., Levine, B., Black, S. E. Antisaccades: a probe into the dorsolateral prefrontal cortex in Alzheimer’s disease. A critical review. J Alzheimers Dis. 19, 781-793 (2010).

- Hannula, D. E. Use of Eye Movement Monitoring to Examine Item and Relational Memory in Schizophrenia. Biol Psychiatry. (2010).

- Schubert, M. C., Zee, D. S. Saccade and vestibular ocular motor adaptation. Restor Neurol Neurosci. 28, 9-18 (2010).

- Noudoost, B., Chang, M. H., Steinmetz, N. A., Moore, T. Top-down control of visual attention. Curr Opin Neurobiol. 20, 183-190 (2010).

- Herwig, A., Beisert, M., Schneider, W. X. On the spatial interaction of visual working memory and attention: evidence for a global effect from memory-guided saccades. J Vis. 10 (2010).

- McDowell, J. E., Dyckman, K. A., Austin, B. P., Clementz, B. A. Neurophysiology and neuroanatomy of reflexive and volitional saccades: evidence from studies of humans. Brain Cogn. 68, 255-270 (2008).