An Open-Source Virtual Reality System for the Measurement of Spatial Learning in Head-Restrained Mice

Summary

Here, we present a simplified open-source hardware and software setup for investigating mouse spatial learning using virtual reality (VR). This system displays a virtual linear track to a head-restrained mouse running on a wheel by utilizing a network of microcontrollers and a single-board computer running an easy-to-use Python graphical software package.

Abstract

Head-restrained behavioral experiments in mice allow neuroscientists to observe neural circuit activity with high-resolution electrophysiological and optical imaging tools while delivering precise sensory stimuli to a behaving animal. Recently, human and rodent studies using virtual reality (VR) environments have shown VR to be an important tool for uncovering the neural mechanisms underlying spatial learning in the hippocampus and cortex, due to the extremely precise control over parameters such as spatial and contextual cues. Setting up virtual environments for rodent spatial behaviors can, however, be costly and require an extensive background in engineering and computer programming. Here, we present a simple yet powerful system based upon inexpensive, modular, open-source hardware and software that enables researchers to study spatial learning in head-restrained mice using a VR environment. This system uses coupled microcontrollers to measure locomotion and deliver behavioral stimuli while head-restrained mice run on a wheel in concert with a virtual linear track environment rendered by a graphical software package running on a single-board computer. The emphasis on distributed processing allows researchers to design flexible, modular systems to elicit and measure complex spatial behaviors in mice in order to determine the connection between neural circuit activity and spatial learning in the mammalian brain.

Introduction

Spatial navigation is an ethologically important behavior by which animals encode the features of new locations into a cognitive map, which is used for finding areas of possible reward and avoiding areas of potential danger. Inextricably linked with memory, the cognitive processes underlying spatial navigation share a neural substrate in the hippocampus1 and cortex, where neural circuits in these areas integrate incoming information and form cognitive maps of environments and events for later recall2. While the discovery of place cells in the hippocampus3,4 and grid cells in the entorhinal cortex5 has shed light on how the cognitive map within the hippocampus is formed, many questions remain about how specific neural subtypes, microcircuits, and individual subregions of the hippocampus (the dentate gyrus, and cornu ammonis areas, CA3-1) interact and participate in spatial memory formation and recall.

In vivo two-photon imaging has been a useful tool in uncovering cellular and population dynamics in sensory neurophysiology6,7; however, the typical necessity for head restraint limits the utility of this method for examining mammalian spatial behavior. The advent of virtual reality (VR)8 has addressed this shortcoming by presenting immersive and realistic visuospatial environments while head-restrained mice run on a ball or treadmill to study spatial and contextual encoding in the hippocampus8,9,10 and cortex11. Furthermore, the use of VR environments with behaving mice has allowed neuroscience researchers to dissect the components of spatial behavior by precisely controlling the elements of the VR environment12 (e.g., visual flow, contextual modulation) in ways not possible in real-world experiments of spatial learning, such as the Morris water maze, Barnes maze, or hole board tasks.

Visual VR environments are typically rendered on the graphical processing unit (GPU) of a computer, which handles the load of rapidly computing the thousands of polygons necessary to model a moving 3D environment on a screen in real time. The large processing requirements generally require the use of a separate PC with a GPU that renders the visual environment to a monitor, multiple screens13, or a projector14 as the movement is recorded from a treadmill, wheel, or foam ball under the animal. The resulting apparatus for controlling, rendering, and projecting the VR environment is, therefore, relatively expensive, bulky, and cumbersome. Furthermore, many such environments in the literature have been implemented using proprietary software that is both costly and can only be run on a dedicated PC.

For these reasons, we have designed an open-source VR system to study spatial learning behaviors in head-restrained mice using a Raspberry Pi single-board computer. This Linux computer is both small and inexpensive yet contains a GPU chip for 3D rendering, allowing the integration of VR environments with the display or behavioral apparatus in varied individual setups. Furthermore, we have developed a graphical software package written in Python, "HallPassVR", which utilizes the single-board computer to render a simple visuospatial environment, a virtual linear track or hallway, by recombining custom visual features selected using a graphical user interface (GUI). This is combined with microcontroller subsystems (e.g., ESP32 or Arduino) to measure locomotion and coordinate behavior, such as by the delivery of other modalities of sensory stimuli or rewards to facilitate reinforcement learning. This system provides an inexpensive, flexible, and easy-to-use alternative method for delivering visuospatial VR environments to head-restrained mice during two-photon imaging (or other techniques requiring head fixation) for studying the neural circuits underlying spatial learning behavior.

Protocol

All procedures in this protocol were approved by the Institutional Animal Care and Use Committee of the New York State Psychiatric Institute.

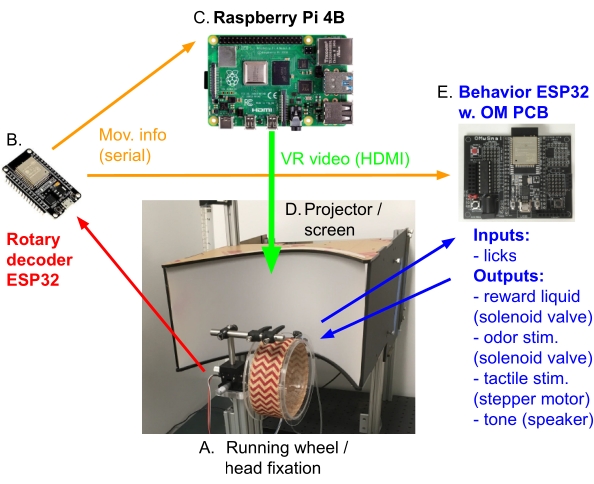

NOTE: A single-board computer is used to display a VR visual environment coordinated with the running of a head-restrained mouse on a wheel. Movement information is received as serial input from an ESP32 microcontroller reading a rotary encoder coupled to the wheel axle. The VR environment is rendered using OpenGL hardware acceleration on the Raspberry Pi GPU, which utilizes the pi3d Python 3D package for Raspberry Pi. The rendered environment is then output via a projector onto a compact wraparound parabolic screen centered on the head-restrained mouse's visual field15,16, while the behavior (e.g., licking in response to spatial rewards) is measured by a second behavior ESP32 microcontroller. The graphical software package enables the creation of virtual linear track environments consisting of repeated patterns of visual stimuli along a virtual corridor or hallway with a graphical user interface (GUI). This design is easily parameterized, thus allowing the creation of complex experiments aimed at understanding how the brain encodes places and visual cues during spatial learning (see section 4). Designs for the custom hardware components necessary for this system (i.e., the running wheel, projection screen, and head-restraint apparatus) are deposited in a public GitHub repository (https://github.com/GergelyTuri/HallPassVR). It is recommended to read the documentation of that repository along with this protocol, as the site will be updated with future enhancements of the system.

1. Hardware setup: Construction of the running wheel, projection screen, and head-fixation apparatus

NOTE: The custom components for these setups can be easily manufactured if the user has access to 3D-printing and laser-cutting equipment or may be outsourced to professional manufacturing or 3D prototyping services (e.g., eMachineShop). All the design files are provided as .STL 3D files or .DXF AutoCAD files.

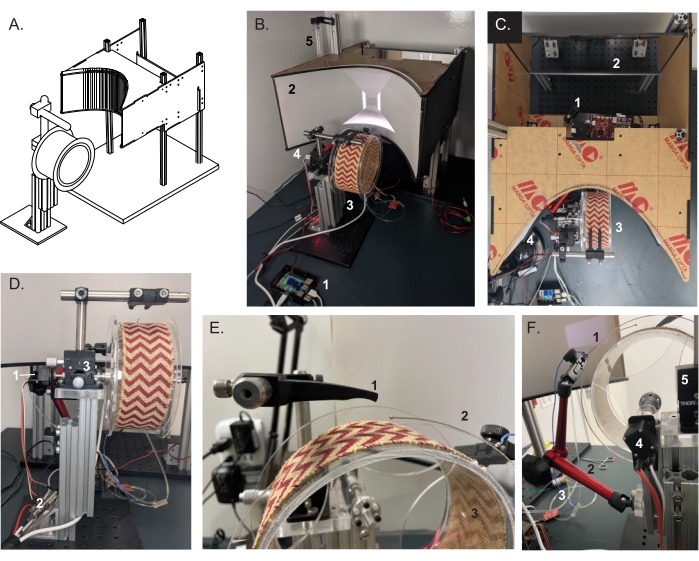

- Running wheel and behavioral setup (Figure 1)

NOTE: The wheel consists of a clear acrylic cylinder (6 in diameter, 3 in width, 1/8 in thickness) centered on an axle suspended from laser-cut acrylic mounts via ball bearings. The wheel assembly is then mounted to a lightweight aluminum frame (t-slotted) and securely fastened to an optical breadboard (Figure 1C-E).

- Laser-cut the sides of the wheel and axle mounts from a 1/4 in acrylic sheet, and attach the wheel sides to the acrylic cylinder with acrylic cement. Screw the axle flange into the center of the wheel side piece.

- Insert the axle into the wheel center flange, snap the ball bearings into the axle mounts, and attach them to the vertical aluminum support bar.

- Insert the wheel axle into the mounted ball bearings, leaving 0.5-1 inch of the axle past the bearings for the attachment of the rotary encoder.

- Attach the rotary encoder mount to the end of the axle opposite the wheel, and insert the rotary encoder; then, use the shaft coupler to couple the wheel axle to the rotary encoder shaft.

- Attach the lick port to the flex arm, and then affix to the aluminum wheel frame with t-slot nuts. Use 1/16 inch tubing to connect the lick port to the solenoid valve and the valve to the water reservoir.

NOTE: The lick port must be made of metal with a wire soldered to attach it to the capacitive touch sensing pins of the behavior ESP32.

- Projection screen

NOTE: The VR screen is a small parabolic rear-projection screen (canvas size: 54 cm x 21.5 cm) based on a design developed in Christopher Harvey's laboratory15,16. The projection angle (keystone) of the LED projector used is different from that of the laser projector used previously; thus, the original design is slightly modified by mounting the unit under the screen and simplifying the mirror system (Figure 1A, B). Reading the Harvey lab's documentation along with ours is highly recommended to tailor the VR environment to the user's needs15.

- Laser-cut the projection screen sides from 1/4 in black matte acrylic sheets. Laser-cut the back projection mirror from 1/4 in mirrored acrylic.

- Assemble the projection screen frame with the aluminum bars, and laser-cut the acrylic panels.

- Insert the translucent projection screen material into the parabolic slot in the frame. Insert the rear projection mirror into the slot in the back of the projection screen frame.

- Place an LED projector on the bottom mounting plate inside the projection screen frame. Align the projector with mounting bolts to optimize the positioning of the projected image on the parabolic rear projection screen.

- Seal the projector box unit to prevent light contamination of the optical sensors if necessary.

- Head-restraint apparatus

NOTE: This head-restraint apparatus design consists of two interlocking 3D-printed manifolds for securing a metal head post (Figure 1E, F).

- Using a high-resolution SLM 3D printer, 3D print the head post holding arms.

NOTE: Resin-printed plastic is able to provide stable head fixation for behavior experiments; however, to achieve maximum stability for sensitive applications like single-cell recording or two-photon imaging, it is recommended to use machined metal parts (e.g., eMachineShop).

- Install the 3D-printed head post holder onto a dual-axis goniometer with optical mounting posts so that the animal's head can be tilted to level the preparation.

NOTE: This feature is indispensable for long-term in vivo imaging experiments when finding the same cell population in subsequent imaging sessions is required. Otherwise, this feature can be omitted to reduce the cost of the setup.

- Fabricate the head posts.

NOTE: Two types of head posts with different complexity (and price) are deposited in the link provided in the Table of Materials along with these instructions.

- Depending on the experiment type, decide which head post to implement. The head bars are made of stainless steel and are generally outsourced to any local machine shop or online service (e.g., eMachineShop) for manufacturing.

2. Setup of the electronics hardware/software (single board computer, ESP32 microcontrollers, Figure 2)

- Configure the single-board computer.

NOTE: The single-board computer included in the Table of Materials (Raspberry Pi 4B) is optimal for this setup because it has an onboard GPU to facilitate VR environment rendering and two HDMI ports for experiment control/monitoring and VR projection. Other single-board computers with these characteristics may potentially be substituted, but some of the following instructions may be specific to Raspberry Pi.

- Download the single-board computer imager application to the PC, and install the OS (currently Raspberry Pi OS r.2021-05-07) on the microSD card (16+ GB). Insert the card, and boot the single-board computer.

- Configure the single-board computer for the pi3d Python 3D library: (menu bar) Preferences > Raspberry Pi Configuration.

- Click on Display > Screen Blanking > Disable.

- Click on Interfaces > Serial Port > Enable.

- Click on Performance > GPU Memory > 256 (MB).

- Upgrade the Python image library package for pi3d: (terminal)> sudo pip3 install pillow --upgrade.

- Install the pi3d Python 3D package for the single board computer: (terminal)> sudo pip3 install pi3d.

- Increase the HDMI output level for the projector: (terminal)> sudo nano /boot/config.txt, uncomment config_hdmi_boost=4, save, and reboot.

- Download and install the integrated development environment (IDE) from arduino.cc/en/software (e.g., arduino-1.8.19-linuxarm.tar.gz), which is needed to load the code onto the rotary encoder and the behavior ESP32 microcontrollers.

- Install ESP32 microcontroller support on the IDE:

- Click on File > Preferences > Additional Board Manager URLs = https://raw.githubusercontent.com/espressif/arduino-esp32/gh-pages/package_esp32_index.json

- Click on Tools > Boards > Boards Manager > ESP32 (by Espressif). Install v.2.0.0 (upload currently fails on v2.0.4).

- Download and install the Processing IDE from https://github.com/processing/processing4/releases (e.g., processing-4.0.1-linux-arm32.tgz), which is necessary for the recording and online plotting of the mouse behavior during VR.

NOTE: The Arduino and Processing environments may be run on a separate PC from the VR single-board computer if desired.
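
Before moving on, it can help to confirm that the Python packages installed in the steps above are visible to the interpreter. The following is a minimal sketch and is not part of the HallPassVR distribution; the helper name `check_packages` is hypothetical, and the only assumptions are that pi3d imports as `pi3d` and that Pillow imports as `PIL`.

```python
import importlib.util


def check_packages(names):
    """Return {module_name: bool} telling whether each top-level module can be located."""
    return {name: importlib.util.find_spec(name) is not None for name in names}


if __name__ == "__main__":
    # pi3d and Pillow (imported as PIL) are the two packages installed in this section.
    for module, found in check_packages(["pi3d", "PIL"]).items():
        print(f"{module}: {'found' if found else 'MISSING'}")
```

If either package is reported as missing, repeating the corresponding pip3 installation step is a reasonable first thing to try.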

- Set up the rotary encoder ESP32 connections.

NOTE: The rotary encoder coupled to the wheel axle measures the wheel rotation with mouse locomotion, which is counted with an ESP32 microcontroller. The position changes are then sent to the single-board computer GPIO serial port to control the movement through the virtual environment using the graphical software package, as well as to the behavior ESP32 to control the reward zones (Figure 2).

- Connect the wires between the rotary encoder component and the rotary ESP32. Rotary encoders generally have four wires: +, GND, A and B (two digital lines for quadrature encoders). Connect these via jumper wires to ESP32 3.3 V, GND, 25, 26 (in the case of the attached code).

- Connect the serial RX/TX wires between the rotary ESP32 and the behavior ESP32. Make a simple two-wire connection between the rotary ESP32 Serial0 RX/TX (receive/transmit) and the Serial2 port of the behavior ESP32 (TX/RX, pins 17, 16; see Serial2 port on the right of OMwSmall PCB). This will carry movement information from the rotary encoder to the behavior setup for spatial zones such as reward zones.

- Connect the serial RX/TX wires between the rotary ESP32 and the single-board computer GPIO (or direct USB connection). Make a two-wire connection between the single-board computer GPIO pins 14, 15 (RX/TX) and the rotary ESP32 Serial2 (TX/RX, pins 17, 16). This will carry movement information from the rotary encoder to the graphical software package running on the single-board computer.

NOTE: This step is only necessary if the rotary ESP32 is not connected via USB (i.e., it uses the GPIO serial connection at "/dev/ttyS0"). If the rotary ESP32 is connected via USB instead, the HallPassVR_wired.py code must be modified to use "/dev/ttyUSB0". This hardwired connection will be replaced with a wireless Bluetooth connection in future versions.

- Plug the rotary ESP32 USB into the single-board computer USB (or other PC running the IDE) to upload the initial rotary encoder code.
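
For readers unfamiliar with quadrature encoders, the way the two digital lines (A and B) encode both the amount and the direction of rotation can be illustrated in a few lines of Python. This is a conceptual sketch only: the actual counting is done by the firmware on the rotary ESP32 (RotaryEncoder_Esp32_VR.ino), which may be implemented differently. The function name `decode_quadrature` and the choice of which rotation direction counts as positive are assumptions made for illustration.

```python
# Transition table indexed by (previous_state << 2) | current_state,
# where state = (A << 1) | B. A valid single-bit step gives +1 or -1;
# no change, or an invalid jump in which both bits change, gives 0.
_QUAD_TABLE = (
    0, +1, -1, 0,
    -1, 0, 0, +1,
    +1, 0, 0, -1,
    0, -1, +1, 0,
)


def decode_quadrature(samples):
    """Sum signed counts from an iterable of (A, B) logic-level pairs."""
    count = 0
    prev = None
    for a, b in samples:
        state = ((a & 1) << 1) | (b & 1)
        if prev is not None:
            count += _QUAD_TABLE[(prev << 2) | state]
        prev = state
    return count


if __name__ == "__main__":
    one_cycle = [(0, 0), (0, 1), (1, 1), (1, 0), (0, 0)]
    print(decode_quadrature(one_cycle))        # one full cycle in one direction
    print(decode_quadrature(one_cycle[::-1]))  # the same cycle in reverse
```

With this table, one full A/B cycle yields four counts, and reversing the sample order flips the sign, which is how a quadrature encoder distinguishes forward from backward wheel movement.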

- Set up the behavior ESP32 connections with the behavioral hardware (via OpenMaze PCB).

NOTE: The behavior ESP32 microcontroller will control all the non-VR animal interactions (delivering non-VR stimuli and rewards, detecting mouse licks), which are connected through a general PCB "breakout board" for the ESP32, "OMwSmall", designs of which are available through the OpenMaze website (www.openmaze.org). The PCB contains the electronic components necessary for driving the electromechanical components, such as the solenoid valves used to deliver liquid rewards.

- Connect the 12 V liquid solenoid valve to the ULN2803 IC output on the far left of the OMwSmall PCB (pin 12 in the example setup and code). This IC gates 12 V power to the reward solenoid valve, controlled by a GPIO output on the behavior ESP32 microcontroller.

- Connect the lick port to the ESP32 touch input (e.g., T0, GPIO4 in the example code). The ESP32 has built-in capacitive touch sensing on specific pins, which the behavior ESP32 code uses to detect the mouse's licking of the attached metal lick port during the VR behavior.

- Connect the serial RX/TX wires between the behavior ESP32 Serial2 (pins 16, 17) and rotary encoder ESP32 Serial0 (see step 2.2.2).

- Plug the USB into the single-board computer's USB port (or other PC) to upload new programs to the behavior ESP32 for different experimental paradigms (e.g., number/location of reward zones) and to capture behavior data using the included Processing sketch.

- Plug the 12 V DC wall adapter into the 2.1 mm barrel jack connector on the behavior ESP32 OMwSmall PCB to provide the power for the reward solenoid valve.

- Plug the single-board computer's HDMI #2 output into the projector HDMI port; this will carry the VR environment rendered by the single-board computer GPU to the projection screen.

- (optional) Connect the synchronization wire (pin 26) to a neural imaging or electrophysiological recording setup. A 3.3 V transistor-transistor-logic (TTL) signal will be sent every 5 s to align the systems with near-millisecond precision.
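
One way to use the periodic synchronization pulses offline is to fit a linear map between the two clocks and then convert behavioral event times into imaging time. The sketch below assumes that the pulse times have already been extracted from both recordings and matched one-to-one (no dropped pulses); the function names and the example numbers are hypothetical, and this is not the analysis code distributed with the system.

```python
def fit_clock_map(pulses_a, pulses_b):
    """Least-squares line (slope, offset) mapping clock A pulse times onto clock B."""
    if len(pulses_a) != len(pulses_b) or len(pulses_a) < 2:
        raise ValueError("need at least two matched pulses on each clock")
    n = len(pulses_a)
    mean_a = sum(pulses_a) / n
    mean_b = sum(pulses_b) / n
    sxx = sum((a - mean_a) ** 2 for a in pulses_a)
    sxy = sum((a - mean_a) * (b - mean_b) for a, b in zip(pulses_a, pulses_b))
    slope = sxy / sxx
    offset = mean_b - slope * mean_a
    return slope, offset


def to_clock_b(t_a, slope, offset):
    """Convert a time measured on clock A into clock B time."""
    return slope * t_a + offset


if __name__ == "__main__":
    behavior_pulses = [0.0, 5.0, 10.0, 15.0]       # seconds, behavior clock (made-up values)
    imaging_pulses = [2.0, 7.0005, 12.001, 17.0015]  # seconds, imaging clock (made-up values)
    slope, offset = fit_clock_map(behavior_pulses, imaging_pulses)
    print(to_clock_b(7.5, slope, offset))  # a behavior-clock event expressed in imaging time
```

A slope slightly different from 1 captures clock drift between the two systems, and the offset captures the difference in start times.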

- Set up the software: Load the firmware/software onto the rotary encoder ESP32 (Figure 2B) and behavior ESP32 (Figure 2E) using the IDE, and download the VR Python software onto the single-board computer. See https://github.com/GergelyTuri/HallPassVR/software.

- Plug the rotary encoder ESP32 into the single-board computer's USB port first; this will automatically be named "/dev/ttyUSB0" by the OS.

- Load the rotary encoder code: Open the file RotaryEncoder_Esp32_VR.ino in the IDE, and then select the ESP32 under Tools > Boards > ESP32 Dev Module. Select the ESP32 port by clicking Tools > Port > /dev/ttyUSB0, and then click on Upload.

- Plug the behavior ESP32 into the single-board computer's USB port next; this will be named "/dev/ttyUSB1" by the OS.

- Load the behavior sequence code onto the behavior ESP32: Open the file wheel_VR_behavior.ino in the IDE (ESP32 Dev Module already selected), select the port by clicking Tools > Port > /dev/ttyUSB1, and then click on Upload.

- Test the serial connections by selecting the serial port for each ESP32 in the IDE (Tools > Port > /dev/ttyUSB0, or /dev/ttyUSB1) and then clicking on Tools > Serial Monitor (baud rate: 115,200) to observe the serial output from the rotary board (USB0) or the behavior board (USB1). Rotate the wheel to see a raw movement output from the rotary ESP32 on USB0 or formatted movement output from the behavior ESP32 on USB1.

- Download the graphical software package Python code from https://github.com/GergelyTuri/HallPassVR/tree/master/software/HallPassVR (to /home/pi/Documents). This folder contains all the files necessary for running the graphical software package if the pi3d Python3 package was installed correctly earlier (step 2.1).

3. Running and testing the graphical software package

NOTE: Run the graphical software package GUI to initiate a VR linear track environment, calibrate the distances on the VR software and behavior ESP32 code, and test the acquisition and online plotting of the mouse's running and licking behavior with the included Processing language sketch.

- Open the terminal window in the single-board computer, and navigate to the HallPassVR folder (terminal:> cd /home/pi/Documents/HallPassVR/HallPassVR_Wired)

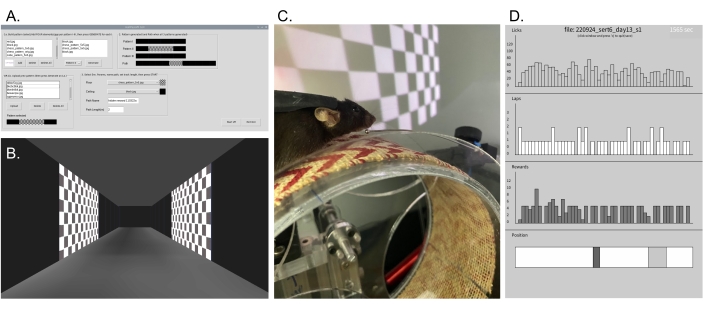

- Run the VR GUI: (terminal)> python3 HallPassVR_GUI.py (the GUI window will open, Figure 3A).

- Graphical software GUI

- Select and add four elements (images) from the listbox (or select the pre-stored pattern below, and then click on Upload) for each of the three patterns along the track, and then click on Generate.

NOTE: New image files (.jpeg) can be placed in the folder HallPassVR/HallPassVR_wired/images/ELEMENTS before the GUI is run.

- Select floor and ceiling images from the dropdown menus, and set the length of the track as 2 m for this example code (it must equal the trackLength in millimeters [mm] in the behavior ESP32 code and Processing code).

- Name this pattern if desired (it will be stored in HallPassVR_wired/images/PATH_HIST).

- Click the Start button (wait until the VR window starts before clicking elsewhere). The VR environment will appear on Screen #2 (projection screen, Figure 3B, C).

- Run the Processing sketch to acquire and plot the behavioral data/movement.

- Open VRwheel_RecGraphSerialTxt.pde in the Processing IDE.

- Change the animal = "yourMouseNumber"; variable, and set sessionMinutes equal to the length of the behavioral session in minutes.

- Click on the Run button on the Processing IDE.

- Check the Processing plot window, which should show the current mouse position on the virtual linear track as the wheel rotates, along with the reward zones and running histograms of the licks, laps, and rewards updated every 30 s (Figure 3D). Advance the running wheel by hand to simulate the mouse running for testing, or use a test mouse for the initial setup.

- Click on the plot window, and press the q key on the keyboard to stop acquiring behavioral data. A text file of the behavioral events and times (usually <2 MB in size per session) and an image of the final plot window (.png) are saved when sessionMinutes has elapsed or the user presses the q key to quit.

NOTE: Due to the small size of the output .txt files, it is estimated that at least several thousand behavior recordings can be stored on the single-board computer's SD card. Data files can be saved to a thumb drive for subsequent analysis, or if connected to a local network, the data can be managed remotely.

- Calibrate the behavior track length with the VR track length.

- Advance the wheel by hand while observing the VR corridor and mouse position (on the Processing plot). If the VR corridor ends before/after the mouse reaches the end of the behavior plot, increase/decrease the VR track length incrementally (HallPassVR_wired.py, corridor_length_default, in centimeters [cm]) until the track resets simultaneously in the two systems.

NOTE: The code is currently calibrated for a 6 inch diameter running wheel using a 256-position quadrature rotary encoder, so the user may have to alter the VR (HallPassVR_wired.py, corridor_length_default, in centimeters [cm]) and behavior code (wheel_VR_behavior.ino, trackLength, in millimeters [mm]) to account for other configurations. The behavioral position is, however, reset on each VR lap to maintain correspondence between the systems.
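
When adapting the system to a different wheel or encoder, the expected scaling can be estimated before calibrating by hand. The sketch below works through the arithmetic for the default configuration described in the note above. It assumes that distance is measured at the outer surface of the wheel and that "256-position" means 256 counts per revolution (a firmware using 4x quadrature decoding would count 1,024); the function name and returned keys are hypothetical and do not correspond to variables in the distributed code.

```python
import math

INCH_MM = 25.4  # millimeters per inch


def wheel_calibration(diameter_in=6.0, counts_per_rev=256, track_length_mm=2000.0):
    """Estimate distance per encoder count and wheel turns per virtual lap."""
    circumference_mm = math.pi * diameter_in * INCH_MM
    mm_per_count = circumference_mm / counts_per_rev
    return {
        "circumference_mm": circumference_mm,
        "mm_per_count": mm_per_count,
        "revs_per_lap": track_length_mm / circumference_mm,
        "counts_per_lap": track_length_mm / mm_per_count,
    }


if __name__ == "__main__":
    for key, value in wheel_calibration().items():
        print(f"{key}: {value:.3f}")
```

For the default 6 in wheel, the circumference is roughly 479 mm, so a 2 m virtual track corresponds to a little over four wheel revolutions per lap. These estimates are only a starting point; the manual calibration described above remains the reference.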

4. Mouse training and spatial learning behavior

NOTE: The mice are implanted for head fixation, habituated to head restraint, and then trained to run on the wheel and lick consistently for liquid rewards progressively ("random foraging"). Mice that achieve consistent running and licking are then trained on a spatial hidden reward task using the VR environment, in which a single reward zone is presented following a visual cue on the virtual linear track. Spatial learning is then measured as increased licking selectivity for positions immediately prior to the reward zone.

- Head post implantation surgery: This procedure is described in detail elsewhere in this journal and in others, so refer to this literature for specific instructions7,17,18,19,20,21.

- Water schedule

- Perform water restriction 24 hours prior to first handling (see below), and allow ad libitum water consumption following each session of habituation or head-restrained behavior. Decrease the time of water availability gradually over three days during habituation to around 5 minutes, and adjust the amount for individual mice such that their body weight does not fall below 80% of their pre-restriction weight. Monitor the weight of each animal daily and also observe the condition of each mouse for signs of dehydration22. Mice that are not able to maintain 80% of their pre-restriction body weight or appear dehydrated should be removed from the study and given free water availability.

NOTE: Water restriction is necessary to motivate the mice to run on the wheel using liquid rewards, as well as to use spatial licking as an indication of learned locations along the track. Institutional guidelines may differ on specific instructions for this procedure, so the user must consult their individual institutional animal care committees to assure animal health and welfare during water restriction.
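
The 80% body weight criterion described above is simple to check programmatically when daily weights are logged. The following is a minimal sketch with a hypothetical helper name and made-up example weights; it implements only the threshold arithmetic and does not replace daily observation of each animal or institutional guidelines.

```python
def weight_status(baseline_g, current_g, min_fraction=0.80):
    """Compare the current weight with the pre-restriction baseline weight."""
    if baseline_g <= 0:
        raise ValueError("baseline weight must be positive")
    fraction = current_g / baseline_g
    return {"fraction": fraction, "below_threshold": fraction < min_fraction}


if __name__ == "__main__":
    # Example values are illustrative only.
    print(weight_status(25.0, 21.0))  # 84% of baseline
    print(weight_status(25.0, 19.5))  # 78% of baseline, flagged
```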

- Handling: Handle the implanted mice daily to habituate them to human contact, following which limited ad libitum water may be administered as a reinforcement (1-5 min/day, 2 days to 1 week).

- Habituation to the head restraint

- Habituate the mice to the head restraint for increasing amounts of time by placing them in the head restraint apparatus while rewarding them with occasional drops of water to reduce the stress of head fixation.

- Start with 5 min of head fixation, and increase the duration by 5 min increments daily until the mice are able to tolerate fixation for up to 30 min. Remove the mice from the fixation apparatus if they appear to be struggling or moving very little. However, mice generally begin running on the wheel spontaneously within several sessions, which means they are ready for the next stage of training.

NOTE: Mice that repeatedly struggle under head restraint or do not run and lick for rewards should be regressed to earlier stages of training and removed from the study if they fail to progress for three such remedial cycles (see Table 1).

- Run/lick training (random foraging)

NOTE: To perform the spatial learning task in the VR environment, the mice must first learn to run on the wheel and lick consistently for occasional rewards. The progression in the operant behavioral parameters is controlled via the behavior ESP32 microcontroller.

- Random foraging with non-operant rewards

- Run the graphical software GUI program with a path of arbitrary visual elements (user choice, see step 3.3).

- Upload the behavior program to the behavior ESP32 with multiple non-operant rewards (code variables: isOperant=0, numRew=4, isRandRew=1) to condition the mice to run and lick. Run the mice in 20-30 min sessions until the mice run for at least 20 laps per session and lick for rewards presented in random locations (one to four sessions).

- Random foraging with operant rewards on alternate laps

- Upload the behavior program with altOpt=1 (alternating operant/non-operant laps), and train the mice until they lick for both non-operant and operant reward zones (one to four sessions).

- Fully operant random foraging

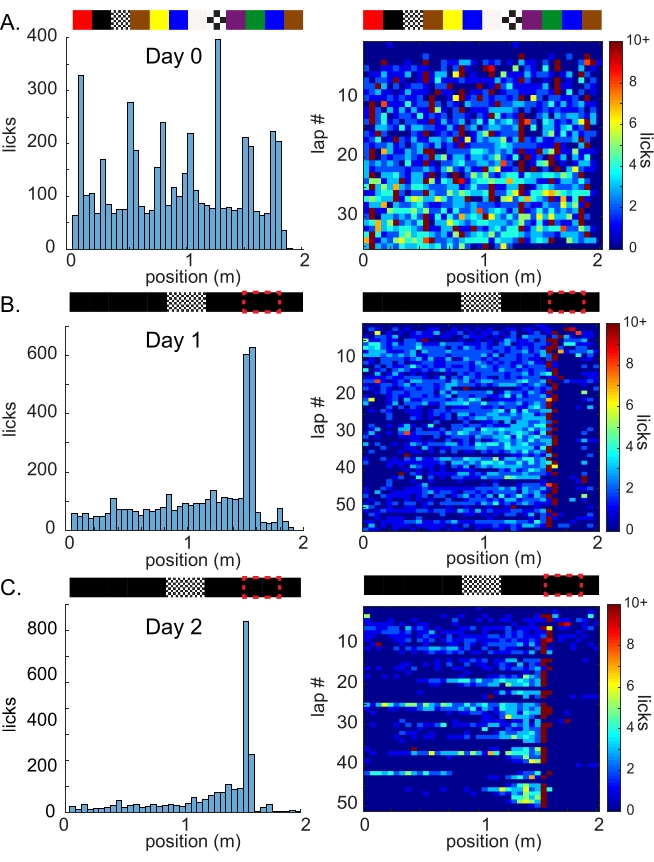

- Upload the behavior program with four operant random reward zones (behavior ESP32 code variables: isOperant=1, numRew=4, isRandRew=1). By the end of this training step, the mice should be running consistently and performing test licks over the entire track length (one to four sessions; Figure 4A).

- Spatial learning

NOTE: Perform a spatial learning experiment with a single hidden reward zone some distance away from a single visual cue by selecting a 2 m long hallway with dark panels along the track and a single high-contrast visual stimulus panel in the middle as a visual cue (0.9-1.1 m position), analogous to recent experiments with spatial olfactory cues20. Mice are required to lick at a reward zone (at a 1.5-1.8 m position) located a distance away from the visual cue in the virtual linear track environment.

- Run the graphical software program with a path of a dark hallway with a single visual cue in the center (e.g., chessboard, see step 3.3, Figure 3A).

- Upload the behavior program with a single hidden reward zone to the behavior ESP32 (behavior ESP32 code variables: isOperant=1, isRandRew=0, numRew=1, rewPosArr[]= {1500}).

- Gently place the mouse in the head-fixation apparatus, adjust the lick spout to a location just anterior to the mouse's mouth, and position the mouse wheel into the center of the projection screen zone. Ensure that the head of the mouse is ~12-15 cm away from the screen after the final adjustments.

- Set the animal's name in the Processing sketch, and then press run in the Processing IDE to start acquiring and plotting the behavioral data (see step 3.4).

- Run the mouse for 30 min sessions with a single hidden reward zone and single visual cue VR hallway.

- Offline: download the .txt data file from the Processing sketch folder and analyze the spatial licking behavior (e.g., in Matlab with the included files procVRbehav.m and vrLickByLap.m).

NOTE: The mice should initially perform test licks over the entire virtual track ("random foraging") and then begin to lick selectively only near the reward location following the VR visual cue (Figure 4).
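
As an illustration of how spatially selective licking might be quantified, the sketch below computes the fraction of licks that fall within or just before the reward zone. This is one simple, assumed definition of selectivity and is not necessarily the metric computed by the included procVRbehav.m and vrLickByLap.m files. The 1.5-1.8 m reward zone comes from the task description above, whereas the 300 mm anticipatory window and the function name are hypothetical choices.

```python
def lick_selectivity(lick_positions_mm, zone_start_mm=1500.0, zone_end_mm=1800.0,
                     anticipatory_mm=300.0):
    """Fraction of licks in [zone_start - anticipatory, zone_end); None if no licks."""
    licks = list(lick_positions_mm)
    if not licks:
        return None
    window_start = zone_start_mm - anticipatory_mm
    near_reward = sum(1 for p in licks if window_start <= p < zone_end_mm)
    return near_reward / len(licks)


if __name__ == "__main__":
    uniform = range(0, 2000, 100)            # test licks spread evenly over a 2 m track
    learned = [300, 1400, 1450, 1520, 1600]  # licks concentrated near the reward zone
    print(lick_selectivity(uniform))
    print(lick_selectivity(learned))
```

With these default values, the counted window covers 600 mm of the 2,000 mm track, so licking spread evenly along the track gives a value of about 0.3, and values approaching 1 indicate licking concentrated near the reward location.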

Representative Results

This open-source virtual reality behavioral setup allowed us to quantify licking behavior as a read-out of spatial learning as head-restrained mice navigated a virtual linear track environment. Seven C57BL/6 mice of both sexes at 4 months of age were placed on a restricted water schedule and first trained to lick continuously at low levels while running on the wheel for random spatial rewards ("random foraging") without VR. Although their performance was initially affected when they were moved to the VR projection screen setup with a 2 m random hallway pattern, it returned to previous levels within several VR sessions (Figure 4A). The mice that developed the random foraging strategy with VR (six of the seven mice, 86%; one mouse failed to run consistently and was excluded) were then required to lick at an uncued operant reward zone located 0.5 m past a single visual location cue in the middle of an otherwise featureless 2 m virtual track in order to receive water rewards ("hidden reward task"). According to the current pilot data with this system, four of the seven (57%) mice were able to learn the hidden reward task with a single visual cue in two to four sessions, as shown by licking near the reward zone with increasing selectivity (Table 1, Figure 4B,C), which is similar to our previous results with a non-VR treadmill17. This rapid learning is important in the study of spatial learning, as it allows for the monitoring and/or manipulation of neural activity during critical periods of learning without extensive training. Furthermore, the mice exhibited substantial within-session as well as between-session learning (Figure 4C), providing an opportunity to observe both the short-term and long-term neural circuit adaptations that accompany spatial learning. We did not test the learning rate of an equivalent non-VR task, but many classical real-world hippocampus-dependent spatial tasks, such as the Morris water maze, require even more extensive training and present dramatically fewer behavioral trials and, thus, are less suitable for the monitoring of learning behavior along with neural activity changes.

While a majority of mice in this pilot group (57%) were able to learn the hidden reward task in a small number of sessions, additional mice may exhibit spatial learning over longer timescales, and individualized training should increase this fraction of mice. Indeed, variations in learning rates may be useful for dissociating the specific relationships between neural activity in brain areas such as the hippocampus and behavioral learning. However, we observed that a small percentage of mice did not learn to run on the wheel or lick for either non-operant or operant rewards (one of the seven mice, 14%) and, thus, could not be used for subsequent experiments. Additional handling and habituation and a reduction in the general state of stress of the animal through further reinforcement, such as by using desirable food treats, may be useful for helping these animals adopt active running and licking during head-restrained behavior on the wheel.

By manipulating the presence and position of the cue and reward zones on intermittent laps on the virtual track, an experimenter may further discern the dependence of spatially selective licking on specific channels of information in VR to determine, for example, how mice rely on local or distant cues or self-motion information to establish their location in an environment. The licking selectivity of mice that have learned the hidden reward location should be affected by the shift or omission of the visual cue along the track if they actively utilize this spatial cue as a landmark, as we have shown in a recent work using spatial olfactory cues20. However, even with the simple example we have presented here, the highly selective licking achieved by the mice (Figure 4C, right) indicates that they encode the VR visual environment to inform their decisions about where they are and, therefore, when to lick, as the reward zone is only evident in relation to visual cues in the VR environment. This VR system also allows the presentation of other modalities of spatial and contextual cues in addition to the visual VR environment, such as olfactory, tactile, and auditory cues, which can be used to test the selectivity of neural activity and behavior for complex combinations of distinct sensory cues. Additionally, although we did not test for the dependence of task performance on hippocampal activity, a recent study using a similar task but with tactile cues showed a perturbation of spatial learning with hippocampal inactivation23, which should be confirmed for the VR hidden reward task performed in this study.

Figure 1: Head-restrained VR hardware setup: Projection screen, running wheel, and head-fixation apparatus. (A) A 3D design schematic of the running wheel and projection screen. (B) Completed VR behavioral setup. The VR environment is rendered on (1) a single-board computer and projected onto a parabolic (2) rear-projection screen (based on Chris Harvey's lab's design15,16). (3) Wheel assembly. (4) Head post holder. (5) Water reservoir for reward delivery. (C) Top view of the projection screen and behavioral setup. (1) LED projector. (2) Mirror for rear-projecting the VR corridor onto the curved screen. (3) Running wheel. (D) Rear view of the wheel assembly. Wheel rotations are translated by the (1) rotary encoder and transmitted to the single-board computer via an (2) ESP32 microcontroller. (3) A dual-axis goniometer is used to fine-tune the head position for optical imaging. (E) Setup at the level of mouse insertion, showing the (1) head-fixation apparatus and (2) lick port placement over the (3) running wheel surface. (F) Photograph of the (1) lick port attached to the (2) flex arm for precise placement of the reward spout near the mouth of the mouse. Rewards are given via a (3) solenoid valve controlled by the behavior ESP32 (via the OpenMaze OMwSmall PCB). Also visible is the rotary encoder coupled to the (4) wheel axle and (5) the goniometer for head angle adjustment.

Figure 2: VR electronics setup schematic. This schematic depicts the most relevant connections between the electronic components in the open-source virtual reality system for mice. (A) Mice are head-restrained on a custom 3D-printed head-fixation apparatus above an acrylic running wheel. (B) The rotation of the wheel axle when the mouse is running is detected by a high-resolution rotary encoder connected to a microcontroller (Rotary decoder ESP32). (C) Movement information is conveyed via a serial connection to a single-board computer running the HallPassVR GUI software and 3D environment, which updates the position in the VR virtual linear track environment based on the mouse's locomotion. (D) The rendered VR environment is sent to the projector/screen via the HDMI #2 video output of the single-board computer (VR video HDMI). (E) Movement information from the rotary encoder ESP32 is also sent to another microcontroller (Behavior ESP32 with the OpenMaze OMwSmall PCB), which uses the mouse's position to control spatial, non-VR behavioral events (such as reward zones or spatial olfactory, tactile, or auditory stimuli) in concert with the VR environment and measures the mouse's licking of the reward spout via capacitive touch sensing.
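The position update described in (C) can be sketched as follows. This is a minimal illustration under stated assumptions, not the HallPassVR implementation: it assumes that the 256-step quadrature encoder is decoded at four counts per step (1,024 counts per revolution), that the wheel has the 6" diameter listed in the Materials table, that the 2 m track wraps at the end of each lap, and that backward running cannot move the animal before the track start. All function and constant names are hypothetical.

```python
import math

WHEEL_DIAMETER_M = 6 * 0.0254   # 6 in acrylic running wheel (Materials table)
COUNTS_PER_REV = 256 * 4        # ASSUMPTION: x4 decoding of the 256-step encoder
TRACK_LENGTH_M = 2.0            # virtual linear track length used in this study


def counts_to_distance(counts):
    """Convert signed encoder counts to distance on the wheel surface (m)."""
    circumference = math.pi * WHEEL_DIAMETER_M
    return counts / COUNTS_PER_REV * circumference


def update_position(position_m, delta_counts):
    """Advance the track position by one encoder reading.

    Returns (new_position_m, laps_completed_by_this_step).
    """
    total = position_m + counts_to_distance(delta_counts)
    # ASSUMPTION: clamp at the track start instead of wrapping backward.
    total = max(0.0, total)
    laps, new_position = divmod(total, TRACK_LENGTH_M)
    return new_position, int(laps)
```

Under these assumptions, one full wheel revolution advances the animal by roughly 0.48 m, so a 2 m lap corresponds to a little over four revolutions.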

Figure 3: Graphical software GUI and behavior. (A) HallPassVR GUI: Four images are selected to tile over each spatial pattern covering one-third of the track length (or the previously saved combination pattern is loaded) for three patterns in each path equal to the track length. Ceiling and floor images are selected, and then Start is pressed to initialize the VR environment on the single-board computer's HDMI output (projection screen). (B) Example virtual corridor created with the GUI parameters shown in A and used for a hidden reward experiment to test spatial learning. (C) Photograph of a head-restrained mouse running on the wheel in the virtual environment shown in B. (D) The top panel shows the online plot of animal behavior in a VR environment from the included Processing sketch to record and plot the behavioral data. Licks, laps, and rewards are plotted per 30 s time bins for the 30 min session during hidden reward spatial learning. The bottom panel shows the current mouse position (black) and the location of any reward zones (gray) during behavior.

Figure 4: Spatial learning using the graphical software environment. Representative spatial licking data from one animal (A) during random foraging with random cues along the virtual linear track and (B–C) 2 days of training with a static hidden reward zone at 1.5 m with a single visual cue in the middle of the track. (A) Day 0 random foraging for four reward zones per lap, selected randomly from eight positions spaced evenly along the 2 m virtual linear track. (Left) The average number of licks per spatial bin (5 cm) over the 30 min session (top: VR hallway with random visual stimulus panels). (Right) Number of licks in each 5 cm spatial bin per lap during this session, represented by a heatmap. (B) Day 1, the first day of training with a single reward zone at 1.5 m (red box on the track diagram, top) using a virtual track containing a single high-contrast stimulus at position 0.8-1.2 m. (Left) Average spatial lick counts over the session, showing increasing licks when the animal approaches the reward zone. (Right) Spatial licks per lap, showing increased selectivity of licking in the pre-reward region. (C) Day 2, from the same hidden reward task and virtual hallway as Day 1 and from the same mouse. (Left) Total licks per spatial bin, showing a decrease in licks outside of the pre-reward zone. (Right) Spatial licks per lap on Day 2, showing increased licking prior to the reward zone and decreased licking elsewhere, indicating the development of spatially specific anticipatory licking. This shows that the animal has learned the (uncued) hidden reward location and developed a strategy to minimize effort (licking) in regions where it does not expect a reward to be present.
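The spatial binning behind the panels in Figure 4 can be illustrated with a short sketch. It is not the MATLAB analysis code distributed with the project; it only assumes that each lick has been logged with a track position (in meters) and a lap index, and it uses the 5 cm bins and 2 m track described in the legend. The function names and the input format are hypothetical.

```python
TRACK_LENGTH_M = 2.0   # virtual track length (Figure 4)
BIN_SIZE_M = 0.05      # 5 cm spatial bins (Figure 4)


def licks_per_lap(lick_positions_m, lick_laps, n_laps):
    """Count licks in each spatial bin for each lap (rows: laps, columns: bins)."""
    n_bins = round(TRACK_LENGTH_M / BIN_SIZE_M)
    counts = [[0] * n_bins for _ in range(n_laps)]
    for pos, lap in zip(lick_positions_m, lick_laps):
        # Skip licks logged outside the track or outside the requested laps.
        if 0 <= lap < n_laps and 0.0 <= pos <= TRACK_LENGTH_M:
            # A lick exactly at the track end is assigned to the last bin.
            bin_index = min(int(pos / BIN_SIZE_M), n_bins - 1)
            counts[lap][bin_index] += 1
    return counts


def mean_licks_per_bin(counts):
    """Average the per-lap counts over laps (the left-hand panels)."""
    n_laps = len(counts)
    return [sum(column) / n_laps for column in zip(*counts)]
```

The per-lap matrix corresponds to the heatmaps (right panels), and its average over laps corresponds to the session-averaged lick profiles (left panels).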

| Behavioral outcome | Number of mice | Percentage of mice |
| --- | --- | --- |
| Mouse did not run/lick | 1 | 14% |
| Random foraging only | 2 | 29% |
| Learned hidden reward | 4 | 57% |
| Total (N) | 7 | 100% |

Table 1: VR spatial learning behavioral pilot results. Seven C57BL/6 mice of both sexes at 4 months of age were progressively trained to perform a spatial hidden reward task in VR. Of these mice, one did not run/lick after initial training (one of the seven mice, 14%), while the remaining six learned to run on the wheel and lick for random spatial rewards in the random foraging step of training (six of the seven mice, 86%). Four of these six mice subsequently learned to lick selectively in anticipation of the non-cued reward in the hidden reward task (four of the seven mice, 57% of all mice; four of the six mice, 67% of random foraging mice), while two did not (two of the seven mice, 29%).

Discussion

This open-source VR system for mice will only function if the serial connections are made properly between the rotary and behavior ESP32 microcontrollers and the single-board computer (step 2), which can be confirmed using the IDE serial monitor (step 2.4.5). For successful behavioral results from this protocol (step 4), the mice must be habituated to the apparatus and be comfortable running on the wheel for liquid rewards (steps 4.3-4.5). This requires sufficient (but not excessive) water restriction, as mice given ad libitum water in the home cage will not run and lick for rewards (i.e., to indicate their perceived location), and dehydrated mice may be lethargic and not run on the wheel. It is also worth noting that there are alternative methods for motivating mouse behavior without water restriction24; however, we did not test these methods here. For the training procedure, animals that do not run initially may be given ad hoc (i.e., non-spatial) water rewards by the experimenter via an attached optional button press, or the wheel may be moved gently to encourage locomotion. To develop random foraging behavior, mice that run but do not lick should be run with non-operant rewards (behavior ESP32 code: isOperant = 0; step 4.5.1) until they run and lick for rewards. They can then be run with alternating laps of non-operant and operant reward zones (altOpt = 1; step 4.5.2) until they start to lick on operant laps, before moving to fully operant random reward zones (step 4.5.3).
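The three training stages can be summarized as a small decision rule. The sketch below is written in Python for illustration and is not the behavior ESP32 code. It assumes that a non-operant reward is delivered on entry into a reward zone regardless of licking, that an operant reward requires a lick inside the zone, and that, when laps alternate, the odd-numbered laps are the operant ones. The function names are hypothetical; only the flag names mirror the isOperant and altOpt variables mentioned above.

```python
def lap_is_operant(lap_index, is_operant, alt_opt):
    """Decide whether rewards on this lap require licking."""
    if alt_opt:
        # ASSUMPTION: with alternation enabled, odd-numbered laps are operant.
        return lap_index % 2 == 1
    return bool(is_operant)


def reward_due(in_zone, licked_in_zone, lap_index, is_operant=0, alt_opt=0):
    """Return True if a water reward should be delivered at this moment."""
    if not in_zone:
        return False
    if lap_is_operant(lap_index, is_operant, alt_opt):
        # Operant lap: the mouse must lick inside the reward zone.
        return bool(licked_in_zone)
    # Non-operant lap: entering the zone is sufficient.
    return True
```

In this scheme, the first stage corresponds to `is_operant=0, alt_opt=0`, the second to `alt_opt=1`, and the final stage to `is_operant=1, alt_opt=0`.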

While we have provided complete instructions and example results for a basic set of experiments aimed at eliciting one form of spatial learning (conditioned licking at a hidden reward location in the virtual linear track environment), the same basic hardware and software setup can also be modified for the delivery of more complex visuospatial environments using the pi3d Python package for Raspberry Pi. For example, this system can incorporate more complex mazes such as corridors with variable lengths, multiple patterns and 3D objects, and naturalistic 3D VR landscapes. Furthermore, the behavioral software for the delivery of water rewards and other non-visual stimuli can be modified for other training paradigms by altering key variables (presented at the beginning of the behavior ESP32 code) or by inserting new types of spatial events into the same code. We are happy to advise users regarding methods for implementing other types of behavioral experiments with this VR setup or in troubleshooting.

Immersive VR environments have proven a versatile tool for studying the underlying neural mechanisms of spatial navigation6,7,8, reward-learning behaviors9, and visual perception25 both in clinical and animal studies. The main advantage of this approach is that the experimenter has tight control over contextual elements such as visual cues and specific spatial stimuli (e.g., rewards and olfactory, auditory, or tactile stimuli), which is not practical in real-world environments with freely moving animals. It should be noted, however, that differences may exist in the manner in which VR environments are encoded by brain areas such as the hippocampus when compared with the use of real-world environments26,27. With this caveat, the use of VR environments allows experimenters to perform a large number of behavioral trials with carefully controlled stimuli, allowing the dissociation of the contributions of distinct sensory elements to spatial navigation.

The complexity of building custom VR setups often requires an extensive background in engineering and computer programming, which may increase the time of setup and limit the number of apparatuses that can be constructed to train mice for experimentation. VR setups are also available from commercial vendors; however, these solutions can be expensive and limited if the user wants to implement new features or expand the training/recording capacity to more than one setup. The estimated price range of the open-source VR setup presented here is <$1,000 (USD); however, a simplified version for training (e.g., lacking goniometers for head angle adjustment) can be produced for <$500 (USD), thus allowing the construction of multiple setups for training mice on a larger scale. The modular arrangement of components also allows the integration of VR with other systems for behavioral control, such as the treadmill system with spatial olfactory and tactile stimuli we have used previously20, and, thus, VR and other stimulus modalities are not mutually exclusive.

This open-source VR system with its associated hardware (running wheel, projection screen, and head-fixation apparatus), electronics setup (single-board computer and ESP32 microcontrollers), and software (VR GUI and behavior code) provides an inexpensive, compact, and easy-to-use setup for delivering parameterized immersive VR environments to mice during head-restrained spatial navigation. This behavior may then be synchronized with neural imaging or electrophysiological recording to examine neural activity during spatial learning (step 2.3.7). The spectrum of experimental techniques compatible with VR is wide, ranging from spatial learning behavior alone to combination with fiber photometry, miniscope imaging, single-photon and multi-photon imaging, and electrophysiological techniques (e.g., Neuropixels or intracellular recording). While head restraint is necessary for some of these recording techniques, the extremely precise nature of stimulus presentation and the stereotyped nature of the behavior may also be useful for other techniques not requiring head fixation, such as miniscope imaging and fiber photometry. It should be noted, however, that our capacitive sensor-based solution for detecting licks may introduce significant noise on electrophysiological traces. To avoid such artifacts, optical or other (e.g., mechanical) sensor-based solutions should be implemented for lick detection.

Future improvements to the VR system will be uploaded to the project GitHub page (https://github.com/GergelyTuri/HallPassVR), so users should check this page regularly for updates. For example, we are in the process of replacing the hardwired serial connections between the microcontrollers and the single-board computer with Bluetooth functionality, which is native to the ESP32 microcontrollers already used in this design. In addition, we are planning to upgrade the HallPassVR GUI to allow the specification of different paths in each behavioral session to contain different positions for key landmark visual stimuli on different laps. This will allow greater flexibility for dissociating the impact of specific visual and contextual features on the neural encoding of space during spatial learning.

Disclosures

The authors have nothing to disclose.

Acknowledgements

We would like to thank Noah Pettit from the Harvey lab for the discussion and suggestions while developing the protocol in this manuscript. This work was supported by a BBRF Young Investigator Award and NIMH 1R21MH122965 (G.F.T.), in addition to NINDS R56NS128177 (R.H., C.L.) and NIMH R01MH068542 (R.H.).

Materials

| Name | Company | Catalog Number | Comments |
| --- | --- | --- | --- |
| 1/4" diam aluminum rod | McMaster-Carr | 9062K26 | 3" in length for wheel axle |
| 1/4"-20 cap screws, 3/4" long (x2) | Amazon.com | B09ZNMR41V | for affixing head post holders to optical posts |
| 2"x7" T-slotted aluminum bar (x2) | 8020.net | 1020 | wheel/animal mounting frame |
| 6" diam, 3" wide acrylic cylinder (1/8" thick) | Canal Plastics | 33210090702 | Running wheel (custom width cut at canalplastics.com) |
| 8-32 x 1/2" socket head screws | McMaster-Carr | 92196A194 | fastening head post holder to optical post |
| Adjustable arm (14") | Amazon.com | B087BZGKSL | to hold/adjust lick spout |
| Analysis code (MATLAB) | custom written | | file at github.com/GergelyTuri/HallPassVR/software/Analysis code |
| Axle mounting flange, 1/4" ID | Pololu | 1993 | for mounting wheel to axle |
| Ball bearing (5/8" OD, 1/4" ID, x2) | McMaster-Carr | 57155K324 | for mounting wheel axle to frame |
| Behavior ESP32 code | custom written | | file at github.com/GergelyTuri/HallPassVR/software/Arduino code/Behavior board |
| Black opaque matte acrylic sheets (1/4" thick) | Canal Plastics | 32918353422 | laser cut file at github.com/GergelyTuri/HallPassVR/hardware/VR screen assembly |
| Clear acrylic sheet (1/4" thick) | Canal Plastics | 32920770574 | laser cut file at github.com/GergelyTuri/HallPassVR/hardware/VR wheel assembly |
| ESP32 devKitC v4 (x2) | Amazon.com | B086YS4Z3F | microcontroller for behavior and rotary encoder |
| ESP32 shield | OpenMaze.org | OMwSmall | description at www.openmaze.org (https://claylacefield.wixsite.com/openmazehome/copy-of-om2shield). ZIP gerber files at: https://github.com/claylacefield/OpenMaze/tree/master/OM_PCBs |
| Fasteners and brackets | 8020.net | 4138, 3382, 3280 | for wheel frame mounts |
| Goniometers | Edmund Optics | 66-526, 66-527 | optional for behavior; fine-tuning head position for imaging |
| HallPassVR Python code | custom written | | file at github.com/GergelyTuri/HallPassVR/software/HallPassVR |
| Head post holder | custom design | | 3D design file at github.com/GergelyTuri/HallPassVR/hardware/VR head mount/Headpost Clamp |
| LED projector | Texas Instruments | DLPDLCR230NPEVM | or other small LED projector |
| Lick spout | VWR | 20068-638 | (or ~16 G metal hypodermic tubing) |
| M 2.5 x 6 set screws | McMaster-Carr | 92015A097 | securing head post |
| Matte white diffusion paper | Amazon.com | | screen material |
| Metal headposts | custom design | | 3D design file at github.com/GergelyTuri/HallPassVR/hardware/VR head mount/head post designs |
| Miscellaneous tubing and tubing adapters (1/16" ID) | | | for constructing the water line |
| Optical breadboard | Thorlabs | | as per user's requirements |
| Optical posts, 1/2" diam (2x) | Thorlabs | TR4 | for head fixation setup |
| Processing code | custom written | | file at github.com/GergelyTuri/HallPassVR/software/Processing code |
| Raspberry Pi 4B | raspberry.com, adafruit.com | | Single-board computer for rendering of HallPassVR environment |
| Right angle clamp | Thorlabs | RA90 | for head fixation setup |
| Rotary encoder (quadrature, 256 step) | DigiKey | ENS1J-B28-L00256L | to measure wheel rotation |
| Rotary encoder ESP32 code | custom written | | file at github.com/GergelyTuri/HallPassVR/software/Arduino code/Rotary encoder |
| SCIGRIP 10315 acrylic cement | Amazon.com | | |
| Shaft coupler | McMaster-Carr | 9861T426 | to couple rotary encoder shaft with axle |
| Silver mirror acrylic sheets | Canal Plastics | 32913817934 | laser cut file at github.com/GergelyTuri/HallPassVR/hardware/VR screen assembly |
| Solenoid valve | Parker | 003-0137-900 | to administer water rewards |

References

- Lisman, J., et al. Viewpoints: How the hippocampus contributes to memory, navigation and cognition. Nature Neuroscience. 20 (11), 1434-1447 (2017).

- Buzsaki, G., Moser, E. I. Memory, navigation and theta rhythm in the hippocampal-entorhinal system. Nature Neuroscience. 16 (2), 130-138 (2013).

- O’Keefe, J., Dostrovsky, J. The hippocampus as a spatial map. Preliminary evidence from unit activity in the freely-moving rat. Brain Research. 34 (1), 171-175 (1971).

- O’Keefe, J. Place units in the hippocampus of the freely moving rat. Experimental Neurology. 51 (1), 78-109 (1976).

- Fyhn, M., Molden, S., Witter, M. P., Moser, E. I., Moser, M. B. Spatial representation in the entorhinal cortex. Science. 305 (5688), 1258-1264 (2004).

- Letzkus, J. J., et al. A disinhibitory microcircuit for associative fear learning in the auditory cortex. Nature. 480 (7377), 331-335 (2011).

- Lacefield, C. O., Pnevmatikakis, E. A., Paninski, L., Bruno, R. M. Reinforcement learning recruits somata and apical dendrites across layers of primary sensory cortex. Cell Reports. 26 (8), 2000-2008 (2019).

- Dombeck, D. A., Harvey, C. D., Tian, L., Looger, L. L., Tank, D. W. Functional imaging of hippocampal place cells at cellular resolution during virtual navigation. Nature Neuroscience. 13 (11), 1433-1440 (2010).

- Gauthier, J. L., Tank, D. W. A dedicated population for reward coding in the hippocampus. Neuron. 99 (1), 179-193 (2018).

- Rickgauer, J. P., Deisseroth, K., Tank, D. W. Simultaneous cellular-resolution optical perturbation and imaging of place cell firing fields. Nature Neuroscience. 17 (12), 1816-1824 (2014).

- Yadav, N., et al. Prefrontal feature representations drive memory recall. Nature. 608 (7921), 153-160 (2022).

- Priestley, J. B., Bowler, J. C., Rolotti, S. V., Fusi, S., Losonczy, A. Signatures of rapid plasticity in hippocampal CA1 representations during novel experiences. Neuron. 110 (12), 1978-1992 (2022).

- Heys, J. G., Rangarajan, K. V., Dombeck, D. A. The functional micro-organization of grid cells revealed by cellular-resolution imaging. Neuron. 84 (5), 1079-1090 (2014).

- Harvey, C. D., Collman, F., Dombeck, D. A., Tank, D. W. Intracellular dynamics of hippocampal place cells during virtual navigation. Nature. 461 (7266), 941-946 (2009).

- Harvey Lab Mouse VR. Available from: https://github.com/HarveyLab/mouseVR (2021).

- Pettit, N. L., Yap, E. L., Greenberg, M. E., Harvey, C. D. Fos ensembles encode and shape stable spatial maps in the hippocampus. Nature. 609 (7926), 327-334 (2022).

- Turi, G. F., et al. Vasoactive intestinal polypeptide-expressing interneurons in the hippocampus support goal-oriented spatial learning. Neuron. 101 (6), 1150-1165 (2019).

- Ulivi, A. F., et al. Longitudinal two-photon imaging of dorsal hippocampal CA1 in live mice. Journal of Visualized Experiments. (148), e59598 (2019).

- Wang, Y., Zhu, D., Liu, B., Piatkevich, K. D. Craniotomy procedure for visualizing neuronal activities in hippocampus of behaving mice. Journal of Visualized Experiments. (173), e62266 (2021).

- Tuncdemir, S. N., et al. Parallel processing of sensory cue and spatial information in the dentate gyrus. Cell Reports. 38 (3), 110257 (2022).

- Dombeck, D. A., Khabbaz, A. N., Collman, F., Adelman, T. L., Tank, D. W. Imaging large-scale neural activity with cellular resolution in awake, mobile mice. Neuron. 56 (1), 43-57 (2007).

- Guo, Z. V., et al. Procedures for behavioral experiments in head-fixed mice. PLoS One. 9 (2), e88678 (2014).

- Jordan, J. T., Gonçalves, J. T. Silencing of hippocampal synaptic transmission impairs spatial reward search on a head-fixed tactile treadmill task. bioRxiv. (2021).

- Urai, A. E., et al. Citric acid water as an alternative to water restriction for high-yield mouse behavior. eNeuro. 8 (1), (2021).

- Saleem, A. B., Diamanti, E. M., Fournier, J., Harris, K. D., Carandini, M. Coherent encoding of subjective spatial position in visual cortex and hippocampus. Nature. 562 (7725), 124-127 (2018).

- Ravassard, P., et al. Multisensory control of hippocampal spatiotemporal selectivity. Science. 340 (6138), 1342-1346 (2013).

- Aghajan, Z. M., et al. Impaired spatial selectivity and intact phase precession in two-dimensional virtual reality. Nature Neuroscience. 18 (1), 121-128 (2015).