High Throughput Analysis of Liquid Droplet Impacts

Summary

This protocol enables efficient collection of experimental high-speed images of liquid drop impacts, and speedy analysis of those data in batches. To streamline these processes, the method describes how to calibrate and set up apparatus, generate an appropriate data structure, and deploy an image analysis script.

Abstract

Experimental studies of liquid drop impacts on surfaces are often restricted in their scope due to the large range of possible experimental parameters such as material properties, impact conditions, and experimental configurations. Compounding this, drop impacts are often studied using data-rich high-speed photography, so that it is difficult to analyze many experiments in a detailed and timely manner. The purpose of this method is to enable efficient study of droplet impacts with high-speed photography by using a systematic approach. Equipment is aligned and calibrated to produce videos that can be accurately processed by a custom image processing code. Moreover, the file structure setup and workflow described here ensure efficiency and clear organization of data processing, which is carried out while the researcher is still in the lab. The image processing method extracts the digitized outline of the impacting droplet in each frame of the video, and processed data are stored for further analysis as required. The protocol assumes that a droplet is released vertically under gravity, and impact is recorded by a camera viewing from side-on with the drop illuminated using shadowgraphy. Many similar experiments involving image analysis of high-speed events could be addressed with minor adjustment to the protocol and equipment used.

Introduction

Liquid drop impacts on surfaces are of great interest both for understanding of fundamental phenomena1 and for industrial processes2. Drop impacts have been studied for over 100 years3, but many aspects are yet to be fully investigated. High-speed photography is almost universally used for studies of drop impacts4 because it provides rich, accessible data which enables analytical measurements to be made with good time resolution. The outcomes of a drop impact on a solid surface5,6,7 range from simple deposition through to splashing8. Impacts on superhydrophobic surfaces are often studied as they can generate particularly interesting outcomes, including drop bouncing9,10,11,12. The protocol described here was developed to study water drop impacts on polymer surfaces with microscale patterning, and in particular the influence of the pattern on drop impact outcomes13,14.

The outcome of a drop impact experiment may be affected by a large range of possible variables. The size and velocity of the drop may vary, along with fluid properties such as density, surface tension, and viscosity. The drop may be either Newtonian15 or non-Newtonian16. A large variety of impact surfaces has been studied, including liquid7,17, solid18, and elastic19 surfaces. Various possible experimental configurations were described previously by Rein et al.17. The droplet can take different shapes. It can be oscillating, rotating, or impact at an angle to the surface. The surface texture, and environmental factors such as temperature may vary. All these parameters make the field of droplet impacts extremely wide-ranging.

Due to this large range of variables, studies of dynamic liquid wetting phenomena are often limited to focus on relatively specific or narrow topics. Many such investigations use a moderate number of experiments (e.g., 50−200 data points) obtained from manually processed high-speed videos10,20,21,22. The breadth of such studies is limited by the amount of data that may be obtained by the researcher in a reasonable amount of time. Manual processing of videos requires the user to perform repetitive tasks, such as measuring the diameter of impacting droplets, often achieved with the use of image analysis software (Fiji23 and Tracker24 are popular choices). The most widely-used measurement for characterizing drop impacts is the diameter of a spreading drop25,26,27,28.

Due to improvements in image processing, automatic computer-aided methods are starting to improve data collection efficiency. For example, image analysis algorithms for automatic measurement of contact angle29 and surface tension using the pendant drop method30 are now available. Much greater efficiency gains can be made for high-speed photography of drop impacts, which produces movies consisting of many individual images for analysis, and indeed some recent studies have started to use automated analysis15,18, although the experimental workflow has not clearly changed. Other improvements in the experimental design for drop impact experiments have arisen from advancements in commercially available LED light sources, which can be coupled with high-speed cameras via the shadowgraph technique31,32,33,34.

This article describes a standardized method for capture and analysis of drop impact movies. The primary aim is to enable efficient collection of large data sets, which should be generally useful for the wide variety of drop impact studies described above. Using this method, the time-resolved, digitized outline of an impacting drop may be obtained for ~100 experiments a day. The analysis automatically calculates the droplet impact parameters (size, velocity, Weber and Reynolds numbers) and the maximum spreading diameter. The protocol is directly applicable for any basic droplet parameters (including liquid, size, and impact velocity), substrate material, or environmental conditions. Studies that scan a large range of experimental parameters can be conducted in a relatively short timeframe. The method also encourages high resolution studies, covering a small range of variables, with multiple repeat experiments.

The benefits of this method are provided by the standardized experiment, and a clear data structure and workflow. The experimental setup produces images with consistent properties (spatial and contrast) that can be passed to a custom image analysis code (included as a Supplementary Coding File that runs on MATLAB) for prompt processing of recorded videos immediately following the experiment. Integration of data processing and acquisition is a primary reason for the improved overall speed of data collection. After a session of data acquisition, each video has been processed and all relevant raw data is stored for further analysis without requiring reprocessing of the video. Moreover, the user can visually inspect the quality of each experiment immediately after it is carried out and repeat the experiment if necessary. An initial calibration step ensures that the experimental setup can be reproduced between different lab sessions with good precision.

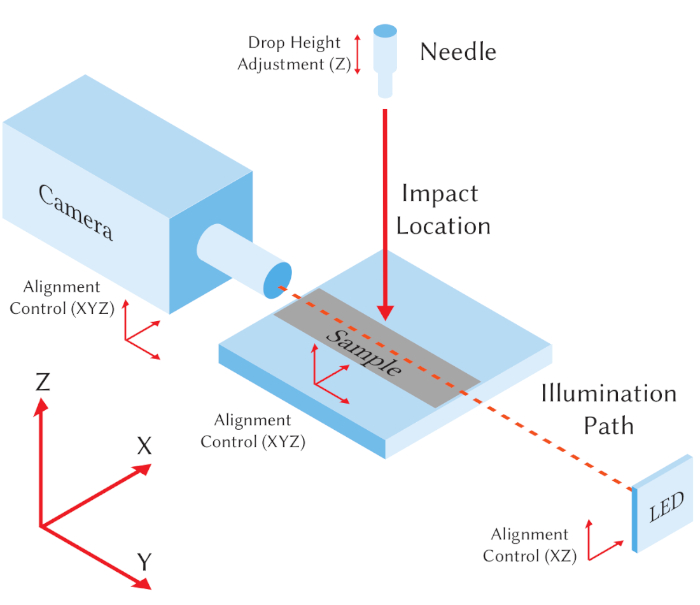

To implement this method, it is assumed that the user has access to a high-speed camera arranged so that it images the surface from a horizontal (side-on) point of view. A schematic representation of this arrangement is shown in Figure 1, including definition of Cartesian axes. The system should have the ability to precisely position both the camera and sample in three dimensions (X, Y and Z). The droplet is illuminated by shadowgraphy, with the light source placed along the optical path of the camera. The system should use a high-quality direct current (DC) LED illumination system (including a collimating condenser lens) that can be moved in the X and Z directions to align the optical path with the camera. It is also assumed that the user has access to a syringe pump that they can program to produce individual droplets of desired volume when connected to a particular needle35. The droplet falls under gravity so that its impact velocity is controlled by the position of the needle above the surface. Although this setup is quite generic, the Table of Materials lists the specific equipment used to obtain the representative results, and notes some potential restrictions imposed by the choice of equipment.

Figure 1: Schematic representation of the minimal experimental setup. A high-speed camera is positioned to image droplets impacting vertically on a sample from side-on. An LED light source is aligned with the camera's line of sight for shadowgraphy. A needle is used for individual droplet production, and Cartesian axes are defined.

The method description is focused on the measurement of the edges of liquid droplets as they fall and impact. Images are obtained from the commonly used side-on viewpoint. It is possible to investigate spreading droplets from both side-on and bottom-up views using two high speed cameras13,14, but the bottom-up view is not possible for opaque materials, and a top-down view produces alignment complications. The basic workflow could be used to improve research for any small (2−3 mm diameter) objects that impact surfaces, and it could be used for larger or smaller objects with further minor changes. Improvements and alternatives to the experimental setup and method are considered further in the discussion section.

Protocol

1. Setting up the high-speed camera

- Set the fixed field of view (FOV) for the camera and calculate the conversion factor from pixel to mm.

- Place an alignment marker (e.g., a 4 mm side length marker with the image analysis code provided) on the center position of the sample stage so that it is facing the camera. Adjust the magnification of the camera so that the square marker fits within the FOV. Ensure that the marker is in focus and capture an image.

NOTE: The image analysis code requires that an imaged droplet covers more than 1% of the total FOV, otherwise it is classified as noise. Likewise, the droplet should not take up more than 40% of the FOV, otherwise it is identified as a failed image processing event.

- Lock the magnification of the lens and ensure that this remains unchanged during a batch of experiments.

- Load the graphical user interface (GUI) for the droplet impact analysis software by clicking on the icon within MATLAB.

- Run the image analysis code. On the GUI, click the calibrate camera button and select the image obtained in step 1.1.1. Enter the size of the calibration square in mm and click OK.

- Move the rectangle shown on screen until the calibration square is the only object within it. Click OK and the software will automatically calculate the conversion factor. If the automatic calibration fails, follow the software guide to perform manual calibration.

- Align the experimental system.

- Prepare the liquid being used for dispensing of individual droplets.

- Position the needle mount at around the user's eye level to enable ease of loading.

- Manually purge the tubing to remove any fluid by pushing air through with a syringe. Ensure that the tubing is not twisted and that the needle is secure and clean. Fix the needle and tubing so that the needle is vertical.

NOTE: If required, clean the steel needle with ethanol in an ultrasonic bath.

- Fill the syringe with the fluid being investigated (e.g., water) and attach it to the computer-controlled syringe pump. Purge the needle using the syringe pump (click and hold the dispense button) until no bubbles are present in the fluid.

- Set the syringe pump so that it will dispense the volume required for release of an individual droplet.

NOTE: For the representative results, the average droplet diameter was 2.6 mm using a dispense rate of 0.5 mL/min and a dispensed volume of 11 µL. The pumping rate should be slow enough that droplets form and release under gravity, and this can be fine-tuned through trial and error. The volume of the droplet can be approximated as14

$$V_{drop} = \frac{\pi D \gamma_{LG}}{\rho g}$$

where V_drop is the droplet volume, D is the needle diameter, γLG is the liquid-gas surface tension, ρ is the fluid density, and g is the acceleration due to gravity.
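As a rough planning aid, the volume approximation in the note above can be evaluated numerically. The sketch below is illustrative Python, separate from the supplied MATLAB code, and assumes the standard Tate's law form, volume = πDγLG/(ρg). The function names, the needle outer diameter of 0.57 mm, and the water properties are assumptions chosen for illustration, not values taken from the protocol.

```python
import math

def tate_drop_volume(needle_diameter_m, surface_tension_n_per_m,
                     density_kg_per_m3, g=9.81):
    """Approximate volume (m^3) of a drop released from a needle (Tate's law)."""
    return (math.pi * needle_diameter_m * surface_tension_n_per_m
            / (density_kg_per_m3 * g))

def sphere_diameter(volume_m3):
    """Diameter (m) of a sphere with the given volume."""
    return (6.0 * volume_m3 / math.pi) ** (1.0 / 3.0)

# Assumed inputs: water near room temperature, 0.57 mm needle outer diameter.
volume = tate_drop_volume(0.57e-3, 0.072, 998.0)
print(f"volume ~ {volume * 1e9:.1f} uL, "
      f"diameter ~ {sphere_diameter(volume) * 1e3:.2f} mm")
```

Tate's law usually overestimates the released volume because part of the liquid stays attached to the needle, so the result is best treated as a starting value for the trial-and-error tuning described above.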

- Align the sample (e.g., flat polydimethylsiloxane [PDMS]) by placing it under the needle and dispensing a single droplet using the syringe pump. Check that the droplet lands and spreads on the area of the sample that is of interest, and if not alter the sample position as required.

NOTE: If the droplet alignment is proving difficult, check that the needle is mounted vertically in the needle holder and is not bent. The sample is now aligned relative to the X and Y axes and should not be moved during experiments.

- Align and focus the camera.

- Dispense a single droplet onto the sample. Adjust the vertical position (Z) of the sample holder until the surface is level with the center of the FOV of the camera.

- Adjust the horizontal position (X) of the camera so that the droplet on the sample is aligned in the center of the FOV. Adjust the vertical (Z) and horizontal (X) positions of the LED to match the position of the camera, so that the center of the light appears in the center of the FOV. Adjust the distance (Y) of the camera from the droplet so that the droplet comes into focus.

NOTE: The system is now aligned and calibrated. If the positioning of all equipment is unchanged, the protocol can be paused and restarted without realignment. Sample alignment in the vertical direction (Z) must be repeated for samples of varying thickness.
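The calibration in section 1 reduces to a single conversion factor, and the FOV limits noted there reduce to a simple area-fraction test. The following is a minimal Python sketch of both ideas, independent of the supplied MATLAB code; the function names and the return labels are hypothetical, and only the 1% and 40% limits come from the protocol.

```python
def mm_per_pixel(square_side_mm, square_side_px):
    """Conversion factor from the known side length of the calibration square."""
    if square_side_px <= 0:
        raise ValueError("square_side_px must be positive")
    return square_side_mm / square_side_px

def classify_blob(blob_area_px, fov_width_px, fov_height_px,
                  min_fraction=0.01, max_fraction=0.40):
    """Classify a detected object by the fraction of the FOV that it covers."""
    fraction = blob_area_px / (fov_width_px * fov_height_px)
    if fraction <= min_fraction:
        return "noise"    # too small to be treated as a droplet
    if fraction > max_fraction:
        return "failed"   # too large: flagged as a failed processing event
    return "droplet"

# Example: a 4 mm square imaged across 400 pixels (assumed numbers).
scale = mm_per_pixel(4.0, 400)
print(f"{scale:.4f} mm/pixel", classify_blob(50_000, 1000, 1000))
```

A quick check of this kind before a batch can show whether the chosen magnification leaves the expected droplet size inside the accepted range.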

- Set the recording conditions for the camera.

- Set the frame rate of the camera to an optimal value for the object being recorded.

NOTE: The optimal frame rate of the camera, f (fps), can be predicted using31

$$f = \frac{N V}{j}$$

where N is the sampling rate (number of images captured as the object covers the length scale, normally 10), V is the velocity of the droplet, and j is the imaging length scale (e.g., the FOV).

- Set the exposure time of the camera to a value as small as possible while retaining enough illumination. At this stage, adjust the lens aperture to the smallest available setting while retaining enough illumination.

NOTE: An estimate for the minimum exposure time (te) is given by31

$$t_e = \frac{k}{\mathrm{PMAG} \cdot V}$$

where k is the length scale (e.g., the size of a pixel), PMAG is the primary magnification, and V is the velocity of the droplet.

- Set the trigger for the camera. Use an end mode trigger so that the camera buffers the recording, then stops on the trigger (e.g., a user mouse-click).

NOTE: An automatic trigger system can be used to automate this process.
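The recording conditions can be estimated before the session. The sketch below is illustrative Python, not part of the supplied MATLAB code, and assumes the relations take the forms f = NV/j for the frame rate and te = k/(PMAG·V) for the exposure time, using the symbols defined in the notes above. The example values (2 m/s impact velocity, 10 mm length scale, 20 µm pixels, unit magnification) are assumptions for illustration.

```python
def frame_rate_fps(n_samples, velocity_m_per_s, length_scale_m):
    """Frame rate needed to capture n_samples images while the droplet
    travels one length scale (e.g., the FOV)."""
    return n_samples * velocity_m_per_s / length_scale_m

def exposure_time_s(pixel_size_m, primary_magnification, velocity_m_per_s):
    """Time for the droplet image to move by one length scale k on the
    sensor (e.g., one pixel)."""
    return pixel_size_m / (primary_magnification * velocity_m_per_s)

# Assumed example values, for illustration only.
fps = frame_rate_fps(n_samples=10, velocity_m_per_s=2.0, length_scale_m=10e-3)
t_e = exposure_time_s(pixel_size_m=20e-6, primary_magnification=1.0,
                      velocity_m_per_s=2.0)
print(f"frame rate ~ {fps:.0f} fps, exposure ~ {t_e * 1e6:.0f} us")
```

Choosing a smaller length scale j, such as the droplet diameter rather than the full FOV, raises the estimated frame rate in proportion.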

2. Conducting experiments

- Prepare the computer file system for a batch of experiments.

- Create a folder to store movies for the current batch of experiments. Set this folder as the save location for the camera software following the camera manufacturer's guide. Make sure the file format for captured images is .tif.

- Click the Set Path button in the image analysis GUI and choose the same folder as in step 2.1.1, which tells the software to monitor this folder for new videos.

- Create the folder structure for a batch of experiments.

- Click the Make Folders button on the image analysis GUI and enter four values as prompted: 1) the minimum droplet release height, 2) the maximum release height, 3) the height step between each experiment, and 4) the number of repeat experiments at each height.

NOTE: Impact velocity can be approximated as $V = \sqrt{2gh}$, where g is the acceleration due to gravity and h is the drop release height.

- Click OK to run the Make Folders script.

NOTE: A range of folders has now been created in the directory for this experiment. These folders are named "height_xx" where xx is the height of the droplet release. In each of these folders, empty folders are ready to store data for each repeat experiment. Repeat section 2.1 for each new surface or fluid to be studied.
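The folder layout described in the note above, together with the free-fall estimate of impact velocity, can be sketched as follows. This is illustrative Python rather than the supplied Make Folders script: the "height_xx" naming follows the note, while the "repeat_n" subfolder names, the function names, and the example heights are assumptions.

```python
import math
import tempfile
from pathlib import Path

def release_heights(h_min, h_max, h_step):
    """Release heights from h_min to h_max (inclusive) in steps of h_step."""
    count = int(math.floor((h_max - h_min) / h_step + 1e-9)) + 1
    return [h_min + i * h_step for i in range(count)]

def make_folders(root, h_min, h_max, h_step, n_repeats):
    """Create root/height_<h>/repeat_<n> for every height and repeat."""
    created = []
    for h in release_heights(h_min, h_max, h_step):
        for repeat in range(1, n_repeats + 1):
            folder = Path(root) / f"height_{h:g}" / f"repeat_{repeat}"
            folder.mkdir(parents=True, exist_ok=True)
            created.append(folder)
    return created

def impact_velocity(height_m, g=9.81):
    """Free-fall estimate V = sqrt(2 g h), neglecting air drag."""
    return math.sqrt(2.0 * g * height_m)

# Demonstration in a temporary directory so nothing is left on disk.
with tempfile.TemporaryDirectory() as scratch:
    folders = make_folders(scratch, 10, 30, 10, 3)
    print(len(folders), "folders, e.g.", folders[0].relative_to(scratch))
print(f"h = 0.20 m gives V ~ {impact_velocity(0.20):.2f} m/s")
```

Creating the whole tree up front means each recorded video has exactly one destination, which is what allows the later sorting and processing steps to run without manual file handling.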

- Prepare the surface as required for the experiment. For impact on a dry, solid surface, clean the surface with a suitable standard protocol and allow it to completely dry.

- Record a droplet impact event.

- Place the sample on the sample stage. If required, rotate the surface to align it with the camera. Move the needle to the desired droplet release height.

- Make sure the view from the camera is unobstructed, then capture and save an image (to be used later during image processing) using the camera software. Begin the video recording so that the camera is recording and buffering (i.e., filling the internal memory of the camera).

- Dispense a single droplet onto the sample using the syringe pump (step 1.2.1.4). Trigger the recording to stop once the impact event is complete. Remove the surface from the sample holder and dry it, as appropriate.

- Prepare the video file for further analysis.

- Crop the video.

- Using suitable software (e.g., the high-speed camera software), scan through the video to find the first frame in which the droplet is completely within the FOV. Crop the start of the video to this frame.

- Move forward by the number of frames required to capture the phenomena of interest during the impact experiment (e.g., 250 frames are usually sufficient for impacts captured at 10,000 fps). Crop the end of the video to this frame.

- Save the video as an .avi file, setting the save path to the corresponding folder for the current experimental batch, release height, and repeat number.

- In the image analysis GUI, click the Sort Files button. This finds the latest saved .avi file and .tif file and moves them to the same folder, assuming they were taken at the same time. Visually confirm that the background image taken in step 2.3.2 is now displayed on screen.

- Click the Run Tracing button to begin image processing. The video will be displayed with the resulting image processing overlaid. Qualitatively check that the image processing is functioning correctly by watching the video.

NOTE: On completion of the image processing, the image processing code will display an image of the droplet at maximum spread. Failure to properly calibrate the camera can lead to incorrect image processing. If needed, repeat the calibration until image processing is successful.

- Repeat sections 2.3 and 2.4, adjusting the height of the needle as required to conduct all experiments in this batch.

NOTE: Each experimental folder will now contain a series of .mat files. These files contain the data extracted by the image processing software and saved for future analysis, including the drop outline, area, bounding box, and perimeter for each frame.
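To illustrate the kind of per-frame quantities listed in the note above, the sketch below computes the area and bounding box of a dark object in a shadowgraph frame. It is a simplified Python illustration that uses plain background subtraction and a fixed threshold; it is not the algorithm of the supplied MATLAB code, and the function names, the threshold value, and the synthetic frame are assumptions.

```python
def shadow_mask(frame, background, threshold=50):
    """Mark pixels darker than the background by more than `threshold`."""
    return [
        [1 if bg - px > threshold else 0 for px, bg in zip(frame_row, bg_row)]
        for frame_row, bg_row in zip(frame, background)
    ]

def blob_properties(mask):
    """Area and bounding box (pixels) of all foreground pixels, or None.

    All foreground pixels are treated as one object; no connected-component
    labelling is attempted in this sketch.
    """
    points = [(r, c) for r, row in enumerate(mask)
              for c, value in enumerate(row) if value]
    if not points:
        return None
    rows = [r for r, _ in points]
    cols = [c for _, c in points]
    return {
        "area_px": len(points),
        "bbox": (min(cols), min(rows), max(cols), max(rows)),  # left, top, right, bottom
        "width_px": max(cols) - min(cols) + 1,
        "height_px": max(rows) - min(rows) + 1,
    }

# Synthetic 6 x 6 frame: bright background with a dark 3 x 2 pixel object.
background = [[200] * 6 for _ in range(6)]
frame = [row[:] for row in background]
for r in (2, 3):
    for c in (1, 2, 3):
        frame[r][c] = 20
props = blob_properties(shadow_mask(frame, background))
print(props)
```

Multiplying the pixel quantities by the calibration factor from section 1 (once for lengths, squared for areas) converts them to physical units.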

3. Analysis of raw data

- In the image analysis GUI, click the Process Data button to begin calculation of the main variables from the raw processed data. If this is run after the experimental session, the user will be prompted to select the folder containing the batch of experiments to process.

- Enter the four values as prompted: 1) frame rate of recording (fps), 2) fluid density (kg/m³), 3) fluid surface tension (N/m), and 4) fluid viscosity (Pa·s).

NOTE: The software defaults to a frame rate of 9,300 fps and the fluid properties of water in ambient conditions. The values entered are used to calculate the Weber and Reynolds numbers.

- Save the data in the videofolders.mat file and export as a .csv file.

NOTE: For each experiment, the code loads the file prop_data.mat, calculates the position of the droplet center, and finds both the impact frame (defined as the last frame before the droplet center decelerates) and the frame in which the droplet's horizontal spread is maximized. The saved output data are the impact velocity (from a first-order polynomial fit to the vertical position of the droplet center as a function of time), the equivalent diameter of the droplet (calculated by assuming rotational symmetry about the Z axis to find the droplet volume, then finding the diameter of a sphere with that volume36), the droplet diameter at maximum spread, and the impact Weber and Reynolds numbers.
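The calculations described in the note above can be sketched in a few lines. The Python below is an independent illustration, not the supplied MATLAB code: it fits a straight line to center height against time, builds the droplet volume from a stack of one-pixel-thick discs, and assumes the standard definitions We = ρV²D/γ and Re = ρVD/μ. Function names and example values are hypothetical.

```python
import math

def linear_fit_slope(t, z):
    """Least-squares slope of z against t (first-order polynomial fit)."""
    n = len(t)
    t_mean = sum(t) / n
    z_mean = sum(z) / n
    num = sum((ti - t_mean) * (zi - z_mean) for ti, zi in zip(t, z))
    den = sum((ti - t_mean) ** 2 for ti in t)
    return num / den

def equivalent_diameter(row_widths_m, pixel_size_m):
    """Diameter of the sphere with the same volume as a stack of discs.

    Each image row is treated as a disc of diameter equal to the row width
    and thickness equal to one pixel (rotational symmetry about Z).
    """
    volume = sum(math.pi * (w / 2.0) ** 2 * pixel_size_m for w in row_widths_m)
    return (6.0 * volume / math.pi) ** (1.0 / 3.0)

def weber_number(density, velocity, diameter, surface_tension):
    return density * velocity ** 2 * diameter / surface_tension

def reynolds_number(density, velocity, diameter, viscosity):
    return density * velocity * diameter / viscosity

# Assumed example: center height sampled at 10,000 fps while falling at 2 m/s.
times = [i / 10_000 for i in range(20)]
heights = [5e-3 - 2.0 * t for t in times]
speed = abs(linear_fit_slope(times, heights))
print(f"V ~ {speed:.2f} m/s, "
      f"We ~ {weber_number(1000.0, speed, 2.6e-3, 0.072):.0f}, "
      f"Re ~ {reynolds_number(1000.0, speed, 2.6e-3, 1.0e-3):.0f}")
```

Fitting only the frames before the impact frame keeps the deceleration at the surface out of the velocity estimate.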

Representative Results

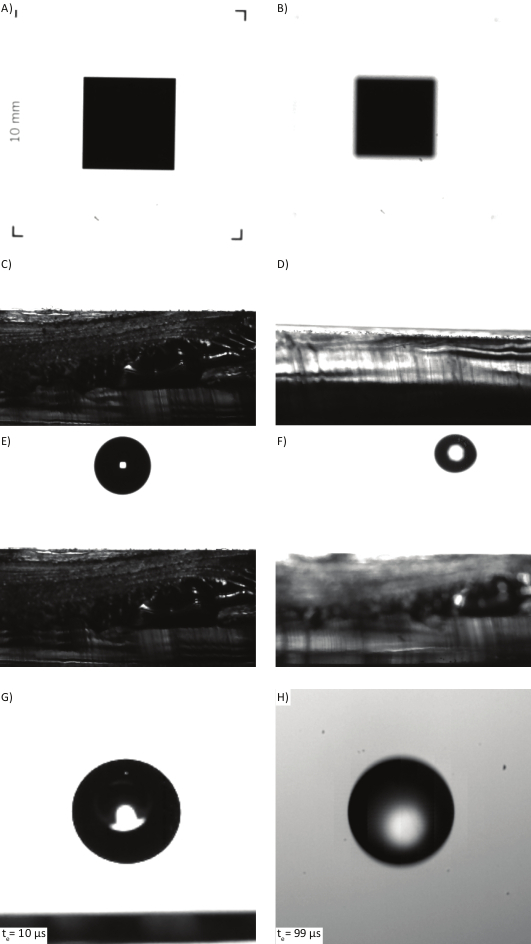

The conversion of distances measured from images in pixels to millimeters is achieved with the use of a known reference square. This square must be unobstructed in the camera's FOV, and in focus (Figure 2A). Incorrect focus of the reference square (Figure 2B) will produce a systematic error in the calculated variables, e.g., velocity. To reduce the error in calculating the conversion factor the reference square should cover as much of the FOV as possible. The side length of the square should be known to as high a precision as possible, given the resolution limit of the camera.
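A short worked example shows why a larger reference square helps. The Python sketch below assumes that the edges of the square can be located to within about one pixel in total (an assumed figure, not a measured one), so the relative uncertainty of the conversion factor is roughly that uncertainty divided by the side length in pixels. Because lengths and velocities both scale with the conversion factor, the same relative error carries into the velocity, twice that into the Reynolds number (∝ VD), and three times that into the Weber number (∝ V²D).

```python
def calibration_relative_error(square_side_px, edge_uncertainty_px=1.0):
    """Relative uncertainty of the pixel-to-mm conversion factor."""
    return edge_uncertainty_px / square_side_px

def propagated_errors(square_side_px, edge_uncertainty_px=1.0):
    """First-order relative errors caused by the calibration alone."""
    rel = calibration_relative_error(square_side_px, edge_uncertainty_px)
    return {
        "length": rel,
        "velocity": rel,
        "reynolds": 2.0 * rel,  # Re scales with V * D
        "weber": 3.0 * rel,     # We scales with V**2 * D
    }

# Hypothetical square sizes in pixels.
for side_px in (100, 400, 800):
    errors = propagated_errors(side_px)
    print(side_px, {name: f"{100 * value:.2f}%" for name, value in errors.items()})
```

These figures cover only the scale error from calibration; focus, timing, and fluid property uncertainties would add to them.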

The droplet identification software relies on the surface of the sample being presented horizontally to the camera, as shown in Figure 2C. Surfaces that are bent or poorly resolved (Figure 2D) will produce image processing errors. The software can be used to analyze droplets impacting flat surfaces that are not horizontal, as long as the surface edge produces a sharp contrast against the background.

To ensure that the entire droplet spread is tracked by the software the droplet should land in the center of the sample (Figure 2E). If the system is incorrectly aligned, then the droplet can drift from the center position, and will be out of focus (Figure 2F). If the droplet is out of focus the calculated size will be incorrect. This effect is often caused by poor alignment of the system used for moving the needle vertically away from the surface, which will produce a drift in the impact location as a function of height. It is suggested that the user implements an optical breadboard system (or similar) to ensure parallel and perpendicular alignment.

To ensure that the imaged edges of the impacting droplet appear sharp, use the shortest exposure time possible with the available light source (Figure 2G). Incorrect alignment of the illumination path relative to the camera often forces compensating adjustments to other settings, such as the camera aperture and exposure time, which produces a fuzzy edge on the traveling droplet (Figure 2H).

Figure 2: Common issues with incorrect calibration of the system. (A) Calibration square correctly aligned and focused. (B) Calibration square out of focus, producing incorrect calibration factor. (C) Sample surface is horizontal and provides a high contrast between sample surface and background. (D) Sample is at an angle to the camera, producing a reflective surface. (E) Droplet lands in the center of the sample in the plane of focus. (F) Droplet lands off center and is not in focus due to the wide aperture used. (G) A droplet is imaged with sharp edges due to a short exposure time (10 µs). (H) Sub-optimal lighting and a longer exposure time (99 µs) produce motion blur.

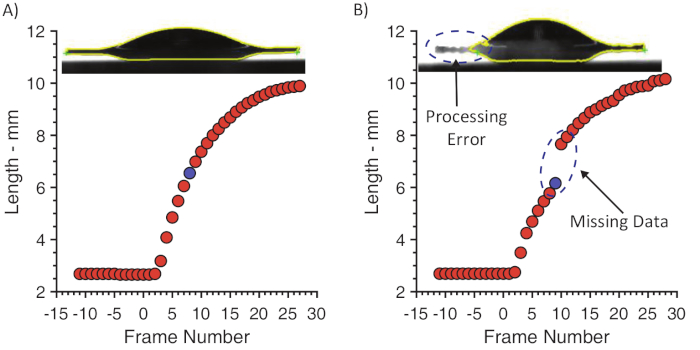

Incorrect illumination and alignment of the sample can produce glare and shadows in the recorded images. These often produce artefacts in the image processing stages, which can reduce the number of good-quality data points gathered. Glare is common for transparent fluids if the illumination path is not aligned horizontally. The software should be able to trace the entire outline of the droplet in the video images (Figure 3A). If the trace is not completed, the measured values such as the length of the spreading droplet will be incorrect (Figure 3B).

Figure 3: Length of an impacting droplet as a function of video frame number (impact frame = 0). Each blue data point corresponds to the inset images. (A) Correct illumination allows the software to trace the entire outline of the droplet (yellow line). Contact points (green crosses) are correctly identified, and the recorded length of the spreading droplet is a smooth function of frame number. (B) Poor illumination produces glare on the liquid and the left edge of the droplet is not traced correctly. The recorded length of the spreading droplet demonstrates inaccuracies in the data.
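Because a correctly traced droplet gives a spreading length that varies smoothly with frame number, abrupt jumps can serve as a simple warning sign of tracing failures such as the one in Figure 3B. The Python sketch below is a hypothetical quality check and is not part of the supplied software; the function name, the step limit, and the example data are assumptions.

```python
def flag_jumps(lengths, max_step):
    """Indices of frames whose length differs from the previous frame
    by more than max_step (same units as lengths)."""
    return [
        i for i in range(1, len(lengths))
        if abs(lengths[i] - lengths[i - 1]) > max_step
    ]

# Hypothetical spreading lengths (mm) with one badly traced frame.
spread_mm = [2.6, 3.1, 3.7, 4.2, 1.9, 5.0, 5.3]
suspect = flag_jumps(spread_mm, max_step=1.0)
print("frames to inspect:", suspect)
```

A suitable step limit depends on the frame rate and impact velocity, so it would need to be tuned on videos that are known to be traced correctly.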

Supplementary Coding File. Please click here to download this file.

Discussion

This method depends on control of the position and alignment of several parts of the system. A minimum requirement to use this method is the ability to align the sample, camera and illumination LED. Incorrect alignment of the light source to the camera sensor is a common issue. If the light path enters the camera at an angle, unwanted artefacts are produced and hinder image processing. The user should aim to achieve a near-perfect horizontal illumination path between the LED and the camera sensor. Precise positioning controls (e.g., micrometer stages) are helpful for this aspect of the method.

The choice of lens is dependent on the FOV required for the experiment. Although commonly available variable zoom lenses allow for the system to be adapted on the fly, they often suffer from other issues. If using variable zoom lenses, the user must make sure that the total magnification does not change during a batch of experiments (once the system is calibrated, protocol section 1). This issue can be avoided by using fixed magnification lenses. With the magnification fixed, the position of the focal plane of either type of lens can be altered by moving the camera relative to the sample.

While aligning the system it is advisable to use a blank sample of the same thickness as the samples to be investigated. This stops the samples of interest from being damaged or wet prior to experiments. If the sample thickness changes during a batch of experiments, then the system needs to be realigned in the Z direction.

Although not necessary, the addition of a computer-controlled needle positioning system can vastly increase the speed and resolution of the method. Commonly available stepper motor rail systems can be used that allow for positioning of the needle with micrometer accuracy. Digital control of the needle also allows the user to zero the height relative to the surface with greater precision. This additional step ensures that the experimental setup can be accurately restored at the start of a new lab session.

It is advised that the user learns to use the control software for the high-speed camera. Most modern systems can use an image trigger. This method uses the internal high-speed electronics of the camera to monitor an area of the FOV for changes. If calibrated carefully, this can be used to trigger the camera automatically as the droplet impacts the surface. This method reduces the time spent finding the correct frames of the video to crop after a video is recorded.
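The idea behind an image trigger can be illustrated in software. The Python sketch below monitors the mean intensity of a region of interest and reports the first frame in which it departs from the first frame by more than a threshold. It is a conceptual illustration only: real cameras implement this in hardware with vendor-specific settings, and the function names, the region, and the threshold here are assumptions.

```python
def roi_mean(frame, roi):
    """Mean intensity inside roi = (top, bottom, left, right), end-exclusive."""
    top, bottom, left, right = roi
    values = [frame[r][c] for r in range(top, bottom) for c in range(left, right)]
    return sum(values) / len(values)

def first_trigger_frame(frames, roi, threshold):
    """Index of the first frame whose ROI mean differs from that of the
    first frame by more than threshold, or None if no frame does."""
    reference = roi_mean(frames[0], roi)
    for index, frame in enumerate(frames[1:], start=1):
        if abs(roi_mean(frame, roi) - reference) > threshold:
            return index
    return None

# Synthetic 4 x 4 frames: a dark droplet enters the ROI in frame 3.
bright = [[200] * 4 for _ in range(4)]
with_drop = [row[:] for row in bright]
with_drop[0][1] = with_drop[0][2] = 20
frames = [bright, bright, bright, with_drop]
print("trigger at frame", first_trigger_frame(frames, roi=(0, 2, 0, 4), threshold=20))
```

Placing the monitored region just above the surface would make the trigger fire close to the moment of impact, which shortens the subsequent cropping step.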

This method can be expanded to use more than one camera for analysis of directionally dependent phenomena. If using multiple cameras, it is advised that the user uses hardware triggering and syncing. Most high-speed camera systems allow syncing of multiple cameras to record at the same frame rate. Using a shared hardware trigger (e.g., transistor-transistor logic [TTL] pulse), the user can record simultaneous views of the same experiment. This method could be further adapted to record the same event at two varying magnifications.

This protocol aims to enable rapid collection and processing of high-speed video data for droplets impacting surfaces. As demonstrated, it is versatile over a range of impact conditions. With relatively minor alterations to the analysis code, it could be extended to provide further data (e.g., time dependence and splashing profiles) or to study different impact geometries. Further improvements could involve automatic cropping of videos to include the key frames of interest. This step, alongside the automation of the needle height, would allow for batch videos to be collected in a fully automatic fashion, only requiring the user to change sample in between impacts.

Disclosures

The authors have nothing to disclose.

Acknowledgements

This work was supported by the Marsden Fund, administered by the Royal Society of New Zealand.

Materials

| Name | Company | Catalog number | Comments |
| --- | --- | --- | --- |
| 24 gauge blunt tip needle | Sigma Aldrich | CAD7930 | |
| 4 x 4 mm alignment square (chrome on glass) | Made in-house using lithography. | | |
| 5 mL syringe | ~ | ~ | Should be compatible with syringe pump. Luer lock connectors join the syringe to the needle. |
| Aspheric condenser lens | Thor Labs | ACL5040U | Determines beam width, which should cover the field of view. |
| Cat 5e ethernet cable | ~ | ~ | A fast data connection between the high-speed camera and PC, suitable for Photron cameras. |
| Droplet impact analysis software | ~ | ~ | Provided as Supplementary Coding File. Outline data are stored in .mat files. Calculations are output as .csv files. |
| Front surface high-power LED | Luminus | CBT-40-G-C21-JE201 LED | Separate power supply should be DC to avoid flickering. |
| High-speed camera | Photron | Photron SA5 | Typically operated at ~10,000 fps for drop impacts. |
| High-speed camera software | Photron | Photron Fastcam Viewer | Protocol assumes camera has an end trigger; that movie files can be saved in .avi format, and screenshots in .tif format, to a designated folder; and that movies can be cropped. |
| Linear translation stages | Thor Labs | DTS25/M | Used to position the LED, sample and camera. |
| Macro F-mount camera lens | Nikon | Nikkor 105mm f/2.8 Lens | Choice of lens determines field of view. |
| PC running MATLAB 2018b | MathWorks | ~ | PC processing power and RAM can affect protocol speed and hence efficiency. |
| Polydimethylsiloxane (PDMS) | Dow | SYLGARD™ 184 Silicone Elastomer | Substrates made using a 10:1 (monomer:cross-linker) ratio. |
| PTFE tubing | ~ | ~ | |
| Syringe pump | Pump Systems Inc | NE-1000 | Protocol assumes this can be set to dispense a specific volume. |

References

- Josserand, C., Thoroddsen, S. T. Drop impact on a solid surface. Annual Review of Fluid Mechanics. 48, 365-391 (2016).

- Van Dam, D. B., Le Clerc, C. Experimental study of the impact of an ink-jet printed droplet on a solid substrate. Physics of Fluids. 16, 3403-3414 (2004).

- Worthington, A. M. A study of splashes. (1908).

- Thoroddsen, S., Etoh, T., Takehara, K. High-speed imaging of drops and bubbles. Annual Review of Fluid Mechanics. 40, 257-285 (2008).

- Chandra, S., Avedisian, C. On the collision of a droplet with a solid surface. Proceedings of the Royal Society of London. Series A: Mathematical and Physical Sciences. 432 (1884), 13-41 (1991).

- Marengo, M., Antonini, C., Roisman, I. V., Tropea, C. Drop collisions with simple and complex surfaces. Current Opinion in Colloid and Interface Science. 16, 292-302 (2011).

- Yarin, A. L. Drop impact dynamics: Splashing, spreading, receding, bouncing. Annual Review of Fluid Mechanics. 38 (1), 159-192 (2006).

- Thoroddsen, S. T. The making of a splash. Journal of Fluid Mechanics. 690, 1-4 (2012).

- Bartolo, D., et al. Bouncing or sticky droplets: Impalement transitions on superhydrophobic micropatterned surfaces. Europhysics Letters. 74 (2), 299-305 (2006).

- Richard, D., Quéré, D. Bouncing water drops. Europhysics Letters. 50 (6), 769-775 (2000).

- Bird, J. C., Dhiman, R., Kwon, H. M., Varanasi, K. K. Reducing the contact time of a bouncing drop. Nature. 503, 385-388 (2013).

- Khojasteh, D., Kazerooni, M., Salarian, S., Kamali, R. Droplet impact on superhydrophobic surfaces: A review of recent developments. Journal of Industrial and Engineering Chemistry. 42, 1-14 (2016).

- Robson, S., Willmott, G. R. Asymmetries in the spread of drops impacting on hydrophobic micropillar arrays. Soft Matter. 12 (21), 4853-4865 (2016).

- Broom, M. Imaging and Analysis of Water Drop Impacts on Microstructure Designs. (2019).

- Lee, J. B., Derome, D., Guyer, R., Carmeliet, J. Modeling the maximum spreading of liquid droplets impacting wetting and nonwetting surfaces. Langmuir. 32 (5), 1299-1308 (2016).

- Laan, N., de Bruin, K. G., Bartolo, D., Josserand, C., Bonn, D. Maximum diameter of impacting liquid droplets. Physical Review Applied. 2 (4), 044018 (2014).

- Rein, M. Phenomena of liquid drop impact on solid and liquid surfaces. Fluid Dynamics Research. 12 (2), 61-93 (1993).

- Wang, M. J., Lin, F. H., Hung, Y. L., Lin, S. Y. Dynamic behaviors of droplet impact and spreading: Water on five different substrates. Langmuir. 25 (12), 6772-6780 (2009).

- Weisensee, P. B., Tian, J., Miljkovic, N., King, W. P. Water droplet impact on elastic superhydrophobic surfaces. Scientific Reports. 6, 30328 (2016).

- Xu, L., Zhang, W. W., Nagel, S. R. Drop splashing on a dry smooth surface. Physical Review Letters. 94 (18), 184505 (2005).

- Clanet, C., Béguin, C., Richard, D., Quéré, D. Maximal deformation of an impacting drop. Journal of Fluid Mechanics. 517, 199-208 (2004).

- Collings, E., Markworth, A., McCoy, J., Saunders, J. Splat-quench solidification of freely falling liquid-metal drops by impact on a planar substrate. Journal of Materials Science. 25 (8), 3677-3682 (1990).

- Schindelin, J., et al. Fiji: An open-source platform for biological-image analysis. Nature Methods. 9 (7), 676-682 (2012).

- Tracker Video Analysis and Modeling Tool for Physics Education (software). Available from: https://physlets.org/tracker (2019).

- Bennett, T., Poulikakos, D. Splat-quench solidification: Estimating the maximum spreading of a droplet impacting a solid surface. Journal of Materials Science. 28 (4), 963-970 (1993).

- Rioboo, R., Marengo, M., Tropea, C. Time evolution of liquid drop impact onto solid, dry surfaces. Experiments in Fluids. 33 (1), 112-124 (2002).

- Ukiwe, C., Kwok, D. Y. On the maximum spreading diameter of impacting droplets on well-prepared solid surfaces. Langmuir. 21 (2), 666-673 (2005).

- Wildeman, S., Visser, C. W., Sun, C., Lohse, D. On the spreading of impacting drops. Journal of Fluid Mechanics. 805, 636-655 (2016).

- Biolè, D., Bertola, V. A goniometric mask to measure contact angles from digital images of liquid drops. Colloids and Surfaces A: Physicochemical and Engineering Aspects. 467, 149-156 (2015).

- Daerr, A., Mogne, A. Pendent_Drop: An ImageJ plugin to measure the surface tension from an image of a pendent drop. Journal of Open Research Software. 4 (1), 3 (2016).

- Versluis, M. High-speed imaging in fluids. Experiments in Fluids. 54 (2), 1458 (2013).

- Rydblom, S., Thörnberg, B. Liquid water content and droplet sizing shadowgraph measuring system for wind turbine icing detection. IEEE Sensors Journal. 16 (8), 2714-2725 (2015).

- Castrejón-García, R., Castrejón-Pita, J., Martin, G., Hutchings, I. The shadowgraph imaging technique and its modern application to fluid jets and drops. Revista Mexicana de Física. 57 (3), 266-275 (2011).

- Castrejón-Pita, J. R., Castrejón-García, R., Hutchings, I. M., Klapp, J., Medina, A., Cros, A., Vargas, C. High speed shadowgraphy for the study of liquid drops. Fluid Dynamics in Physics, Engineering and Environmental Applications. 121-137 (2013).

- Tripp, G. K., Good, K. L., Motta, M. J., Kass, P. H., Murphy, C. J. The effect of needle gauge, needle type, and needle orientation on the volume of a drop. Veterinary ophthalmology. 19 (1), 38-42 (2016).

- Hugli, H., Gonzalez, J. J. Drop volume measurements by vision. Machine Vision Applications in Industrial Inspection VIII. 3966, 60-67 (2000).