A Single-Channel and Non-Invasive Wearable Brain-Computer Interface for Industry and Healthcare

Summary

This paper discusses how to build a brain-computer interface relying on consumer-grade equipment and steady-state visually evoked potentials. For this, a single-channel electroencephalograph with dry electrodes was integrated with augmented reality glasses for stimulus presentation and output data visualization. The final system was non-invasive, wearable, and portable.

Abstract

The present work focuses on how to build a wearable brain-computer interface (BCI). BCIs are a novel means of human-computer interaction that rely on direct measurements of brain signals to assist both people with disabilities and able-bodied people. Application examples include robotic control, industrial inspection, and neurorehabilitation. Notably, recent studies have shown that steady-state visually evoked potentials (SSVEPs) are particularly suited for communication and control applications, and efforts are currently being made to bring BCI technology into daily life. To achieve this aim, the final system must rely on wearable, portable, and low-cost instrumentation. Exploiting SSVEPs requires a visual stimulus flickering at fixed frequencies. Thus, in view of daily-life constraints, this study explored the possibility of providing the visual stimuli by means of smart glasses. Moreover, to detect the elicited potentials, a commercial device for electroencephalography (EEG) was considered. This consists of a single differential channel with dry electrodes (no conductive gel), thus achieving the utmost wearability and portability. In such a BCI, the user can interact with the smart glasses by merely staring at icons appearing on the display. Upon this simple principle, a user-friendly, low-cost BCI was built by integrating extended reality (XR) glasses with a commercially available EEG device. The functionality of this wearable XR-BCI was examined with an experimental campaign involving 20 subjects. The average classification accuracy ranged from 80% to 95%, depending on the stimulation time. Given these results, the system can be used as a human-machine interface for industrial inspection but also for rehabilitation in ADHD and autism.

Introduction

A brain-computer interface (BCI) is a system that allows communication with and/or control of devices without relying on the natural neural pathways1. BCI technology is the closest thing that humanity has to controlling objects with the power of the mind. From a technical point of view, the system works by measuring induced or evoked brain activity, which can be generated either involuntarily or voluntarily by the subject2. Historically, research focused on aiding people with motor disabilities through BCI3, but a growing number of companies today offer BCI-based instrumentation for gaming4, robotics5, industry6, and other applications involving human-machine interaction. Notably, BCIs may play a role in Industry 4.0, the fourth industrial revolution7, where cyber-physical production systems are changing the interaction between humans and the surrounding environment8. Broadly speaking, the European project BNCI Horizon 2020 identified application scenarios such as replacing, restoring, improving, enhancing, or supplementing lost natural functions of the central nervous system, as well as the use of BCIs in investigating the brain9.

In this framework, recent technological advances suggest that brain-computer interfaces may become usable in daily life10,11. To achieve this aim, the first requirement is non-invasiveness, which avoids the risks of surgical intervention and increases user acceptance. However, the choice of non-invasive neuroimaging affects the quality of the measured brain signals, and the BCI design must then deal with the associated pitfalls12. In addition, wearability and portability are required. These requirements are in line with the need for a user-friendly system, but they also pose some constraints. Overall, these hardware constraints can be addressed by using an electroencephalographic (EEG) system with gel-free electrodes6, which would also keep the BCI low-cost. Meanwhile, in terms of software, minimal (or ideally no) user training is desired; namely, lengthy periods of tuning the processing algorithm before the user can operate the system should be avoided. This aspect is critical in BCIs because of inter-subject and intra-subject non-stationarity13,14.

Previous literature has demonstrated that the detection of evoked brain potentials is robust with respect to non-stationarity and noise in signal acquisition. BCIs relying on the detection of evoked potentials are termed reactive, and they are the best-performing BCIs in terms of brain pattern recognition15. Nevertheless, they require sensory stimulation, which is probably the main drawback of such interfaces. The goal of the proposed method is, thus, to build a highly wearable and portable BCI relying on off-the-shelf wearable instrumentation. The sensory stimuli here consist of flickering lights, generated by smart glasses, that are capable of eliciting steady-state visually evoked potentials (SSVEPs). Previous works have already considered integrating BCI with virtual reality, either alone or in conjunction with augmented reality16. For instance, a BCI-AR system was proposed to control a quadcopter with SSVEP17. Virtual reality, augmented reality, and related paradigms are collectively referred to as extended reality. In such a scenario, smart glasses comply with the wearability and portability requirements and can be integrated with a minimal EEG acquisition setup. This paper shows that an SSVEP-based BCI requires minimal training while achieving acceptable classification performance for low- to medium-speed communication and control applications. Hence, the technique is applicable to daily-life BCIs, and it appears especially suitable for industry and healthcare.

Protocol

The study was approved by the Ethical Committee of Psychological Research of the Department of Humanities of the University of Naples Federico II. The volunteers signed informed consent before participating in the experiments.

1. Preparing the non-invasive wearable brain-computer interface

- Obtain a low-cost consumer-grade electroencephalograph with dry electrodes, and configure it for single-channel usage.

- Short-circuit any unused input channels on the low-cost electroencephalograph or connect them to an internal reference voltage, as specified in the device datasheet. In doing so, the unused channels are disabled and do not generate crosstalk noise.

- Adjust the electroencephalograph gain (typically through a variable resistor) to obtain an input range on the order of 100 µV.

NOTE: The EEG signals to be measured are on the order of tens of microvolts. However, dry electrodes are strongly affected by motion artifacts, which produce oscillations on the order of 100 µV due to the variability of the electrode-skin impedance. Increasing the input voltage range helps to limit EEG amplifier saturation, but it does not completely eliminate it. On the other hand, increasing the input voltage range even further would be inconvenient, because it would degrade the voltage resolution available for measuring the desired EEG components. Ultimately, the two aspects must be balanced, also taking into account the bit resolution of the analog-to-digital converter inside the electroencephalograph board.

- Prepare three dry electrodes to connect to the electroencephalograph board. Use a passive electrode (no pre-amplification) as the reference electrode. The remaining two measuring electrodes should be active (i.e., include pre-amplification and possibly analog filtering).

NOTE: Electrodes placed on a hairy scalp area require pins to overcome the electrode-skin contact impedance. If possible, solder silver pins with flat heads (to limit discomfort for the user), or ideally use conductive (soft) rubber with an Ag/AgCl coating.

- Obtain commercial smart glasses with an Android operating system and a refresh rate of 60 Hz; a lower refresh rate can also be used. A higher refresh rate would be desirable for the stimuli because it would cause less eye fatigue, but no such solutions are currently available on the market.

- Download the source code of an Android application for communication or control, or develop one.

- Replace the virtual buttons in the application with flickering icons by modifying the corresponding objects (usually written in Java or Kotlin). White squares measuring at least 5% of the screen size are recommended. Usually, the bigger the stimulating square, the larger the SSVEP component to be detected, but an optimum can be found depending on the specific case. The recommended flickering frequencies are 10 Hz and 12 Hz. Implement the flicker on the graphics processing unit (GPU) to avoid overloading the central processing unit (CPU) of the smart glasses. For this purpose, use objects from the OpenGL library.

- Implement a module of the Android application for real-time processing of the input EEG stream. The Android USB service can be added so that the stream can be received via USB. The real-time processing may simply apply a sliding window to the EEG stream by considering the incoming packets. Calculate the power spectral densities at 10 Hz and 12 Hz with a fast Fourier transform function. A trained classifier can then determine, from these power spectral density features, whether the user is looking at the 10 Hz or the 12 Hz flickering icon.
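The range/resolution trade-off described in the NOTE on amplifier gain can be made concrete with a few lines of arithmetic. The sketch below is illustrative only: the 10-bit converter, the symmetric input range, and the amplitude values are assumptions, not specifications from this protocol, so substitute the figures from your board's datasheet.

```python
def lsb_uv(full_scale_uv, bits):
    """Quantization step (uV per code) when a full-scale span is split into 2**bits codes."""
    return full_scale_uv / (2 ** bits)


def codes_spanned(signal_uv, full_scale_uv, bits):
    """Number of ADC codes covered by a signal with the given peak-to-peak amplitude."""
    return signal_uv / lsb_uv(full_scale_uv, bits)


def saturates(peak_uv, full_scale_uv):
    """True if a zero-centred signal with this peak exceeds a symmetric input range."""
    return abs(peak_uv) > full_scale_uv / 2


if __name__ == "__main__":
    BITS = 10  # hypothetical ADC resolution; check the board's datasheet
    EEG_UV = 10.0  # assumed peak-to-peak size of the EEG components of interest
    ARTIFACT_UV = 100.0  # assumed peak size of dry-electrode motion artifacts
    for span in (100.0, 200.0, 400.0):  # candidate full-scale spans (peak-to-peak, uV)
        print(f"span {span:.0f} uV: LSB {lsb_uv(span, BITS):.3f} uV, "
              f"{codes_spanned(EEG_UV, span, BITS):.0f} codes per EEG swing, "
              f"artifact saturates: {saturates(ARTIFACT_UV, span)}")
```

Widening the span removes saturation from the assumed artifacts but leaves fewer codes to describe the EEG components, which is the balance the NOTE asks for.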

2. Calibrating the SSVEP-based brain-computer interface

NOTE: Healthy volunteers were chosen for this study. Exclude subjects with a history of brain disease. Participants were required to have normal or corrected-to-normal vision. They were instructed to stay relaxed during the experiments and to avoid unnecessary movements, especially of the head.

- Let the user wear the smart glasses with the Android application.

- Let the user wear a tight headband for holding the electrodes.

- Connect the low-cost electroencephalograph to a PC via a USB cable while the PC is disconnected from the mains power supply.

- Initially disconnect all the electrodes from the electroencephalograph acquisition board to start from a known condition.

- In this phase, the EEG stream is processed offline on the PC with a script compatible with the processing implemented in the Android application. Start the script to receive the EEG signals and visualize them.

- Check the displayed signal, which is processed offline: with no electrodes connected, it must contain only the quantization noise of the EEG amplifier.

- Connect the electrodes.

- Apply the passive electrode to the left ear with a custom clip, or use an ear-clip electrode. The output signal must remain unchanged at this step because the measuring differential channel is still an open circuit.

- Connect an active electrode to the negative terminal of the differential input of the measuring EEG channel, and apply it to the frontal region (Fpz standard location) with a headband. After a few seconds, the signal should return to zero (quantization noise).

- Connect the other active electrode to the positive terminal of the differential input of the measuring EEG channel, and apply it to the occipital region (Oz standard location) with the headband. A brain signal is now displayed, corresponding to the visual activity measured with respect to the frontal brain area (where no visual activity is expected).

- Acquire signals for system calibration.

- Repeatedly stimulate the user with 10 Hz and 12 Hz (and possibly other) flickering icons by starting the flickering icon in the Android application, and acquire and save the corresponding EEG signals for offline processing. Ensure that each stimulation in this phase consists of a single icon flickering for 10 s, and start the flickering icon by pressing the touchpad of the smart glasses while simultaneously starting the EEG acquisition and visualization script.

- From the 10 s signal associated with each stimulation, extract two features by using the fast Fourier transform: the power spectral density at 10 Hz and at 12 Hz. Optionally, also consider the power spectral densities at the second harmonics (20 Hz and 24 Hz) as further features.

- Use the representation of the acquired signals in the feature domain to train a support vector machine classifier. Use a tool (in Matlab or Python) to identify the parameters of a separating hyperplane, possibly with a kernel, based on the input features. The trained model will then be able to classify future observations of EEG signals.
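As a minimal sketch of the training step, the code below fits a linear SVM (no kernel) by stochastic subgradient descent on the hinge loss, in the style of the Pegasos algorithm. It is not the authors' tool; in practice a library implementation such as scikit-learn's `sklearn.svm.SVC` with `kernel="linear"` would normally be used. The feature vectors are assumed to be the PSD values at 10 Hz and 12 Hz (optionally plus 20 Hz and 24 Hz), and the label mapping +1 = 10 Hz icon, -1 = 12 Hz icon is a hypothetical convention. The demo data are synthetic.

```python
import random


def train_linear_svm(X, y, lam=0.01, epochs=200, seed=0):
    """Train a linear SVM with Pegasos-style stochastic subgradient descent.

    X: list of feature vectors, e.g. [PSD at 10 Hz, PSD at 12 Hz].
    y: labels in {-1, +1} (hypothetically, +1 = 10 Hz icon, -1 = 12 Hz icon).
    Returns (w, b). For simplicity the bias is learned as a weight on a constant
    feature, so it is regularized too (a small departure from the textbook SVM).
    """
    rng = random.Random(seed)
    data = [(list(x) + [1.0], t) for x, t in zip(X, y)]  # append the constant feature
    w = [0.0] * len(data[0][0])
    step = 0
    for _ in range(epochs):
        rng.shuffle(data)
        for x, t in data:
            step += 1
            eta = 1.0 / (lam * step)  # decreasing Pegasos learning rate
            margin = t * sum(wi * xi for wi, xi in zip(w, x))
            w = [(1.0 - eta * lam) * wi for wi in w]  # step on the L2 regularizer
            if margin < 1.0:  # hinge loss is active: move towards the sample
                w = [wi + eta * t * xi for wi, xi in zip(w, x)]
    return w[:-1], w[-1]


def predict(w, b, x):
    """Return +1 or -1 depending on which side of the hyperplane x lies."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b >= 0 else -1


if __name__ == "__main__":
    # Synthetic, illustrative features only (not data from the study).
    rng = random.Random(1)
    X = [[3 + rng.gauss(0, 0.3), 1 + rng.gauss(0, 0.3)] for _ in range(12)]
    X += [[1 + rng.gauss(0, 0.3), 3 + rng.gauss(0, 0.3)] for _ in range(12)]
    y = [1] * 12 + [-1] * 12
    w, b = train_linear_svm(X, y)
    acc = sum(predict(w, b, x) == t for x, t in zip(X, y)) / len(y)
    print("w =", w, "b =", b, "training accuracy =", acc)
```

The returned `(w, b)` pair is the kind of parameter set that step 3 copies into the Android application: a new feature vector `x` falls in the +1 class when `w·x + b >= 0`.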

3. Assembling the final wearable and portable SSVEP-based interface

- Disconnect the USB cable from the PC, and connect it directly to the smart glasses.

- Insert the parameters of the trained classifier into the Android application. The system is now ready.
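With the classifier parameters in place, the real-time module described in step 1 reduces to a loop like the one below. It is sketched in Python for readability; the application itself would be written in Java or Kotlin. The 256 Hz sampling rate, the 2 s window, the 32-sample packets, and the parameters `w=[1, -1]`, `b=0` (which simply pick the frequency with the larger power) are all assumptions for illustration. The PSD at each stimulation frequency is estimated as a single periodogram bin computed with a direct DFT sum; this equals reading one FFT bin when the window contains an integer number of cycles of that frequency (20 and 24 cycles for 10 Hz and 12 Hz in a 2 s window).

```python
import cmath
import math
from collections import deque


def band_power(x, fs, f):
    """Periodogram estimate |X(f)|^2 / (fs * N) of x at frequency f (Hz).

    The mean is removed first so that DC offsets do not leak into the estimate.
    """
    n = len(x)
    mean = sum(x) / n
    acc = sum((v - mean) * cmath.exp(-2j * math.pi * f * k / fs) for k, v in enumerate(x))
    return abs(acc) ** 2 / (fs * n)


class SlidingSSVEPDetector:
    """Two-class detector over a sliding window of the incoming EEG stream.

    freqs = (f_pos, f_neg): a score w.x + b >= 0 selects f_pos, otherwise f_neg.
    (w, b) are hyperplane parameters obtained during calibration.
    """

    def __init__(self, fs, window_s, freqs, w, b):
        self.fs = fs
        self.freqs = freqs
        self.w = w
        self.b = b
        self.buf = deque(maxlen=int(round(window_s * fs)))  # oldest samples drop out

    def push(self, packet):
        """Append a packet of samples; return a frequency once the window is full, else None."""
        self.buf.extend(packet)
        if len(self.buf) < self.buf.maxlen:
            return None
        window = list(self.buf)
        feats = [band_power(window, self.fs, f) for f in self.freqs]
        score = sum(wi * fi for wi, fi in zip(self.w, feats)) + self.b
        return self.freqs[0] if score >= 0 else self.freqs[1]


if __name__ == "__main__":
    FS = 256  # assumed sampling rate (Hz)
    det = SlidingSSVEPDetector(FS, 2.0, (10.0, 12.0), w=[1.0, -1.0], b=0.0)
    t = 0
    for _ in range(20):  # synthetic 10 Hz "SSVEP" delivered in 32-sample packets
        packet = [10 * math.sin(2 * math.pi * 10 * (t + i) / FS) for i in range(32)]
        t += 32
        print(det.push(packet))
```

A decision is produced after every packet once the window is full, so the packet size sets how often the interface can update, while the window length sets how much signal each decision uses.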

Representative Results

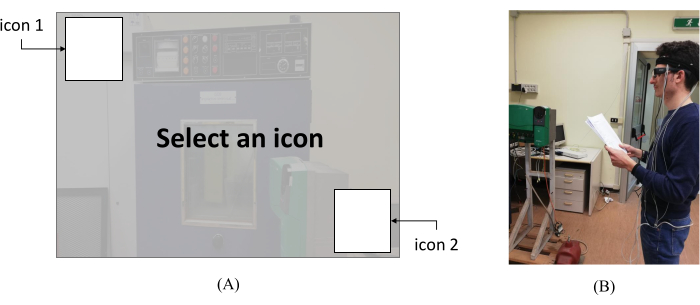

A possible implementation of the system described above is shown in Figure 1; this implementation allows the user to navigate in augmented reality through brain activity. The flickering icons on the smart glasses display correspond to actions for the application (Figure 1A); thus, these glasses replace a traditional interface based on button presses or a touchpad. The efficacy of such an interaction depends directly on the successful classification of the potentials evoked by the flickering. To verify this, a metrological characterization was first carried out for the system18, and then human users were involved in an experimental validation.

Figure 1: Possible implementation of the proposed brain-computer interface. (A) Representation of what the user sees through the smart glasses, namely the real scenario and the visual stimuli; (B) a user wearing the hands-free system.

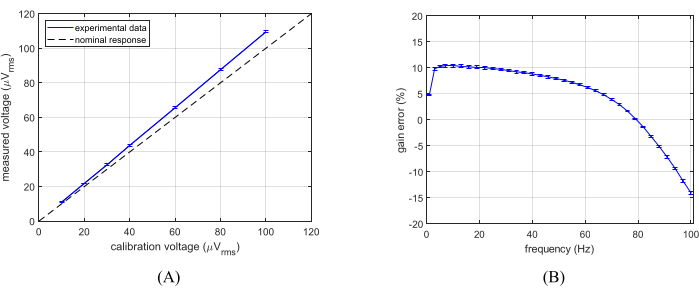

The electroencephalograph was characterized with respect to linearity and magnitude error. Linearity was assessed at 20 Hz by applying a sinusoidal input signal to the device at seven different amplitudes (10 µV, 20 µV, 30 µV, 40 µV, 60 µV, 80 µV, and 100 µV). Figure 2A, which plots the output voltage of the electroencephalograph as a function of the input voltage, highlights its clearly linear behavior. Linearity was also confirmed by Fisher's test (F-test) for the goodness of the linear fit. However, the figure also reveals some gain and offset error. These errors were investigated further by fixing the amplitude at 100 µV and varying the frequency. The results, reported in Figure 2B, confirm the magnitude error with respect to the nominal gain.

Figure 2: Results of the low-cost electroencephalograph characterization. (A) Linearity errors; (B) magnitude errors. The number of samples for each measuring point was 4,096. A more detailed discussion can be found in Arpaia et al.18.
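The gain and offset errors visible in Figure 2A correspond to the slope and intercept of a least-squares line through the (input, output) points. A minimal sketch follows; only the seven test amplitudes come from the text, while the nominal gain of 1 (output referred to the input) and the "measured" values in the demo are hypothetical. The sketch computes the regression F statistic; turning it into a p-value would need the F distribution (for example `scipy.stats.f.sf(F, 1, n - 2)`), which is outside the standard library.

```python
def linear_fit(x, y):
    """Ordinary least-squares line y = slope * x + intercept."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, my - slope * mx


def regression_f_statistic(x, y, slope, intercept):
    """F = (SSR / 1) / (SSE / (n - 2)) for a simple linear regression."""
    n = len(y)
    my = sum(y) / n
    fitted = [slope * xi + intercept for xi in x]
    ssr = sum((fi - my) ** 2 for fi in fitted)  # variation explained by the line
    sse = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))  # residual variation
    return float("inf") if sse == 0 else ssr / (sse / (n - 2))


def gain_offset_errors(x, y, nominal_gain=1.0):
    """Relative gain error (%) and offset (same unit as y) with respect to a nominal gain."""
    slope, intercept = linear_fit(x, y)
    return 100.0 * (slope / nominal_gain - 1.0), intercept


if __name__ == "__main__":
    amplitudes_uv = [10, 20, 30, 40, 60, 80, 100]  # test amplitudes from the text
    measured_uv = [11.1, 21.9, 32.4, 42.8, 63.9, 84.7, 105.6]  # hypothetical readings
    slope, intercept = linear_fit(amplitudes_uv, measured_uv)
    print("gain error (%), offset (uV):", gain_offset_errors(amplitudes_uv, measured_uv))
    print("F =", regression_f_statistic(amplitudes_uv, measured_uv, slope, intercept))
```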

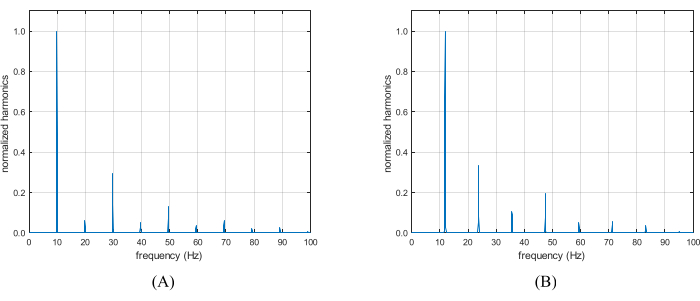

Finally, the flicker of the smart glasses was measured to highlight possible deviations from the nominal square waveform. Such deviations were especially visible in the amplitude spectrum of the 12 Hz flickering (Figure 3B). However, all these errors can be taken into account or compensated, thus demonstrating the feasibility of using consumer-grade equipment for the SSVEP-BCI system.

Figure 3: Results of the characterization of the commercial smart glasses in terms of the amplitude spectrum of the flickering buttons. (A) Flickering at 10 Hz; (B) flickering at 12 Hz. A more detailed discussion can be found in Arpaia et al.18.
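A frame-locked flicker can only change state at display refreshes, so on a 60 Hz display a 10 Hz stimulus spans 6 frames per period and a 12 Hz stimulus spans 5. The sketch below assumes frame-locked rendering (the protocol does not state how the flicker was timed). It shows that the 12 Hz case cannot have a 50% duty cycle and therefore carries a second harmonic that an ideal 50% square wave lacks. This is offered as one plausible contributor to the deviations in Figure 3B, not as the analysis performed in the cited characterization.

```python
import cmath
import math


def frame_pattern(refresh_hz, flicker_hz):
    """On (1) / off (0) state of the icon for each display frame in one flicker period."""
    frames = refresh_hz / flicker_hz
    if abs(frames - round(frames)) > 1e-9:
        raise ValueError("flicker frequency must divide the refresh rate exactly")
    frames = int(round(frames))
    on = frames // 2  # with an odd frame count, the 'on' phase is one frame shorter
    return [1] * on + [0] * (frames - on)


def harmonic_magnitude(pattern, k):
    """Magnitude of the k-th harmonic of the periodic frame sequence (DFT over one period)."""
    n = len(pattern)
    return abs(sum(v * cmath.exp(-2j * math.pi * k * i / n) for i, v in enumerate(pattern))) / n


if __name__ == "__main__":
    for f in (10, 12):
        p = frame_pattern(60, f)
        print(f, "Hz:", p, "duty =", sum(p) / len(p),
              "2nd harmonic =", round(harmonic_magnitude(p, 2), 3))
```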

Regarding the experimental validation, 20 subjects (7 female) took part in the campaign. Each subject underwent 24 trials with two icons flickering simultaneously on the smart glasses display, one at 10 Hz and the other at 12 Hz. In each trial, the subject had to stare at one of the two icons. The order of the stimulation frequencies was randomized by letting the subjects choose, without any predefined criteria, which icon to stare at first. Once 12 trials were completed at one frequency, the subject was asked to focus on the icon with the other frequency for the remaining trials. In the application used, the 12 Hz flickering icon appeared in the top-left corner, while the 10 Hz flickering icon appeared in the bottom-right corner. A single trial lasted 10.0 s, and a few seconds (of random duration) elapsed between consecutive trials. Shorter time windows could then be analyzed offline by cropping the recorded signals.

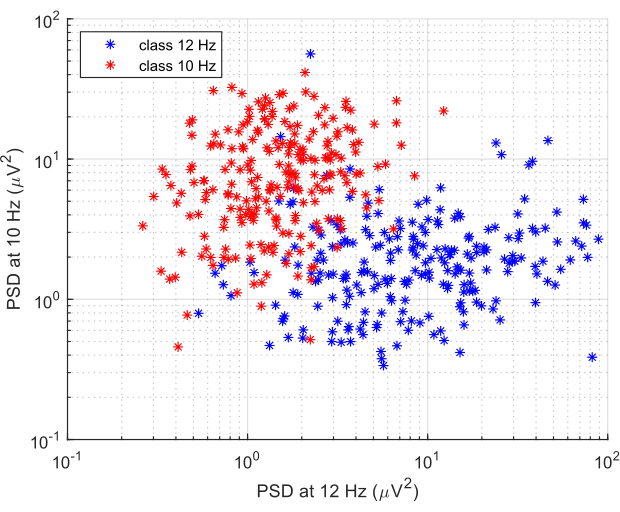

Figure 4: Representation of the signals measured during visual stimulation in the features domain. The signals associated with the 12 Hz flickering stimuli (class 12 Hz) are represented in blue, while the signals associated with the 10 Hz flickering stimuli (class 10 Hz) are represented in red. Features from all the subjects are considered here.

Figure 4 reports the 10 s signals represented by the two power spectral density features, namely at 12 Hz and 10 Hz. Each dot corresponds to a single trial, and the dots corresponding to the two flickering frequencies are distinguished by their color. The two classes are largely separated, although they partly overlap, which may cause misclassification. Classification results were obtained with four-fold cross-validation, so that the data were split four times into 18 trials for training and 6 trials for validation. In the 10 s stimulation case (Table 1), 8 subjects out of 20 reached 100% accuracy, whereas for other subjects the classification accuracy was as low as 71%. With a 2 s stimulation (obtained by cropping the signals in post-processing), only one subject reached 100%, and a notable number of subjects were close to chance level (50%). The data from all the subjects were also pooled, and the classification accuracy was computed on the pooled data set (row "All" in Table 1). Performance was also assessed in terms of the information transfer rate (ITR), which was above 30 bits/min on average. These results were improved by adding the power spectral densities at 20 Hz and 24 Hz as features: Table 2 shows that the mean accuracies increased or remained constant and that, at least in the 10 s stimulation case, the standard deviation diminished, indicating less dispersion in the classification performance across subjects. Finally, the classification accuracy on the pooled data was recalculated in this four-feature case and was again found to be close to the mean accuracies.

| Subject | 10 s stimulation accuracy (%) | 2 s stimulation accuracy (%) |
| --- | --- | --- |
| All | 94.2 | 77.5 |
| S1 | 100 | 100 |
| S2 | 100 | 98.8 |
| S3 | 100 | 92 |
| S4 | 100 | 92 |
| S5 | 100 | 88 |
| S6 | 100 | 87 |
| S7 | 100 | 87 |
| S8 | 100 | 64 |
| S9 | 97.9 | 57 |
| S10 | 96.7 | 88.8 |
| S11 | 96 | 64 |
| S12 | 95.8 | 78 |
| S13 | 95.4 | 83 |
| S14 | 95.4 | 53 |
| S15 | 94 | 80 |
| S16 | 92.9 | 61 |
| S17 | 91 | 78 |
| S18 | 89.6 | 61 |
| S19 | 81 | 52 |
| S20 | 71 | 49 |
| MEAN | 94.9 | 76 |
| STD | 7.4 | 16 |

Table 1: Cross-validation accuracy in classifying the SSVEP-related EEG signals. For each subject, the results associated with a 10 s stimulation are compared with those associated with a 2 s stimulation. The mean accuracy among all the subjects and its standard deviation are reported, as well as the accuracy obtained by pooling the data of all the subjects (row "All").
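The four-fold cross-validation behind Table 1 (24 trials per subject, 18 for training and 6 for validation in each fold) can be sketched as follows. Whether the original folds were stratified by class is not stated; this sketch assumes stratification (3 trials per class in each validation fold with 12 trials per class). The `fit` and `predict` arguments are caller-supplied, hypothetical hooks, so any classifier, such as a linear SVM, can be plugged in.

```python
import random


def stratified_kfold(labels, k=4, seed=0):
    """Yield (train_idx, val_idx) pairs; each fold keeps the class proportions."""
    rng = random.Random(seed)
    folds = [[] for _ in range(k)]
    by_class = {}
    for i, lab in enumerate(labels):
        by_class.setdefault(lab, []).append(i)
    for idx in by_class.values():
        rng.shuffle(idx)
        for j, i in enumerate(idx):
            folds[j % k].append(i)  # deal each class's trials round-robin into the folds
    for f in range(k):
        val = sorted(folds[f])
        train = sorted(i for g in range(k) if g != f for i in folds[g])
        yield train, val


def cv_accuracy(fit, predict, X, y, k=4, seed=0):
    """Mean validation accuracy over k folds.

    fit(X_train, y_train) -> model and predict(model, x) -> label are supplied by the caller.
    """
    accs = []
    for train, val in stratified_kfold(y, k, seed):
        model = fit([X[i] for i in train], [y[i] for i in train])
        correct = sum(predict(model, X[i]) == y[i] for i in val)
        accs.append(correct / len(val))
    return sum(accs) / k
```

Averaging the per-fold accuracies gives one cross-validated figure per subject; repeating the procedure on the pooled trials of all subjects would give a figure comparable to the "All" row.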

| Features | 10 s stimulation (mean accuracy ± std) % | 2 s stimulation (mean accuracy ± std) % |
| --- | --- | --- |
| 2D case | 94.9 ± 7.4 | 76 ± 16 |
| 4D case | 97.2 ± 4.3 | 76 ± 15 |

Table 2: Comparison of the classification performance when considering two PSD features (2D case) versus four PSD features (4D case) for the SSVEP-related EEG data. A 10 s stimulation is compared to a 2 s one by reporting the mean cross-validation accuracies and their associated standard deviations.
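The ITR quoted in the results is commonly computed with the definition of Wolpaw et al.: for N equiprobable classes, accuracy P, and time per selection T (in seconds), the bits per selection are B = log2 N + P log2 P + (1 - P) log2((1 - P)/(N - 1)), and the ITR is B · 60/T bits/min. The source does not state which variant or selection time was used, so the sketch below illustrates the standard definition rather than reproducing the reported figure. Returning zero at or below chance accuracy is a common convention, not part of the original formula.

```python
import math


def itr_bits_per_selection(n_classes, p):
    """Wolpaw ITR in bits per selection for accuracy p with n_classes targets."""
    if n_classes < 2 or not 0.0 <= p <= 1.0:
        raise ValueError("need n_classes >= 2 and 0 <= p <= 1")
    if p <= 1.0 / n_classes:
        return 0.0  # common convention: no information at or below chance
    bits = math.log2(n_classes) + p * math.log2(p)  # p > 0 is guaranteed here
    if p < 1.0:
        bits += (1.0 - p) * math.log2((1.0 - p) / (n_classes - 1))
    return bits


def itr_bits_per_min(n_classes, p, selection_s):
    """Scale bits per selection to bits per minute for a selection time in seconds."""
    return itr_bits_per_selection(n_classes, p) * 60.0 / selection_s


if __name__ == "__main__":
    # Two classes, perfect accuracy, 2 s per selection.
    print(itr_bits_per_min(2, 1.0, 2.0))  # → 30.0
```

Whether T should include the pauses between selections depends on whether the ITR is meant to describe the classifier alone or the interface as used in practice.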

Discussion

The proper functioning of the system involves two crucial aspects: SSVEP elicitation and signal acquisition. Aside from the specific devices chosen for the current study, SSVEP could be elicited with different devices providing a flickering light, though smart glasses are preferred to ensure wearability and portability. Analogously, other commercial electroencephalographs could be considered, provided that they are wearable, portable, and use a minimal number of dry electrodes to be user-friendly. Moreover, the system could be customized to use devices that are easy to program. Additionally, the processing strategy determines the final performance; although it did not appear crucial in the described system design, enhancing it would certainly contribute to a faster and more accurate system for communication and control applications10,11,19.

Some shortcomings of the system should be highlighted. First, for SSVEP elicitation, the flickering stimuli should have large dimensions and a stable flickering frequency. The former implies that the buttons of the Android application may be bulky and limit the extended reality scenario. The latter requires smart glasses with metrological characteristics that are not typically present in commercial devices. In addition, even when these conditions are fulfilled, the presence of an SSVEP depends on the subject. Notably, SSVEP amplitudes show intra-subject and inter-subject variability, which depends on several uncontrollable factors, such as user fatigue or attention/engagement13. This issue adds to the need to find optimal stimuli for proper SSVEP elicitation20.

On the other hand, signal acquisition is critical for final performance because a suitable signal-to-noise ratio must be achieved when measuring brain activity. To achieve the utmost wearability, portability, user comfort, and low cost, brain activity was measured with a single-channel non-invasive EEG and dry electrodes. Dry electrodes are largely compliant with daily-life requirements, but avoiding conductive gel worsens the electrode-skin contact. Therefore, the mechanical stability of the electrodes must be ensured by placing them correctly and keeping them stable during continuous use. Nevertheless, the drawback of the proposed acquisition system is that the signal quality is degraded with respect to more invasive neuroimaging techniques.

Despite the expected poor signal-to-noise ratio, the validation of the proposed system demonstrated that high performance for communication and control applications is possible. In particular, the metrological characterization of the consumer-grade equipment showed that the employed devices were suitable for the BCI applications of interest, and it suggested that similar devices would be suitable as well. The experimental validation revealed subject-dependent classification accuracies, but the overall conclusions were positive.

The classification accuracy can be enhanced by increasing the stimulation time. In such a case, the system responds more slowly, but alternative communication and control capabilities can still be provided. Moreover, a system without training (or at most with minimal training) could be obtained by using preliminary data from other subjects. To position the proposed method within the field, the results can be compared with the literature on non-invasive SSVEP-BCI. In more traditional BCIs, accuracy reaches about 94% even with a 2 s stimulation21, but such systems require extensive training and may not be wearable and portable. However, performance drops when trying to increase user-friendliness (e.g., when employing dry electrodes22, and even more so when using a single electrode23). In those cases, classification accuracy decreases to about 83%.

Therefore, the system described in this work brings BCI technology closer to daily-life applications by increasing user-friendliness while keeping the performance high, although the usage of this system in clinical settings may be inappropriate. Some participants commented that the dry electrodes in the occipital region were more disturbing than their traditional counterparts, but overall, they appreciated the wearability and portability of the system. Many upgrades are possible, and some of them are already being developed. For instance, the number of stimuli (and, hence, the number of commands) can be increased to four. Then, the stimuli themselves can be designed to strain the eyes less. Finally, the classification performance can be enhanced through a more advanced processing strategy to achieve a more accurate and/or faster system.

Disclosures

The authors have nothing to disclose.

Acknowledgements

This work was carried out as part of the ICT for Health project, which was financially supported by the Italian Ministry of Education, University and Research (MIUR), under the initiative Departments of Excellence (Italian Budget Law no. 232/2016), through an excellence grant awarded to the Department of Information Technology and Electrical Engineering of the University of Naples Federico II, Naples, Italy. The project was also made possible by the support of the Res4Net initiative and the TC-06 (Emerging Technologies in Measurements) of the IEEE Instrumentation and Measurement Society. The authors would also like to thank L. Callegaro, A. Cioffi, S. Criscuolo, A. Cultrera, G. De Blasi, E. De Benedetto, L. Duraccio, E. Leone, and M. Ortolano for their precious contributions in developing, testing, and validating the system.

Materials

| Name | Company | Catalog number | Comments |
| --- | --- | --- | --- |
| Conductive rubber with Ag/AgCl coating | ab medica s.p.a. | N/A | Alternative electrodes – type 2 |
| Earclip electrode | OpenBCI | N/A | Ear clip |
| EEG-AE | Olimex | N/A | Active electrodes |
| EEG-PE | Olimex | N/A | Passive electrode |
| EEG-SMT | Olimex | N/A | Low-cost electroencephalograph |
| Moverio BT-200 | Epson | N/A | Smart glasses |
| Snap electrodes | OpenBCI | N/A | Alternative electrodes – type 1 |

References

- Wolpaw, J. R., et al. Brain-computer interface technology: A review of the first international meeting. IEEE Transactions on Rehabilitation Engineering. 8 (2), 164-173 (2000).

- Zander, T. O., Kothe, C., Jatzev, S., Gaertner, M., Tan, D. S., Nijholt, A. Enhancing human-computer interaction with input from active and passive brain-computer interfaces. Brain-Computer Interfaces. 181-199 (2010).

- Ron-Angevin, R., et al. Brain-computer interface application: Auditory serial interface to control a two-class motor-imagery-based wheelchair. Journal of Neuroengineering and Rehabilitation. 14 (1), 49 (2017).

- Ahn, M., Lee, M., Choi, J., Jun, S. C. A review of brain-computer interface games and an opinion survey from researchers, developers and users. Sensors. 14 (8), 14601-14633 (2014).

- Bi, L., Fan, X. A., Liu, Y. EEG-based brain-controlled mobile robots: A survey. IEEE Transactions on Human-Machine Systems. 43 (2), 161-176 (2013).

- Arpaia, P., Callegaro, L., Cultrera, A., Esposito, A., Ortolano, M. Metrological characterization of a low-cost electroencephalograph for wearable neural interfaces in industry 4.0 applications. IEEE International Workshop on Metrology for Industry 4.0 & IoT (MetroInd4.0&IoT). 1-5 (2021).

- Rüßmann, M., et al. Industry 4.0: The future of productivity and growth in manufacturing industries. Boston Consulting Group. 9 (1), 54-89 (2015).

- Angrisani, L., Arpaia, P., Moccaldi, N., Esposito, A. Wearable augmented reality and brain computer interface to improve human-robot interactions in smart industry: A feasibility study for SSVEP signals. IEEE 4th International Forum on Research and Technology for Society and Industry (RTSI). 1-5 (2018).

- Brunner, C., et al. BNCI Horizon 2020: Towards a roadmap for the BCI community. Brain-Computer Interfaces. 2 (1), 1-10 (2015).

- Yin, E., Zhou, Z., Jiang, J., Yu, Y., Hu, D. A dynamically optimized SSVEP brain-computer interface (BCI) speller. IEEE Transactions on Biomedical Engineering. 62 (6), 1447-1456 (2014).

- Chen, L., et al. Adaptive asynchronous control system of robotic arm based on augmented reality-assisted brain-computer interface. Journal of Neural Engineering. 18 (6), 066005 (2021).

- Ball, T., Kern, M., Mutschler, I., Aertsen, A., Schulze-Bonhage, A. Signal quality of simultaneously recorded invasive and non-invasive EEG. Neuroimage. 46 (3), 708-716 (2009).

- Grosse-Wentrup, M. What are the causes of performance variation in brain-computer interfacing. International Journal of Bioelectromagnetism. 13 (3), 115-116 (2011).

- Arpaia, P., Esposito, A., Natalizio, A., Parvis, M. How to successfully classify EEG in motor imagery BCI: A metrological analysis of the state of the art. Journal of Neural Engineering. 19 (3), (2022).

- Ajami, S., Mahnam, A., Abootalebi, V. Development of a practical high frequency brain-computer interface based on steady-state visual evoked potentials using a single channel of EEG. Biocybernetics and Biomedical Engineering. 38 (1), 106-114 (2018).

- Friedman, D., Nakatsu, R., Rauterberg, M., Ciancarini, P. Brain-computer interfacing and virtual reality. Handbook of Digital Games and Entertainment Technologies. 151-171 (2015).

- Wang, M., Li, R., Zhang, R., Li, G., Zhang, D. A wearable SSVEP-based BCI system for quadcopter control using head-mounted device. IEEE Access. 6, 26789-26798 (2018).

- Arpaia, P., Callegaro, L., Cultrera, A., Esposito, A., Ortolano, M. Metrological characterization of consumer-grade equipment for wearable brain-computer interfaces and extended reality. IEEE Transactions on Instrumentation and Measurement. 71, 1-9 (2021).

- Wolpaw, J. R., Birbaumer, N., McFarland, D. J., Pfurtscheller, G., Vaughan, T. M. Brain-computer interfaces for communication and control. Clinical Neurophysiology. 113 (6), 767-791 (2002).

- Duszyk, A., et al. Towards an optimization of stimulus parameters for brain-computer interfaces based on steady state visual evoked potentials. PLoS One. 9 (11), 112099 (2014).

- Prasad, P. S., et al. SSVEP signal detection for BCI application. IEEE 7th International Advance Computing Conference (IACC). 590-595 (2017).

- Xing, X., et al. A high-speed SSVEP-based BCI using dry EEG electrodes. Scientific Reports. 8, 14708 (2018).

- Luo, A., Sullivan, T. J. A user-friendly SSVEP-based brain-computer interface using a time-domain classifier. Journal of Neural Engineering. 7 (2), 026010 (2010).