Simultaneous Eye Tracking and Single-Neuron Recordings in Human Epilepsy Patients

Summary

We describe a method to conduct single-neuron recordings with simultaneous eye tracking in humans. We demonstrate the utility of this method and illustrate how we used this approach to identify neurons in the human medial temporal lobe that encode the targets of a visual search.

Abstract

Intracranial recordings from patients with intractable epilepsy provide a unique opportunity to study the activity of individual human neurons during active behavior. Eye tracking is an indispensable tool for quantifying behavior, particularly for studying visual attention. However, eye tracking is challenging to use concurrently with invasive electrophysiology, and this combination has consequently been little used. Here, we present a proven experimental protocol to conduct single-neuron recordings with simultaneous eye tracking in humans. We describe how the systems are connected and the optimal settings for recording neurons and eye movements. To illustrate the utility of this method, we summarize results that were made possible by this setup. These data show how eye tracking during a memory-guided visual search task allowed us to describe a new class of neurons, called target neurons, whose response reflected top-down attention to the current search target. Lastly, we discuss the significance of this setup and solutions to potential problems. Together, our protocol and results suggest that single-neuron recordings with simultaneous eye tracking in humans are an effective method for studying human brain function. The approach provides a key missing link between animal neurophysiology and human cognitive neuroscience.

Introduction

Human single-neuron recordings are a unique and powerful tool to explore the function of the human brain with extraordinary spatial and temporal resolution1. Recently, single-neuron recordings have gained wide use in the field of cognitive neuroscience because they permit the direct investigation of processes central to human cognition. These recordings are made possible by the clinical need to determine the location of epileptic foci, for which depth electrodes are temporarily implanted into the brains of patients with suspected focal epilepsy. With this setup, single-neuron recordings can be obtained using microwires protruding from the tip of the hybrid depth electrode (a detailed description of the surgical methodology for inserting hybrid depth electrodes is provided in our previous protocol2). Among other topics, this method has been used to study human memory3,4, emotion5,6, and attention7,8.

Eye tracking measures gaze position and eye movements (fixations and saccades) during cognitive tasks. Video-based eye trackers typically use the corneal reflection and the center of the pupil as features to track over time9. Eye tracking is an important method to study visual attention because the gaze location indicates the focus of attention during most natural behaviors10,11,12. Eye tracking has been used extensively to study visual attention in healthy individuals13 and neurological populations14,15,16.

While single-neuron recordings and eye tracking are each used extensively in humans, few studies have used both simultaneously. As a result, it remains largely unknown how neurons in the human brain respond to eye movements and whether they are sensitive to the currently fixated stimulus. This is in contrast to studies with macaques, where eye tracking with simultaneous single-neuron recordings has become a standard tool. To directly investigate the neuronal response to eye movements, we combined human single-neuron recordings and eye tracking. Here we describe the protocol to conduct such experiments and then illustrate the results through a concrete example.

Despite the established role of the human medial temporal lobe (MTL) in both object representation17,18 and memory3,19, it remains largely unknown whether MTL neurons are modulated by top-down attention to behaviorally relevant goals. Studying such neurons is an important first step toward understanding how goal-relevant information influences bottom-up visual processing. Here, we demonstrate the utility of eye tracking during neuronal recordings using guided visual search, a well-established paradigm for studying goal-directed behavior20,21,22,23,24,25. Using this method, we recently described a class of neurons, called target neurons, that signal whether the currently attended stimulus is the goal of an ongoing search8. Below, we present the protocol needed to reproduce this study. Although we use this example throughout, the protocol can easily be adapted to study other visual attention tasks.

Protocol

1. Participants

- Recruit neurosurgical patients with intractable epilepsy who are undergoing placement of intracranial electrodes to localize their epileptic seizures.

- Insert depth electrodes with embedded microwires into all clinically indicated target locations, which typically include a subset of amygdala, hippocampus, anterior cingulate cortex, and pre-supplementary motor area. See our previous protocol2 for implantation details.

- Once the patient returns to the epilepsy monitoring unit, connect the recording equipment for both macro- and micro- recordings. This includes carefully preparing a head-wrap that includes head stages (see our previous description for details2). Then, wait for the patient to recover from the surgery and conduct testing when the patient is fully awake (typically at least 36 to 48 h after surgery).

2. Experimental setup

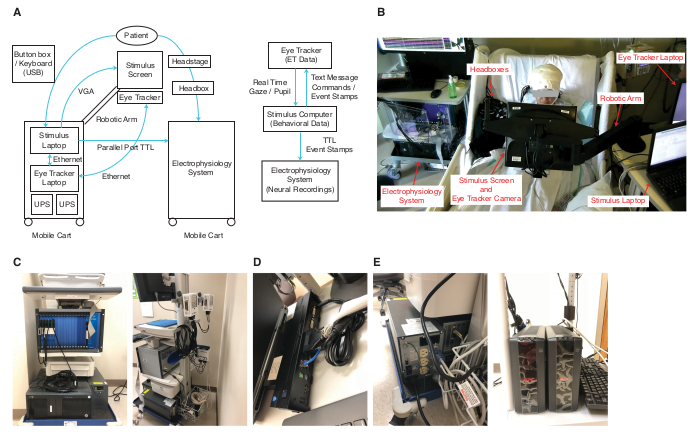

- Connect the stimulus computer to the electrophysiology system and eye tracker following the diagram in Figure 1.

- Use a remote, non-invasive infrared eye tracking system (see Table of Materials). Place the eye tracking system on a robust mobile cart (Figure 1A, B). To the same cart, attach a flexible arm that holds an LCD display. Use the remote mode to track the patient's head and eyes.

- Place a fully charged uninterruptible power supply (UPS) on the eye tracking cart and connect all devices related to eye tracking (i.e., the LCD in front of the patient, the eye tracker camera and light source, and the eye tracker host computer) to the UPS rather than to wall power.

- Adjust the distance between the patient and the LCD screen to 60-70 cm and adjust the angle of the LCD screen so that the surface of the screen is approximately parallel to the patient’s face. Adjust the height of the screen relative to the patient’s head such that the camera of the eye tracker is approximately at the height of the patient’s nose.

- Provide the patient with the button box or keyboard. Verify that triggers (TTLs) and button presses are recorded properly before starting the experiment.
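To make the 60-70 cm viewing distance concrete, the following Python sketch converts degrees of visual angle into centimeters and pixels on the screen, which is also useful when interpreting the validation thresholds in step 4. The screen width (53 cm) and resolution (1920 px) in the demo are hypothetical placeholders, not values from this protocol; substitute those of the actual LCD.

```python
import math

def cm_per_degree(distance_cm: float, degrees: float = 1.0) -> float:
    """On-screen extent (cm) subtended by `degrees` of visual angle at `distance_cm`."""
    return 2.0 * distance_cm * math.tan(math.radians(degrees) / 2.0)

def pixels_per_degree(distance_cm: float, screen_width_cm: float, screen_width_px: int) -> float:
    """Pixels per degree near the screen center, assuming square pixels."""
    return cm_per_degree(distance_cm) * screen_width_px / screen_width_cm

if __name__ == "__main__":
    # Hypothetical display (53 cm wide, 1920 px across); not taken from the protocol.
    for d in (60.0, 65.0, 70.0):
        print(f"{d:.0f} cm: 1 deg = {cm_per_degree(d):.2f} cm "
              f"= {pixels_per_degree(d, 53.0, 1920):.1f} px")
```

Because the conversion uses one factor for the whole screen, it is most accurate near the center; at the edges the true pixels-per-degree value is slightly larger.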

3. Single-neuron recording

- Start the acquisition software. First, visually inspect the broadband (0.1 Hz – 8 kHz) local field potentials and make sure they are not contaminated by line noise. If they are, follow standard procedures to remove the noise (see Discussion).

- To identify single neurons, band-pass filter the signal (300 Hz – 8 kHz). Select one of the eight microwires in each bundle as the reference. Test different references and choose the one for which (1) the other seven channels show clear neurons and (2) the reference itself does not contain neurons. Set the input range to ± 2,000 µV.

- Enable saving the data as an NRD file (i.e., the broadband raw data file that will be used for subsequent off-line spike sorting) before recording data. Set the sampling rate to 32 kHz.
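The acquisition software applies the band-pass filter online, and offline spike sorting typically applies its own filtering, so the protocol itself needs no code here. To make the filter band concrete, the following Python sketch band-limits a raw trace sampled at 32 kHz to roughly 300 Hz – 8 kHz by cascading one second-order high-pass and one second-order low-pass section (coefficients from the widely used RBJ audio-EQ cookbook). This is an illustrative choice, not the filter implemented by the electrophysiology system. It runs in a single causal pass and therefore shifts the phase of spike waveforms; a zero-phase (forward-backward) variant would be preferable for waveform analysis.

```python
import math

FS = 32_000.0  # sampling rate set in step 3 (Hz); Nyquist is 16 kHz, well above 8 kHz

def biquad_coeffs(kind, f0, fs=FS, q=1 / math.sqrt(2)):
    """Normalized coefficients of one second-order section (RBJ cookbook).

    q = 1/sqrt(2) gives a Butterworth (maximally flat) response.
    Returns ((b0, b1, b2), (a1, a2)) with a0 already divided out.
    """
    w0 = 2 * math.pi * f0 / fs
    cw = math.cos(w0)
    alpha = math.sin(w0) / (2 * q)
    if kind == "highpass":
        b = ((1 + cw) / 2, -(1 + cw), (1 + cw) / 2)
    elif kind == "lowpass":
        b = ((1 - cw) / 2, 1 - cw, (1 - cw) / 2)
    else:
        raise ValueError(f"unknown filter kind: {kind}")
    a0 = 1 + alpha
    return tuple(bi / a0 for bi in b), (-2 * cw / a0, (1 - alpha) / a0)

def biquad_filter(x, coeffs):
    """Apply one section to a sequence in a single causal pass (direct form I)."""
    (b0, b1, b2), (a1, a2) = coeffs
    x1 = x2 = y1 = y2 = 0.0
    out = []
    for xn in x:
        yn = b0 * xn + b1 * x1 + b2 * x2 - a1 * y1 - a2 * y2
        x2, x1 = x1, xn
        y2, y1 = y1, yn
        out.append(yn)
    return out

def spike_band(x, low=300.0, high=8000.0, fs=FS):
    """Band-limit a raw trace to roughly low..high Hz (high-pass, then low-pass)."""
    hp = biquad_filter(x, biquad_coeffs("highpass", low, fs))
    return biquad_filter(hp, biquad_coeffs("lowpass", high, fs))
```

Each cutoff is at the -3 dB point and the roll-off is only 12 dB per octave, so low-frequency LFP components are attenuated rather than removed; a 50-60 Hz component, for example, is reduced by roughly 30 dB.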

4. Eye tracking

- Start the eye tracking software. Because the system does not require head fixation, place the target sticker on the patient's forehead so that the eye tracker can compensate for head movements.

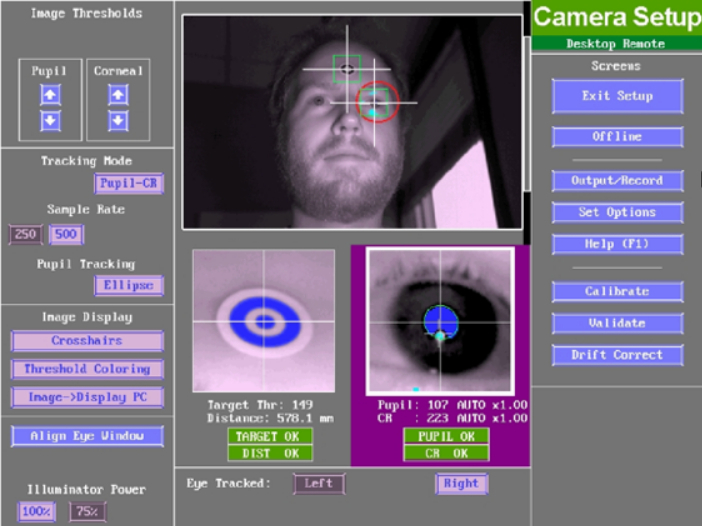

- Adjust the distance and angle between the eye tracker and patient so that the target marker, head distance, pupil, and corneal reflection (CR) are marked as ready (as shown in green in the eye tracking software; Figure 2 shows a good example camera setup screen). Click on the eye to be recorded and set the sampling rate to 500 Hz.

- Use the automatic adjustment of the pupil and CR thresholds. For patients wearing glasses, adjust the position and/or angle of the illuminator and camera so that reflections from the glasses do not interfere with pupil acquisition.

- Calibrate the eye tracker with the built-in 9-point grid method at the beginning of each block. Confirm that the eye positions (shown as “+”) form a regular 9-point grid. Otherwise, redo the calibration.

- Accept the calibration and perform validation. Accept the validation if the maximal validation error is < 2° and the average validation error is < 1°. Otherwise, redo the validation.

- Perform drift correction and proceed to the actual experiment.
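The eye tracking software reports validation errors itself; the following Python sketch only restates the acceptance rule from this step (maximal error < 2°, average error < 1°), for example for logging the outcome of each block. The helper that converts pixel offsets into degrees assumes a single pixels-per-degree factor for the whole screen, and all function names and demo coordinates are hypothetical.

```python
import math
from statistics import mean

MAX_ERROR_DEG = 2.0   # protocol: maximal validation error must be < 2 deg
MEAN_ERROR_DEG = 1.0  # protocol: average validation error must be < 1 deg

def validation_errors_deg(gaze_px, target_px, px_per_deg):
    """Distance between mean gaze and each validation target, in degrees.

    Uses one pixels-per-degree factor for the whole screen, a small-angle
    approximation that slightly misestimates errors near the screen edges.
    """
    return [math.dist(g, t) / px_per_deg for g, t in zip(gaze_px, target_px)]

def accept_validation(errors_deg):
    """True if both acceptance criteria from step 4 are met."""
    if not errors_deg:
        raise ValueError("no validation points")
    return max(errors_deg) < MAX_ERROR_DEG and mean(errors_deg) < MEAN_ERROR_DEG

if __name__ == "__main__":
    # Hypothetical 9-point validation in pixel coordinates, ~38 px/deg.
    targets = [(x, y) for y in (100, 540, 980) for x in (160, 960, 1760)]
    gaze = [(x + 10, y - 5) for x, y in targets]
    errs = validation_errors_deg(gaze, targets, 38.0)
    print(f"max {max(errs):.2f} deg, mean {mean(errs):.2f} deg, "
          f"accept={accept_validation(errs)}")
```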

5. Task

- In this visual search task, use the stimuli from our previous study14 and follow the task procedure as described before8.

- Provide task instructions to participants. Instruct the participants to find the target item in the search array and respond as soon as possible. Instruct the participants to press the left button of a response box (see Table of Materials) if they find the target and the right button if they think the target is absent. Explicitly instruct the participants that there will be target-present and target-absent trials.

- Start the stimulus presentation software (see Table of Materials) and run the task: present a target cue for 1 s, followed by the search array. Record button presses and provide trial-by-trial feedback (Correct, Incorrect, or Time Out) to participants.
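The task itself runs in the stimulus presentation software; the following Python sketch only spells out the trial logic described above: left button means "target present", right button means "target absent", and no press before the timeout yields "Time Out". The 14 s timeout and the 1-2 s blank screen are taken from the Figure 3A legend; drawing the blank duration from a uniform distribution is an assumption, and the function names are hypothetical.

```python
import random

CUE_S = 1.0           # target cue duration (step 5)
TIMEOUT_S = 14.0      # response timeout (Figure 3A)
BLANK_S = (1.0, 2.0)  # blank screen after feedback (Figure 3A)

def feedback(target_present, button, rt_s):
    """Map one response to the feedback text shown to the participant.

    button: "left" (target found), "right" (target absent), or None (no press).
    rt_s: reaction time in seconds from array onset, or None.
    """
    if button is None or rt_s is None or rt_s > TIMEOUT_S:
        return "Time Out"
    said_present = button == "left"
    return "Correct" if said_present == target_present else "Incorrect"

def blank_duration(rng=random):
    """Inter-trial blank; uniform jitter between 1 and 2 s is an assumption."""
    return rng.uniform(*BLANK_S)

if __name__ == "__main__":
    print(feedback(True, "left", 2.3), feedback(False, "left", 4.1),
          feedback(True, None, None), round(blank_duration(), 2))
```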

6. Data analysis

- Because the acquisition and eye tracking systems run on different clocks, use the behavioral log file to find the alignment timestamps for the electrophysiology and eye tracking recordings. Match the triggers from the two recordings before proceeding to further analysis. Then extract data segments according to the timestamps and analysis windows, separately for the electrophysiology and eye tracking data.

- Use the semi-automatic template matching algorithm Osort26 and follow the steps described before2,26 to identify putative single neurons. Assess the quality of the sorting before moving to further analysis2.

- To analyze eye movement data, first convert the EDF data from the eye tracker into ASCII format and extract fixations and saccades. Then, import the ASCII file and save the following information into a MAT file: (1) timestamps, (2) eye coordinates (x, y), (3) pupil size, and (4) event timestamps. Parse the continuous recording into individual trials.

- Follow previously described procedures to analyze the correlation between spikes and behavior8.
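The protocol performs the eye movement parsing in MATLAB. To illustrate the logic, the following Python sketch groups fixation (EFIX) and saccade (ESACC) end events from the ASCII file produced by SR Research's EDF2ASC converter into trials. The assumed column layout (EFIX: eye, start, end, duration, mean x, mean y, mean pupil; ESACC: eye, start, end, duration, start x/y, end x/y, ...) follows the usual EDF2ASC output but should be checked against the EyeLink documentation. The trial-start message "TRIALID <n>" is a hypothetical convention; use whatever messages the stimulus computer actually sends.

```python
from collections import defaultdict

def parse_asc(lines, trial_msg="TRIALID"):
    """Group EFIX/ESACC events and messages from an EDF2ASC export by trial.

    Events before the first trial message are ignored. Times are kept in the
    eye tracker's own clock (ms).
    """
    trials = defaultdict(lambda: {"fix": [], "sacc": [], "msg": []})
    trial = None
    for line in lines:
        parts = line.split()
        if not parts:
            continue
        tag = parts[0]
        if tag == "MSG" and len(parts) >= 3:
            if parts[2] == trial_msg and len(parts) >= 4:
                trial = int(parts[3])  # a new trial starts at this message
            if trial is not None:
                trials[trial]["msg"].append((float(parts[1]), " ".join(parts[2:])))
        elif trial is None:
            continue  # ignore events before the first trial
        elif tag == "EFIX" and len(parts) >= 8:
            start, end = float(parts[2]), float(parts[3])
            x, y = float(parts[5]), float(parts[6])
            trials[trial]["fix"].append((start, end, x, y))
        elif tag == "ESACC" and len(parts) >= 9:
            trials[trial]["sacc"].append((float(parts[2]), float(parts[3])))
    return dict(trials)

if __name__ == "__main__":
    demo = [
        "MSG 1000 TRIALID 1",
        "EFIX R 1002 1200 200 512.0 384.0 1000",
        "ESACC R 1202 1230 30 512.0 384.0 700.0 300.0 3.1 250",
    ]
    print(parse_asc(demo))
```

With a real export, the same function can be applied to an open file handle, since iterating over a file yields its lines.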

Representative Results

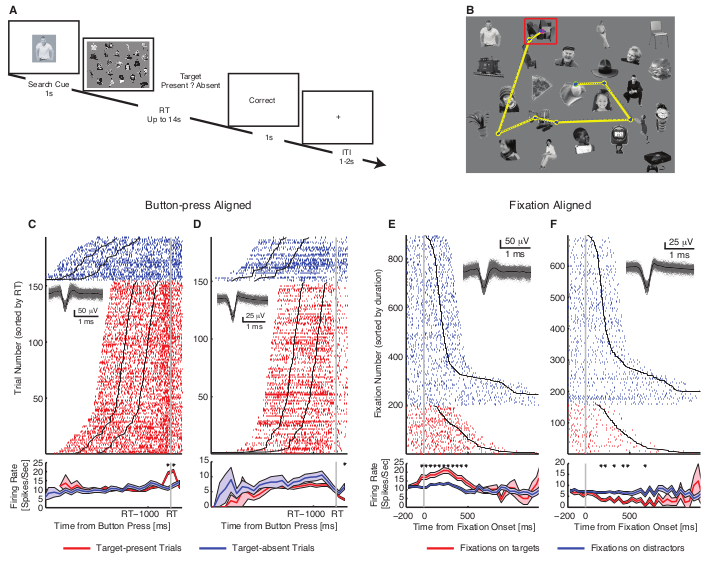

To illustrate the usage of the above-mentioned method, we next briefly describe a use-case that we recently published8. We recorded 228 single neurons from the human medial temporal lobe (MTL; amygdala and hippocampus) while the patients were performing a visual search task (Figure 3A, B). During this task, we investigated whether the activity of neurons differentiated between fixations on targets and distractors.

First, when we aligned responses to the button press, we found neurons that showed differential activity between target-present and target-absent trials (Figure 3C, D). Importantly, simultaneous eye tracking made it possible to conduct a fixation-based analysis. To select target neurons, we used the mean firing rate in a time window starting 200 ms before fixation onset and ending 200 ms after fixation offset (i.e., next saccade onset). A subset of MTL neurons (50/228; 21.9%; binomial P < 10⁻²⁰) showed significantly different activity for fixations on targets vs. distractors (Figure 3E, F). One type of target neuron responded more strongly to targets than to distractors (target-preferring; 27/50 neurons; Figure 3E), whereas the other responded more strongly to distractors than to targets (distractor-preferring; 23/50; Figure 3F). Together, these results demonstrate that a subset of MTL neurons encode whether the current fixation landed on a target.
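The fixation-based selection window can be written down directly. The following Python sketch computes, for each fixation, the mean firing rate from 200 ms before fixation onset to 200 ms after fixation offset, and groups the rates by whether the fixation landed on the target. It assumes spike times and fixation times are already on a common clock in milliseconds (after the alignment in step 6); the function names are hypothetical, and the statistical comparison used in the original study8 is not reproduced here.

```python
PRE_MS, POST_MS = 200.0, 200.0  # window: 200 ms before onset to 200 ms after offset

def fixation_rate(spike_times_ms, onset_ms, offset_ms, pre=PRE_MS, post=POST_MS):
    """Mean firing rate (Hz) in the half-open window [onset - pre, offset + post)."""
    start, stop = onset_ms - pre, offset_ms + post
    n_spikes = sum(1 for t in spike_times_ms if start <= t < stop)
    return n_spikes / ((stop - start) / 1000.0)

def rates_by_label(spike_times_ms, fixations):
    """fixations: iterable of (onset_ms, offset_ms, is_target) tuples.

    Returns one firing rate per fixation, split into target and distractor lists.
    """
    out = {"target": [], "distractor": []}
    for onset, offset, is_target in fixations:
        key = "target" if is_target else "distractor"
        out[key].append(fixation_rate(spike_times_ms, onset, offset))
    return out
```

Because the window length varies with fixation duration, the rate (not the raw spike count) is compared across fixations.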

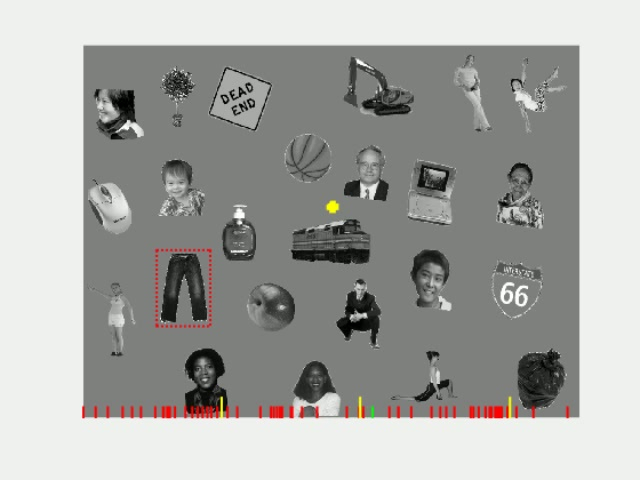

The dynamic process of visual search is demonstrated in Movie 1.

Figure 1. Experimental setup. (A) The left panels show a sketch of the connections between the different systems. The stimulus computer serves as the central controller. It connects to the electrophysiology system through the parallel port and sends TTL pulses as triggers. The stimulus computer connects to the eye tracking system through an Ethernet cable, over which it sends text messages to the eye tracker and receives the current gaze position online. The stimulus computer also presents stimuli on the stimulus screen (VGA) and receives responses from the patient via a USB button box or keyboard. Blue lines show the connections between devices, and arrows show the direction of communication. The right panel shows the signal flow between systems and the data saved by each system. (B) An example setup with key parts of the system labeled. (C) Electrophysiology system. (D) Docking station with the parallel port and Ethernet port. (E) UPS for the electrophysiology system (left) and the eye tracking system (right).

Figure 2. Example eye tracker camera setup screen. Target marker bounding box, eye bounding box, head distance, pupil, and corneal reflection (CR) should be marked as green and/or “OK” before proceeding.

Figure 3. Example results. (A) Task. The search cue was presented for 1 s, immediately followed by the search array. Participants were instructed to indicate by button press whether the target was present or absent (timeout: 14 s). Trial-by-trial feedback (‘Correct’, ‘Incorrect’, or ‘Time Out’) was given immediately after the button press, followed by a blank screen for 1-2 s. (B) Example visual search arrays with fixations indicated. Each circle represents a fixation. Green circle: first fixation. Magenta circle: last fixation. Yellow line: saccades. Blue dot: raw gaze position. Red box: target. (C-F) Single-neuron examples. (C-D) Button-press-aligned examples. (C) A neuron that increased its firing rate for target-present trials, but not for target-absent trials. (D) A neuron that decreased its firing rate for target-present trials, but not for target-absent trials. Trials are aligned to the button press (gray line) and sorted by reaction time. Black lines represent the onset and offset of the search cue (1 s duration). The inset shows the waveforms of each unit. Asterisks indicate a significant difference between target-present and target-absent trials in that bin (P < 0.05, two-tailed t-test, Bonferroni-corrected; bin size = 250 ms). Shaded area denotes ±SEM across trials. (E-F) Fixation-aligned examples. t = 0 is fixation onset. (E) A neuron that increased its firing rate when fixating on targets, but not distractors (the same neuron as in (C)). (F) A neuron that decreased its firing rate when fixating on targets, but not distractors (the same neuron as in (D)). Fixations are sorted by fixation duration (black line shows the start of the next saccade). Asterisks indicate a significant difference between fixations on targets and distractors in that bin (P < 0.05, two-tailed t-test, Bonferroni-corrected; bin size = 50 ms). This figure has been modified with permission from8.

Movie 1. Typical trials of visual search with responses from a single target neuron. In target-present trials, this neuron increased its firing rate regardless of the identity of the cue. Yellow dot denotes eye position. Yellow vertical bars at the bottom are event markers (i.e., cue onset, array onset, and inter-trial-interval onset). Red vertical bars at the bottom show spikes, which are also played as sound. The red dotted box denotes the location of the search target (not shown to participants).

Discussion

In this protocol, we described how to employ single-neuron recordings with concurrent eye tracking and described how we used this method to identify target neurons in the human MTL.

The setup involves three computers: one executing the task (stimulus computer), one running the eye tracker, and one running the acquisition system. To synchronize the three systems, the stimulus computer sends TTL triggers through the parallel port to the electrophysiology system (Figure 1C). At the same time, it sends the same trigger codes as text messages to the eye tracker over an Ethernet cable. In the example shown, the parallel port is located on the docking station of the stimulus computer (Figure 1D); alternatively, a PCI Express parallel port card or a similar device can be used.
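Because each trigger is recorded by both the electrophysiology system and the eye tracker, matched trigger pairs can be used to map eye tracker time onto electrophysiology time. One common way to do this, shown here as an assumption rather than as the authors' exact procedure, is a least-squares line through the matched trigger times, t_ephys ≈ slope · t_eye + intercept, which captures both the offset between the clocks and any slow drift. The sketch assumes eye tracker timestamps in milliseconds, electrophysiology timestamps in microseconds, and trigger pairs already matched one-to-one; the function names are hypothetical.

```python
def fit_clock_map(t_eye_ms, t_ephys_us):
    """Least-squares fit of t_ephys_us ~ slope * t_eye_ms + intercept."""
    n = len(t_eye_ms)
    if n != len(t_ephys_us) or n < 2:
        raise ValueError("need at least two matched trigger pairs")
    mx = sum(t_eye_ms) / n
    my = sum(t_ephys_us) / n
    sxx = sum((x - mx) ** 2 for x in t_eye_ms)
    sxy = sum((x - mx) * (y - my) for x, y in zip(t_eye_ms, t_ephys_us))
    slope = sxy / sxx
    intercept = my - slope * mx
    return slope, intercept

def eye_to_ephys(t_eye_ms, slope, intercept):
    """Convert eye tracker timestamps (ms) into the electrophysiology clock (us)."""
    return [slope * t + intercept for t in t_eye_ms]

def max_residual_us(t_eye_ms, t_ephys_us, slope, intercept):
    """Largest misfit of any trigger pair; large values suggest a mismatched pair."""
    return max(abs(y - (slope * x + intercept)) for x, y in zip(t_eye_ms, t_ephys_us))
```

With both clocks running at their nominal rates, the slope should be close to 1000 (µs per ms); a large maximal residual usually points to a missing or mismatched trigger rather than to genuine clock drift.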

The mobile cart for the stimulus computer and eye tracker, with the flexible arm attached, allows flexible positioning of the screen in front of the patient (Figure 1A, B). Using a UPS to power the devices on the cart is strongly recommended to eliminate the line noise that the eye tracking devices can otherwise introduce into the electrophysiological recordings because of their proximity to the patient's head (Figure 1E). Furthermore, laptops running on battery power should be used as the stimulus computer and the eye tracker computer.

If the recordings are contaminated by noise, the eye tracker should be removed first to assess whether it is the source of the noise. If it is not, standard procedures should be used to remove the noise before the eye tracker is used again2. Typical sources of line noise include the patient bed, IV devices, other devices in the patient room, and ground loops created by plugging different systems into different outlets. If the eye tracker is the source of the noise, all of its devices (the camera, light source, and LCD screen) should be powered from the battery and/or UPS. If noise persists, the LCD screen and/or its power supply is likely faulty, and a different screen or power supply should be used. If possible, use an LCD screen with an external power supply. It is also important to ensure that the TTL cable does not introduce noise (e.g., by using a TTL isolator).
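When comparing recordings with and without the eye tracker, it can help to quantify line noise rather than relying on visual inspection alone. The following Python sketch estimates the amplitude at the mains frequency and its first harmonics with the Goertzel algorithm, which computes a single DFT bin. It assumes 60 Hz mains (as in the United States; use 50 Hz elsewhere) and a trace given as a list of samples; the function names are hypothetical. A segment containing a whole number of mains cycles (e.g., 1 s) keeps the estimate from leaking into neighboring bins.

```python
import math

def tone_amplitude(x, fs, f):
    """Amplitude of the DFT bin nearest f (Hz), computed with the Goertzel algorithm."""
    n = len(x)
    k = round(n * f / fs)              # index of the nearest DFT bin
    w = 2.0 * math.pi * k / n
    coeff = 2.0 * math.cos(w)
    s1 = s2 = 0.0
    for v in x:
        s0 = v + coeff * s1 - s2
        s2, s1 = s1, s0
    power = s1 * s1 + s2 * s2 - coeff * s1 * s2  # |X[k]|^2
    return 2.0 * math.sqrt(max(power, 0.0)) / n  # peak amplitude of a sinusoid

def line_noise_report(x, fs, mains=60.0, harmonics=3):
    """Amplitude at the mains frequency and its harmonics, in the units of x."""
    return {h * mains: tone_amplitude(x, fs, h * mains) for h in range(1, harmonics + 1)}
```

Running the report on the same channel before and after removing (or re-powering) the eye tracker gives a direct before/after comparison of the noise amplitude.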

The significance of recording single-neuron data in neurosurgical patients simultaneously with eye tracking is high for several reasons. First, single-neuron recordings have high spatial and temporal resolution and thereby allow the investigation of fast cognitive processes such as visual search. Second, they provide a much-needed link between human cognitive neuroscience and animal neurophysiology, which relies heavily on eye tracking. Third, because human single-neuron recordings are often performed simultaneously in multiple brain regions, our approach provides the temporal resolution needed to distinguish visually driven responses from top-down modulation originating in the frontal cortex. In summary, single-neuron recordings with eye tracking make it possible to isolate specific processes that underlie goal-directed behavior. In addition, concurrent eye tracking permitted a fixation-based analysis, which greatly increased statistical power (compare the fixation-aligned responses in Figure 3E, F with the button-press-aligned responses in Figure 3C, D).

A challenge of this method is that the eye tracking system may introduce additional noise into the electrophysiological data. However, with the procedures outlined in this protocol, such noise can be eliminated, and once these procedures are established, they can be executed routinely. Furthermore, eye tracking lengthens the time needed for a given experiment because additional setup is required, especially for patients in whom calibration is challenging, such as those with small pupils or glasses. However, the benefits of simultaneous eye tracking are worth this additional effort for many studies, making eye tracking a valuable addition to single-neuron recordings.

Disclosures

The authors have nothing to disclose.

Acknowledgements

We thank all patients for their participation. This research was supported by the Rockefeller Neuroscience Institute, the Autism Science Foundation and the Dana Foundation (to S.W.), an NSF CAREER award (1554105 to U.R.), and the NIH (R01MH110831 and U01NS098961 to U.R.). The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript. We thank James Lee, Erika Quan, and the staff of the Cedars-Sinai Simulation Center for their help in producing the demonstration video.

Materials

| Name of Material/Equipment | Company | Catalog Number | Comments |
|---|---|---|---|
| Cedrus Response Box | Cedrus (https://cedrus.com/) | RB-844 | Button box |
| Dell Laptop | Dell (https://dell.com) | Precision 7520 | Stimulus computer |
| EyeLink Eye Tracker | SR Research (https://www.sr-research.com) | 1000 Plus Remote with laptop host computer and LCD arm mount | Eye tracking |
| MATLAB | MathWorks Inc | R2016a (RRID: SCR_001622) | Data analysis |
| Neuralynx Neurophysiology System | Neuralynx (https://neuralynx.com) | ATLAS 128 | Electrophysiology |
| Osort | Open source | v4.1 (RRID: SCR_015869) | Spike sorting algorithm |
| Psychophysics Toolbox | Open source | PTB3 (RRID: SCR_002881) | MATLAB toolbox to implement psychophysical experiments |

References

- Fried, I., Rutishauser, U., Cerf, M., Kreiman, G. Single Neuron Studies of the Human Brain: Probing Cognition. (2014).

- Minxha, J., Mamelak, A. N., Rutishauser, U., Sillitoe, R. V. Surgical and Electrophysiological Techniques for Single-Neuron Recordings in Human Epilepsy Patients. Extracellular Recording Approaches. 267-293 (2018).

- Rutishauser, U., Mamelak, A. N., Schuman, E. M. Single-Trial Learning of Novel Stimuli by Individual Neurons of the Human Hippocampus-Amygdala Complex. Neuron. 49, 805-813 (2006).

- Rutishauser, U., Ross, I. B., Mamelak, A. N., Schuman, E. M. Human memory strength is predicted by theta-frequency phase-locking of single neurons. Nature. 464, 903-907 (2010).

- Wang, S., et al. Neurons in the human amygdala selective for perceived emotion. Proceedings of the National Academy of Sciences. 111, E3110-E3119 (2014).

- Wang, S., et al. The human amygdala parametrically encodes the intensity of specific facial emotions and their categorical ambiguity. Nature Communications. 8, 14821 (2017).

- Minxha, J., et al. Fixations Gate Species-Specific Responses to Free Viewing of Faces in the Human and Macaque Amygdala. Cell Reports. 18, 878-891 (2017).

- Wang, S., Mamelak, A. N., Adolphs, R., Rutishauser, U. Encoding of Target Detection during Visual Search by Single Neurons in the Human Brain. Current Biology. 28, 2058-2069 (2018).

- Holmqvist, K., et al. Eye tracking: A comprehensive guide to methods and measures. (2011).

- Liversedge, S. P., Findlay, J. M. Saccadic eye movements and cognition. Trends in Cognitive Sciences. 4, 6-14 (2000).

- Rehder, B., Hoffman, A. B. Eyetracking and selective attention in category learning. Cognitive Psychology. 51, 1-41 (2005).

- Blair, M. R., Watson, M. R., Walshe, R. C., Maj, F. Extremely selective attention: Eye-tracking studies of the dynamic allocation of attention to stimulus features in categorization. Journal of Experimental Psychology: Learning, Memory, and Cognition. 35, 1196 (2009).

- Rutishauser, U., Koch, C. Probabilistic modeling of eye movement data during conjunction search via feature-based attention. Journal of Vision. 7 (2007).

- Wang, S., et al. Autism spectrum disorder, but not amygdala lesions, impairs social attention in visual search. Neuropsychologia. 63, 259-274 (2014).

- Wang, S., et al. Atypical Visual Saliency in Autism Spectrum Disorder Quantified through Model-Based Eye Tracking. Neuron. 88, 604-616 (2015).

- Wang, S., Tsuchiya, N., New, J., Hurlemann, R., Adolphs, R. Preferential attention to animals and people is independent of the amygdala. Social Cognitive and Affective Neuroscience. 10, 371-380 (2015).

- Fried, I., MacDonald, K. A., Wilson, C. L. Single Neuron Activity in Human Hippocampus and Amygdala during Recognition of Faces and Objects. Neuron. 18, 753-765 (1997).

- Kreiman, G., Koch, C., Fried, I. Category-specific visual responses of single neurons in the human medial temporal lobe. Nature Neuroscience. 3, 946-953 (2000).

- Squire, L. R., Stark, C. E. L., Clark, R. E. The Medial Temporal Lobe. Annual Review of Neuroscience. 27, 279-306 (2004).

- Chelazzi, L., Miller, E. K., Duncan, J., Desimone, R. A neural basis for visual search in inferior temporal cortex. Nature. 363, 345-347 (1993).

- Schall, J. D., Hanes, D. P. Neural basis of saccade target selection in frontal eye field during visual search. Nature. 366, 467-469 (1993).

- Wolfe, J. M. What Can 1 Million Trials Tell Us About Visual Search? Psychological Science. 9, 33-39 (1998).

- Wolfe, J. M., Horowitz, T. S. What attributes guide the deployment of visual attention and how do they do it? Nature Reviews Neuroscience. 5, 495-501 (2004).

- Sheinberg, D. L., Logothetis, N. K. Noticing Familiar Objects in Real World Scenes: The Role of Temporal Cortical Neurons in Natural Vision. The Journal of Neuroscience. 21, 1340-1350 (2001).

- Bichot, N. P., Rossi, A. F., Desimone, R. Parallel and Serial Neural Mechanisms for Visual Search in Macaque Area V4. Science. 308, 529-534 (2005).

- Rutishauser, U., Schuman, E. M., Mamelak, A. N. Online detection and sorting of extracellularly recorded action potentials in human medial temporal lobe recordings, in vivo. Journal of Neuroscience Methods. 154, 204-224 (2006).