Detecting Pre-Stimulus Source-Level Effects on Object Perception with Magnetoencephalography

Summary

This article describes how to set up an experiment for detecting pre-stimulus source-level influences on object perception using magnetoencephalography (MEG). It covers the stimulus material, experimental design, MEG recording, and data analysis.

Abstract

Pre-stimulus oscillatory brain activity influences upcoming perception. The characteristics of this pre-stimulus activity can predict whether a near-threshold stimulus will be perceived or not, but can they also predict which of two competing stimuli with different perceptual contents is perceived? Ambiguous visual stimuli, which can be seen in only one of two possible ways at a time, are ideally suited to investigate this question. Magnetoencephalography (MEG) is a neurophysiological measurement technique that records the magnetic fields generated by brain activity. The millisecond temporal resolution of MEG allows oscillatory brain states to be characterized from as little as 1 second of recorded data. Presenting an empty screen for about 1 second before the onset of the ambiguous stimulus therefore provides a time window in which one can investigate whether pre-stimulus oscillatory activity biases the content of upcoming perception, as indicated by participants' reports. The spatial resolution of MEG is limited, but sufficient to localize sources of brain activity at the centimeter scale. Source reconstruction of MEG activity then allows for testing hypotheses about the oscillatory activity of specific regions of interest, as well as about the time- and frequency-resolved connectivity between regions of interest. The described protocol enables a better understanding of the influence of spontaneous, ongoing brain activity on visual perception.

Introduction

Brain states preceding stimulus presentation influence both how stimuli are perceived and the neural responses associated with perception1,2,3,4. For example, when a stimulus is presented with an intensity close to perceptual threshold (near-threshold), pre-stimulus neural oscillatory power, phase, and connectivity can influence whether the upcoming stimulus will be perceived or not5,6,7,8,9,10. These pre-stimulus signals could also influence other aspects of perception, such as perceptual object content.

Ambiguous images, which can be interpreted in one of two ways, are ideal for probing object perception11. This is because the subjective content of perception can be either of two objects while the physical stimulus remains unchanged. One can therefore assess the differences in recorded brain signals between trials on which people reported perceiving one versus the other interpretation of the stimulus. Given these reports, one can also investigate whether the brain states prior to stimulus onset differed.

Magnetoencephalography (MEG) is a functional neuroimaging technique that records magnetic fields produced by electrical currents in the brain. While blood-oxygenation level dependent (BOLD) responses resolve at a timescale of seconds, MEG provides millisecond resolution and therefore allows investigating brain mechanisms that occur at very fast timescales. A related advantage of MEG is that it allows characterizing brain states from short periods of recorded data, meaning experimental trials can be shortened such that many trials fit into an experimental session. Further, MEG allows for frequency-domain analyses which can uncover oscillatory activity.

In addition to its high temporal resolution, MEG offers good spatial resolution. With source reconstruction techniques12, one can project sensor-level data to source space. This enables testing hypotheses about the activity of specified regions of interest. Finally, signals in sensor space are highly correlated, so the connectivity between sensors cannot be accurately assessed. Source reconstruction reduces the correlations between source signals and therefore allows connectivity between regions of interest to be assessed13. These connectivity estimates can be resolved in both the time and frequency domains.

Given these advantages, MEG is ideally suited to investigate pre-stimulus effects on object perception in specified regions of interest. In the present report, we illustrate how to design such an experiment and set up MEG acquisition. We also show how to apply source reconstruction and assess oscillatory activity and connectivity.

Protocol

The described protocol follows the guidelines of the human research ethics committee at the University of Salzburg, and is in accordance with the Declaration of Helsinki.

1. Prepare stimulus material

- Download an image of the Rubin face/vase illusion14. This will be shown to half of the participants.

- Use the Matlab logical NOT operator (~) to invert the original black-and-white binary Rubin image. This creates a second, negative Rubin face/vase image in which black and white are flipped with respect to the original (white background instead of black background). This will be shown to the other half of the participants.

- Create a mask by randomly scrambling blocks of pixels of the Rubin image. Divide the image into square blocks that are small enough to hide obvious contour features, for example with sides between 2% and 5% of the side length of the original image (e.g., blocks of 5 by 5 pixels for an image of 250 by 250 pixels). Then randomly shuffle the blocks to create the mask.

- Create a black fixation cross on a white background, such that the fixation cross is smaller than the Rubin image (less than 5° of visual angle).
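The image manipulations in this section can be sketched as follows. This is a minimal Python/NumPy illustration, not the authors' Matlab code. It assumes the Rubin image is already loaded as a binary array (0 = black, 1 = white) whose sides are multiples of the block size. The function names are hypothetical. The 100 cm viewing distance used in the visual-angle helper is an assumed example value, so substitute the distance of your own set-up.

```python
import math
import numpy as np

def invert_binary(img):
    """Swap black (0) and white (1) pixels, like Matlab's ~img on a logical image."""
    return 1 - img

def block_scramble(img, block=5, seed=None):
    """Cut img into block x block tiles and shuffle their positions to build a mask."""
    h, w = img.shape
    if h % block or w % block:
        raise ValueError("image sides must be multiples of the block size")
    rows, cols = h // block, w // block
    # (rows, block, cols, block) -> (rows*cols, block, block): one entry per tile
    tiles = img.reshape(rows, block, cols, block).swapaxes(1, 2).reshape(-1, block, block)
    rng = np.random.default_rng(seed)
    tiles = tiles[rng.permutation(len(tiles))]
    # put the shuffled tiles back into a rows x cols layout
    return tiles.reshape(rows, cols, block, block).swapaxes(1, 2).reshape(h, w)

def size_for_visual_angle(angle_deg, distance_cm):
    """On-screen size (cm) that subtends angle_deg at a viewing distance of distance_cm."""
    return 2 * distance_cm * math.tan(math.radians(angle_deg) / 2)

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    rubin = (rng.random((250, 250)) > 0.5).astype(np.uint8)  # stand-in for the real image
    negative = invert_binary(rubin)
    mask = block_scramble(rubin, block=5, seed=1)
    print(int(negative.sum() + rubin.sum()))                 # 62500: every pixel flipped
    print(round(size_for_visual_angle(5, 100), 2))           # 8.73 cm at an assumed 100 cm
```

Because the mask is built only by moving tiles, it keeps exactly the same black and white pixel counts as the Rubin image. Only the spatial arrangement, and with it the contours, is destroyed.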

2. Set up MEG and stimulation equipment

- Connect the stimulus presentation computer to the DLP LED projector controller via an optoisolated USB extension (for data) and a digital visual interface (DVI) cable (for stimuli).

- Connect the MEG acquisition computer to the stimulus presentation computer to let it send and receive triggers. Plug the digital input/output (DIO) system (buttons and triggers, 2x standard D24 connectors) of the integrated stimulus presentation system into the MEG connector on the optoisolated BNC breakout box.

- Record 1 minute of empty room MEG data at 1 kHz.

- Monitor the signals from the 102 magnetometers and 204 orthogonally placed planar gradiometers at 102 different positions by visualizing all signals in real time on the acquisition computer.

3. Prepare participant for MEG experiment

NOTE: Details of MEG data acquisition have been previously described15.

- Make sure the participant understands the informed consent form, in accordance with the Declaration of Helsinki, and have them sign it. The form also includes a statement of consent to the processing of personal data.

- Provide the participant with non-magnetic clothes and make sure they have no metallic objects in or on their body. Ask the participant to fill out an additional anonymous questionnaire. It should confirm this, confirm that they meet no other exclusion criteria such as neurological disorders, and document other personal data such as handedness and how well rested they are.

- Seat the participant on a non-ferromagnetic (wooden) chair. Attach 5 head position indicator (HPI) coils to the head with adhesive plaster, two above one eye, one above the other eye, and one behind each ear.

- Place the tracker sensor of the digitization system firmly on the participant's head, attaching it to a pair of spectacles for maximum stability.

NOTE: A 3D digitizer was used (Table of Materials).

- Digitize the anatomical landmarks (the left and right pre-auricular points and the nasion), and make sure the left and right pre-auricular points are symmetrical. These fiducials define the 3D coordinate frame.

- Digitize the 5 HPI coil positions using a 3D digitizer stylus.

- Digitize up to 300 points along the scalp, maximizing coverage of the head shape. Cover scalp areas that are well defined on magnetic resonance (MR) images, such as the area above the inion at the back and the nasion at the front, as well as the nasal bridge.

NOTE: These points will be used for the co-registration with an anatomical image for better individual source reconstruction.

- Remove the spectacles with the tracker sensor.

- Attach disposable electrodes above (superciliary arch) and below (medial to the zygomatic maxillary bone) the right eye to monitor vertical eye movements.

- Attach disposable electrodes to the left of the left eye and to the right of the right eye (dorsal to the zygomatic maxillary bone) to monitor horizontal eye movements.

- Attach disposable electrodes below the heart and below the right collarbone to monitor heart rate.

NOTE: The eye and heart signals are relatively robust, so checking the impedance of the disposable electrodes is not necessary.

- Attach a disposable electrode below the neck as a ground.

- Escort the participant to the MEG shielded room and instruct them to sit in the MEG chair.

- Plug the HPI wiring harness and the disposable electrodes into the MEG system.

- Raise the chair such that the participant’s head touches the top of the MEG helmet and make sure the participant is comfortable in this position.

- Shut the door to the shielded room and communicate with the participant through the intercom system inside and outside the shielded room.

- Instruct the participant to passively stare at an empty screen (empty except for a central fixation cross) for 5 min while recording resting-state MEG data at 1 kHz. Keep the sampling rate at 1 kHz throughout the experiment.

- Instruct the participant on the task requirements and have them perform 20 practice trials.

NOTE: Example instructions: "Keep your eyes fixed on the center of the screen at all times. A cross will appear, and after the cross disappears, you will see an image followed by a scrambled image. As soon as the scrambled image disappears, click the yellow button if you saw faces and the green button if you saw a vase."

- Alternate the response buttons across participants (e.g., right for faces and left for vase, or vice versa).

NOTE: The color of response buttons does not matter.

4. Present the experiment using Psychtoolbox16

- Display instructions to the participants, telling them which button to press when they see faces and which button to press when they see a vase.

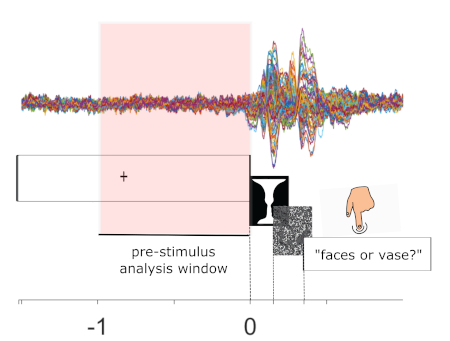

- Create a single trial with 4 events which will apply to all trials in this order: fixation cross, Rubin image, mask, and response prompt (Figure 1).

- At the beginning of each trial, display the fixation cross for a variable time period between 1 s and 1.8 s.

- At the end of that time period, remove the fixation cross and display the Rubin image for 150 ms.

- At the end of the 150 ms, remove the Rubin image and display the mask for 200 ms.

- At the end of the 200 ms, remove the mask and display a question prompting participants to respond, with a response deadline of 2 s.

- Program the response period such that if participants respond within 2 s, the next trial (starting with a fixation cross) begins when they do so. Otherwise, start the next trial after 2 s.

- Save the timing of all 4 events as well as the response choice and its timing.

- Repeat the same trial structure 100 times before displaying an instruction for participants to rest briefly. This constitutes one experimental block.

- Repeat the block structure 4 times for a total of 400 trials.
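The trial and block structure above can be laid out in advance as a schedule. The following Python sketch does this without Psychtoolbox. It assumes a 120 Hz display refresh rate, which is hypothetical, so use the rate your projector actually reports. It converts each fixed duration to a whole number of frames, because a display can only present durations in multiples of its frame period. The dictionary field names are illustrative only.

```python
import random

REFRESH_HZ = 120          # assumed refresh rate; replace with your display's value
N_BLOCKS = 4
TRIALS_PER_BLOCK = 100

def to_frames(seconds, refresh_hz=REFRESH_HZ):
    """Nearest whole number of video frames for a duration in seconds."""
    return round(seconds * refresh_hz)

def make_schedule(seed=0):
    """One dict per trial: jittered fixation, 150 ms stimulus, 200 ms mask, 2 s deadline."""
    rng = random.Random(seed)
    schedule = []
    for block in range(1, N_BLOCKS + 1):
        for trial in range(1, TRIALS_PER_BLOCK + 1):
            schedule.append({
                "block": block,
                "trial": trial,
                "fixation_frames": to_frames(rng.uniform(1.0, 1.8)),  # 1-1.8 s jitter
                "stimulus_frames": to_frames(0.150),                  # Rubin image
                "mask_frames": to_frames(0.200),                      # scrambled mask
                "response_deadline_s": 2.0,
            })
    return schedule

if __name__ == "__main__":
    trials = make_schedule()
    print(len(trials), trials[0])
```

With these durations, a trial lasts at most 1.8 + 0.15 + 0.2 + 2 = 4.15 s. The 400 trials therefore take at most 1660 s (about 28 min), excluding the rest breaks between blocks.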

5. Monitor MEG signal and participant during experiment

- Monitor the participant via video.

- At the beginning of each block, before the task starts, start measuring MEG data and record the participant's initial head position with respect to the MEG sensors. In the MEG system used, click GO to start. When a dialog asks whether the HPI data should be omitted or added to the recording, inspect the HPI coil signals and click Accept to record the initial head position. Then click Record raw to start recording MEG data.

- If at any point throughout the experiment the participant wishes to stop the experiment, terminate the experiment and go inside the shielded room to unplug all sensors from the MEG system and release the participant from the chair.

- Monitor the MEG signals by visualizing them in real time on the acquisition computer.

- In between blocks, communicate with the participant through the speaker system to make sure they are well and ready to continue, and instruct them to move their limbs if they wish, but not their head.

- In between blocks, save the acquired MEG signals of that block.

- After the end of the experiment, go inside the shielded room, unplug all sensors from the MEG system, and release the participant from the chair.

- Escort the participant out of the shielded room and offer them the choice to either detach all sensors from their face and body themselves, or detach the sensors for them.

- Thank the participant and provide them with monetary compensation.

6. Pre-process and segment MEG signals

- Use the signal space separation algorithm implemented in the Maxfilter program (provided by the MEG manufacturer) with default parameter values to remove external noise from the continuous MEG signals.

- Apply a 0.1 Hz high-pass filter to the continuous data using the Fieldtrip toolbox17 function ft_preprocessing.

NOTE: All subsequently reported functions prefixed with 'ft_' are part of the Fieldtrip toolbox.

- Segment the MEG data by extracting the 1 second preceding the stimulus presentation on each trial.

- Assign these epochs a 'face' or 'vase' trial type label according to the participants' behavioral responses on each trial.

- Visually inspect trials and channels with ft_rejectvisual to identify and remove those showing excessive noise or artifacts, regardless of the nature of the artifacts.

- In ft_rejectvisual, click zscore and reject the trials and channels whose z-scores exceed 3, and/or click var and reject outlying trials with excessive variance. Inspect the MEG signals of all trials before or after this procedure.
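The segmentation and the z-score criterion can be illustrated with a simplified NumPy sketch. It is not Fieldtrip's ft_rejectvisual. Here, each trial's variance is z-scored across trials separately for every channel, and a trial is flagged if any channel exceeds the threshold. This is one plausible reading of the "z above 3" rule. The sketch assumes continuous data as a channels x samples array sampled at 1 kHz, with stimulus onsets given as sample indices. Both function names are hypothetical.

```python
import numpy as np

FS = 1000        # sampling rate in Hz, as recorded in this protocol
PRE_S = 1.0      # length of the pre-stimulus window in s

def epoch_prestimulus(data, onsets, fs=FS, pre_s=PRE_S):
    """Cut continuous data (channels x samples) into trials x channels x samples,
    each trial covering the pre_s seconds immediately before a stimulus onset sample."""
    n = int(round(pre_s * fs))
    onsets = np.asarray(onsets)
    if np.any(onsets < n) or np.any(onsets > data.shape[1]):
        raise ValueError("a pre-stimulus window falls outside the recording")
    return np.stack([data[:, o - n:o] for o in onsets])

def flag_outlier_trials(epochs, z_thresh=3.0):
    """True for trials whose variance, z-scored across trials separately for
    each channel, exceeds z_thresh in at least one channel (simplified rule)."""
    var = epochs.var(axis=2)                            # trials x channels
    z = (var - var.mean(axis=0)) / var.std(axis=0)
    return np.any(z > z_thresh, axis=1)

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    data = rng.standard_normal((10, 120_000))           # 2 min of noise, 10 channels
    onsets = np.arange(3000, 118_000, 2500)             # hypothetical onset samples
    epochs = epoch_prestimulus(data, onsets)
    keep = ~flag_outlier_trials(epochs)
    print(epochs.shape, int(keep.sum()))
```

At 1 kHz, each 1 s pre-stimulus window is exactly 1000 samples, ending at the sample just before stimulus onset.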

7. Source reconstruction

- Include both trial types in the source localization, so that the beamforming procedure implemented in Fieldtrip yields common linearly constrained minimum variance (LCMV)12 spatial filters.

- Band-pass filter the epoched data to the frequencies of interest, in this case between 1 and 40 Hz.

- Select the time of interest to calculate the covariance matrix, in this case the 1 second prestimulus period.

NOTE: The resulting data segments (-1 to 0 s, 1 to 40 Hz) are used in all following steps that require data input.

- Segment the brain and the scalp out of individual structural MR images with ft_volumesegment. If these are not available, use a standard Montreal Neurological Institute (MNI, Montreal, Quebec, Canada) T1 template brain from the Statistical Parametric Mapping (SPM) toolbox instead.

- Create for each participant a realistic single-shell head model using ft_prepare_headmodel.

- On individual MR images, locate the fiducial landmarks by clicking on their location on the image to initiate a coarse co-registration with ft_volumerealign.

- Align the head shape points with the scalp for a finer co-registration.

- Prepare an individual 3D grid at 1.5 cm resolution based on the MNI template brain morphed into the brain volume of each participant with ft_prepare_sourcemodel.

- Compute the forward model for the MEG channels and the grid locations with ft_prepare_leadfield. Use the configuration fixedori to compute the leadfield for only one optimal dipole orientation.

- Calculate the covariance matrix of each trial and average it across all trials.

- Compute the spatial filters using the forward model and the average covariance matrix with ft_sourceanalysis.

- Multiply the sensor-level signals by the LCMV filters to obtain the time series for each source location in the grid and each trial.
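The core computation of the beamforming steps can be written out explicitly. For a fixed-orientation source with lead field vector l and data covariance C, the LCMV filter is w = C^-1 l / (l^T C^-1 l). This filter passes activity from that location with unit gain while minimizing the output variance. The NumPy sketch below computes these filters for all grid points and applies them to single trials to obtain virtual sensors. It is an illustration, not Fieldtrip's ft_sourceanalysis. Two elements are assumptions: the regularization, a small fraction of the mean sensor variance added to the diagonal of C, and the random lead fields, which stand in for those computed with ft_prepare_leadfield.

```python
import numpy as np

def average_covariance(trials):
    """Mean of the per-trial channel covariance matrices (trials x channels x samples)."""
    return np.mean([np.cov(trial) for trial in trials], axis=0)

def lcmv_weights(leadfield, cov, reg=0.05):
    """Fixed-orientation LCMV filters.

    leadfield: channels x sources, one column per grid point
    cov:       channels x channels data covariance
    reg:       diagonal loading as a fraction of the mean sensor variance (assumed value)
    returns:   sources x channels filter matrix
    """
    n_chan = cov.shape[0]
    c = cov + reg * np.trace(cov) / n_chan * np.eye(n_chan)
    cinv_l = np.linalg.solve(c, leadfield)               # C^-1 l for every source
    gain = np.einsum("cs,cs->s", leadfield, cinv_l)      # l^T C^-1 l for every source
    return (cinv_l / gain).T

def virtual_sensors(weights, trials):
    """Project trials x channels x samples onto sources: trials x sources x samples."""
    return np.einsum("sc,tcn->tsn", weights, trials)

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    trials = rng.standard_normal((30, 20, 1000))   # 30 trials, 20 channels, 1 s at 1 kHz
    leadfield = rng.standard_normal((20, 5))       # stand-in for a real forward model
    w = lcmv_weights(leadfield, average_covariance(trials))
    print(virtual_sensors(w, trials).shape)        # (30, 5, 1000)
```

Using a single covariance averaged over all trials of both types gives one common filter per grid point. Any face/vase difference in the virtual sensors therefore cannot stem from differences between the filters.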

8. Analyze pre-stimulus oscillatory power in region of interest

- Define a region of interest (ROI), for example from previous literature18 (here fusiform face area [FFA]; MNI coordinates: [28 -64 -4] mm).

- Single out the virtual sensor that spatially corresponds to the ROI, using ft_selectdata.

- Split face and vase trials using ft_selectdata.

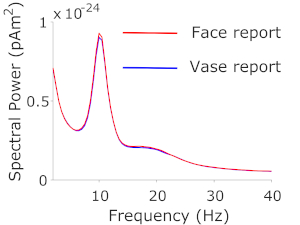

- Perform a frequency analysis on the ROI, separately on the data from the two trial types, using ft_freqanalysis.

- Set the method option to mtmfft to perform a fast Fourier transform.

- Set the taper option to hanning to use a Hann function taper.

- Define the frequencies of interest from 1 Hz to 40 Hz.

- Set the output option to pow to extract power values from the complex Fourier spectra.

- Repeat the procedure for each participant before averaging the spectra across participants and plotting the resulting grand-averaged power values as a function of the frequencies of interest.
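Two steps of this section can be made concrete in NumPy: picking the grid point closest to the ROI coordinate, and computing a Hann-tapered FFT power spectrum averaged over trials. This is an illustration under stated assumptions, not ft_selectdata or ft_freqanalysis. The grid positions are assumed to be in MNI millimeter coordinates, and the power normalization is one common convention that may differ from Fieldtrip's scaling. The function names are hypothetical. With 1 s of data at 1 kHz, the frequency resolution is 1 Hz, so the range 1-40 Hz yields 40 frequency bins.

```python
import numpy as np

def nearest_grid_point(grid_pos_mm, roi_mm):
    """Index of the source grid position (N x 3, mm) closest to an ROI coordinate."""
    dist = np.linalg.norm(np.asarray(grid_pos_mm) - np.asarray(roi_mm), axis=1)
    return int(np.argmin(dist))

def hann_power(trials, fs=1000.0, fmin=1.0, fmax=40.0):
    """Trial-averaged power of one virtual sensor (trials x samples) between fmin and fmax."""
    n = trials.shape[-1]
    taper = np.hanning(n)
    spectra = np.fft.rfft(trials * taper, axis=-1)
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    power = np.mean(np.abs(spectra) ** 2, axis=0) / np.sum(taper ** 2)
    keep = (freqs >= fmin) & (freqs <= fmax)
    return freqs[keep], power[keep]

if __name__ == "__main__":
    # hypothetical 1.5 cm grid in MNI space; pick the point nearest to FFA [28 -64 -4]
    coords = np.arange(-90, 91, 15)
    grid = np.array(np.meshgrid(coords, coords, coords, indexing="ij")).reshape(3, -1).T
    print(grid[nearest_grid_point(grid, [28, -64, -4])])   # [ 30 -60   0]
    # simulated 1 s pre-stimulus trials containing a 10 Hz (alpha) rhythm plus noise
    rng = np.random.default_rng(0)
    t = np.arange(1000) / 1000.0
    trials = np.sin(2 * np.pi * 10 * t + rng.uniform(0, 2 * np.pi, (50, 1)))
    trials += 0.5 * rng.standard_normal(trials.shape)
    freqs, power = hann_power(trials)
    print(freqs[np.argmax(power)])                          # 10.0
```

Running this per condition (face, vase) and per participant gives the spectra that are then averaged across participants.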

9. Analyze pre-stimulus connectivity between regions of interest

- Define one (or more) ROI with which the previously selected ROI is hypothesized to be connected, for example from previous literature18 (here V1; MNI coordinates: [12 -88 0] mm).

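- Repeat steps 8.2 and 8.3 (selecting the virtual sensors that correspond to the ROIs and splitting face and vase trials with ft_selectdata).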

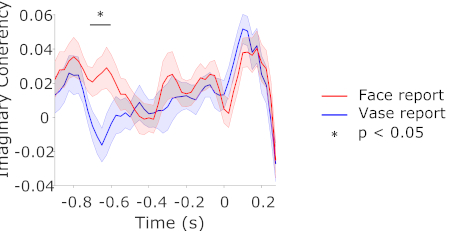

- Perform time-frequency analysis on both ROIs (represented as 2 channels or 'virtual sensors' within the same data structure), separately on the data from the two trial types, using ft_freqanalysis.

- Set the method to mtmconvol to implement a multitaper time-frequency transformation based on multiplication in the frequency domain.

- Set the taper option to dpss to use discrete prolate spheroidal sequence (Slepian) tapers.

- Define the frequencies of interest from 8 Hz to 13 Hz.

- Set the width of the time window to 200 ms and the smoothing parameter to 4 Hz.

- Set the keeptrials option to yes to return the time-frequency estimates of the single trials.

- Set the output to fourier to return the complex Fourier spectra.

- Perform a connectivity analysis on the resulting time-frequency data using ft_connectivityanalysis.

- Set the method to coh and the complex field to imag to return the imaginary part of coherency19.

- Repeat the procedure for each participant before averaging the coherence spectra across frequencies and participants and plotting the resulting grand-averaged imaginary coherency values as a function of time.
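The connectivity measure can be written out directly. The coherency between two signals is their trial-averaged cross-spectrum normalized by the two auto-spectra. Its imaginary part is insensitive to zero-lag coupling, such as that produced by volume conduction. The NumPy sketch below computes it from complex Fourier coefficients stored with trials on the first axis, as when single trials are kept. It is an illustration, not ft_connectivityanalysis, and the function name is hypothetical. The sign of the result depends on which of the two signals is conjugated.

```python
import numpy as np

def imaginary_coherency(fx, fy):
    """Imaginary part of coherency between two virtual sensors.

    fx, fy: complex Fourier coefficients with trials on axis 0
            (e.g. trials x frequencies x time points for V1 and FFA)
    returns: array with the trial axis removed
    """
    cross = np.mean(fx * np.conj(fy), axis=0)
    auto_x = np.mean(np.abs(fx) ** 2, axis=0)
    auto_y = np.mean(np.abs(fy) ** 2, axis=0)
    return np.imag(cross / np.sqrt(auto_x * auto_y))

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    shape = (200, 6, 50)                        # trials x frequencies (8-13 Hz) x time points
    v1 = rng.standard_normal(shape) + 1j * rng.standard_normal(shape)
    leak = 0.8 * v1                             # zero-lag copy, as produced by volume conduction
    lagged = v1 * np.exp(-1j * np.pi / 2)       # consistent quarter-cycle phase lag
    print(np.abs(imaginary_coherency(v1, leak)).max())        # close to 0
    print(imaginary_coherency(v1, lagged).mean(axis=0)[:3])   # close to 1 after averaging over frequencies
```

Averaging the returned array over its frequency axis gives one imaginary-coherency time course per condition and participant, which is the quantity compared between face and vase trials.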

10. Statistically comparing the face and vase pre-stimulus power or coherence spectra

- Combine the pre-stimulus power or coherence data from each subject, within each of the 2 conditions, into one Matlab variable using ft_freqgrandaverage with the option keepindividual set to yes.

- Perform a cluster-based permutation test20 comparing the 2 resulting variables using ft_freqstatistics.

- Set the method option to montecarlo.

- Set the frequency option to [8 13] and set avgoverfreq to yes.

- Set clusteralpha to 0.05 and set correcttail to alpha.

- Set the statistic option to ft_statfun_depsamplesT.

- Create a design matrix with a first row of 20 ones followed by 20 twos, and a second row of consecutive numbers from 1 to 20 repeated twice. Pass this design matrix to the design option.

NOTE: The design matrix is divided into blocks of 20 because the data were collected from 20 participants.

- Set the ivar option to 1 and the uvar option to 2.
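The logic of the dependent-samples cluster-based permutation test can be sketched in NumPy. This is a simplified illustration, not ft_freqstatistics, and the function names are hypothetical. The sketch works as follows:

- It clusters over time points only, after averaging over 8-13 Hz.
- It sums the t values within each cluster to obtain the cluster statistic.
- It builds the null distribution by randomly swapping the face and vase labels within each participant, which is a sign flip of the paired difference.
- It tests two-sided by comparing each observed cluster's absolute sum with the largest absolute cluster sum of every permutation.

The cluster-forming threshold of 2.093 is the two-tailed critical t value for alpha = 0.05 with 19 degrees of freedom (20 participants). The design matrix rows follow the ivar (row 1) and uvar (row 2) convention described above.

```python
import numpy as np

def design_matrix(n_subjects=20):
    """Row 1: condition (1 = face, 2 = vase); row 2: subject number 1..n_subjects."""
    return np.vstack([np.repeat([1, 2], n_subjects),
                      np.tile(np.arange(1, n_subjects + 1), 2)])

def paired_t(diff):
    """Dependent-samples t over subjects (axis 0) at every time point."""
    return diff.mean(axis=0) / (diff.std(axis=0, ddof=1) / np.sqrt(diff.shape[0]))

def cluster_sums(t, thresh):
    """Sum of t over each run of adjacent points with |t| > thresh and the same sign."""
    sums, current, sign = [], 0.0, 0
    for value in t:
        s = int(np.sign(value)) if abs(value) > thresh else 0
        if s != 0 and s == sign:
            current += value              # extend the running cluster
            continue
        if sign != 0:
            sums.append(current)          # close the previous cluster
        current, sign = (value, s) if s != 0 else (0.0, 0)
    if sign != 0:
        sums.append(current)
    return sums

def cluster_permutation_test(face, vase, thresh=2.093, n_perm=1000, seed=0):
    """face, vase: subjects x time points. Returns observed cluster sums and p-values."""
    rng = np.random.default_rng(seed)
    diff = face - vase
    observed = cluster_sums(paired_t(diff), thresh)
    null_max = np.empty(n_perm)
    for i in range(n_perm):
        flips = rng.choice([-1.0, 1.0], size=(diff.shape[0], 1))  # swap labels per subject
        sums = cluster_sums(paired_t(diff * flips), thresh)
        null_max[i] = max((abs(s) for s in sums), default=0.0)
    pvals = [(np.sum(null_max >= abs(s)) + 1) / (n_perm + 1) for s in observed]
    return observed, pvals

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    vase = rng.standard_normal((20, 100))     # 20 subjects x 100 time points
    face = rng.standard_normal((20, 100))
    face[:, 30:50] += 2.0                     # simulated effect in one time window
    print(design_matrix()[:, [0, 19, 20, 39]])
    print(cluster_permutation_test(face, vase, n_perm=500))
```

Comparing each observed cluster with the maximum cluster statistic of every permutation controls the family-wise error rate across all time points. This is the key idea of the cluster-based approach.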

Representative Results

We presented the Rubin face/vase illusion to participants briefly and repeatedly and asked participants to report their percept (face or vase?) following each trial (Figure 1). Each trial was preceded by at least 1 s of a blank screen (with fixation cross); this was the pre-stimulus interval of interest.

We asked whether pre-stimulus oscillatory power in regions of interest or pre-stimulus connectivity between regions of interest influenced the perceptual report of the upcoming ambiguous stimulus. Therefore, as a first step, we projected our data to source space such that we could extract signals from the relevant ROIs.

Based on previous literature investigating face and object perception with both ambiguous21 and unambiguous22 stimuli, we determined the FFA to be our ROI. We subsequently analyzed the low-frequency (1-40 Hz) spectral components of the FFA source signal and contrasted the spectral estimates from trials reported as 'face' with those from trials reported as 'vase'. A cluster-based permutation test, clustering over the frequencies 1-40 Hz, contrasting spectral power on trials where people reported face vs vase, revealed no significant differences between the 2 trial types. Nevertheless, descriptively, the power spectra showed the expected oscillatory alpha band peak in the range of 8-13 Hz, and to a lesser extent beta band activity in the range of 13-25 Hz (Figure 2).

Having found no differences in pre-stimulus spectral power, we next investigated whether there were differences in pre-stimulus connectivity between the trial types. In addition to FFA, we determined V1 to be our second ROI due to its ubiquitous involvement in vision. Based on the results of the power analysis, we determined the frequencies 8-13 Hz to be our frequencies of interest. We calculated the time- and frequency-resolved imaginary part of coherency between our two ROIs, separately for face and vase trials, and averaged the result across the frequencies of interest. This measure reflects synchrony of oscillatory phase amongst brain regions and conservatively controls against volume conduction effects in MEG reconstructed sources19, so it was the method of choice for assessing functional coupling. A cluster-based permutation test, clustering over the time-points -1 to 0 s, contrasting imaginary coherency between V1 and FFA on trials where people reported face vs vase, revealed that face trials had stronger pre-stimulus connectivity compared to vase trials, around 700 ms prior to stimulus onset (Figure 3).

Figure 1: Example trial structure and raw data. Bottom panel: A trial starts with the display of a fixation cross. After 1 to 1.8 s, the Rubin stimulus appears for 150 ms followed by a mask for 200 ms. A response screen then appears to prompt participants to respond with 'face' or 'vase'. Top panel: Multi-channel raw data from an example participant, time-locked to stimulus onset and averaged across trials. This is a schematic to highlight the data in the pre-stimulus analysis window (-1 s to 0 s; highlighted in pink), which will be the target interval for analysis.

Figure 2: Spectral power in FFA. Spectral power estimates from source-localized FFA signals on face and vase trials.

Figure 3: Connectivity between V1 and FFA. Imaginary part of coherency between source-localized V1 and FFA signals on face and vase trials, in the frequency range of 8-13 Hz. Shaded regions represent the standard error of the mean for within-subjects designs23.

Discussion

Presenting a single stimulus that can be interpreted as more than one object over time, but as only one object at any given time, allows for investigating pre-stimulus effects on object perception. In this way, one can relate pre-stimulus brain states to subjective reports of the perceived objects. In a laboratory setting, ambiguous images that can be interpreted in one of two ways, such as the Rubin face/vase illusion, provide an optimal case. They allow for straightforward contrasts of brain activity between two trial types: those perceived one way (e.g., 'face') and those perceived the other way (e.g., 'vase').

Presenting these stimuli briefly (<200 ms) ensures that people see and subsequently report only one of the two possible interpretations of the stimulus on a given trial. Counterbalancing the black vase/white faces and white vase/black faces versions of the stimulus across participants (showing each version to half of the participants) reduces the influence of low-level stimulus features on the subsequent analysis. Presenting a mask immediately after the stimulus prevents after-images from forming and biasing participants' responses. Because the post-stimulus period is not analyzed, the low-frequency features of the stimulus and mask do not need to be matched. Finally, alternating the response buttons across participants (e.g., left for vase and right for face, or vice versa) prevents activity related to motor preparation from factoring into the contrasts.

Given the millisecond resolution of MEG, a pre-stimulus interval as short as 1 s is sufficient to estimate measures such as spectral power and connectivity. Because each resulting trial is short, a large number of trials can be accommodated in an experimental session, ensuring a high signal-to-noise ratio when averaging MEG signals across trials.

Specific category-sensitive regions of interest have been shown to be active during object perception24,25. For example, FFA is widely reported to be involved in face perception22. To investigate the effects of activity stemming from specific sources, one can source-reconstruct MEG data; to investigate connectivity between sources, source reconstruction is necessary. To facilitate source data analysis, single-trial source-level data can be represented by 'virtual sensors'. Representing the data in this way enables one to analyze single-trial source data in exactly the same way as sensor data, using the same analysis functions (for example, those of the Fieldtrip toolbox). This in turn enables testing hypotheses about the activity of specified regions of interest in a straightforward manner.

While pre-stimulus oscillatory power has been shown to influence stimulus detection near perceptual threshold (perceived vs not perceived), whether it influences the content of what is seen is less well known. Here, we contrasted pre-stimulus oscillatory power in FFA between trials on which people reported face vs vase and found no statistically significant differences. We subsequently tested whether the connectivity between V1 and FFA influences the upcoming perceptual report. We found that face trials were preceded by enhanced connectivity between V1 and FFA in the alpha frequency range around 700 ms prior to stimulus onset. Finding no effect in alpha power, but rather in alpha-band connectivity, suggests that pre-stimulus alpha power might influence stimulus detection7,8 without necessarily influencing object categorization. Our results therefore show that simply analyzing oscillatory power in regions of interest is not sufficient for a complete understanding of the oscillatory dynamics preceding object perception and their influence on it. Rather, connectivity between regions of interest must be taken into account, as ongoing fluctuations in the strength of these connections can bias subsequent perception18. Finally, despite the limited spatial resolution of MEG, our protocol demonstrates that one can clearly identify regions of interest and investigate their relationships. MEG has an advantage over electroencephalography (EEG) because it offers superior spatial resolution, and over functional MRI (fMRI) because it offers superior temporal resolution. Therefore, MEG combined with source reconstruction is ideally suited to investigate fast and localized neural processes.

Disclosures

The authors have nothing to disclose.

Acknowledgements

This work was supported by FWF Austrian Science Fund, Imaging the Mind: Connectivity and Higher Cognitive Function, W 1233-G17 (to E.R.) and European Research Council Grant WIN2CON, ERC StG 283404 (to N.W.). The authors would like to acknowledge the support of Nadia Müller-Voggel, Nicholas Peatfield, and Manfred Seifter for contributions to this protocol.

Materials

| Name of Material/Equipment | Company | Catalog Number | Comments |
|---|---|---|---|
| Data analysis software | Elekta Oy, Stockholm, SWEDEN | NM23321N | Elekta standard data analysis software including MaxFilter release 2.2 |
| Data analysis workstation | Elekta Oy, Stockholm, SWEDEN | NM20998N | MEG recording PC and software |
| Head position coil kit | Elekta Oy, Stockholm, SWEDEN | NM23880N | 5 Head Position Indicator (HPI) coils |
| Neuromag TRIUX | Elekta Oy, Stockholm, SWEDEN | NM23900N | 306-channel magnetoencephalograph system |
| Polhemus Fastrak 3D | Polhemus, VT, USA | | 3D head digitization system |
| PROPixx | VPixx Technologies Inc., QC, CANADA | VPX-PRO-5001C | Projector and data acquisition system |
| RESPONSEPixx | VPixx Technologies Inc., QC, CANADA | VPX-ACC-4910 | MEG-compatible response collection handheld control pad system |
| Screen | VPixx Technologies Inc., QC, CANADA | VPX-ACC-5180 | MEG-compatible rear projection screen with frame and stand |
| VacuumSchmelze AK-3 | VacuumSchmelze GmbH & Co. KG, Hanau, GERMANY | NM23122N | Two-layer magnetically-shielded room |

| Software | Source | Version | Website |
|---|---|---|---|
| Fieldtrip | Open Source | FTP-181005 | fieldtriptoolbox.org |
| Matlab | MathWorks, MA, USA | R2018b | mathworks.com/products/matlab |
| Psychophysics Toolbox | Open Source | PTB-3.0.13 | psychtoolbox.org |

References

- Arieli, A., Sterkin, A., Grinvald, A., Aertsen, A. Dynamics of ongoing activity: explanation of the large variability in evoked cortical responses. Science. 273 (5283), 1868-1871 (1996).

- Boly, M., et al. Baseline brain activity fluctuations predict somatosensory perception in humans. Proceedings of the National Academy of Sciences of the United States of America. 104 (29), 12187-12192 (2007).

- Rahn, E., Başar, E. Prestimulus EEG-activity strongly influences the auditory evoked vertex response: a new method for selective averaging. The International Journal of Neuroscience. 69 (1-4), 207-220 (1993).

- Supèr, H., van der Togt, C., Spekreijse, H., Lamme, V. A. F. Internal state of monkey primary visual cortex (V1) predicts figure-ground perception. The Journal of Neuroscience: The Official Journal of the Society for Neuroscience. 23 (8), 3407-3414 (2003).

- Frey, J. N., et al. The Tactile Window to Consciousness is Characterized by Frequency-Specific Integration and Segregation of the Primary Somatosensory Cortex. Scientific Reports. 6, 20805 (2016).

- Leonardelli, E., et al. Prestimulus oscillatory alpha power and connectivity patterns predispose perceptual integration of an audio and a tactile stimulus. Human Brain Mapping. 36 (9), 3486-3498 (2015).

- Leske, S., et al. Prestimulus Network Integration of Auditory Cortex Predisposes Near-Threshold Perception Independently of Local Excitability. Cerebral Cortex. 25 (12), 4898-4907 (2015).

- Weisz, N., et al. Prestimulus oscillatory power and connectivity patterns predispose conscious somatosensory perception. Proceedings of the National Academy of Sciences of the United States of America. 111 (4), 417-425 (2014).

- Busch, N. A., Dubois, J., VanRullen, R. The phase of ongoing EEG oscillations predicts visual perception. The Journal of Neuroscience: The Official Journal of the Society for Neuroscience. 29 (24), 7869-7876 (2009).

- Mathewson, K. E., Gratton, G., Fabiani, M., Beck, D. M., Ro, T. To see or not to see: prestimulus alpha phase predicts visual awareness. The Journal of Neuroscience: The Official Journal of the Society for Neuroscience. 29 (9), 2725-2732 (2009).

- Blake, R., Logothetis, N. K. Visual competition. Nature Reviews Neuroscience. 3 (1), 13-21 (2002).

- Van Veen, B. D., van Drongelen, W., Yuchtman, M., Suzuki, A. Localization of brain electrical activity via linearly constrained minimum variance spatial filtering. IEEE Transactions on Biomedical Engineering. 44 (9), 867-880 (1997).

- Bastos, A. M., Schoffelen, J.-M. A Tutorial Review of Functional Connectivity Analysis Methods and Their Interpretational Pitfalls. Frontiers in Systems Neuroscience. 9, 175 (2015).

- Rubin, E. Synsoplevede Figurer. (1915).

- Balderston, N. L., Schultz, D. H., Baillet, S., Helmstetter, F. J. How to detect amygdala activity with magnetoencephalography using source imaging. Journal of Visualized Experiments. (76), e50212 (2013).

- Kleiner, M., et al. What’s new in psychtoolbox-3. Perception. 36 (14), 1-16 (2007).

- Oostenveld, R., Fries, P., Maris, E., Schoffelen, J.-M. FieldTrip: Open source software for advanced analysis of MEG, EEG, and invasive electrophysiological data. Computational Intelligence and Neuroscience. 2011, 156869 (2011).

- Rassi, E., Wutz, A., Mueller-Voggel, N., Weisz, N. Pre-stimulus feedback connectivity biases the content of visual experiences. bioRxiv. (2019).

- Nolte, G., et al. Identifying true brain interaction from EEG data using the imaginary part of coherency. Clinical Neurophysiology: Official Journal of the International Federation of Clinical Neurophysiology. 115 (10), 2292-2307 (2004).

- Maris, E., Oostenveld, R. Nonparametric statistical testing of EEG- and MEG-data. Journal of Neuroscience Methods. 164 (1), 177-190 (2007).

- Hesselmann, G., Kell, C. A., Eger, E., Kleinschmidt, A. Spontaneous local variations in ongoing neural activity bias perceptual decisions. Proceedings of the National Academy of Sciences of the United States of America. 105 (31), 10984-10989 (2008).

- Kanwisher, N., McDermott, J., Chun, M. M. The fusiform face area: a module in human extrastriate cortex specialized for face perception. The Journal of Neuroscience: The Official Journal of the Society for Neuroscience. 17 (11), 4302-4311 (1997).

- Morey, R. D. Confidence Intervals from Normalized Data: A correction to Cousineau. Tutorials in Quantitative Methods for Psychology. 4 (2), 61-64 (2008).

- Miller, E. K., Nieder, A., Freedman, D. J., Wallis, J. D. Neural correlates of categories and concepts. Current Opinion in Neurobiology. 13 (2), 198-203 (2003).

- Kreiman, G., Koch, C., Fried, I. Category-specific visual responses of single neurons in the human medial temporal lobe. Nature Neuroscience. 3 (9), 946-953 (2000).