Using Virtual Reality to Transfer Motor Skill Knowledge from One Hand to Another

Summary

We describe a novel virtual-reality-based setup which exploits voluntary control of one hand to improve motor-skill performance in the other (non-trained) hand. This is achieved by providing real-time movement-based sensory feedback as if the non-trained hand were moving. This new approach may be used to enhance rehabilitation of patients with unilateral hemiparesis.

Abstract

As far as acquiring motor skills is concerned, training by voluntary physical movement is superior to all other forms of training (e.g. training by observation or passive movement of trainee's hands by a robotic device). This obviously presents a major challenge in the rehabilitation of a paretic limb since voluntary control of physical movement is limited. Here, we describe a novel training scheme we have developed that has the potential to circumvent this major challenge. We exploited the voluntary control of one hand and provided real-time movement-based manipulated sensory feedback as if the other hand is moving. Visual manipulation through virtual reality (VR) was combined with a device that yokes left-hand fingers to passively follow right-hand voluntary finger movements. In healthy subjects, we demonstrate enhanced within-session performance gains of a limb in the absence of voluntary physical training. Results in healthy subjects suggest that training with the unique VR setup might also be beneficial for patients with upper limb hemiparesis by exploiting the voluntary control of their healthy hand to improve rehabilitation of their affected hand.

Introduction

Physical practice is the most efficient form of training. Although this approach is well established1, it is very challenging in cases where the basic motor capability of the training hand is limited2. To bypass this problem, a large and growing body of literature has examined various indirect approaches to motor training.

One such indirect training approach uses physical practice with one hand to introduce performance gains in the other (non-practiced) hand. This phenomenon, known as cross-education (CE) or intermanual transfer, has been studied extensively 3,4,5,6,7,8,9 and used to enhance performance in various motor tasks 10,11,12. For instance, in sport skill settings, studies have demonstrated that training basketball dribbling in one hand transfers to increased dribbling capabilities in the other, untrained hand 13,14,15.

In another indirect approach, motor learning is facilitated through the use of visual or sensory feedback. In learning by observation, it has been demonstrated that significant performance gains can be obtained simply by passively observing someone else perform the task16,17,18,19,20. Similarly, proprioceptive training, in which the limb is passively moved, was also shown to improve performance on motor tasks 12,21,22,23,24,25,26.

Together, these lines of research suggest that sensory input plays an important role in learning. Here, we demonstrate that manipulating online sensory feedback (visual and proprioceptive) during physical training of one limb results in augmented performance gain in the opposite limb. We describe a training regime that yields optimal performance outcome in a hand, in the absence of its voluntary physical training. The conceptual novelty of the proposed method resides in the fact that it combines the three different forms of learning – namely, learning by observation, CE, and passive movement. Here we examined whether the phenomenon of CE, together with mirrored visual feedback and passive movement, can be exploited to facilitate learning in healthy subjects in the absence of voluntary physical movement of the training limb.

The concept in this setup differs from direct attempts to physically train the hand. At the methodological level – we introduce a novel setup including advanced technologies such as 3D virtual reality, and custom built devices that allow manipulating visual and proprioceptive input in a natural environmental setting. Demonstrating improved outcome using the proposed training has key consequences for real-world learning. For example, children use sensory feedback in a manner that is different from that of adults27,28,29 and in order to optimize motor learning, children may require longer periods of practice. The use of CE together with manipulated sensory feedback might reduce training duration. Furthermore, acquisition of sport skills might be facilitated using this kind of sophisticated training. Finally, this can prove beneficial for the development of a new approach for rehabilitation of patients with unilateral motor deficits such as stroke.

Protocol

The following protocol was conducted in accordance with guidelines approved by the Human Ethics Committee of Tel-Aviv University. The study includes two experiments: one using visual manipulation, and another combining visual with proprioceptive sensory manipulation. Subjects were healthy and right handed (according to the Edinburgh handedness questionnaire), with normal vision and no reported cognitive deficits or neurological problems. They were naïve to the purpose of the study and provided written informed consent to take part in the study.

1. Setting up the Virtual Reality environment

- Have the subjects sit in a chair with their hands forward and palms facing down.

- Put on the virtual reality (VR) headset with the head-mounted specialized 3D camera to provide online visual feedback of the real environment. Make sure the video from the camera is presented in the VR headset.

NOTE: The video is presented by custom C#-based software built on an open-source, cross-platform 3D rendering engine.

- Put on the motion-sensing MR-compatible gloves that allow online monitoring of individual finger flexure in each hand. Ensure that the software embeds the virtual hands in a specific location in space such that the subjects see the virtual hands only when looking down towards the place where their real hands would normally be.

- Throughout the entire experiment, make sure the software records the hand configuration provided by the gloves.

NOTE: The embedded virtual hand movement is controlled by the same software, which uses a C-based application program interface (API) to access calibrated raw data and gesture information from the gloves, including the angles between the finger joints.

- Place the subjects' hands in a specialized motion control device and strap the right and left fingers individually to the pistons. Make sure the subjects can move their right hand fingers separately.

NOTE: The right hand finger pistons move a plunger on a potentiometer according to the degree of their flexion. This in turn controls a module that reads the location of every potentiometer on each finger of the right hand and powers motors that push/pull the corresponding left hand finger to the corresponding position.

- Verify that voluntary movement of the left hand fingers is restricted by asking the subjects to move their left hand while it is located inside the device.

NOTE: Since only the active (right) hand finger movement activates the motors, voluntary left hand finger movement is impossible when the device is turned on.
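The yoking logic described in the notes above can be sketched in a few lines of Python. This is an illustration only, not the device's firmware or API: the function name `yoke_left_to_right`, the finger labels, and the normalized 0 to 1 potentiometer scale are all assumptions made for the example.

```python
from typing import Dict

# Hypothetical finger labels; the protocol numbers them index (1) to little (4).
FINGERS = ("index", "middle", "ring", "little")


def yoke_left_to_right(right_pot: Dict[str, float]) -> Dict[str, float]:
    """Return left-hand motor targets that copy right-hand finger flexion.

    right_pot maps each right-hand finger to a normalized potentiometer
    reading (assumed scale: 0.0 = fully extended, 1.0 = fully flexed).
    Left-hand voluntary input is deliberately not an argument: only the
    active (right) hand drives the motors.
    """
    targets = {}
    for finger in FINGERS:
        reading = right_pot.get(finger, 0.0)  # missing finger: treat as extended
        # Clamp to the assumed valid range before commanding the motor.
        targets[finger] = min(1.0, max(0.0, reading))
    return targets
```

Because the left-hand targets depend only on the right-hand readings, the sketch reflects why voluntary left hand movement has no effect while the device is on.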

2. Conducting the experiment

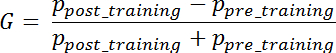

NOTE: See Figure 1 for the experimental stages. Each subject underwent three instruction-evaluation-train-evaluation experimental sessions. The details of the instructions and evaluation stages are provided in the Representative results section.

- Unstrap subjects' hands from the motion control device.

- Have the subjects perform a unimanual 5-digit finger sequence movement repeatedly as accurately and rapidly as possible with the non-training hand in a pre-defined time frame (e.g. 30 s). Each individual finger flexion should be at least 90 degrees.

NOTE: The fingers are numbered from index (1) to little finger (4) and the instructions include a specific 5-digit sequence. If the sequence is 4-1-3-2-4, have the subjects move their fingers in the following order: little-index-ring-middle-little.

- After the evaluation (step 2.2), strap the hands of the subject to the motion control device.

- Cue the subject to the upcoming training stage, in which the sequence of finger movements is performed with the active hand in a self-paced manner.

- Repeat the evaluation stage (steps 2.1 – 2.2).

3. Analyzing the behavioral data and calculating performance gains

- In the customized software that reads the data files of the gloves recorded during the experiments, click 'load left hand data' and choose the files created in the 'Left Hand Capture' folder under the relevant subject.

NOTE: There are no separate folders for pre- and post-evaluations. The file names contain the evaluation step identification.

- Click 'load right hand data' and choose the files created in the 'Right Hand Capture' folder under the relevant subject.

- Click 'Go' to replay and visualize the virtual hands movements during each evaluation stage based on the data recorded from the sensors in the motion-tracking glove.

- For each evaluation step and each subject separately, count the number of complete and correct finger sequences (P) performed with the non-trained hand.

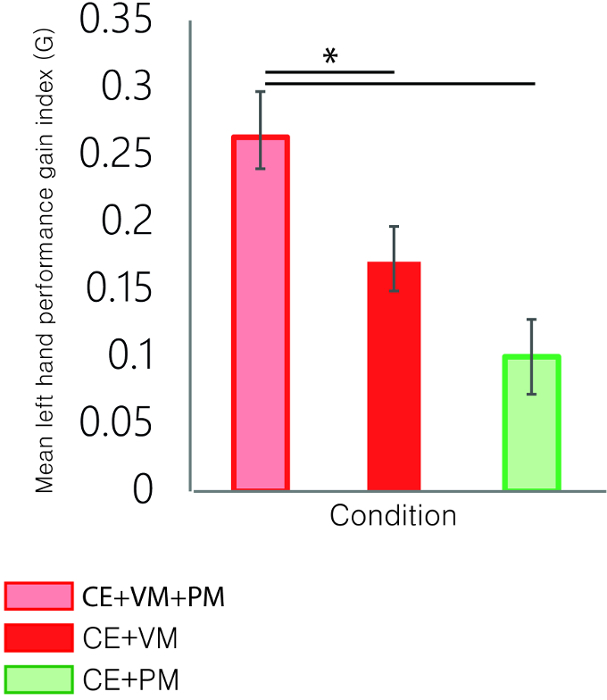

NOTE: A finger movement is considered valid only when the angle between the proximal phalange and the metacarpal reached 90˚. A 5-digit sequence is considered complete and correct only if all finger movements were valid.

- Calculate performance gains index (G) according to the following formula:

Where Ppost_training and Ppre_training correspond to the subject's performance (number of complete finger sequences) in the post-training and pre-training evaluation stages, respectively.
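The counting and gain steps above can be sketched in Python as follows. This is a hedged illustration, not the customized analysis software: the event format, the function names, and the greedy matching rule are assumptions. The formula for G is not reproduced in the text here, so the relative-change definition (Ppost_training - Ppre_training) / Ppre_training used below is also an assumption and should be checked against the original equation.

```python
from typing import Iterable, Sequence, Tuple

VALID_ANGLE_DEG = 90.0  # flexion threshold stated in the protocol note


def count_correct_sequences(events: Iterable[Tuple[int, float]],
                            target: Sequence[int]) -> int:
    """Count non-overlapping complete and correct repetitions of `target`.

    events: (finger number, peak flexion angle in degrees) for each finger
    movement, in the order performed (hypothetical format).
    """
    if not target:
        raise ValueError("target sequence must not be empty")
    count = 0
    pos = 0  # index of the next expected finger in the target sequence
    for finger, angle in events:
        valid = angle >= VALID_ANGLE_DEG
        if not (valid and finger == target[pos]):
            pos = 0  # wrong finger or shallow flexion: restart the attempt
        if valid and finger == target[pos]:
            pos += 1
            if pos == len(target):
                count += 1
                pos = 0
    return count


def performance_gain(p_pre: int, p_post: int) -> float:
    """Assumed definition of G: relative change from pre to post training."""
    if p_pre <= 0:
        raise ValueError("p_pre must be positive for a relative gain")
    return (p_post - p_pre) / p_pre
```

For example, a subject who completes 8 correct sequences before training and 12 after would have G = 0.5 under this assumed definition.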

Representative Results

Thirty-six subjects in two experiments trained to execute rapid sequences of right hand finger movements while sensory (visual/proprioceptive) feedback was manipulated. Fingers were numbered from index (1) to little finger (4), and each subject was asked to learn three different sequences in three consecutive experimental sessions, such as 4-1-3-2-4, 4-2-3-1-4, and 3-1-4-2-3. Each sequence/session was associated with a specific training type, and the association between sequence and training type was counterbalanced across subjects. At the beginning of each session, subjects were presented with an instruction slide that depicted two hand illustrations (right and left) with numbered fingers and a specific 5-digit sequence underneath, representing the sequence of finger movements to be learned (see Figure 1). The instruction slide (12 s) was followed by the pre-training evaluation stage (30 s). At this stage, online visual feedback consisted of a display of two virtual hands whose finger movements were yoked in real time to the subjects' actual finger movements (the virtual hands were based on a model available in the 5DT gloves toolbox). Thus, real left hand movement was accompanied by visual feedback of left (congruent) virtual hand movement. Subjects were instructed to repeatedly execute the sequence as fast and as accurately as possible with their left hand. In the following training stage, subjects trained on the sequence under a specific experimental condition in a self-paced manner. The training stage contained 20 blocks; each training block lasted 15 s and was followed by 9 s of a yellow blank screen, which served as a cue for a resting period. We used 20 training blocks, which in our case were sufficient to obtain significant differences between conditions. Finally, a post-training evaluation stage identical to the pre-training evaluation was conducted. Each subject underwent three such instruction-evaluation-train-evaluation experimental sessions.

Each experimental session was associated with a unique training condition and finger sequence. In experiment 1, we compared the G index values across the following training conditions: (1) training by observation – subjects passively observed the virtual left hand performing the sequence while both their real hands were immobile; (2) CE – subjects physically trained with their right hand while receiving congruent online visual feedback of right virtual hand movement; (3) CE + Visual manipulation (VM) – importantly, the VR setup allowed us to create a unique third experimental condition in which subjects physically trained with their right hand while receiving online visual feedback of left (incongruent) virtual hand movement (CE + VM condition). Left virtual hand finger movement was based on real right hand finger movement detected by the gloves (step 1.4). In all conditions, the palms of the subjects' hands were facing up. The pace of virtual hand finger movement in the training by observation condition (condition 1) was set based on the average pace of the subject during previous active right hand conditions (conditions 2 and 3). In cases where counterbalancing placed training by observation first, the pace was set based on the average pace of the previous subject. All G index comparisons were performed in a within-subject paired fashion across the different training conditions.
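The mapping from real to virtual hand movement in the three conditions of experiment 1 can be sketched as follows. This Python sketch is illustrative and is not the C#-based software used in the study: the function name, the condition labels, and the data layout are hypothetical. In particular, keeping the non-driven virtual hand at rest is an assumption, since the text does not state what that hand displays.

```python
from typing import Dict, Optional

Hand = Dict[str, float]  # finger label -> flexion angle in degrees

# Assumed resting posture for a virtual hand that is not being driven.
REST: Hand = {"index": 0.0, "middle": 0.0, "ring": 0.0, "little": 0.0}


def virtual_hands(condition: str, real_right: Hand,
                  playback_left: Optional[Hand] = None) -> Dict[str, Hand]:
    """Return the flexion shown on the left and right virtual hands."""
    if condition == "observation":
        # Real hands are immobile; the left virtual hand replays the sequence.
        return {"left": dict(playback_left or REST), "right": dict(REST)}
    if condition == "CE":
        # Congruent feedback: right virtual hand follows the real right hand.
        return {"left": dict(REST), "right": dict(real_right)}
    if condition == "CE+VM":
        # Incongruent feedback: left virtual hand follows the real right hand.
        return {"left": dict(real_right), "right": dict(REST)}
    raise ValueError(f"unknown training condition: {condition!r}")
```

The only difference between the CE and CE+VM branches is which virtual hand receives the right-hand glove data, which mirrors the single manipulated factor between conditions 2 and 3.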

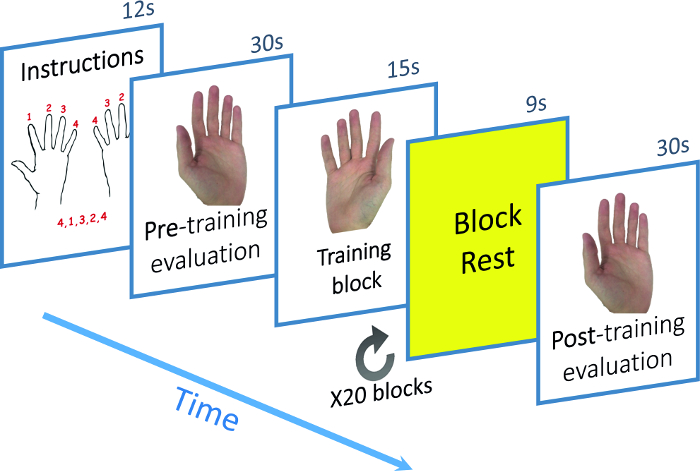

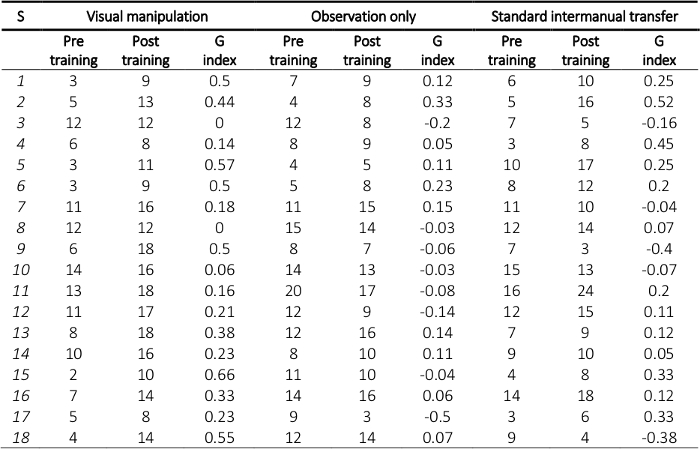

Left hand performance gains following training in condition 3 (CE + Visual manipulation) were significantly higher relative to the gains obtained following training by left hand observation (condition 1; p<0.01; two-tailed paired t-test) or following right hand training with congruent visual feedback, the traditional form of CE (condition 2; p<0.05; two-tailed paired t-test; Figure 2 and Table 1). Interestingly, training with incongruent visual feedback (CE + VM) yielded a higher performance gain than the sum of the gains obtained by the two basic training types: physical training of the right hand, and training by observation of the left hand without physical movement. This superadditive effect demonstrates that performance gains in the left hand are non-linearly enhanced when right hand training is supplemented with left hand visual feedback that is controlled by the subject. This implies that CE and learning by observation are interacting processes that can be combined into a novel learning scheme.
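The within-subject comparisons described above can be sketched with the Python standard library. This is a minimal illustration under stated assumptions, not the authors' analysis code: the function names are hypothetical, only the t statistic and degrees of freedom are computed (a p-value would need a t-distribution routine), and the superadditivity check is a simple descriptive comparison of group means.

```python
import math
import statistics
from typing import Sequence, Tuple


def paired_t(a: Sequence[float], b: Sequence[float]) -> Tuple[float, int]:
    """Paired t statistic and degrees of freedom for per-subject G values."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equal-length samples with n >= 2")
    diffs = [x - y for x, y in zip(a, b)]  # within-subject differences
    sd = statistics.stdev(diffs)  # sample standard deviation (n - 1)
    if sd == 0:
        raise ValueError("differences have zero variance")
    t = statistics.mean(diffs) / (sd / math.sqrt(len(diffs)))
    return t, len(diffs) - 1


def is_superadditive(g_combined: Sequence[float],
                     g_ce: Sequence[float],
                     g_obs: Sequence[float]) -> bool:
    """True if the mean combined gain exceeds the sum of the two mean gains."""
    return statistics.mean(g_combined) > (
        statistics.mean(g_ce) + statistics.mean(g_obs)
    )
```

Each argument would hold one G value per subject for a given training condition, in the same subject order across conditions.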

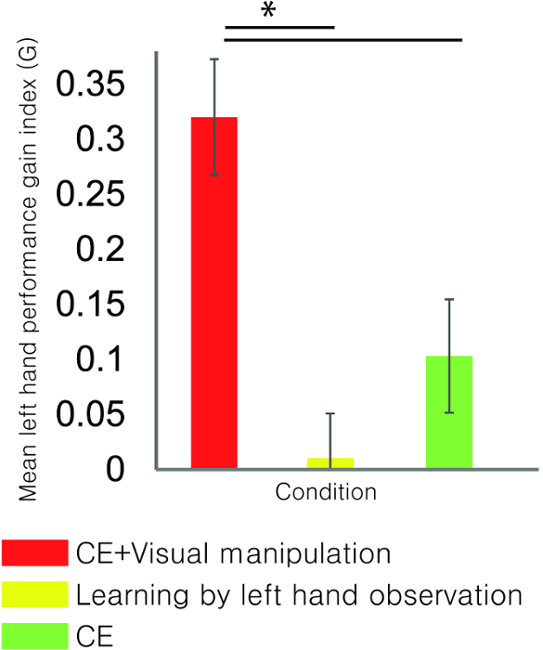

We also examined, in another set of 18 healthy subjects, whether the addition of passive left hand movement can further enhance left hand performance gains. To this end, in study 2, subjects underwent a similar protocol with 3 training types while their hands were placed inside the aforementioned custom-built device (step 1.7) that controls left hand finger movement. In this experiment, subjects trained for 10 blocks. Each training block lasted 50 s and was followed by 10 s of a yellow blank screen, which served as a cue for a resting period. The following three training types were used: (1) CE+VM – cross education accompanied by manipulated visual feedback (similar to condition 3 from study 1); (2) CE+PM – standard cross-education (i.e. right hand active movement + visual feedback of right virtual hand movement), together with yoked passive movement (PM) of the left hand; (3) CE+VM+PM – subjects physically trained with their right hand while visual input was manipulated such that corresponding left virtual hand movement was displayed (similar to condition 3 used in the first study). In addition, however, right hand active finger movement resulted in yoked passive left hand finger movement through the device.
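The block timing of the two studies (20 blocks of 15 s training plus 9 s rest in study 1; 10 blocks of 50 s training plus 10 s rest in study 2) can be laid out with a small helper. The Python sketch below is illustrative only; the function name and the tuple layout are assumptions, not part of the experimental software.

```python
from typing import List, Tuple


def training_schedule(n_blocks: int, train_s: float,
                      rest_s: float) -> List[Tuple[str, float, float]]:
    """Return (phase, start, end) tuples in seconds from training onset."""
    schedule = []
    t = 0.0
    for _ in range(n_blocks):
        schedule.append(("train", t, t + train_s))
        t += train_s
        schedule.append(("rest", t, t + rest_s))  # yellow blank screen
        t += rest_s
    return schedule


study1 = training_schedule(n_blocks=20, train_s=15, rest_s=9)
study2 = training_schedule(n_blocks=10, train_s=50, rest_s=10)
```

Under these parameters the training stage spans 480 s in study 1 (300 s of active training) and 600 s in study 2 (500 s of active training), one of the design differences that limits comparison of absolute G values across studies.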

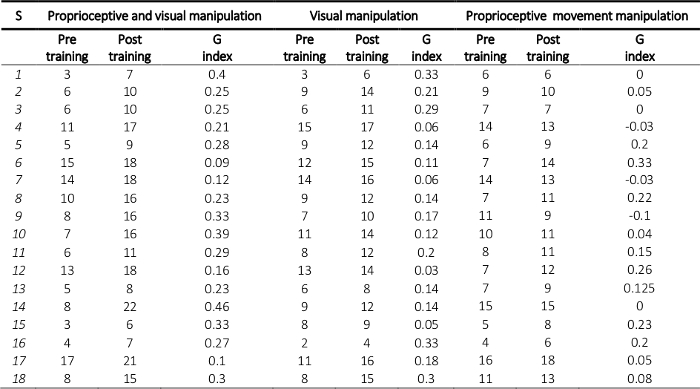

The addition of passive left-hand finger movement to the visual manipulation yielded the highest left-hand performance gains (Figure 3 and Table 2), which were significantly higher than performance gains following the visual manipulation alone (condition 1; p<0.01; two-tailed paired t-test). It should be noted that although the CE+VM training condition was similar to that in study 1, absolute G values are only comparable across conditions within the same study. This is because (1) the training design was slightly different (in study 2 the palms faced down rather than up due to the device, and the duration and number of training blocks differed) and (2) each experiment was conducted on a different group of subjects. Importantly, within each study, each subject performed all three training types and G indices across conditions are compared in a paired fashion.

Figure 1. Experiment Design. Schematic illustration of a single experimental session in study 1. Each subject performed 3 such sessions. In each session, a unique sequence of five digits was presented together with a sketch of the mapped fingers. After instructions, subjects performed the sequence as fast and as accurately as possible using their left hand for initial evaluation of performance level. Next, subjects trained on the sequence by one of the training types (see representative results) in a self-paced manner. After training, subjects repeated the evaluation stage for re-assessment of performance level. In study 2, the overall design was similar, with a different duration and number of training blocks (detailed in the representative results). Hands in the illustration represent the active hand only (the visual feedback always contained two virtual hands).

Figure 2. Study 1 – left hand performance gains. Physical training with the right hand while receiving online visual feedback as if the left hand is moving (CE + visual manipulation; VM; red) resulted in highest left hand performance gains relative to the other training conditions examined: left hand observation (yellow), and cross-education without visual manipulation (i.e. right hand training + congruent visual feedback of right virtual hand movement; green). Error bars denote SEM across 18 subjects.

Figure 3. Study 2 – left hand performance gains. The highest left hand performance gain was obtained when cross education with visual manipulation was combined with passive left hand finger movement by the device (CE+VM+PM; light red). This improvement was significantly higher than that obtained following cross education with visual manipulation (CE+VM; red) and cross education with proprioceptive manipulation (CE+PM; green). Error bars denote SEM across 18 subjects.

Table 1. Study 1 data. Individual subject's performance (P) during pre- and post-training evaluation stages in study 1. Each cell represents the number of correctly performed complete 5-digit sequences within 30 s. S – Subject number.

Table 2. Study 2 data. Same as Table 1 for study 2. Note that training duration and hand orientation in this experiment differed from those in study 1 (see text).

Discussion

We describe a novel training setup and demonstrate how embedding virtual sensory feedback in a real-world environment optimizes motor learning in a hand that is not trained under voluntary control. We manipulated feedback in two modalities: visual and proprioceptive.

There are a few critical steps in the presented protocol. First, the system consists of several separate components (gloves, VR headset, camera, and passive movement device) that should be carefully connected while setting up the VR environment. To that end, the experimenter should follow the exact order described in the protocol and verify that the subjects are comfortable.

The combination of visual and proprioceptive manipulation during training introduced significantly higher performance gains in the non-trained hand relative to other existing training types such as learning by observation17, and CE3 with and without passive hand movements24,25,26.

It is an open question whether the enhanced performance gains in the current demonstration generalize to other tasks, training durations, feedback modalities or hand identities (left active hand, or bi-manual movements). The current study was limited to right-handed subjects using a simple finger sequence task. Additionally, the proprioceptive manipulation in the current setup is based on a system that allows very limited movements (such as finger flexion/extension) for relatively short-term training. Further work is required to establish the generalizability of the presented setup to other types of behaviors.

The current setup can be extended in several ways. First, new types of modalities can be added, for example by binding different auditory sounds to different finger movements during the sequence task. This might result in a supra-additive effect which will further optimize learning in the untrained hand. Second, the current design of the system enables an easy swap between the voluntary moving hand (right hand in the current description) and the passively yoked hand (left hand). Future studies can capitalize on this flexibility to examine how directionality of transfer (between dominant and non-dominant hands3) can modify the level of performance gains when using the presented sensory manipulations. Finally, the unique VR setup we developed may be adapted to more complex tasks (as opposed to the simple finger sequence task). Virtual simulation of external objects such as balls, pins, and boards can be embedded into the real environment, providing a rich and engaging training experience.

As for future applications, the effect described in this study can be readily used with clinical populations such as patients with upper-limb hemiparesis by introducing physical training with the healthy hand and providing visual feedback as if the affected hand is moving. Given that voluntary control of the affected limb is limited in such populations, this training scheme has the potential of circumventing the challenges of direct physical therapy of the affected hand and perhaps resulting in better recovery rates30,31. This approach, exploiting the phenomenon of cross-education and mirror-therapy, together with well-established rehabilitation tasks, has not been previously tested in clinical patients and has the potential for providing a more efficient rehabilitation regime. Finally, since this setup is partially MR compatible, it enables the use of whole-brain functional magnetic resonance imaging (fMRI) to probe the relevant neural circuits engaged during such training12.

Disclosures

The authors have nothing to disclose.

Acknowledgements

This study was supported by the I-CORE Program of the Planning and Budgeting Committee and the Israel Science Foundation (grant no. 51/11), and The Israel Science Foundation (grants no. 1771/13 and 2043/13) (R.M.); the Yosef Sagol Scholarship for Neuroscience Research, the Israeli Presidential Honorary Scholarship for Neuroscience Research, and the Sagol School of Neuroscience fellowship (O.O.). The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript. The authors thank E. Kagan and A. Hakim for help with data acquisition, Lihi Sadeh and Yuval Wilchfort with filming and setup, and O. Levy and Y. Siman-Tov from Rehabit-Tec System for providing access to the passive movement device.

Materials

| Name | Company | Catalog Number | Comments |
| --- | --- | --- | --- |
| Oculus Development Kit 1 | Oculus VR | | The Oculus Rift DK1 is a virtual reality headset developed and manufactured by Oculus VR, and contains a development kit. |
| 5DT Data Glove 14 MRI, right-handed and left-handed | Fifth Dimension Technologies | 100-0009 and 100-0010 | The 5DT Data Glove Ultra is designed to satisfy the stringent requirements of modern Motion Capture and Animation Professionals. It offers comfort, ease of use, a small form factor and multiple application drivers. The high data quality, low cross-correlation and high data rate make it ideal for realistic realtime animation. |
| PlayStation Eye Camera | Sony | | The PlayStation Eye (trademarked PLAYSTATION Eye) is a digital camera device, similar to a webcam, for the PlayStation 3. The technology uses computer vision and gesture recognition to process images taken by the camera. |
| REHABILITATION SYSTEM REHABIT-TEC | Rehabit-Tec | www.rehabit-tec.com | The Rehabit-Tec Rehabilitation system is a rehabilitation system intended to allow a CVA injured individual to advance self rehabilitation on the basis of mirror movements. |

References

- Coker, C. A. Motor learning and control for practitioners. (2017).

- Hoare, B. J., Wasiak, J., Imms, C., Carey, L. Constraint-induced movement therapy in the treatment of the upper limb in children with hemiplegic cerebral palsy. Cochrane Database Syst Rev. 18 (2), (2007).

- Sainburg, R. L., Wang, J. Interlimb transfer of visuomotor rotations: independence of direction and final position information. Exp Brain Res. 145 (4), 437-447 (2002).

- Malfait, N., Ostry, D. J. Is interlimb transfer of force-field adaptation a cognitive response to the sudden introduction of load?. J Neurosci. 24 (37), 8084-8089 (2004).

- Perez, M. A., Wise, S. P., Willingham, D. T., Cohen, L. G. Neurophysiological mechanisms involved in transfer of procedural knowledge. J Neurosci. 27 (5), 1045-1053 (2007).

- Nozaki, D., Kurtzer, I., Scott, S. H. Limited transfer of learning between unimanual and bimanual skills within the same limb. Nat Neurosci. 9 (11), 1364-1366 (2006).

- Carroll, T. J., Herbert, R. D., Munn, J., Lee, M., Gandevia, S. C. Contralateral effects of unilateral strength training: evidence and possible mechanisms. J Appl Physiol. 101 (5), 1514-1522 (2006).

- Farthing, J. P., Borowsky, R., Chilibeck, P. D., Binsted, G., Sarty, G. E. Neuro-physiological adaptations associated with cross-education of strength. Brain Topogr. 20 (2), 77-88 (2007).

- Gabriel, D. A., Kamen, G., Frost, G. Neural adaptations to resistive exercise: mechanisms and recommendations for training practices. Sports Med. 36 (2), 133-149 (2006).

- Kirsch, W., Hoffmann, J. Asymmetrical intermanual transfer of learning in a sensorimotor task. Exp Brain Res. 202 (4), 927-934 (2010).

- Panzer, S., Krueger, M., Muehlbauer, T., Kovacs, A. J., Shea, C. H. Inter-manual transfer and practice: coding of simple motor sequences. Acta Psychol (Amst). 131 (2), 99-109 (2009).

- Ossmy, O., Mukamel, R. Neural Network Underlying Intermanual Skill Transfer in Humans. Cell Reports. 17 (11), 2891-2900 (2016).

- Stockel, T., Weigelt, M., Krug, J. Acquisition of a complex basketball-dribbling task in school children as a function of bilateral practice order. Res Q Exerc Sport. 82 (2), 188-197 (2011).

- Stockel, T., Weigelt, M. Brain lateralisation and motor learning: selective effects of dominant and non-dominant hand practice on the early acquisition of throwing skills. Laterality. 17 (1), 18-37 (2012).

- Steinberg, F., Pixa, N. H., Doppelmayr, M. Mirror Visual Feedback Training Improves Intermanual Transfer in a Sport-Specific Task: A Comparison between Different Skill Levels. Neural Plasticity. 2016, (2016).

- Kelly, S. W., Burton, A. M., Riedel, B., Lynch, E. Sequence learning by action and observation: evidence for separate mechanisms. Br J Psychol. 94 (Pt 3), 355-372 (2003).

- Mattar, A. A., Gribble, P. L. Motor learning by observing. Neuron. 46 (1), 153-160 (2005).

- Bird, G., Osman, M., Saggerson, A., Heyes, C. Sequence learning by action, observation and action observation. Br J Psychol. 96 (Pt 3), 371-388 (2005).

- Nojima, I., Koganemaru, S., Kawamata, T., Fukuyama, H., Mima, T. Action observation with kinesthetic illusion can produce human motor plasticity. Eur J Neurosci. 41 (12), 1614-1623 (2015).

- Ossmy, O., Mukamel, R. Activity in superior parietal cortex during training by observation predicts asymmetric learning levels across hands. Scientific Reports. (2016).

- Darainy, M., Vahdat, S., Ostry, D. J. Perceptual learning in sensorimotor adaptation. J Neurophysiol. 110 (9), 2152-2162 (2013).

- Wong, J. D., Kistemaker, D. A., Chin, A., Gribble, P. L. Can proprioceptive training improve motor learning?. J Neurophysiol. 108 (12), 3313-3321 (2012).

- Vahdat, S., Darainy, M., Ostry, D. J. Structure of plasticity in human sensory and motor networks due to perceptual learning. J Neurosci. 34 (7), 2451-2463 (2014).

- Bao, S., Lei, Y., Wang, J. Experiencing a reaching task passively with one arm while adapting to a visuomotor rotation with the other can lead to substantial transfer of motor learning across the arms. Neurosci. Lett. 638, 109-113 (2017).

- Wang, J., Lei, Y. Direct-effects and after-effects of visuomotor adaptation with one arm on subsequent performance with the other arm. J Neurophysiol. 114 (1), 468-473 (2015).

- Lei, Y., Bao, S., Wang, J. The combined effects of action observation and passive proprioceptive training on adaptive motor learning. Neuroscience. 331, 91-98 (2016).

- Blank, R., Heizer, W., Von Voß, H. Externally guided control of static grip forces by visual feedback-age and task effects in 3-6-year old children and in adults. Neurosci. Lett. 271 (1), 41-44 (1999).

- Hay, L. Spatial-temporal analysis of movements in children: Motor programs versus feedback in the development of reaching. J Mot Behav. 11 (3), 189-200 (1979).

- Fayt, C., Minet, M., Schepens, N. Children’s and adults’ learning of a visuomanual coordination: role of ongoing visual feedback and of spatial errors as a function of age. Percept Mot Skills. 77 (2), 659-669 (1993).

- Grotta, J. C., et al. Constraint-induced movement therapy. Stroke. 35 (11 Suppl 1), 2699-2701 (2004).

- Taub, E., Uswatte, G., Pidikiti, R. Constraint-Induced Movement Therapy: a new family of techniques with broad application to physical rehabilitation–a clinical review. J Rehabil Res Dev. 36 (3), 237 (1999).