MPI CyberMotion Simulator: Implementation of a Novel Motion Simulator to Investigate Multisensory Path Integration in Three Dimensions

Summary

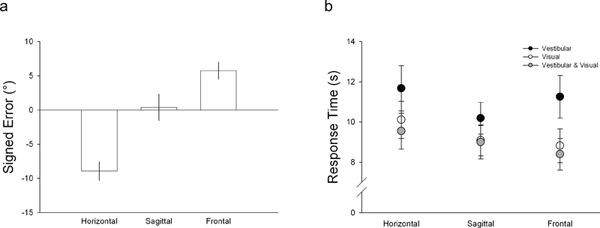

An efficient way to gain insight into how humans navigate in three dimensions is described. The method takes advantage of a motion simulator capable of moving observers in ways unattainable by traditional simulators. Results indicate that angles moved through in the horizontal plane are underestimated, while those in the vertical (frontal) plane are overestimated.

Abstract

Path integration is a process in which self-motion is integrated over time to obtain an estimate of one’s current position relative to a starting point 1. Humans can do path integration based exclusively on visual 2-3, auditory 4, or inertial cues 5. However, with multiple cues present, inertial cues – particularly kinaesthetic – seem to dominate 6-7. In the absence of vision, humans tend to overestimate short distances (<5 m) and turning angles (<30°), but underestimate longer ones 5. Movement through physical space therefore does not seem to be accurately represented by the brain.

Extensive work has been done on evaluating path integration in the horizontal plane, but little is known about vertical movement (see 3 for virtual movement from vision alone). One reason for this is that traditional motion simulators have a small range of motion restricted mainly to the horizontal plane. Here we take advantage of a motion simulator 8-9 with a large range of motion to assess whether path integration is similar between horizontal and vertical planes. The relative contributions of inertial and visual cues for path navigation were also assessed.

Sixteen observers sat upright in a seat mounted to the flange of a modified KUKA anthropomorphic robot arm. Sensory information was manipulated by providing visual (optic flow, limited lifetime star field), vestibular-kinaesthetic (passive self-motion with eyes closed), or visual and vestibular-kinaesthetic motion cues. Movement trajectories in the horizontal, sagittal and frontal planes consisted of two segment lengths (1st: 0.4 m, 2nd: 1 m; ±0.24 m/s² peak acceleration). The angle between the two segments was either 45° or 90°. Observers pointed back to their origin by moving an arrow that was superimposed on an avatar presented on the screen.

Observers were more likely to underestimate angle size for movement in the horizontal plane compared to the vertical planes. In the frontal plane observers were more likely to overestimate angle size, while there was no such bias in the sagittal plane. Finally, observers responded more slowly when answering based on vestibular-kinaesthetic information alone. Human path integration based on vestibular-kinaesthetic information alone thus takes longer than when visual information is present. That pointing is consistent with underestimating and overestimating the angle one has moved through in the horizontal and vertical planes, respectively, suggests that the neural representation of self-motion through space is non-symmetrical, which may relate to the fact that humans experience movement mostly within the horizontal plane.

Protocol

1. KUKA Roboter GmbH

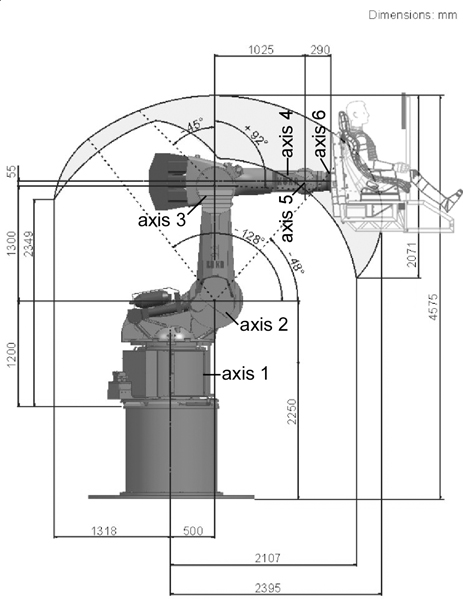

- The MPI CyberMotion Simulator consists of a six-joint serial robot in a 3-2-1 configuration (Figure 1). It is based on the commercial KUKA Robocoaster (a modified KR-500 industrial robot with a 500 kg payload). The physical modifications and the software control structure needed to have a flexible and safe experimental setup have previously been described, including the motion simulator’s velocity and acceleration limitations, and the delays and transfer function of the system 9. Modifications from this previous setup are defined below.

Figure 1. Graphical representation of the current MPI CyberMotion Simulator work space.

- Complex motion profiles that combine lateral movements with rotations are possible with the MPI CyberMotion Simulator. Axes 1, 4 and 6 can rotate continuously. Four pairs of hardware end-stops limit Axes 2, 3 and 5 in both directions. The maximum range of linear movements depends strongly on the position from which the movement begins. The current hardware end-stops of the MPI CyberMotion Simulator are shown in Table 1.

| Axis | Range [deg] | Max. velocity [deg/s] |
| --- | --- | --- |
| Axis 1 | Continuous | 69 |
| Axis 2 | -128 to -48 | 57 |
| Axis 3 | -45 to +92 | 69 |
| Axis 4 | Continuous | 76 |
| Axis 5 | -58 to +58 | 76 |
| Axis 6 | Continuous | 120 |

Table 1. Current technical specifications of the MPI CyberMotion Simulator.
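The limits in Table 1 lend themselves to an automated pre-check of a joint-space trajectory before it is run. The following is a minimal sketch, not the authors' software: the function name `check_trajectory`, the dictionary layout and the sampling interval `dt` are illustrative assumptions; only the numeric ranges and maximum velocities are taken from Table 1.

```python
# Joint limits transcribed from Table 1; None marks a continuously rotating axis.
JOINT_RANGE_DEG = {
    1: None, 2: (-128.0, -48.0), 3: (-45.0, 92.0),
    4: None, 5: (-58.0, 58.0), 6: None,
}
MAX_VELOCITY_DEG_S = {1: 69.0, 2: 57.0, 3: 69.0, 4: 76.0, 5: 76.0, 6: 120.0}


def check_trajectory(samples, dt):
    """Return a list of (sample_index, axis, reason) limit violations.

    samples: list of dicts mapping axis number (1-6) to joint angle in degrees.
    dt: time between consecutive samples in seconds (hypothetical parameter).
    """
    violations = []
    for i, pose in enumerate(samples):
        for axis, angle in pose.items():
            limits = JOINT_RANGE_DEG[axis]
            # Range check applies only to axes with hardware end-stops.
            if limits is not None and not (limits[0] <= angle <= limits[1]):
                violations.append((i, axis, "range"))
            # Velocity check uses a finite difference to the previous sample.
            if i > 0:
                speed = abs(angle - samples[i - 1][axis]) / dt
                if speed > MAX_VELOCITY_DEG_S[axis]:
                    violations.append((i, axis, "velocity"))
    return violations
```

For example, `check_trajectory([{2: -90.0}, {2: -89.5}], dt=0.012)` reports no violations, whereas a sample with Axis 2 at -40° would be flagged as out of range.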

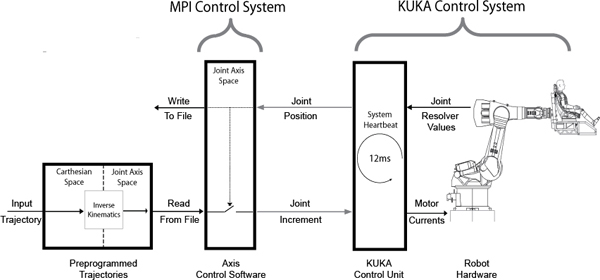

- Before any experiment is performed on the MPI CyberMotion Simulator, each experimental motion trajectory undergoes a testing phase on a KUKA simulation PC (Office PC). The “Office PC” is a special product sold by KUKA which simulates the real robot arm and includes the identical operating system and control screen layout as the real robot. A schematic overview of the control system of the MPI CyberMotion Simulator for an open-loop configuration is shown in Figure 2.

Figure 2. Schematic overview of the open-loop control system of the MPI CyberMotion Simulator.

- The details of the control structure have been described previously 9. In brief, for an open-loop configuration such as that used in the current experiment, trajectories are pre-programmed by converting input trajectories in Cartesian coordinates to joint space angles through inverse kinematics (Figure 2).

- The MPI control system reads in these desired joint angle increments and sends them to the KUKA control system, which performs the axis movements via motor currents. Joint resolver values are returned to the KUKA control system, which determines the current joint angle positions at an internal cycle time of 12 ms. Each cycle in turn triggers the MPI control system to read the next joint increment from file and to write the current joint angle positions to disk. Communication between the MPI and KUKA control systems is by an Ethernet connection using the KUKA-RSI protocol.
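The increment-based, open-loop playback described above can be illustrated as follows. This is a rough sketch under stated assumptions, not the MPI or KUKA software: `to_increments` and `replay` are hypothetical helper names, the in-memory lists stand in for the trajectory and log files, and no RSI communication is modelled. Only the 12 ms cycle time comes from the text.

```python
CYCLE_S = 0.012  # internal cycle time of the KUKA control system (from the text)


def to_increments(joint_angles):
    """Convert absolute joint angles (one 6-element list per cycle) to per-cycle increments."""
    return [
        [cur - prev for prev, cur in zip(prev_pose, cur_pose)]
        for prev_pose, cur_pose in zip(joint_angles, joint_angles[1:])
    ]


def replay(start_pose, increments):
    """Apply one increment per cycle and log (time_s, pose) after each step.

    Stands in for the loop in which each controller cycle triggers reading the
    next increment and writing the current joint angles to disk.
    """
    pose = list(start_pose)
    log = [(0.0, tuple(pose))]
    for k, inc in enumerate(increments, start=1):
        pose = [p + d for p, d in zip(pose, inc)]
        log.append((k * CYCLE_S, tuple(pose)))
    return log
```

Storing increments rather than absolute angles means the controller only needs the next small step on each cycle; the log returned by `replay` plays the role of the recorded joint positions.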

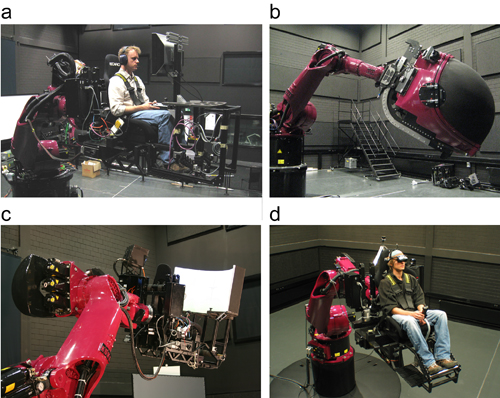

- A racecar seat (RECARO Pole Position) equipped with a 5-point safety belt system (Schroth) is attached to a chassis which includes a footrest. The chassis is mounted to the flange of the robot arm (Figure 3a). Experiments are also possible by seating participants within an enclosed cabin (Figure 3b).

Figure 3. MPI CyberMotion Simulator setup. a) Configuration for current experiment with LCD display. b) Configuration for experiments requiring an enclosed cabin with front projection stereo display. c) Front projection mono display. d) Head mounted display.

- As the experiment is performed in darkness, infrared cameras allow visual monitoring from the control room.

2. Visualization

- Multiple visualization configurations are possible with the MPI CyberMotion Simulator including LCD, stereo or mono front projection, and head mounted displays (Figure 3). For the current experiment visual cues to self-motion are provided by an LCD display (Figure 3a) placed 50 cm in front of the observers who were otherwise tested in the dark.

- The visual presentation was generated using Virtools 4.1 software and consisted of a random, limited-lifetime dot field. A cuboid extending eight virtual units to the front, right, left, upwards and downwards from the point of view of the participant (i.e., 16 × 16 × 8 units in size) was filled with 200,000 equal-size particles consisting of white circles 0.02 units in diameter in front of a black background. The dots were randomly distributed across the space (homogeneous probability distribution within the space). Movement in virtual units was scaled to correspond 1 to 1 with physical motion (1 virtual unit = 1 physical meter).

- Each particle was shown for two seconds before vanishing and immediately reappearing at a random location within the space. Thus half of the dots changed their position within any one second. Only dots at a distance between 0.085 and 4 units were displayed to the participants (corresponding visual angles: 13° and 0.3°).

- Movement within the dot field was synchronized with physical motion by receiving motion trajectories from the MPI control computer, transmitted by an Ethernet connection using the UDP protocol. When moving through the dot field the average number of dots stayed constant for all movements. This display provided no absolute size scale, but it did provide optic flow and motion parallax, because the dots were spheres of fixed size that looked smaller with increasing distance from the observer.
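The visual angles quoted for the nearest and farthest displayed dots follow from the dot diameter and viewing distance. The short sketch below reproduces them with the standard visual-angle formula; the function and constant names are illustrative, and the dot density is simply the stated dot count divided by the stated cuboid volume.

```python
import math

DOT_DIAMETER = 0.02         # virtual units (1 unit = 1 m), from the text
NEAR, FAR = 0.085, 4.0      # displayed distance range in units, from the text
N_DOTS = 200_000            # number of particles, from the text
CUBOID = (16.0, 16.0, 8.0)  # cuboid dimensions in units, from the text


def visual_angle_deg(size, distance):
    """Visual angle subtended by an object of a given size at a given distance."""
    return math.degrees(2.0 * math.atan(size / (2.0 * distance)))


def dot_density():
    """Average number of dots per cubic unit in the cuboid."""
    width, height, depth = CUBOID
    return N_DOTS / (width * height * depth)


print(visual_angle_deg(DOT_DIAMETER, NEAR))  # about 13 deg, as stated in the text
print(visual_angle_deg(DOT_DIAMETER, FAR))   # about 0.3 deg, as stated in the text
print(dot_density())                         # dots per cubic unit
```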

3. Experimental Design

- Sixteen participants, all naïve to the experiment except for one author (MB-C), wore noise-cancelling headphones equipped with a microphone to allow two-way communication with the experimenter. Additional auditory noise was continuously played through the headphones to further mask noise produced by the robot.

- Participants used a custom-built joystick equipped with response buttons; joystick data were transmitted by an Ethernet connection using the UDP protocol.

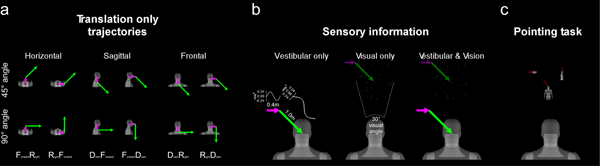

- The angle between the two movement segments was either 45° or 90°. Movements in the horizontal, sagittal and frontal planes consisted of: forward-rightward (FR) or rightward-forward (RF), downward-forward (DF) or forward-downward (FD), and downward-rightward (DR) or rightward-downward (RD) movements, respectively (Figure 4a).

Figure 4. Procedure. a) Schematic representation of trajectories used in the experiment. b) Sensory information provided for each trajectory type tested. c) Pointing task used to indicate the origin of where participants thought that they had moved from.
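For a two-segment path, the geometrically correct pointing direction and a signed pointing error can be computed as sketched below. This is an illustration only: it assumes the stated angle (45° or 90°) is the change in heading between the segments, it uses the segment lengths given in the protocol (0.4 m, then 1 m), and the sign convention of `signed_error_deg` is an assumption rather than the authors' definition.

```python
import math


def homing_direction_deg(turn_deg, first_m=0.4, second_m=1.0):
    """In-plane direction from the end point back to the origin, in degrees.

    0 deg is the heading of the first segment; angles increase toward the
    side of the turn. ASSUMPTION: turn_deg is the change in heading between
    the two segments.
    """
    theta = math.radians(turn_deg)
    # End position after both segments, with the origin at (0, 0).
    x = first_m + second_m * math.cos(theta)
    y = second_m * math.sin(theta)
    # The vector pointing home is the negated end position.
    return math.degrees(math.atan2(-y, -x))


def signed_error_deg(response_deg, correct_deg):
    """Response minus correct direction, wrapped to the interval [-180, 180)."""
    return (response_deg - correct_deg + 180.0) % 360.0 - 180.0


for turn in (45, 90):
    print(turn, homing_direction_deg(turn))
```

Wrapping the difference keeps a response of -170° against a correct direction of 170° at an error of 20° rather than -340°.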

- Sensory information was manipulated by providing visual (optic flow, limited lifetime star field), vestibular-kinaesthetic (passive self-motion with eyes closed), or visual and vestibular-kinaesthetic motion cues (Figure 4b).

- Movement trajectories consisted of two segment lengths (1st: 0.4 m, 2nd: 1 m; ±0.24 m/s² peak acceleration; Figure 4b). Trajectories consisted of translation only; no rotations of the participants occurred. To reduce possible interference from motion prior to each trial and to ensure that the vestibular system was tested starting from a steady state, a 15 s pause preceded each trajectory.

- Observers pointed back to their origin by moving an arrow that was superimposed on an avatar presented on the screen (Figure 4c). Movement of the arrow was constrained to the trajectory’s plane and controlled by the joystick. The avatar was presented from frontal, sagittal and horizontal viewpoints. Observers were allowed to use any or all viewpoints to answer. The starting orientation of the arrow was randomized across trials.

- As the pointing task required participants to mentally transform their pointing perspective from an egocentric to an exocentric representation, participants were given instructions on how to point back to their origin with reference to the avatar prior to practice and experimental trials. Participants were told that pointing should be made as if the avatar were their own body. Participants were then instructed to point to physical targets relative to the self using the exocentric measurement technique. For example, participants were instructed to point to the joystick resting on their lap half-way between themselves and the screen, which required participants to point the arrow forward and down relative to the avatar. All participants were able to perform these tasks without expressing confusion.

- Each experimental condition was repeated 3 times and presented in random order. Signed error and response time were analyzed as dependent variables in two separate 3 (plane) × 2 (angle) × 3 (modality) repeated measures ANOVAs. Response times from one extreme outlier participant were removed from analysis.
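The 3 (plane) × 2 (angle) × 3 (modality) design with three repetitions can be turned into a randomized trial list as sketched below. The sketch assumes full randomization across all trials and does not model the two segment orders per plane (e.g. FR versus RF), since the protocol does not state how these were distributed; `build_trial_list` and the condition labels are hypothetical names.

```python
import itertools
import random

PLANES = ("horizontal", "sagittal", "frontal")
ANGLES_DEG = (45, 90)
MODALITIES = ("visual", "vestibular-kinaesthetic", "combined")
REPETITIONS = 3


def build_trial_list(seed=None):
    """Return every (plane, angle, modality) condition REPETITIONS times, shuffled.

    ASSUMPTION: trials are fully randomized rather than blocked by modality.
    """
    conditions = list(itertools.product(PLANES, ANGLES_DEG, MODALITIES))
    trials = conditions * REPETITIONS
    random.Random(seed).shuffle(trials)  # a seeded generator gives a reproducible order
    return trials


trials = build_trial_list(seed=1)
print(len(trials))  # 18 conditions x 3 repetitions
```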

4. Representative Results

Signed error results are collapsed across modalities and angles as no significant main effects were found for these factors. Figure 5a shows the significant main effect of movement plane (F(2,30) = 7.0, p = 0.003) where observers underestimated angle size (average data less than 0°) for movement in the horizontal plane (-8.9°, s.e. 1.8). In the frontal plane observers were more likely on average to overestimate angle size (5.3°, s.e. 2.6), while there was no such bias in the sagittal plane (-0.7°, s.e. 3.7). While main effects of angle and modality were not significant, angle was found to significantly interact with plane (F(2,30) = 11.1, p < 0.001) such that overestimates in the frontal plane were larger for movements through 45° (7.9°, s.e. 2.6) than through 90° (2.8°, s.e. 2.7), while such a discrepancy was absent for the other planes. In addition, modality was found to significantly interact with angle (F(2,30) = 4.7, p = 0.017) such that underestimates from vestibular information alone for movements through 90° were significantly larger (-4.3°, s.e. 2.1) compared to the visual (-2.0°, s.e. 2.4) and vestibular and visual information combined (2.3°, s.e. 2.2) conditions, while such discrepancies were absent for movements through 45°. No significant between-subjects effect was found for signed error (F(1,15) = 0.7, p = 0.432).

Figure 5b shows the response time results. There was a significant main effect of modality (F(2,28) = 22.6, p < 0.001) where observers responded slowest when answering based on vestibular-kinaesthetic information alone (11.0 s, s.e. 1.0) compared to the visual (9.3 s, s.e. 0.8) and combined (9.0 s, s.e. 0.8) conditions. There was also a significant main effect of plane (F(2,28) = 7.5, p = 0.002) where observers responded slowest when moved in the horizontal plane (10.4 s, s.e. 1.0) compared to the sagittal (9.4 s, s.e. 0.8) and the frontal (9.4 s, s.e. 0.9) planes. There was no significant main effect of segment angle, nor were there any significant interactions. A significant between-subjects effect was found for response time (F(1,14) = 129.1, p < 0.001).

Figure 5. Results. a) Signed error collapsed across modality for the planes tested. b) Response time collapsed across movement planes for the modalities tested. Error bars are ±1 s.e.m.
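The reported p values for effects with two numerator degrees of freedom can be checked against their F statistics without a statistics library, because the upper tail of an F(2, n) distribution has the closed form (1 + 2F/n)^(-n/2). The sketch below applies it to the values reported above; the function name is illustrative, the formula holds only for a numerator df of 2, and no sphericity correction is assumed.

```python
def p_from_f_df1_2(f_value, df2):
    """Upper-tail p value for an F(2, df2) statistic.

    Closed form valid ONLY when the numerator degrees of freedom equal 2.
    """
    return (1.0 + 2.0 * f_value / df2) ** (-df2 / 2.0)


# (label, F, denominator df) as reported in the Representative Results.
reported = [
    ("signed error: plane", 7.0, 30),
    ("signed error: plane x angle", 11.1, 30),
    ("signed error: modality x angle", 4.7, 30),
    ("response time: modality", 22.6, 28),
    ("response time: plane", 7.5, 28),
]

for label, f_value, df2 in reported:
    print(f"{label}: F(2,{df2}) = {f_value}, p = {p_from_f_df1_2(f_value, df2):.4f}")
```

The between-subjects effects have one numerator degree of freedom and are therefore outside the scope of this shortcut.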

Discussion

Path integration is well established as a means of determining where an observer originated, but it is prone to underestimates of the angle one has moved through 5. Our results show this for translational movement but only within the horizontal plane. In the vertical planes participants are more likely to overestimate the angle moved through or have no bias at all. These results may explain why estimates of elevation traversed over terrain tend to be exaggerated 10 and also why spatial navigation between different floors of a building is poor 11. These results may also be related to known asymmetries in the relative proportion of saccule to utricle receptors (~0.58) 12. Slower response time based on vestibular-kinaesthetic information alone compared to when visual information is present suggests that there may be additional delays associated with trying to determine one’s origin based on inertial cues alone, which may relate to recent studies showing that vestibular perception is slow compared to the other senses 13-16. Overall our results suggest that alternative strategies for determining one’s origin may be used when moving vertically, which may relate to the fact that humans experience movement mostly within the horizontal plane. Further, while sequential translations are rarely experienced, they do occur most often in the sagittal plane (where errors are minimal), such as when we walk toward and move on an escalator. While post-experiment interviews did not reflect different strategies among the planes, future experiments should explore this possibility. Experiments with trajectories using additional degrees of freedom, longer paths, with the body differently orientated relative to gravity, as well as using larger fields of view, which are now possible with the MPI CyberMotion Simulator, are planned to further investigate path integration performance in three dimensions.

Disclosures

The authors have nothing to disclose.

Acknowledgements

MPI Postdoc stipends to MB-C and TM; Korean NRF (R31-2008-000-10008-0) to HHB. Thanks to Karl Beykirch, Michael Kerger & Joachim Tesch for technical assistance and scientific discussion.

Materials

KUKA KR 500 Heavy Duty Industrial Robot

Dell 24″ 1920×1200 LCD display (effective field of view masked to 1200×1200)

Custom built joystick with UDP communication

References

1. Loomis, J. M., Klatzky, R. L., Golledge, R. G. Navigating without vision: Basic and applied research. Optometry and Vision Science. 78, 282-289 (2001).

2. Vidal, M., Amorim, M. A., Berthoz, A. Navigating in a virtual three-dimensional maze: how do egocentric and allocentric reference frames interact. Cognitive Brain Research. 19, 244-258 (2004).

3. Vidal, M., Amorim, M. A., McIntyre, J., Berthoz, A. The perception of visually presented yaw and pitch turns: Assessing the contribution of motion, static, and cognitive cues. Perception & Psychophysics. 68, 1338-1350 (2006).

4. Loomis, J. M., Klatzky, R. K., Philbeck, J. W., Golledge, R. Assessing auditory distance perception using perceptually directed action. Perception & Psychophysics. 60, 966-980 (1998).

5. Loomis, J. M., Klatzky, R. L., Golledge, R. G., Cicinelli, J. G., Pellegrino, J. W., Fry, P. A. Nonvisual navigation by blind and sighted: Assessment of path integration ability. Journal of Experimental Psychology General. 122, 73-91 (1993).

6. Bakker, N. H., Werkhoven, P. J., Passenier, P. O. The effects of proprioceptive and visual feedback on geographical orientation in virtual environments. Presence. 8, 36-53 (1999).

7. Kearns, M. J., Warren, W. H., Duchon, A. P., Tarr, M. J. Path integration from optic flow and body senses in a homing task. Perception. 31, 349-374 (2002).

8. Pollini, L., Innocenti, M., Petrone, A. Study of a novel motion platform for flight simulators using an anthropomorphic robot. , 2006-6360 (2006).

9. Teufel, H. J., Nusseck, H.-G., Beykirch, K. A., Butler, J. S., Kerger, M., Bulthoff, H. H. MPI motion simulator: development and analysis of a novel motion simulator. , 2007-6476 (2007).

10. Gärling, T., Böök, A., Lindberg, E., Arce, C. Is elevation encoded in cognitive maps. Journal of Environmental Psychology. 10, 341-351 (1990).

11. Montello, D. R., Pick, H. L. J. Integrating knowledge of vertically aligned large-scale spaces. Environment and Behaviour. 25, 457-483 (1993).

12. Correia, M. J., Hixson, W. C., Niven, J. I. On predictive equations for subjective judgments of vertical and horizon in a force field. Acta oto-laryngologica Supplementum. 230, 3 (1968).

13. Barnett-Cowan, M., Harris, L. R. Perceived timing of vestibular stimulation relative to touch, light and sound. Experimental Brain Research. 198, 221-231 (2009).

14. Barnett-Cowan, M., Harris, L. R. Temporal processing of active and passive head movement. Experimental Brain Research. 214, 27-35 (2011).

15. Sanders, M. C., Chang, N. N., Hiss, M. M., Uchanski, R. M., Hullar, T. E. Temporal binding of auditory and rotational stimuli. Experimental Brain Research. 210, 539-547 (2011).

16. Barnett-Cowan, M., Raeder, S. M., Bulthoff, H. H. Persistent perceptual delay for head movement onset relative to auditory stimuli of different duration and rise times. Experimental Brain Research. , (2012).