Automated Rat Single-Pellet Reaching with 3-Dimensional Reconstruction of Paw and Digit Trajectories

Summary

Rodent skilled reaching is commonly used to study dexterous skills, but requires significant time and effort to implement the task and analyze the behavior. We describe an automated version of skilled reaching with motion tracking and three-dimensional reconstruction of reach trajectories.

Abstract

Rodent skilled reaching is commonly used to study dexterous skills, but requires significant time and effort to implement the task and analyze the behavior. Several automated versions of skilled reaching have been developed recently. Here, we describe a version that automatically presents pellets to rats while recording high-definition video from multiple angles at high frame rates (300 fps). The paw and individual digits are tracked with DeepLabCut, a machine learning algorithm for markerless pose estimation. This system can also be synchronized with physiological recordings, or be used to trigger physiologic interventions (e.g., electrical or optical stimulation).

Introduction

Humans depend heavily on dexterous skill, defined as movements that require precisely coordinated multi-joint and digit movements. These skills are affected by a range of common central nervous system pathologies including structural lesions (e.g., stroke, tumor, demyelinating lesions), neurodegenerative disease (e.g., Parkinson’s disease), and functional abnormalities of motor circuits (e.g., dystonia). Understanding how dexterous skills are learned and implemented by central motor circuits therefore has the potential to improve quality of life for a large population. Furthermore, such understanding is likely to improve motor performance in healthy people by optimizing training and rehabilitation strategies.

Dissecting the neural circuits underlying dexterous skill in humans is limited by technological and ethical considerations, necessitating the use of animal models. Nonhuman primates are commonly used to study dexterous limb movements given the similarity of their motor systems and behavioral repertoire to humans1. However, non-human primates are expensive with long generation times, limiting numbers of study subjects and genetic interventions. Furthermore, while the neuroscientific toolbox applicable to nonhuman primates is larger than for humans, many recent technological advances are either unavailable or significantly limited in primates.

Rodent skilled reaching is a complementary approach to studying dexterous motor control. Rats and mice can be trained to reach for, grasp, and retrieve a sugar pellet in a stereotyped sequence of movements homologous to human reaching patterns2. Due to their relatively short generation time and lower housing costs, as well as their ability to acquire skilled reaching over days to weeks, it is possible to study large numbers of subjects during both learning and skill consolidation phases. The use of rodents, especially mice, also facilitates the use of powerful modern neuroscientific tools (e.g., optogenetics, calcium imaging, genetic models of disease) to study dexterous skill.

Rodent skilled reaching has been used for decades to study normal motor control and how it is affected by specific pathologies like stroke and Parkinson’s disease3. However, most versions of this task are labor and time-intensive, mitigating the benefits of studying rodents. Typical implementations involve placing rodents in a reaching chamber with a shelf in front of a narrow slot through which the rodent must reach. A researcher manually places sugar pellets on the shelf, waits for the animal to reach, and then places another one. Reaches are scored as successes or failures either in real time or by video review4. However, simply scoring reaches as successes or failures ignores rich kinematic data that can provide insight into how (as opposed to simply whether) reaching is impaired. This problem was addressed by implementing detailed review of reaching videos to identify and semi-quantitatively score reach submovements5. While this added some data regarding reach kinematics, it also significantly increased experimenter time and effort. Further, high levels of experimenter involvement can lead to inconsistencies in methodology and data analysis, even within the same lab.

More recently, several automated versions of skilled reaching have been developed. Some attach to the home cage6,7, eliminating the need to transfer animals. This both reduces stress on the animals and eliminates the need to acclimate them to a specialized reaching chamber. Other versions allow paw tracking so that kinematic changes under specific interventions can be studied8,9,10, or have mechanisms to automatically determine if pellets were knocked off the shelf11. Automated skilled reaching tasks are especially useful for high-intensity training, as may be required for rehabilitation after an injury12. Automated systems allow animals to perform large numbers of reaches over long periods of time without requiring intensive researcher involvement. Furthermore, systems that allow paw tracking and automated outcome scoring reduce researcher time spent performing data analysis.

We developed an automated rat skilled reaching system with several specialized features. First, by using a movable pedestal to bring the pellet into “reaching position” from below, we obtain a nearly unobstructed view of the forelimb. Second, a system of mirrors allows multiple simultaneous views of the reach with a single camera, allowing three-dimensional (3-D) reconstruction of reach trajectories using a high resolution, high-speed (300 fps) camera. With the recent development of robust machine learning algorithms for markerless motion tracking13, we now track not only the paw but individual knuckles to extract detailed reach and grasp kinematics. Third, a frame-grabber that performs simple video processing allows real-time identification of distinct reaching phases. This information is used to trigger video acquisition (continuous video acquisition is not practical due to file size), and can also be used to trigger interventions (e.g., optogenetics) at precise moments. Finally, individual video frames are triggered by transistor-transistor logic (TTL) pulses, allowing the video to be precisely synchronized with neural recordings (e.g., electrophysiology or photometry). Here, we describe how to build this system, train rats to perform the task, synchronize the apparatus with external systems, and reconstruct 3-D reach trajectories.

Protocol

All methods involving animal use described here have been approved by the Institutional Animal Care and Use Committee (IACUC) of the University of Michigan.

1. Setting up the reaching chamber

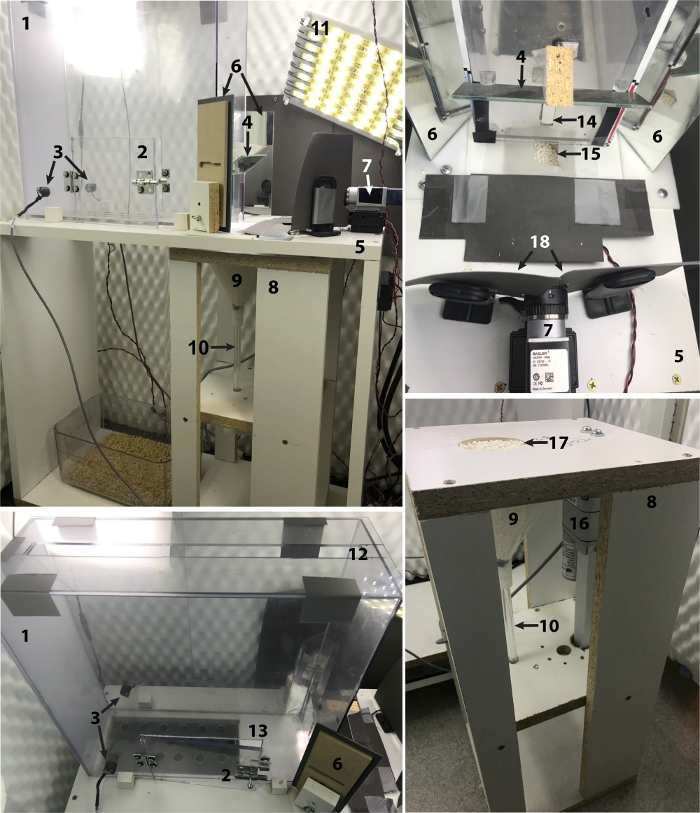

NOTE: See Ellens et al.14 for details and diagrams of the apparatus. Part numbers refer to Figure 1.

- Bond clear polycarbonate panels with acrylic cement to build the reaching chamber (15 cm wide by 40 cm long by 40 cm tall) (part #1). One side panel (part #2) has a hinged door (18 cm wide by 15 cm tall) with a lock. If the rat will be tethered to a cable, cut a slit (5 cm wide by 36 cm long) into the chamber ceiling to accommodate it (part #12). The floor panel has 18 holes (1.75 cm diameter) (part #13) cut into it and is not bonded to the rest of the chamber.

- Mount and align infrared sensors (part #3) in the side panels 4.5 cm from the back of the chamber and 3.8 cm from the floor. The behavioral software has an indicator (‘IR Back’) that is green when the infrared beam is unbroken and red when the beam is broken. Once the software is set up, this can be used to check sensor alignment.

- Mount a mirror (15 cm x 5 cm, part #4) 10 cm above the reaching slot (part #14). Angle the mirror so the pellet delivery rod is visible to the camera.

- Place the chamber on a sanitizable support box (59 cm wide by 67.3 cm long by 30.5 cm tall, part #5). The chamber rests above a hole in the support box (12 cm wide by 25 cm long) that allows litter to fall through the floor holes (part #13) and out of the reaching chamber. Cut a second hole (7 cm wide by 6 cm long, part #15) into the support box in front of the reaching slot, which allows a pellet delivery rod to bring pellets to the reaching slot.

- Mount two mirrors (8.5 cm wide x 18.5 cm tall, part #6) to the floor with magnets on either side of the chamber so that the long edge of the mirror is touching the side panel 3 cm from the front of the reaching box. Angle the mirrors so that the camera can see into the box and the area in front of the reaching slot where the pellet will be delivered.

- Mount the high-definition camera (part #7) 17 cm from the reaching slot, facing the box.

- Mount black paper (part #18) on either side of the camera so the background in the side mirrors is dark. This enhances contrast to improve real-time and off-line paw detection.

- Mount the linear actuator (part #16) on a sanitizable frame (25 cm wide by 55 cm long by 24 cm tall, part #8) with screws. The actuator is mounted upside down to prevent pellet dust from accumulating inside its position-sensing potentiometer.

- Insert a foam O-ring into the neck of the pellet reservoir (funnel) (part #9) to prevent dust from accumulating in the assembly. Mount the funnel below a hole (~6 cm diameter, part #17) in the top of the frame by slipping the edges of the funnel above three screws drilled into the underside of the frame top. Insert the guide tube (part #10) into the neck of the funnel.

- Attach the plastic T connector to the end of the steel rod of the actuator. Insert the tapered end of the pellet delivery rod into the top of the connector and the cupped end through the guide tube into the pellet reservoir.

- Place the linear actuator assembly under the skilled reaching chamber so that the pellet delivery rod can extend through the hole (part #15) in front of the reaching slot.

- Place the entire reaching apparatus in a wheeled cabinet (121 cm x 119 cm x 50 cm) ventilated with computer fans (the interior gets warm when well-lit) and lined with acoustic foam.

- Build five light panels (part #11) by adhering LED light strips on 20.3 cm by 25.4 cm support panels. Mount diffuser film over the light strips. Mount one light panel on the ceiling over the pellet delivery rod area. Mount the other four on the sides of the cabinets along the reaching chamber.

NOTE: It is important to illuminate the area around the reaching slot and pellet delivery rod for real-time paw identification.

2. Setting up the computer and hardware

- Install the FPGA frame grabber and digital extension cards per the manufacturer’s instructions (see the Table of Materials).

NOTE: We recommend at least 16 GB of RAM and an internal solid-state hard drive for data storage, as streaming the high-speed video requires significant buffering capacity.

- Install drivers for the high-definition camera and connect it to the FPGA framegrabber. The behavioral software must be running and interfacing with the camera to use the software associated with the camera.

NOTE: The included code (see supplementary files) accesses programmable registers in the camera and may not be compatible with other brands. We recommend recording at 300 frames per second (fps) or faster; at 150 fps we found that key changes in paw posture were often missed.

- Copy the included code (project) in “SR Automation_dig_ext_card_64bit” to the computer.

3. Behavioral training

- Prepare rats prior to training.

- House Long-Evans rats (male or female, ages 10–20 weeks) in groups of 2–3 per cage on a reverse light/dark cycle. Three days before training, place rats on food restriction to maintain body weight 10–20% below baseline.

- Handle rats for several minutes per day for at least 5 days. After handling, place 4–5 sugar pellets per rat in each home cage to introduce the novel food.
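The food-restriction target in the steps above is simple arithmetic: a weight 10–20% below baseline is 80–90% of the baseline weight. The following is a minimal sketch of that check; the function names and the 400 g baseline are hypothetical and are not part of the included software.

```python
def target_weight_range(baseline_g, min_loss=0.10, max_loss=0.20):
    """Return (lowest, highest) target body weight in grams.

    A 10-20% reduction from baseline means the rat should weigh
    between 80% and 90% of its baseline weight.
    """
    if baseline_g <= 0:
        raise ValueError("baseline weight must be positive")
    return baseline_g * (1.0 - max_loss), baseline_g * (1.0 - min_loss)


def in_target_range(current_g, baseline_g):
    """True if the current weight lies 10-20% below baseline."""
    low, high = target_weight_range(baseline_g)
    return low <= current_g <= high


# Hypothetical example: a rat with a 400 g baseline weight.
low, high = target_weight_range(400.0)
print(f"target range: {low:.0f}-{high:.0f} g")
```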

- Habituate the rat to the reaching chamber (1–3 days)

- Turn on the LED lights and place 3 sugar pellets in the front and back of the chamber.

- Place the rat in the chamber and allow the rat to explore for 15 min. Monitor if it eats the pellets. Repeat this phase until the rat eats all of the pellets off of the floor.

- Clean the chamber with ethanol between rats.

NOTE: Perform training and testing during the dark phase. Train rats at the same time daily.

- Train the rat to reach and observe paw preference (1–3 days).

- Turn on the lights and place the rat in the skilled reaching chamber.

- Using forceps, hold a pellet through the reaching slot at the front of the box (Figure 1, Figure 2). Allow the rat to eat 3 pellets from the forceps.

- The next time the rat tries to eat the pellet from the forceps, pull the pellet back. Eventually, the rat will attempt to reach for the pellet with its paw.

- Repeat this 11 times. The paw that the rat uses most out of the 11 attempts is the rat’s “paw preference”.

NOTE: An attempt is defined as the paw reaching out past the reaching slot. The rat does not need to successfully obtain and eat the pellet.
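Because 11 is an odd number, counting which paw was used on each attempt always yields a majority. A minimal sketch of that tally follows; the function name and the "left"/"right" labels are assumptions made for illustration.

```python
from collections import Counter


def paw_preference(attempts):
    """Return 'left' or 'right', whichever paw was used most often.

    `attempts` is a list with one entry ('left' or 'right') per attempt.
    With an odd number of attempts (11 in this protocol) a tie is impossible.
    """
    counts = Counter(attempts)
    unknown = set(counts) - {"left", "right"}
    if unknown:
        raise ValueError(f"unexpected labels: {sorted(unknown)}")
    if counts["left"] == counts["right"]:
        raise ValueError("tie: score an odd number of attempts")
    return "left" if counts["left"] > counts["right"] else "right"


# Hypothetical scoring of the 11 attempts for one rat.
scored = ["right"] * 7 + ["left"] * 4
print(paw_preference(scored))
```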

- Train the rat to reach to the pellet delivery rod (1–3 days)

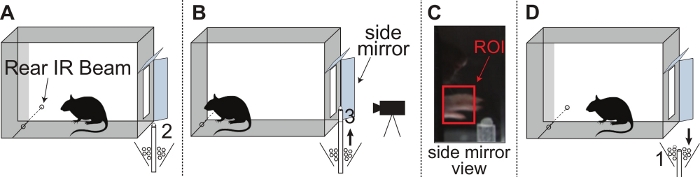

- Align the pellet delivery rod with the side of the reaching slot contralateral to the rat’s preferred paw (use a guide to ensure consistent placement 1.5 cm from the front of the reaching chamber). The top of the delivery rod should align with the bottom of the reaching slot (Figure 2B). Place a pellet on the delivery rod.

NOTE: Positioning the delivery rod opposite the rat’s preferred paw makes it difficult for the rat to obtain the pellet with its non-preferred paw. We have not had issues with rats using their non-preferred paw. However, in certain models (e.g., stroke) this may still occur and a restraint on the non-preferred reaching limb can be added.

- Bait the rat with a pellet held using forceps, but direct the rat towards the delivery rod so that its paw hits the pellet on the rod. If the rat knocks the pellet off of the rod, replace it. Some rats may not initially reach out far enough. In this case, move the pellet delivery rod closer to the reaching slot and then slowly move it further away as the rat improves.

- After about 5–15 baited reaches the rat will begin to reach for the pellet on the delivery rod spontaneously. Once the rat has attempted 10 reaches to the delivery rod without being baited, it can advance to the next phase.

- Train the rat to request a pellet (2–8 days).

NOTE: Although we have had 100% success training rats to reach for pellets, about 10% of rats fail to learn to request a pellet by moving to the back of the chamber.

- Position the pellet delivery rod based on the rat’s paw preference and set it to position 2 (Figure 2A). Set height positions of the pellet delivery rod using the actuator remote. Holding buttons 1 and 2 simultaneously moves the delivery rod up, while holding buttons 3 and 2 moves the delivery rod down. When the delivery rod is at the correct height, hold down the desired number until the light blinks red to set.

- Place the rat in the chamber and bait the rat to the back with a pellet. When the rat moves far enough to the back of the chamber that it would break the infrared beam if the automated version was running, move the pellet delivery rod to position 3 (Figure 2B).

- Wait for the rat to reach for the pellet and then move the pellet delivery rod back to position 2 (Figure 2A). Place a new pellet on the delivery rod if it was knocked off.

- Repeat these steps, gradually baiting the rat less and less, until the rat begins to: (i) move to the back to request a pellet without being baited, and (ii) immediately move to the front after requesting a pellet in the back. Once the rat has done this 10 times, it is ready for training on the automated task.

4. Training rats using the automated system

- Set up the automated system.

- Turn on the lights in the chamber and refill the pellet reservoir if needed.

- Position the pellet delivery rod according to the rat’s paw preference. Check that the actuator positions are set correctly (as in Figure 2A).

- Turn on the computer and open the Skilled Reaching program (SR_dig_extension_card_64bit_(HOST)_3.vi). Enter the rat ID number under Subject and select the paw preference from the Hand drop-down menu. Specify the Save Path for the videos.

- Set Session Time and Max Videos (the number of videos at which to end the session). The program will stop running at whichever limit is reached first.

- Set Pellet Lift Duration (duration of time that the delivery rod remains in position “3” after the rat requests a pellet). Enable or disable Early Reach Penalty (delivery rod resets to position “1” and then back to “2” if the rat reaches before requesting a pellet).
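The trial logic controlled by these settings (see also the Figure 2 legend) can be summarized as a small state machine over the three delivery rod positions. The sketch below is illustrative only: the event names ("beam_break", "reach", "lift_timeout") and function names are hypothetical, and the actual behavior is implemented in the included LabVIEW program.

```python
RESERVOIR, READY, RAISED = 1, 2, 3  # delivery rod positions 1, 2 and 3


def next_positions(position, event, early_reach_penalty=True):
    """Return the rod positions visited in response to one event."""
    if position == READY and event == "beam_break":
        return [RAISED]                      # rat requested a pellet
    if position == READY and event == "reach":
        # Early reach: optionally reset to position 1 and back to 2.
        return [RESERVOIR, READY] if early_reach_penalty else [READY]
    if position == RAISED and event == "lift_timeout":
        # Pellet Lift Duration elapsed: pick up a new pellet, then reset.
        return [RESERVOIR, READY]
    return [position]                        # all other events: no movement


def run_session(events, early_reach_penalty=True):
    """Replay events; return (final position, early reaches, videos)."""
    position, early_reaches, videos = READY, 0, 0
    for event in events:
        if event == "reach":
            if position == RAISED:
                videos += 1                  # reach after a request
            elif position == READY:
                early_reaches += 1           # reach before a request
        position = next_positions(position, event, early_reach_penalty)[-1]
    return position, early_reaches, videos


print(run_session(["reach", "beam_break", "reach", "lift_timeout"]))
```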

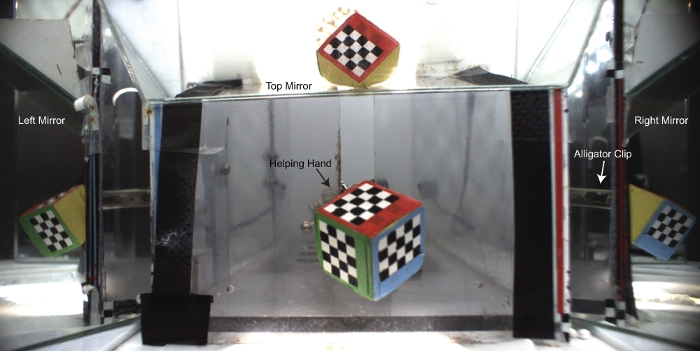

- Take calibration images. The 3-D trajectory reconstruction uses a computer vision toolbox to determine the appropriate transformation matrices, which requires identifying matched points in each view. To do this, use a small cube with checkerboard patterns on each side (Figure 3).

- Place the helping hand inside the reaching chamber and poke the alligator clip through the reaching slot. Hold the cube in front of the reaching slot with the alligator clip.

- Position the cube so that the red side appears in the top mirror, the green side in the left mirror, and the blue side in the right mirror. The entire face of each of the three sides should be visible in the mirrors (Figure 3).

- In the behavioral program, make sure ROI Threshold is set to a very large value (e.g., 60000). Click the run button (white arrow). Once the Camera Initialized button turns green, press START. Note that the video is being acquired.

- Click Cal Mode. Then, take an image by clicking Take Cal Image. The image directory path will now appear under “.png path” with the .png filename formatted as “GridCalibration_YYYYMMDD_img#.png”.

- Move the cube slightly, and take another image. Repeat again for a total of 3 images.

- Stop the program by clicking STOP and then the stop sign button. Remove the helping hand and cube from the box.

- Be careful not to bump anything in the behavioral chamber after calibration images have been taken that day. If anything moves, new calibration images need to be taken.

- Run the automated system.

NOTE: Determine “ROI Threshold” settings (described below) for each mirror before running rats for actual data acquisition. Once these settings have been determined, pre-set them before beginning the program and adjust during acquisition if necessary.

- Place the rat in the skilled reaching chamber. Click on the white arrow to run the program.

- Before clicking START, set the position of the ROI for paw detection by adjusting x-Offset (x-coordinate of the top-left corner of the ROI rectangle), y-Offset (y-coordinate of the top-left corner of the ROI), ROI Width and ROI Height.

- Position the ROI in the side mirror that shows the dorsum of the paw, directly in front of the reaching slot (Figure 2C). Be sure that the pellet delivery rod does not enter the ROI and that the ROI does not extend into the box to prevent the pellet or the rat’s fur from triggering a video when the rat is not reaching.

- Click START to begin the program.

- Adjust the “Low ROI Threshold” value until the “Live ROI Trigger Value” is oscillating between “0” and “1” (when the rat is not reaching). This value is the number of pixels within the ROI with intensity values in the threshold range.

- Set the ROI Threshold. Observe the Live ROI Trigger Value when the rat pokes its nose into the ROI and when the rat reaches for the pellet. Set the ROI threshold to be significantly greater than the “Live ROI Trigger Value” during nose pokes and lower than the “Live ROI Trigger Value” when the rat reaches. Adjust until videos are consistently triggered when the rat reaches but not when it pokes its nose through the slot.

NOTE: This assumes the paw is lighter colored than the nose; the adjustments would be reversed if the paw is darker than the nose.

- Monitor the first few trials to ensure that everything is working correctly. When a rat reaches before requesting a pellet (delivery rod in position “2”), the “Early Reaches” number increases. When a rat reaches after requesting a pellet (delivery rod in position “3”), the “Videos” number increases and a video is saved as a .bin file with the name “RXXXX_YYYYMMDD_HH_MM_SS_trial#”.

NOTE: The default is for videos to contain 300 frames (i.e., 1 s) prior to and 1000 frames after the trigger event (this is configurable in the software), which is long enough to contain the entire reach-to-grasp movement including paw retraction.

- Once the session time or max videos is reached, the program stops. Press the stop sign button.

- Clean the chamber with ethanol and repeat with another rat, or if done for the day proceed to converting videos.
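The paw-detection settings described above amount to counting the pixels inside the ROI whose intensity falls within a threshold range, then comparing that count with the ROI Threshold. The sketch below illustrates the idea in plain Python. It is not the FPGA implementation: the function names, the upper intensity bound, and the strict "greater than" comparison are assumptions.

```python
def roi_trigger_value(frame, x_offset, y_offset, width, height, low, high=255):
    """Count ROI pixels with intensity in [low, high].

    `frame` is a list of rows of grayscale intensities. The ROI is the
    rectangle whose top-left corner is (x_offset, y_offset).
    """
    count = 0
    for row in frame[y_offset:y_offset + height]:
        for value in row[x_offset:x_offset + width]:
            if low <= value <= high:
                count += 1
    return count


def should_trigger(trigger_value, roi_threshold):
    """Trigger video acquisition when enough pixels match (assumed '>')."""
    return trigger_value > roi_threshold


# Hypothetical 4 x 4 frame with a bright "paw" inside a 2 x 2 ROI.
frame = [
    [0,   0,   0, 0],
    [0, 200, 210, 0],
    [0, 220,  90, 0],
    [0,   0,   0, 0],
]
value = roi_trigger_value(frame, x_offset=1, y_offset=1, width=2, height=2, low=150)
print(value, should_trigger(value, roi_threshold=2))
```

In this picture, a nose poke produces a smaller count than a reach (if the paw is lighter than the nose), which is why the ROI Threshold is set between the two values.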

- Convert .bin files to .avi files.

NOTE: Compressing videos during acquisition causes dropped frames, so binary files are streamed to disk during acquisition (use a solid-state drive because of high data transfer rates). These binary files must be compressed off-line or the storage requirements are prohibitively large.

- Open the “bin2avi-color_1473R_noEncode.vi” program.

- Under “File Path Control” click the folder button to select the session (e.g., R0235_20180119a) you want to convert. Repeat for each session (up to six).

- Click the white arrow (run) and then “START” to begin. You can monitor the video compression in the “Overall Progress (%)” bar. Let the program run overnight.

- Before you begin training animals the next day, check that the videos have been converted and delete the .bin files so there is enough space to acquire new videos.
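When organizing converted videos, it can help to recover the rat ID, acquisition time, and trial number from the file name, and to convert frame indices into time relative to the trigger. The sketch below assumes that the “RXXXX_YYYYMMDD_HH_MM_SS_trial#” pattern ends in the literal word "trial" followed by digits (or digits alone), that frame indices start at 0, and that the default 300 pre-trigger frames at 300 fps are used. The function names are hypothetical.

```python
import re
from datetime import datetime
from pathlib import Path

NAME_PATTERN = re.compile(
    r"^(R\d{4})_(\d{8})_(\d{2})_(\d{2})_(\d{2})_(?:trial)?(\d+)$"
)


def parse_video_name(filename):
    """Split a video file name into rat ID, start time and trial number."""
    match = NAME_PATTERN.match(Path(filename).stem)
    if match is None:
        raise ValueError(f"unrecognized video name: {filename}")
    rat_id, date, hh, mm, ss, trial = match.groups()
    start = datetime.strptime(f"{date} {hh} {mm} {ss}", "%Y%m%d %H %M %S")
    return {"rat_id": rat_id, "start": start, "trial": int(trial)}


def frame_time_s(frame_index, pre_trigger_frames=300, fps=300.0):
    """Time of a frame in seconds relative to the trigger event."""
    return (frame_index - pre_trigger_frames) / fps


# Hypothetical file name following the assumed pattern.
info = parse_video_name("R0235_20180119_13_45_02_trial7.avi")
print(info["rat_id"], info["start"].isoformat(), info["trial"])
print(frame_time_s(0), frame_time_s(300))
```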

5. Analyzing videos with DeepLabCut

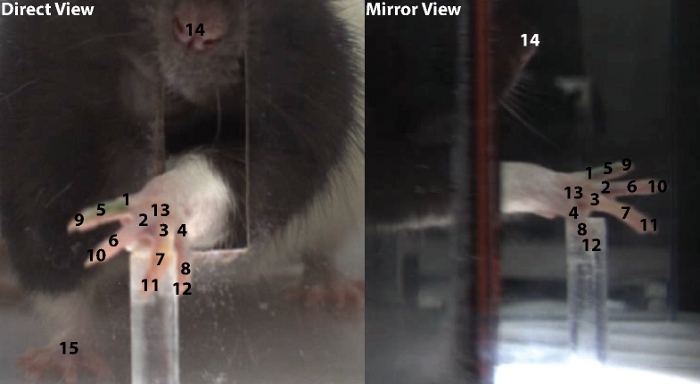

NOTE: Different networks are trained for each paw preference (right paw and left paw) and for each view (direct view and left mirror view for right pawed rats, direct view and right mirror view for left pawed rats). The top mirror view is not used for 3D reconstruction—just to detect when the nose enters the slot, which may be useful to trigger interventions (e.g., optogenetics). Each network is then used to analyze a set of videos cropped for the corresponding paw and view.

- Train the DeepLabCut networks (detailed instructions are provided in DeepLabCut documentation on https://github.com/AlexEMG/DeepLabCut).

- Create and configure a new project in DeepLabCut, a machine learning algorithm for markerless pose estimation13.

- Use the program to extract frames from the skilled reaching videos and crop images to the view to include (direct or mirror view) in the program interface. Crop frames large enough so that the rat and both front paws are visible.

NOTE: Networks typically require 100–150 training frames. More training frames are needed when the paw is inside as compared to outside the chamber because of lighting. Tighter cropping reduces processing time, but be careful that the cropped regions are large enough to detect the paw’s full trajectory for each rat. It should be wide enough for the rat’s entire body to fit in the frame (direct view), and to see as far back into the chamber as possible and in front of the delivery rod (mirror view).

- Use the program GUI to label body parts. Label 16 points in each frame: 4 metacarpophalangeal (MCP) joints, 4 proximal interphalangeal (PIP) joints, 4 digit tips, the dorsum of the reaching paw, the nose, the dorsum of the non-reaching paw, and the pellet (Figure 4).

- Follow the DeepLabCut (abbreviated as DLC henceforth) instructions to create the training dataset, train the network, and evaluate the trained network.

- Analyze videos and refine the network.

- Before analyzing all videos with a newly-trained network, analyze 10 videos to evaluate the network’s performance. If there are consistent errors in certain poses, extract additional training frames containing those poses and retrain the network.

- When analyzing videos, make sure to output .csv files, which will be fed into the code for 3D trajectory reconstruction.
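For readers who want to inspect the DLC output before 3-D reconstruction, the .csv files for single-animal projects begin with three header rows (scorer, bodyparts, coords), followed by one row per frame containing x, y, and likelihood for each body part. The sketch below reads that layout with the Python standard library. The function name and the body part names in the example are illustrative, and this is not the reader used by the reconstruction code.

```python
import csv


def read_dlc_csv(lines):
    """Parse DLC-style CSV text into {bodypart: [(x, y, likelihood), ...]}.

    `lines` is any iterable of CSV lines, e.g. an open file handle.
    """
    reader = csv.reader(lines)
    next(reader)                   # row 1: scorer (network) name, unused
    bodyparts = next(reader)[1:]   # row 2: body part for each column
    coords = next(reader)[1:]      # row 3: x / y / likelihood per column
    tracks = {}
    for row in reader:
        if not row:
            continue               # skip blank lines
        frame = {}
        for part, coord, value in zip(bodyparts, coords, row[1:]):
            frame.setdefault(part, {})[coord] = float(value)
        for part, point in frame.items():
            tracks.setdefault(part, []).append(
                (point["x"], point["y"], point["likelihood"])
            )
    return tracks


# Hypothetical two-frame example with two labeled points.
example = [
    "scorer,net,net,net,net,net,net",
    "bodyparts,nose,nose,nose,pellet,pellet,pellet",
    "coords,x,y,likelihood,x,y,likelihood",
    "0,10.5,20.0,0.99,55.0,60.0,0.95",
    "1,11.0,21.5,0.40,55.0,60.0,0.97",
]
tracks = read_dlc_csv(example)
print(tracks["nose"])
```

To read a file from disk, pass an open handle, e.g. `with open(path, newline="") as f: tracks = read_dlc_csv(f)`. Points with low likelihood values are candidates for additional training frames when refining the network.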

6. Box calibration

NOTE: These instructions are used to determine the transformation matrices that convert points identified in the direct and mirror views into 3-D coordinates. For the most up-to-date version of the boxCalibration package, including step-by-step instructions for its use, see the Leventhal Lab GitHub: https://github.com/LeventhalLab/boxCalibration.

- Collect all calibration images in the same folder.

- Using ImageJ/Fiji, manually mark the checkerboard points for each calibration image. Save this image as “GridCalibration_YYYYMMDD_#.tif” where ‘YYYYMMDD’ is the date the calibration image corresponds to and ‘#’ is the image number for that date.

- Use the measurement function in ImageJ (in the toolbar, select Analyze | Measure). This will display a table containing coordinates for all points marked. Save this file with the name “GridCalibration_YYYYMMDD_#.csv”, where the date and image number are the same as the corresponding .tif file.

- From the boxCalibration package, open the ‘setParams.m’ file. This file contains all required variables and their description. Edit variables as needed to fit the project’s specifications.

- Run the calibrateBoxes function. Several prompts will appear in the command window. The first prompt asks whether to analyze all images in the folder. Typing Y will end the prompts, and all images for all dates will be analyzed. Typing N will prompt the user to enter the dates to analyze.

NOTE: Two new directories will be created in the calibration images folder: ‘markedImages’ contains .png files with the user-defined checkerboard marks on the calibration image. The ‘boxCalibration’ folder contains .mat files with the box calibration parameters.

- Run the checkBoxCalibration function. This will create a new folder, ‘checkCalibration’, in the ‘boxCalibration’ folder. Each date will have a subfolder containing the images and several .fig files, which are used to verify that box calibration was completed accurately.
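Because each marked .tif image must have a matching .csv measurement file with the same date and image number, it can be useful to check the folder contents before running the calibration functions. The following sketch groups file names by date and image number and reports marked images that lack measurements. It is a hypothetical helper, not part of the boxCalibration package.

```python
import re
from collections import defaultdict

CAL_PATTERN = re.compile(r"^GridCalibration_(\d{8})_(\d+)\.(tif|csv)$")


def group_calibration_files(filenames):
    """Map date -> image number -> set of extensions found."""
    found = defaultdict(lambda: defaultdict(set))
    for name in filenames:
        match = CAL_PATTERN.match(name)
        if match:                      # ignore files that do not match
            date, number, ext = match.groups()
            found[date][int(number)].add(ext)
    return found


def missing_measurements(filenames):
    """Return sorted (date, number) pairs with a .tif but no .csv."""
    found = group_calibration_files(filenames)
    return sorted(
        (date, number)
        for date, images in found.items()
        for number, exts in images.items()
        if "tif" in exts and "csv" not in exts
    )


# Hypothetical folder listing.
names = [
    "GridCalibration_20180119_1.tif",
    "GridCalibration_20180119_1.csv",
    "GridCalibration_20180119_2.tif",
    "notes.txt",
]
print(missing_measurements(names))
```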

7. Reconstructing 3D trajectories

- Assemble the .csv files containing learning program output into the directory structure described in the reconstruct3Dtrajectories script.

- Run reconstruct3Dtrajectories. This script will search the directory structure and match direct/mirror points based on their names in the learning program (it is important to use the same body part names in both views).

- Run calculateKinematics. This script extracts simple kinematic features from the 3-D trajectory reconstructions, which can be tailored to specific needs.
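As an illustration of the kind of simple kinematic features that can be derived from a reconstructed trajectory, the sketch below computes frame-to-frame speed and the closest approach to the pellet, which is placed at the origin as in Figure 7. The function names, the millimeter units, and the choice of features are assumptions; they do not describe what calculateKinematics itself computes.

```python
import math


def frame_speeds(trajectory, fps=300.0):
    """Speed between consecutive frames (distance units per second).

    `trajectory` is a list of (x, y, z) points, one per video frame.
    """
    return [math.dist(a, b) * fps for a, b in zip(trajectory, trajectory[1:])]


def closest_approach(trajectory, target=(0.0, 0.0, 0.0)):
    """Return (frame index, distance) of the point nearest the target."""
    distances = [math.dist(point, target) for point in trajectory]
    frame = min(range(len(distances)), key=distances.__getitem__)
    return frame, distances[frame]


# Hypothetical digit-tip trajectory in mm, pellet at the origin.
tip = [(0.0, 0.0, -10.0), (0.0, 0.0, -7.0), (0.0, 0.0, -1.0), (0.0, 0.0, -4.0)]
print(frame_speeds(tip))
print(closest_approach(tip))
```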

NOTE: The software estimates the position of occluded body parts based on their neighbors and their location in the complementary view (e.g., the location of a body part in the direct camera view constrains its possible locations in the mirror view). For times when the paw is occluded in the mirror view as it passes through the slot, paw coordinates are interpolated based on neighboring frames.
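The interpolation across occluded frames mentioned in this note can be pictured with the following sketch, which fills missing points by linear interpolation between the nearest tracked frames on either side. Linear interpolation is an assumption made here for illustration; the reconstruction code may use a different scheme. The function name is hypothetical.

```python
def interpolate_gaps(points):
    """Fill None entries by linear interpolation between tracked neighbors.

    `points` is a list with one coordinate tuple per frame, or None where
    the body part was occluded. Gaps at the start or end are left as None
    because they have a tracked neighbor on one side only.
    """
    filled = list(points)
    known = [i for i, p in enumerate(points) if p is not None]
    for left, right in zip(known, known[1:]):
        span = right - left
        for i in range(left + 1, right):
            t = (i - left) / span          # fraction of the way across the gap
            filled[i] = tuple(
                a + t * (b - a) for a, b in zip(points[left], points[right])
            )
    return filled


# Hypothetical track with three occluded frames and a trailing gap.
track = [(0.0, 0.0), None, None, None, (4.0, 8.0), None]
print(interpolate_gaps(track))
```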

Representative Results

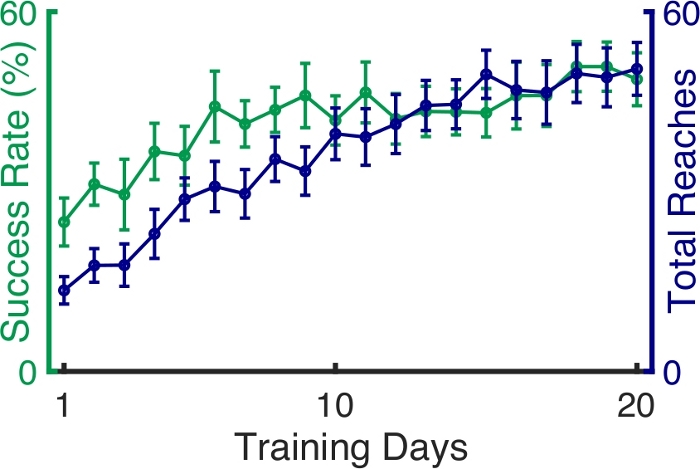

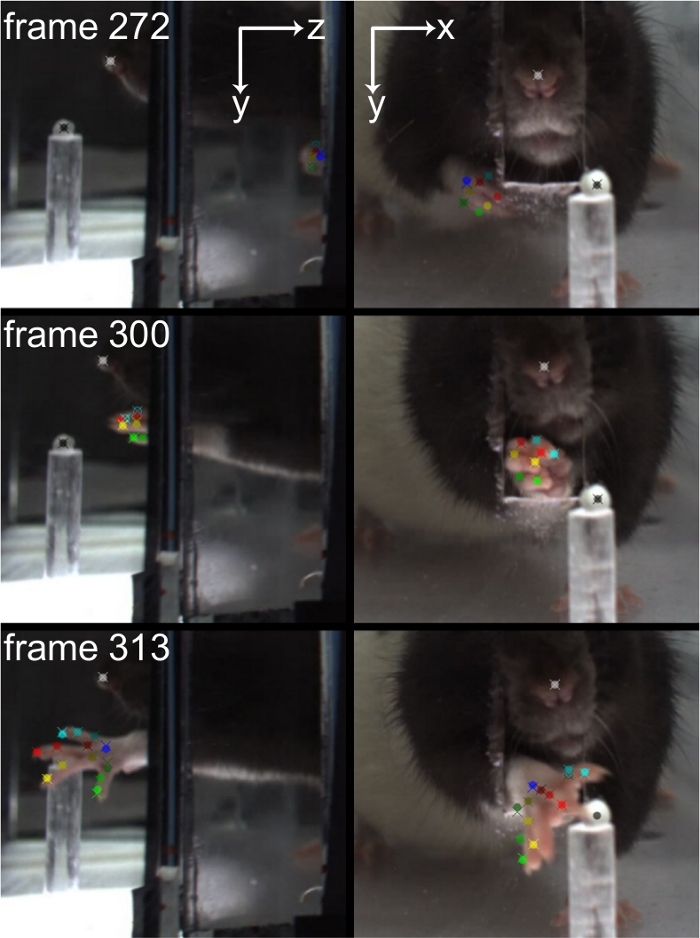

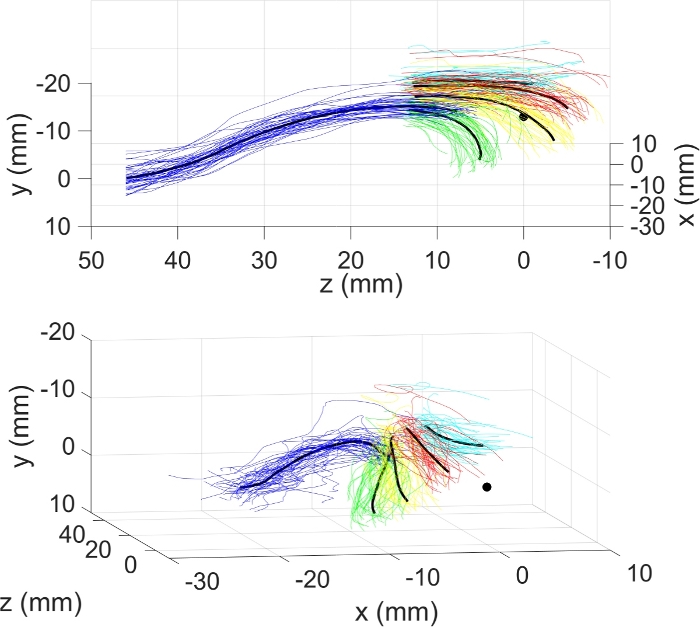

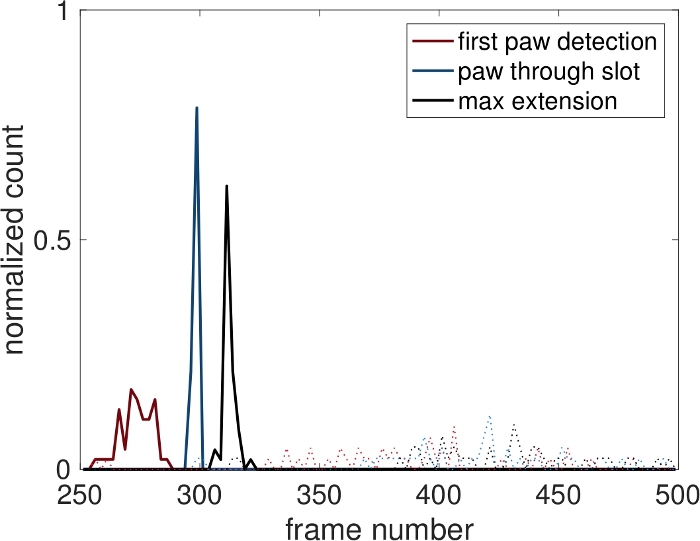

Rats acquire the skilled reaching task quickly once acclimated to the apparatus, with performance plateauing in terms of both numbers of reaches and accuracy over 1–2 weeks (Figure 5). Figure 6 shows sample video frames indicating structures identified by DeepLabCut, and Figure 7 shows superimposed individual reach trajectories from a single session. Finally, in Figure 8, we illustrate what happens if the paw detection trigger (steps 4.3.4–4.3.6) is not accurately set. There is significant variability in the frame at which the paw breaches the reaching slot. This is not a major problem in terms of analyzing reach kinematics. However, it could lead to variability in when interventions (e.g., optogenetics) are triggered during reaching movements.

Figure 1: The skilled reaching chamber.

Clockwise from top left are a side view, a view from the front and above, the frame in which the actuator is mounted (see step 1.8), and a view from the side and above. The skilled reaching chamber (1) has a door (2) cut into one side to allow rats to be placed into and taken out of the chamber. A slit is cut into the ceiling panel (12) to allow the animal to be tethered and holes are cut into the floor panel (13) to allow litter to fall through. Two infrared sensors (3) are aligned on either side of the back of the chamber. A mirror (4) is mounted above the reaching slot (14) at the front of the reaching chamber and two other mirrors (6) are mounted on either side of the reaching chamber. The skilled reaching chamber sits atop a support box (5). The high-definition camera (7) is mounted onto the support box in front of the reaching slot. Two pieces of black paper (18) are mounted on either side of the camera (7) to enhance contrast of the paw in the side mirrors (6). Below the support box is a frame (8) that supports the linear actuator (16) and pellet reservoir (9). A guide tube encasing the pellet delivery rod (10) is fit into the pellet reservoir and controlled by the linear actuator. Holes are cut into the actuator frame (17) and support box (15) above the pellet reservoir to allow the pellet delivery rod to move up and down freely. The box is illuminated with light panels (11) mounted to the cabinet walls and ceiling.

Figure 2: Single trial structure.

(A) A trial begins with the pellet delivery rod (controlled by a linear actuator) positioned at the “ready” position (position 2 – midway between floor and bottom of reaching slot). (B) The rat moves to the back of the chamber to break the infrared (IR) beam, which causes the pellet delivery rod to rise to position 3 (aligned with bottom of reaching slot). (C) The rat reaches through the reaching slot to grasp the pellet. Reaches are detected in real-time using an FPGA framegrabber that detects pixel intensity changes within a region of interest (ROI) in the side mirror view directly in front of the slot. When enough pixels match the user defined “paw intensity”, video acquisition is triggered. (D) Two seconds later the pellet is lowered to position 1, picking up a new pellet from the pellet reservoir before resetting to position 2.

Figure 3: Sample calibration image.

A helping hand is placed inside the skilled reaching chamber. An alligator clip pokes through the reaching slot to hold the calibration cube in place outside of the reaching chamber. The three checkerboard patterns are entirely visible in the direct view and the corresponding mirror views (green: left; red: top; and blue: right).

Figure 4: Learning algorithm marker positions.

Left column: direct view; right column: mirror view. Markers 1–4: MCP joints; 5–8: PIP joints; 9–12: digit tips; 13: dorsum of reaching paw; 14: nose; 15: dorsum of non-reaching paw. Marker 16 (pellet) is not visible.

Figure 5: Rats rapidly acquire the automated skilled reaching task.

Average first reach success rate (green, left axis) and average total trials (blue, right axis) over the first 20 training sessions in the automated skilled reaching task (n = 19). Each training session lasted 30 min. Error bars represent standard error of the mean.

Figure 6: Sample video frames marked by the learning program.

Left column: mirror view; right column: direct view. Cyan, red, yellow, and green dots mark digits 1–4, respectively. The white dot marks the nose, the black dot marks the pellet. Filled circles were identified by DeepLabCut. Open circles mark object positions estimated by where that object appeared in the opposite view. X’s are points re-projected onto the video frames from the estimates of their 3-D locations. This video was triggered at frame 300, as the paw passed through the slot. Top images are from the first frame when the reaching paw was detected. Bottom images are from the frame at which the second digit was maximally extended. These frames were identified by the image processing software.
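The re-projection marked by the X’s can be sketched with an idealized pinhole camera. This Python example is an illustration only: it assumes a camera at the origin looking along +z with no lens distortion, and the intrinsic parameters (`fx`, `fy`, `cx`, `cy`) and point coordinates are hypothetical. The reconstruction software described here is written in a commercial package and is not reproduced by this sketch.

```python
from math import hypot


def project(point_3d, fx, fy, cx, cy):
    """Project a 3-D point (camera coordinates) to pixel coordinates."""
    x, y, z = point_3d
    return (fx * x / z + cx, fy * y / z + cy)


def reprojection_error(point_3d, observed_px, fx, fy, cx, cy):
    """Pixel distance between the re-projected point and the tracked point."""
    u, v = project(point_3d, fx, fy, cx, cy)
    return hypot(u - observed_px[0], v - observed_px[1])


# Hypothetical intrinsics (pixels) and a hypothetical 3-D estimate (mm).
fx = fy = 1000.0
cx, cy = 1020.0, 540.0
digit_tip_3d = (10.0, -5.0, 200.0)
tracked_px = (1073.0, 519.0)  # where the tracker placed the marker

print(project(digit_tip_3d, fx, fy, cx, cy))                         # (1070.0, 515.0)
print(reprojection_error(digit_tip_3d, tracked_px, fx, fy, cx, cy))  # 5.0
```

A small re-projection error indicates that the 3-D estimate is consistent with the 2-D marker position in that view; a large one flags a likely tracking or triangulation error.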

Figure 7: Sample 3-D trajectories from a single test session.

Both axes show the same data, but rotated for ease of presentation. Black lines indicate mean trajectories. Cyan, red, yellow, and green are individual trajectories of the tips of digits 1–4, respectively. Blue lines indicate the trajectory of the paw dorsum. The large black dot indicates the sugar pellet located at (0,0,0). This represents only initial paw advancement for ease of presentation (including retractions and multiple reaches makes the figure almost uninterpretable). However, all kinematic data are available for analysis.

Figure 8: Histograms of frame numbers in which specific reaching phases were identified for 2 different sessions.

In one session (dark solid lines), the ROI trigger values were carefully set, and the paw was identified breaching the slot within the same few frames in each trial. In the other session (light dashed lines), the nose was often misidentified as the reaching paw, triggering video acquisition prematurely. Note that this would have little effect on off-line kinematic analyses unless the full reach was not captured. However, potential interventions triggered by the reaching paw would be poorly timed.

Discussion

Rodent skilled reaching has become a standard tool to study motor system physiology and pathophysiology. We have described how to implement an automated rat skilled reaching task that allows: training and testing with minimal supervision, 3-D paw and digit trajectory reconstruction (during reaching, grasping, and paw retraction), real-time identification of the paw during reaching, and synchronization with external electronics. It is well-suited to correlate forelimb kinematics with physiology or to perform precisely-timed interventions during reaching movements.

Since we initially reported this design14, our training efficiency has improved so that almost 100% of rats acquire the task. We have identified several important factors that lead to consistently successful training. As with many tasks motivated by hunger, rats should be carefully monitored during caloric restriction to maintain 80–90% of their anticipated body weight. Handling the rats daily, even prior to training, is critically important to acclimate them to humans. Rats should be trained to reach before learning to return to the back of the chamber to request pellets—this greatly reduces training time and improves the likelihood that rats acquire the task. Finally, when transferred between seemingly identical chambers, rats often perform fewer reaches. This was especially true when chambers were used for the first time. We speculate that this is due to differences in scent between chambers. Whatever the reason, it is important to maintain as stable a training environment as possible, or acclimate the rats to all boxes in which testing might occur.

The apparatus described here is readily adaptable to specific needs. We described a rat version of the task, but have also implemented a mouse version (though it is difficult to identify individual digits with DeepLabCut in mice). Because individual video frames are marked with TTL pulses, videos can be synchronized with any recording system that accepts digital or analog inputs (e.g., electrophysiology amplifiers or photometry). Finally, head-fixed mice readily perform skilled reaching9, and a head-fixed version of this task could be implemented for 2-photon imaging or juxtacellular recordings. Importantly, we have only used this system with Long-Evans rats, whose nose and paw fur (black and white, respectively) differ enough in color that nose pokes are not mistaken for reaches (with appropriate ROI settings, Figure 8). This may be a problem for rats with similar coloration on their paws and noses (e.g., albino rats), but could be solved by coloring the paw with ink, nail polish, or tattoos.
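Because each video frame is marked by a TTL pulse, frame times can be recovered on the recording system by detecting rising edges on the digitized TTL channel. The following Python sketch shows one way to do this; the function name, the 2.5 V threshold, the 1 kHz sampling rate, and the sample values are hypothetical and would need to match the actual recording hardware.

```python
def rising_edge_times(samples, sample_rate_hz, threshold=2.5):
    """Return times (s) at which the signal crosses the threshold from below.

    samples: digitized TTL channel (volts), one value per sample
    sample_rate_hz: sampling rate of the recording system (hypothetical here)
    threshold: voltage separating logic low from logic high (hypothetical)
    """
    times = []
    for i in range(1, len(samples)):
        if samples[i - 1] < threshold <= samples[i]:
            times.append(i / sample_rate_hz)
    return times


# Toy TTL trace sampled at 1 kHz with three pulses (one per video frame).
ttl = [0, 0, 5, 5, 0, 0, 5, 5, 0, 0, 5, 0]
frame_times = rising_edge_times(ttl, sample_rate_hz=1000)
print(len(frame_times))  # 3
```

The k-th entry of `frame_times` then gives the time of video frame k on the recording system's clock, so kinematic events identified in the video can be aligned with physiological signals.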

The presented version of skilled reaching has several distinct features, which may be advantageous depending on the specific application. The relatively complicated hardware and need for real-time video processing make it poorly suited to home cage training6,7. On the other hand, home cage training makes it difficult to acquire high-speed, high-resolution video from multiple angles, or to tether the animals for physiologic recordings/interventions. The data acquisition cards and requirement for one computer per chamber make each chamber relatively expensive, and the videos require significant digital storage space (~200 MB per 4 s video). We have implemented a simpler microcontroller-based version costing about $300 per chamber, though it lacks both real-time feedback and the ability to synchronize with external devices. These boxes are essentially identical to those described here, but use a commercial camcorder and do not require a computer except to program the microcontroller (details of this set-up and associated software are available upon request). Real-time video processing on the FPGA frame-grabber is especially useful; we find that it more robustly identifies reaches in real time than infrared beams or proximity sensors (which may mistake the rat’s snout for the reaching paw). Furthermore, multiple triggers may be used to identify the paw at different reaching phases (e.g., approach to the slot, paw lift, extension through slot). This not only allows reproducible, precisely-timed neuronal perturbations, but can also be used to trigger storage of short high-speed videos.
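To make the storage requirement concrete, the short Python sketch below works through the arithmetic using the approximate figure of 200 MB per 4 s video and the 300 fps frame rate. The number of videos per session (50) is a hypothetical value chosen only for illustration.

```python
MB_PER_VIDEO = 200      # approximate size of one 4 s video (from the text)
VIDEO_SECONDS = 4
FRAMES_PER_SECOND = 300


def session_storage_gb(n_videos, mb_per_video=MB_PER_VIDEO):
    """Approximate storage (GB, using 1 GB = 1000 MB) for n_videos."""
    return n_videos * mb_per_video / 1000


frames_per_video = FRAMES_PER_SECOND * VIDEO_SECONDS
mb_per_frame = MB_PER_VIDEO / frames_per_video

print(frames_per_video)            # 1200
print(f"{mb_per_frame:.2f}")       # 0.17
print(session_storage_gb(50))      # 10.0 (hypothetical 50 videos per session)
```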

While our automated version of skilled reaching has several advantages for specific applications, it also has some limitations. As noted above, the high-speed, high-resolution camera is moderately expensive, but it is necessary to include mirror and direct views in a single image and capture the very quick reaching movement. Using one camera eliminates the need to synchronize and record multiple video streams simultaneously, or purchase multiple cameras and frame grabbers. The paw in the reflected view is effectively about twice as far from the camera (by ray-tracing) as in the direct view. This means that one of the views is always out of focus, though DLC still robustly identifies individual digits in both views (Figure 4, Figure 6). We used a color camera because, prior to the availability of DLC, we tried color coding the digits with tattoos. While it is possible that this learning-based program would be equally effective on black and white (or lower resolution) video, we can only verify the effectiveness of the hardware described here. Finally, our analysis code (other than DLC) is written primarily in a commercial software package (see Table of Materials) but should be straightforward to adapt to open source programming languages (e.g., Python) as needed.
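The ray-tracing argument for the longer optical path in the mirror view can be sketched as follows: the path from the camera to the mirror to the paw has the same length as the straight line from the camera to the paw's mirror image. The Python below reflects a point across a planar mirror and compares the two distances. The geometry (camera at the origin, paw 200 mm away, mirror plane at x = 150 mm) is entirely hypothetical and does not reproduce the dimensions of the chamber described here.

```python
from math import dist


def reflect_across_plane(point, plane_point, unit_normal):
    """Mirror image of a point across a plane given by a point and unit normal."""
    signed_distance = sum(
        (p - q) * n for p, q, n in zip(point, plane_point, unit_normal)
    )
    return tuple(p - 2 * signed_distance * n for p, n in zip(point, unit_normal))


# Hypothetical geometry (mm).
camera = (0.0, 0.0, 0.0)
paw = (0.0, 0.0, 200.0)
mirror_point = (150.0, 0.0, 0.0)   # any point on the mirror plane
mirror_normal = (1.0, 0.0, 0.0)    # unit normal of the mirror plane

virtual_paw = reflect_across_plane(paw, mirror_point, mirror_normal)
direct_distance = dist(camera, paw)
mirror_path_length = dist(camera, virtual_paw)

print(virtual_paw)                                   # (300.0, 0.0, 200.0)
print(f"{mirror_path_length / direct_distance:.2f}")  # 1.80
```

With these made-up dimensions the mirror path is about 1.8 times the direct distance, which illustrates why a single focus setting cannot be sharp for both views.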

There are several ways in which we are working to improve this system. Currently, the mirror view is partially occluded by the front panel. We have therefore been exploring ways to obtain multiple simultaneous views of the paw while minimizing obstructions. Another important development will be to automatically score the reaches (the system can track kinematics, but a human must still score successful versus failed reaches). Methods have been developed to determine whether pellets were knocked off the shelf/pedestal, but cannot determine whether the pellet was grasped or missed entirely11. By tracking the pellet with DLC, we are exploring algorithms to determine the number of reaches per trial, as well as whether the pellet was grasped, knocked off the pedestal, or missed entirely. Along those lines, we are also working to fully automate the workflow from data collection through video conversion, DLC processing, and automatic scoring. Ultimately, we envision a system in which multiple experiments can be run on one day, and by the next morning the full forelimb kinematics and reaching scores for each experiment have been determined.

Disclosures

The authors have nothing to disclose.

Acknowledgements

The authors would like to thank Karunesh Ganguly and his laboratory for advice on the skilled reaching task, and Alexander and Mackenzie Mathis for their help in adapting DeepLabCut. This work was supported by the National Institute of Neurological Disorders and Stroke (grant number K08-NS072183) and the University of Michigan.

Materials

| Name | Company | Catalog Number | Comments |
|---|---|---|---|
| clear polycarbonate panels | TAP Plastics | cut to order (see box design) | |
| infrared source/detector | Med Associates | ENV-253SD | 30" range |
| camera | Basler | acA2000-340kc | 2046 x 1086 CMV2000 340 fps Color Camera Link |
| camera lens | Megapixel (computar) | M0814-MP2 | 2/3" 8mm f1.4 w/ locking Iris & Focus |
| camera cables | Basler | #2000031083 | Cable PoCL Camera Link SDR/MDR Full, 5 m – Data Cables |
| mirrors | Amazon | | |
| linear actuator | Concentrics | LACT6P | Linear Actuator 6" Stroke (nominal), 110 Lb Force, 12 VDC, with Potentiometer |
| pellet reservoir/funnel | Amico (Amazon) | a12073000ux0890 | 6" funnel |
| guide tube | ePlastics | ACREXT.500X.250 | 1/2" OD x 1/4" ID Clear. Extruded Plexiglass Acrylic Tube x 6ft long |
| pellet delivery rod | ePlastics | ACRCAR.250 | 0.250" DIA. Cast Acrylic Rod (2' length) |
| plastic T connector | United States Plastic Corp | #62065 | 3/8" x 3/8" x 3/8" Hose ID Black HDPE Tee |
| LED lights | Lighting EVER | 4100066-DW-F | 12V Flexible Waterproof LED Light Strip, LED Tape, Daylight White, Super Bright 300 Units 5050 LEDS, 16.4Ft 5 M Spool |
| Light backing | ePlastics | ACTLNAT0.125X12X36 | 0.125" x 12" x 36" Natural Acetal Sheet |
| Light diffuser films | inventables | 23114-01 | .007×8.5×11", matte two sides |
| cabinet and custom frame materials | various (Home Depot, etc.) | 3/4" fiber board (see protocol for dimensions of each structure) | |
| acoustic foam | Acoustic First | FireFlex Wedge Acoustical Foam (2" Thick) | |
| ventilation fans | Cooler Master (Amazon) | B002R9RBO0 | Rifle Bearing 80mm Silent Cooling Fan for Computer Cases and CPU Coolers |
| cabinet door hinges | Everbilt (Home Depot) | #14609 | continuous steel hinge (1.4" x 48") |
| cabinet wheels | Everbilt (Home Depot) | #49509 | Soft rubber swivel plate caster with 90 lb. load rating and side brake |
| cabinet door handle | Everbilt (Home Depot) | #15094 | White light duty door pull (4.5") |
| computer | Hewlett Packard | Z620 | HP Z620 Desktop Workstation |
| Camera Link Frame Grabber | National Instruments | #781585-01 | PCIe-1473 Virtex-5 LX50 Camera Link – Full |
| Multifunction RIO Board | National Instruments | #781100-01 | PCIe-17841R |
| Analog RIO Board Cable | National Instruments | SCH68M-68F-RMIO | Multifunction Cable |
| Digital RIO Board Cable | National Instruments | #191667-01 | SHC68-68-RDIO Digital Cable for R Series |
| Analog Terminal Block | National Instruments | #782536-01 | SCB-68A Noise Rejecting, Shielded I/O Connector Block |
| Digital Terminal Block | National Instruments | #782536-01 | SCB-68A Noise Rejecting, Shielded I/O Connector Block |
| 24 position relay rack | Measurement Computing Corp. | SSR-RACK24 | Solid state relay backplane (Gordos/OPTO-22 type relays), 24-channel |
| DC switch | Measurement Computing Corp. | SSR-ODC-05 | Solid state relay module, single, DC switch, 3 to 60 VDC @ 3.5 A |
| DC Sense | Measurement Computing Corp. | SSR-IDC-05 | solid state relay module, single, DC sense, 3 to 32 VDC |
| DC Power Supply | BK Precision | 1671A | Triple-Output 30V, 5A Digital Display DC Power Supply |
| sugar pellets | Bio Serv | F0023 | Dustless Precision Pellets, 45 mg, Sucrose (Unflavored) |
| LabVIEW | National Instruments | LabVIEW 2014 SP1, 64 and 32-bit versions | 64-bit LabVIEW is required to access enough memory to stream videos, but FPGA coding must be performed in 32-bit LabVIEW |
| MATLAB | Mathworks | Matlab R2019a | box calibration and trajectory reconstruction software is written in Matlab and requires the Computer Vision toolbox |

References

1. Chen, J., et al. An automated behavioral apparatus to combine parameterized reaching and grasping movements in 3D space. Journal of Neuroscience Methods. (2018).

2. Sacrey, L. A. R. A., Alaverdashvili, M., Whishaw, I. Q. Similar hand shaping in reaching-for-food (skilled reaching) in rats and humans provides evidence of homology in release, collection, and manipulation movements. Behavioural Brain Research. 204, 153-161 (2009).

3. Whishaw, I. Q., Kolb, B. Decortication abolishes place but not cue learning in rats. Behavioural Brain Research. 11, 123-134 (1984).

4. Klein, A., Dunnett, S. B. Analysis of Skilled Forelimb Movement in Rats: The Single Pellet Reaching Test and Staircase Test. Current Protocols in Neuroscience. 58, 8.28.1-8.28.15 (2012).

5. Whishaw, I. Q., Pellis, S. M. The structure of skilled forelimb reaching in the rat: a proximally driven movement with a single distal rotatory component. Behavioural Brain Research. 41, 49-59 (1990).

6. Zeiler, S. R., et al. Medial premotor cortex shows a reduction in inhibitory markers and mediates recovery in a mouse model of focal stroke. Stroke. 44, 483-489 (2013).

7. Fenrich, K. K., et al. Improved single pellet grasping using automated ad libitum full-time training robot. Behavioural Brain Research. 281, 137-148 (2015).

8. Azim, E., Jiang, J., Alstermark, B., Jessell, T. M. Skilled reaching relies on a V2a propriospinal internal copy circuit. Nature. (2014).

9. Guo, J. Z. Z., et al. Cortex commands the performance of skilled movement. Elife. 4, e10774 (2015).

10. Nica, I., Deprez, M., Nuttin, B., Aerts, J. M. Automated Assessment of Endpoint and Kinematic Features of Skilled Reaching in Rats. Frontiers in Behavioral Neuroscience. 11, 255 (2017).

11. Wong, C. C., Ramanathan, D. S., Gulati, T., Won, S. J., Ganguly, K. An automated behavioral box to assess forelimb function in rats. Journal of Neuroscience Methods. 246, 30-37 (2015).

12. Torres-Espín, A., Forero, J., Schmidt, E. K. A., Fouad, K., Fenrich, K. K. A motorized pellet dispenser to deliver high intensity training of the single pellet reaching and grasping task in rats. Behavioural Brain Research. 336, 67-76 (2018).

13. Mathis, A., et al. DeepLabCut: markerless pose estimation of user-defined body parts with deep learning. Nature Neuroscience. 21, 1281-1289 (2018).

14. Ellens, D. J., et al. An automated rat single pellet reaching system with high-speed video capture. Journal of Neuroscience Methods. 271, 119-127 (2016).