Somatosensory Event-related Potentials from Orofacial Skin Stretch Stimulation

Instructor Prep

concepts

Student Protocol

The current experimental protocol follows the guidelines of ethical conduct according to the Yale University Human Investigation Committee.

1. Electroencephalography (EEG) Preparation

- Measure head size to determine the appropriate EEG cap.

- Identify the location of the vertex by finding the midpoint between the nasion and the inion with a measuring tape.

- Place the EEG cap on the head using the predetermined vertex as Cz. After placing the cap, verify the Cz position again with the measuring tape, as when locating the vertex. Note that the EEG cap is equipped with electrode holders, and the placement of the 64 electrodes (or holders) follows a modified 10-20 system with a pre-specified coordinate system based on Cz11.
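Locating the vertex is a simple proportion of the nasion-inion distance. The minimal Python sketch below assumes the standard international 10-20 midline percentages; because the cap used here follows a modified 10-20 layout, only the Cz (vertex, 50%) position is taken from this protocol, and the other sites and the function name are illustrative.

```python
# Standard 10-20 midline sites, as fractions of the nasion-inion distance
# measured from the nasion. The cap in this protocol uses a modified
# 10-20 layout, so only Cz (the vertex, at 50%) is taken from the text.
MIDLINE_FRACTIONS = {"Fpz": 0.10, "Fz": 0.30, "Cz": 0.50, "Pz": 0.70, "Oz": 0.90}


def midline_positions(nasion_inion_cm):
    """Return the distance (cm) from the nasion to each midline site."""
    if nasion_inion_cm <= 0:
        raise ValueError("nasion-inion distance must be positive")
    return {site: round(frac * nasion_inion_cm, 2)
            for site, frac in MIDLINE_FRACTIONS.items()}


if __name__ == "__main__":
    # A 36 cm nasion-inion distance places Cz (the vertex) 18 cm from the nasion.
    print(midline_positions(36.0))
```

After the cap is on, the measured nasion-to-Cz distance should match half of the nasion-inion distance.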

Note: This representative application uses a 64-electrode configuration to assess changes in scalp distribution and for source analysis. Simpler applications (e.g., measuring changes in ERP amplitude and latency) may use fewer electrodes. The EEG system used here also has two additional ground electrodes, whose holders are included in the cap.

- Apply electrode gel to the electrode holders using a disposable syringe.

- Insert the EEG electrodes (including the ground electrodes) into the electrode holders, matching each electrode's label to the label of its holder on the cap.

- Clean the skin surface with alcohol pads.

Note: For the electro-oculography electrodes, which detect eye movements, clean the skin above and below the right eye (vertical eye movement) and lateral to the outer canthus of both eyes (horizontal eye movement); for somatosensory stimulation, clean the skin lateral to the oral angle.

- Fill the four electro-oculography electrodes with electrode gel and secure them with double-sided tape to the sites noted above.

- Secure all electrode cables with a Velcro strap. If required, tape the cables to the participant's body or to other locations where they will not introduce additional electrical or mechanical noise.

- Position the participant in front of the monitor and the robot for somatosensory stimulation. Secure all electrode cables again as in the previous step.

- Connect the EEG and electro-oculography electrodes (including the ground electrodes) to the appropriate connectors (matching label and connector shape) on the amplifier box of the EEG system.

- Check that the EEG signals are free of artifacts and that the offset values are in an acceptable range (below 50 µV). Noisy signals or large offsets usually indicate high impedance; correct them by adding more electrode gel and/or moving hair out from directly under the electrode.

- Insert the EEG-compatible earphones and confirm with the participant that the sound level is comfortable.
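The offset check in this section is a simple threshold test. In the hypothetical Python sketch below, offsets are assumed to be read off the acquisition display and typed into a dictionary keyed by electrode label; the 50 µV limit comes from the protocol, while the function name and the example readings are illustrative only.

```python
# Acceptance limit from the protocol (offsets must stay below 50 µV).
OFFSET_LIMIT_UV = 50.0


def electrodes_to_fix(offsets_uv, limit_uv=OFFSET_LIMIT_UV):
    """Return electrode labels whose absolute offset is at or above the limit.

    offsets_uv maps electrode label -> offset (hypothetical values typed in
    from the acquisition display). Worst electrodes come first.
    """
    bad = [label for label, value in offsets_uv.items() if abs(value) >= limit_uv]
    return sorted(bad, key=lambda label: abs(offsets_uv[label]), reverse=True)


if __name__ == "__main__":
    readings = {"Cz": 12.0, "Pz": -63.5, "T7": 88.1, "Oz": 49.9}
    # Add gel or move hair under these electrodes, then re-check.
    print(electrodes_to_fix(readings))  # ['T7', 'Pz']
```

Sorting worst-first simply lets the experimenter start with the electrode most likely to need attention.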

2. Somatosensory Stimulation

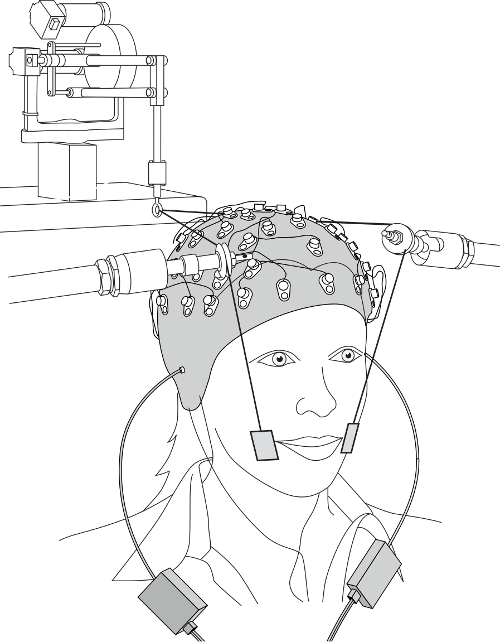

Note: The current protocol uses facial skin stretch for somatosensory stimulation. The experimental setup with the EEG system is shown in Figure 1. The details of the somatosensory stimulation device have been described in previous studies1,7,12-14. Briefly, two small plastic tabs (2 cm wide and 3 cm high) are attached to the facial skin with double-sided tape. The tabs are connected to the robotic device by string. The robot generates systematic skin stretch loads according to the experimental design. The setup protocol for ERP recording is as follows:

- Place the participant's head in the headrest to minimize head motion during stimulation. Carefully remove any electrode cables from between the participant's head and the headrest.

- Ask the participant to hold the safety switch for the robot.

- Attach the plastic tabs to the target skin location with double-sided tape. For the representative results12,13, in which the target is the skin lateral to the oral angle, center the tabs on the modiolus, a few mm lateral to the oral angle, at approximately the same height as the oral angle.

- Adjust the configuration of the string, string supports and robot so that they stay clear of the EEG electrodes and cables.

- Apply a few facial skin stretches (one-cycle sinusoid at 3 Hz with a maximum force of 4 N) to check for stimulation artifacts, which usually appear as signals of relatively large amplitude and lower frequency than the electrophysiological response. If artifacts are observed in the EEG signals, return to the previous step and readjust the string, string supports and robot.
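The stretch used throughout (one cycle of a 3 Hz sinusoid with a 4 N maximum) can be written as a force command. This is a minimal Python sketch under two assumptions not stated in the protocol: the cycle is taken as a raised cosine (force rising from 0 N to the 4 N peak and back to 0 N over about 333 msec), and commands are issued at a hypothetical 1 kHz rate. The robot's actual control software may shape the waveform differently.

```python
import math


def skin_stretch_profile(freq_hz=3.0, peak_force_n=4.0, rate_hz=1000):
    """Force command (N) for one cycle of a raised-cosine stretch.

    The raised-cosine shape (0 N -> peak -> 0 N) and the 1 kHz command
    rate are assumptions for illustration, not the robot's documented
    control scheme.
    """
    n = int(round(rate_hz / freq_hz))  # samples in one cycle (333 at 3 Hz, 1 kHz)
    return [
        peak_force_n * 0.5 * (1.0 - math.cos(2.0 * math.pi * freq_hz * i / rate_hz))
        for i in range(n + 1)
    ]


if __name__ == "__main__":
    profile = skin_stretch_profile()
    peak_index = max(range(len(profile)), key=profile.__getitem__)
    print(f"{len(profile)} samples, peak {max(profile):.3f} N at {peak_index} ms")
```

With these settings the peak force falls near the middle of the cycle, roughly 167 msec after stimulus onset.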

3. ERP Recording

- Explain the experimental task to the participant and run practice trials (one block of 10 trials or fewer) to confirm that the participant clearly understands the task.

Note: The experimental task and stimulus presentation for ERP recording are preprogrammed in the stimulus presentation software.

- In the representative test combining somatosensory and auditory stimulation12, apply somatosensory stimulation by deforming the skin lateral to the oral angle. The stretch pattern is a one-cycle sinusoid (3 Hz) with a maximum force of 4 N. For auditory stimulation, use a single synthesized speech utterance at the midpoint of a 10-step sound continuum between "head" and "had".

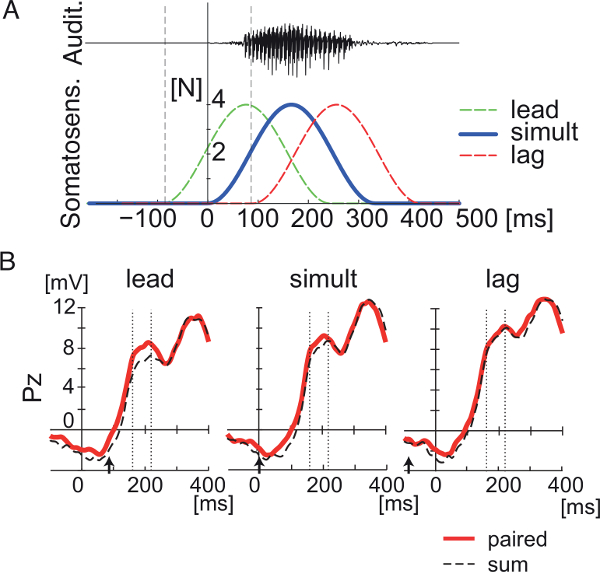

- Present the two stimuli either separately or in combination. For combined stimulation, test three onset timings: somatosensory onset leading auditory onset by 90 msec, simultaneous onsets, and somatosensory onset lagging auditory onset by 90 msec (see Figure 3A).

- Randomize the presentation of the five stimulus conditions (somatosensory alone, auditory alone, and three combined: lead, simultaneous and lag). Vary the inter-trial interval between 1,000 and 2,000 msec to avoid anticipation and habituation. The participant's task is to judge whether the presented speech sound (which is acoustically intermediate between "head" and "had") was "head" and to indicate the judgment by pressing a key on a keyboard. In the somatosensory-alone condition, which has no auditory stimulus, participants are instructed to respond "not head".

- Record participant judgments and the reaction time from stimulus onset to key press using the stimulus presentation software. Ask the participant to gaze at a fixation point on the display screen to reduce artifacts from eye movements.

- Remove the fixation point after every 10 stimuli to give a short break. (See also other examples of task and stimulus presentation12,13.)

- Start the ERP recording software at a 512 Hz sampling rate; it also records the onset time of each stimulus in the timeline of the ERP data. Note that the stimulus presentation software sends a time stamp for every stimulus, which also encodes the stimulus type. The two programs (for ERP recording and for stimulus presentation) run on two separate PCs connected through a parallel port.

- Set the somatosensory stimulation software to trigger-waiting mode, then start the stimulus presentation software to begin presenting stimuli. Note that the somatosensory stimulation software runs on a third PC, separate from the other two. Record 100 ERPs per condition.

Note: The somatosensory stimulation PC receives its trigger signal through an analog input device connected to a digital output device on the stimulus presentation PC. Each trigger produces a single somatosensory stimulus.
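The trial structure described in this section (five conditions, 100 trials each, 1,000-2,000 msec jitter, a break after every 10 stimuli) can be generated ahead of time. The Python sketch below is illustrative, not the Presentation script used in the study: it assumes a fully shuffled order and uniformly distributed integer inter-trial intervals, and the condition labels, the `Trial` record and `make_trial_list` are hypothetical names.

```python
import random
from collections import Counter
from dataclasses import dataclass
from typing import Optional

# Somatosensory onset minus auditory onset (msec): negative means the
# somatosensory stretch comes first. None marks the unisensory conditions.
# The condition labels are hypothetical.
CONDITIONS = {
    "somatosensory_alone": None,
    "auditory_alone": None,
    "lead": -90,
    "simultaneous": 0,
    "lag": 90,
}


@dataclass
class Trial:
    condition: str
    soma_minus_audio_ms: Optional[int]
    iti_ms: int         # jittered inter-trial interval
    break_after: bool   # fixation point removed for a short break


def make_trial_list(n_per_condition=100, iti_range_ms=(1000, 2000),
                    break_every=10, seed=None):
    """Fully shuffled trial order with uniform integer ITI jitter."""
    rng = random.Random(seed)
    order = [name for name in CONDITIONS for _ in range(n_per_condition)]
    rng.shuffle(order)
    return [
        Trial(name, CONDITIONS[name], rng.randint(*iti_range_ms),
              (i + 1) % break_every == 0)
        for i, name in enumerate(order)
    ]


if __name__ == "__main__":
    trials = make_trial_list(seed=1)
    print(len(trials), Counter(t.condition for t in trials))
```

Passing a fixed `seed` makes a session's order reproducible; leaving it as `None` gives a fresh order for each participant.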

Learning Objectives

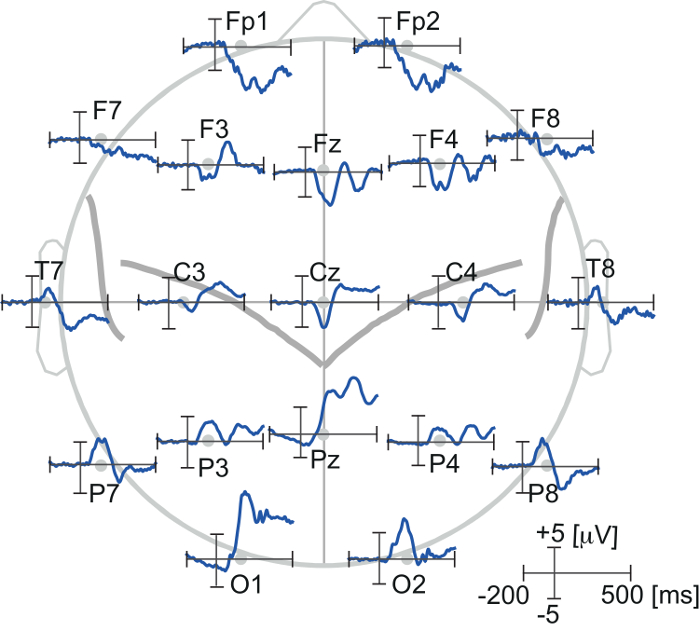

This section presents representative event-related potentials in response to somatosensory stimulation produced by facial skin deformation. The experimental setup is shown in Figure 1. Sinusoidal stimulation was applied to the facial skin lateral to the oral angle (see Figure 3A for reference). One hundred stretch trials were recorded for each of 12 participants. Trials containing blinks or eye-movement artifacts (horizontal or vertical electro-oculography signals exceeding ±150 µV) were removed offline, and more than 85% of trials remained for averaging. EEG signals were band-pass filtered at 0.5-50 Hz and re-referenced to the average across all electrodes. Figure 2 shows the average somatosensory ERP at selected representative electrodes. In frontal regions, a negative peak occurred 100-200 msec after stimulus onset, followed by a positive potential at 200-300 msec. The largest responses were observed at the midline electrodes. Unlike previous studies of somatosensory ERPs15-18, no early-latency (<100 msec) potentials were observed. This temporal pattern instead resembles the typical N1-P2 sequence following auditory stimulation19. The temporal patterns at corresponding left- and right-hemisphere electrodes were quite similar, probably because the stimulation was bilateral.
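The offline steps above (finding stimulus onsets, re-referencing to the common average, rejecting trials whose electro-oculography exceeds ±150 µV, and averaging) can be sketched with NumPy. This is a minimal sketch under stated assumptions: the data are already band-pass filtered at 0.5-50 Hz, the trigger channel holds a plain integer code that steps up from zero at each onset (mask off any unrelated status bits first), the trigger codes and the -100 to 500 msec epoch are hypothetical, and rejection uses the absolute EOG amplitude. It is not the analysis software used in the original study.

```python
import numpy as np

FS_HZ = 512  # sampling rate used for ERP recording in this protocol

# Hypothetical trigger codes; match them to what the stimulus script sends.
CODE_TO_CONDITION = {1: "somatosensory_alone", 2: "auditory_alone",
                     3: "lead", 4: "simultaneous", 5: "lag"}


def find_events(trigger):
    """Return (onset_sample, condition) for every 0 -> nonzero step."""
    trig = np.asarray(trigger, dtype=int)
    prev = np.concatenate(([0], trig[:-1]))
    onsets = np.flatnonzero((prev == 0) & (trig != 0))
    return [(int(s), CODE_TO_CONDITION.get(int(trig[s]), "unknown")) for s in onsets]


def average_erp(eeg, eog, onsets, fs_hz=FS_HZ, tmin_ms=-100, tmax_ms=500,
                eog_limit_uv=150.0):
    """Average-reference, epoch, reject EOG artifacts and average.

    eeg: (n_channels, n_samples) in µV, assumed already filtered 0.5-50 Hz.
    eog: (n_eog_channels, n_samples) in µV.
    Returns (erp, n_kept); erp has shape (n_channels, n_epoch_samples).
    """
    eeg = np.asarray(eeg, dtype=float)
    eog = np.asarray(eog, dtype=float)
    # Re-reference: subtract the mean across all EEG electrodes at each sample.
    eeg = eeg - eeg.mean(axis=0, keepdims=True)
    start = int(round(tmin_ms * fs_hz / 1000))
    stop = int(round(tmax_ms * fs_hz / 1000))
    epochs = []
    for s in onsets:
        a, b = s + start, s + stop
        if a < 0 or b > eeg.shape[1]:
            continue  # epoch would extend beyond the recording
        if np.max(np.abs(eog[:, a:b])) > eog_limit_uv:
            continue  # blink or eye movement beyond the ±150 µV limit
        epochs.append(eeg[:, a:b])
    if not epochs:
        raise ValueError("no artifact-free epochs")
    return np.mean(epochs, axis=0), len(epochs)
```

For one condition: `onsets = [s for s, c in find_events(trig) if c == "lead"]`, then `erp, n_kept = average_erp(eeg, eog, onsets)`; comparing `n_kept` with the number of onsets gives the retained-trial percentage.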

Figure 1. Experimental setup.

Figure 2. Event-related potentials in response to somatosensory stimulation produced by facial skin stretch. The ERPs were obtained from representative electrodes.

The first result shows how the timing of stimulation affects multisensory interaction during speech processing12. In this study, neural response interactions were identified by comparing ERPs to somatosensory-auditory stimulus pairs with the algebraic sum of the ERPs to the unisensory stimuli presented separately. The timing of the auditory and somatosensory stimuli is shown in Figure 3A. Figure 3B shows the event-related potentials in response to somatosensory-auditory stimulus pairs (red line). The black line represents the sum of the individual unisensory auditory and somatosensory ERPs. The three panels correspond to the time lag between the two stimulus onsets: somatosensory onset leading by 90 msec (left), simultaneous (center) and lagging by 90 msec (right). When somatosensory stimulation was presented 90 msec before auditory onset, the paired and summed responses differed (left panel of Figure 3B). This interaction effect decreases gradually as the somatosensory onset moves later relative to the auditory onset (see the interval between the two dotted lines in Figure 3B). The results demonstrate that the somatosensory-auditory interaction is dynamically modified by the timing of stimulation.

Figure 3. Event-related potentials reflect a somatosensory-auditory interaction in the context of speech perception. This Figure has been modified from Ito, et al.12 (A) Temporal pattern of somatosensory and auditory stimulation. (B) Event-related potentials for combined somatosensory and auditory stimulation in three timing conditions (lead, simultaneous, and lag) at electrode Pz. The red line represents the recorded responses to paired stimulation. The dashed line represents the sum of the somatosensory and auditory ERPs. The vertical dotted lines define an interval 160-220 msec after somatosensory onset in which differences between "pair" and "sum" responses are assessed. Arrows represent auditory onset.
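The "pair versus sum" comparison in Figure 3 is an additive-model test: the response to the paired stimulus is compared with the somatosensory ERP plus the auditory ERP, the latter shifted so that it lines up with the auditory onset in the paired trial. The Python sketch below is one way to compute the mean pair-minus-sum difference in the 160-220 msec window for a single electrode. It assumes all ERPs share the same sampling rate and epoch start (-100 msec, hypothetical), and the function names are illustrative; it is not the statistical procedure of the original study.

```python
import numpy as np


def shift_samples(x, shift):
    """Delay x by `shift` samples (advance if negative); vacated samples become NaN."""
    x = np.asarray(x, dtype=float)
    out = np.full(x.shape, np.nan)
    if shift >= 0:
        out[shift:] = x[:x.size - shift]
    else:
        out[:shift] = x[-shift:]
    return out


def pair_minus_sum(pair, soma, audio, audio_onset_ms, fs_hz=512, tmin_ms=-100,
                   window_ms=(160, 220)):
    """Mean of pair - (soma + audio) in a window after somatosensory onset.

    pair and soma are epoched around somatosensory onset, audio around
    auditory onset, all starting at tmin_ms. audio_onset_ms is the auditory
    onset relative to somatosensory onset: +90 (lead), 0 (simultaneous),
    -90 (lag).
    """
    pair = np.asarray(pair, dtype=float)
    soma = np.asarray(soma, dtype=float)
    shift = int(round(audio_onset_ms * fs_hz / 1000))
    summed = soma + shift_samples(audio, shift)
    times = tmin_ms + 1000.0 * np.arange(pair.size) / fs_hz
    in_window = (times >= window_ms[0]) & (times <= window_ms[1])
    diff = pair[in_window] - summed[in_window]
    if np.isnan(diff).any():
        raise ValueError("shifted auditory ERP does not cover the analysis window")
    return float(diff.mean())
```

A value near zero means the paired response is explained by the sum of the unisensory responses; a consistent nonzero value across participants indicates a somatosensory-auditory interaction.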

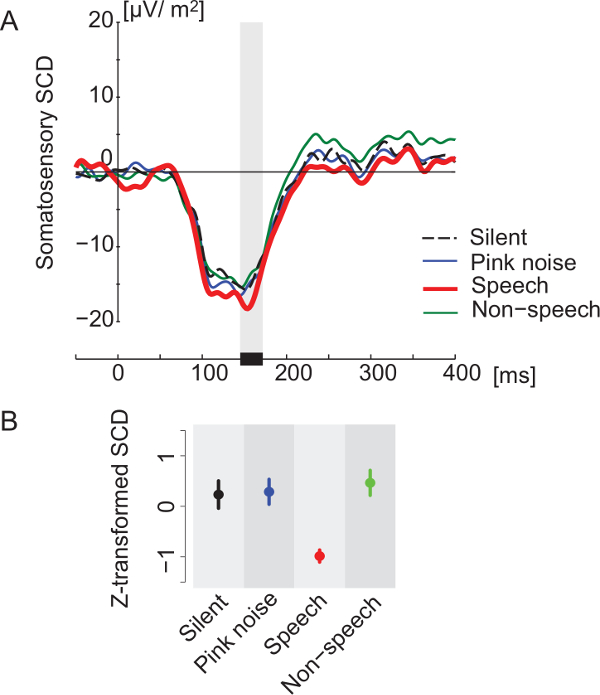

The next result demonstrates that the amplitude of the somatosensory ERP increases while participants listen to speech13. The pattern of somatosensory stimulation is the same as described above. Figure 4 shows somatosensory ERPs, converted offline into scalp current density20, at electrodes over the left sensorimotor area (FC3, FC5, C3). Somatosensory event-related potentials were recorded while participants listened to continuous background sounds. The study tested four background conditions: speech, non-speech sounds, pink noise and silence13. The amplitude of the somatosensory event-related potentials while listening to speech sounds was significantly greater than in the other three conditions, which did not differ significantly from one another. Figure 4B shows normalized peak amplitudes in the different conditions. The result indicates that listening to speech sounds alters the somatosensory processing associated with facial skin deformation.

Figure 4. Enhancement of somatosensory event-related potentials due to speech sounds. The ERPs were recorded under four background sound conditions (Silent, Pink noise, Speech and Non-speech). This Figure has been modified from Ito, et al.13 (A) Temporal pattern of somatosensory event-related potentials in the area above left motor and premotor cortex. Each color corresponds to a different background sound condition. The ERPs were converted to scalp current density20. (B) Differences in z-score magnitudes associated with the first peak of the somatosensory ERPs. Error bars are standard errors across participants. Each color corresponds to different background sound conditions, as in Panel A.
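Figure 4B reports first-peak amplitudes as z-scores with standard errors across participants. The protocol does not spell out the normalization, so the Python sketch below is only one plausible reading: each participant's first-peak amplitudes are z-scored across the four background conditions, and the mean and standard error across participants are then computed per condition. Peak picking and scalp current density conversion are assumed to have been done already, and the column order is hypothetical.

```python
import numpy as np

CONDITIONS = ("silent", "pink_noise", "speech", "non_speech")  # hypothetical column order


def normalized_peaks(peaks):
    """Z-score each participant's peaks across conditions, then summarize.

    peaks: (n_participants, n_conditions) first-peak amplitudes, one row
    per participant. Returns (mean_z, sem_z), one value per condition.
    """
    peaks = np.asarray(peaks, dtype=float)
    mu = peaks.mean(axis=1, keepdims=True)
    sd = peaks.std(axis=1, ddof=1, keepdims=True)
    z = (peaks - mu) / sd
    n = z.shape[0]
    return z.mean(axis=0), z.std(axis=0, ddof=1) / np.sqrt(n)
```

Z-scoring within each participant removes between-participant differences in overall ERP size, so the comparison reflects only the relative effect of the background sound.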

List of Materials

| Name of Material/Equipment | Company | Model / Catalog Number | Comments |
|---|---|---|---|
| EEG recording system | BioSemi | ActiveTwo | |
| Robotic device for skin stretch | Geomagic | Phantom Premium 1.0 | |
| EEG-compatible earphones | Etymotic Research | ER3A | |
| Software for visual and auditory stimulation | Neurobehavioral Systems | Presentation | |
| Electrode gel | Parker Laboratories, Inc. | Signa Gel | |
| Double-sided tape | 3M | 1522 | |
| Disposable syringe | Monoject | 412 Curved Tip | |
| Analog input device | National Instruments | PCI-6036E | |
| Digital output device | Measurement Computing | USB-1208FS | |

Lab Prep

Cortical processing associated with orofacial somatosensory function in speech has received limited experimental attention due to the difficulty of providing precise and controlled stimulation. This article introduces a technique for recording somatosensory event-related potentials (ERP) that uses a novel mechanical stimulation method involving skin deformation using a robotic device. Controlled deformation of the facial skin is used to modulate kinesthetic inputs through excitation of cutaneous mechanoreceptors. By combining somatosensory stimulation with electroencephalographic recording, somatosensory evoked responses can be successfully measured at the level of the cortex. Somatosensory stimulation can be combined with the stimulation of other sensory modalities to assess multisensory interactions. For speech, orofacial stimulation is combined with speech sound stimulation to assess the contribution of multisensory processing, including the effects of timing differences. The ability to precisely control orofacial somatosensory stimulation during speech perception and speech production with ERP recording is an important tool that provides new insight into the neural organization and neural representations for speech.
